Abstract

The resources required to serve cloud computing applications are dynamic and fluctuate over time with the volume of incoming requests. Proactive autoscaling techniques attempt to predict future resource demand and allocate resources before the requests arrive. Accurate prediction methods therefore help optimize resource allocation and avoid service-level agreement (SLA) violations. Most existing methods for predicting resource usage in cloud computing rely on univariate time series prediction models. These models consider only a single resource usage metric, base their predictions solely on historical data for the target metric, and predict only a single step ahead. This paper proposes a hybrid method for multivariate time series workload prediction of host machines in cloud data centers that predicts the workload several steps ahead. First, a statistical analysis is used to construct the training set. Then, a convolutional neural network (CNN) is employed to extract the hidden spatial features among all correlated variables. Finally, the spatial features extracted by the CNN are fed into a gated recurrent unit (GRU) network enhanced with an attention mechanism to extract the temporal correlation features. Two experiments on Google cluster data were conducted to evaluate the proposed method. Experimental results show that the method improves prediction accuracy by 2% to 28% compared with baseline methods and previous research.
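
As an illustration of the first step only, the sketch below shows one way a multivariate, multi-step training set could be constructed. The abstract does not say which statistical analysis is used, so Pearson correlation with a fixed threshold stands in for it here. The function names, the 0.5 threshold, the window sizes, and the toy data are all assumptions made for this example, not the authors' implementation.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    # A constant series has zero variance; treat it as uncorrelated.
    return cov / (sx * sy) if sx and sy else 0.0


def select_features(series, target, threshold=0.5):
    """Keep the target and every metric whose |correlation| with it
    reaches the threshold (an assumed stand-in for the paper's analysis)."""
    return [
        name for name in series
        if name == target
        or abs(pearson(series[name], series[target])) >= threshold
    ]


def make_windows(series, features, target, lookback, horizon):
    """Build supervised samples for multi-step prediction.

    Each input is `lookback` consecutive time steps of the selected
    features; each label is the next `horizon` values of the target.
    """
    n = len(series[target])
    inputs, labels = [], []
    for start in range(n - lookback - horizon + 1):
        end = start + lookback
        inputs.append([[series[f][t] for f in features]
                       for t in range(start, end)])
        labels.append(series[target][end:end + horizon])
    return inputs, labels


# Toy host metrics (hypothetical values, not taken from the Google trace).
series = {
    "cpu":  [0.10, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70, 0.80],
    "mem":  [0.15, 0.22, 0.35, 0.41, 0.52, 0.58, 0.73, 0.79],
    "disk": [0.50, 0.10, 0.50, 0.10, 0.50, 0.10, 0.50, 0.10],
}

features = select_features(series, target="cpu", threshold=0.5)
X, y = make_windows(series, features, target="cpu", lookback=3, horizon=2)
```

In this toy run, `disk` is only weakly correlated with `cpu` and is dropped, so each input window has shape 3 time steps by 2 features, and each label holds the next 2 CPU values. Windows of this shape are the kind of input a CNN followed by a recurrent network would consume.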

Data Availability

The dataset analyzed during experiments in the current study is available at https://github.com/google/cluster-data.

References

Khallouli W, Huang J (2021) Cluster resource scheduling in cloud computing: literature review and research challenges. J Supercomput. https://doi.org/10.1007/s11227-021-04138-z

Umer A, Nazir B, Ahmad Z (2021) Adaptive market-oriented combinatorial double auction resource allocation model in cloud computing. J Supercomput 78(1):1244–1286. https://doi.org/10.1007/s11227-021-03918-x

Langmead B, Nellore A (2018) Cloud computing for genomic data analysis and collaboration. Nat Rev Genet 19(4):208–219. https://doi.org/10.1038/nrg.2017.113

Bello SA, Oyedele LO, Akinade OO, Bilal M, Davila Delgado JM, Akanbi LA, Ajayi AO, Owolabi HA (2021) Cloud computing in construction industry: Use cases, benefits and challenges. Autom Constr 122:103441. https://doi.org/10.1016/j.autcon.2020.103441

Bittencourt LF, Goldman A, Madeira ER, da Fonseca NL, Sakellariou R (2018) Scheduling in distributed systems: a cloud computing perspective. Computer Science Review 30:31–54. https://doi.org/10.1016/j.cosrev.2018.08.002

Bhardwaj A, Krishna CR (2021) Virtualization in cloud computing: moving from hypervisor to containerization—a survey. Arab J Sci Eng 46(9):8585–8601. https://doi.org/10.1007/s13369-021-05553-3

Helali L, Omri MN (2021) A survey of data center consolidation in cloud computing systems. Comput Sci Rev 39:100366. https://doi.org/10.1016/j.cosrev.2021.100366

Zhu Y, Zhang W, Chen Y, Gao H (2019) A novel approach to workload prediction using attention-based LSTM encoder-decoder network in cloud environment. EURASIP J Wireless Commun Netw. https://doi.org/10.1186/s13638-019-1605-z

Cheng H, Liu B, Lin W, Ma Z, Li K, Hsu CH (2021) A survey of energy-saving technologies in cloud data centers. J Supercomput 77(11):13385–13420. https://doi.org/10.1007/s11227-021-03805-5

Abdel-Basset M, Mohamed R, Elhoseny M, Bashir AK, Jolfaei A, Kumar N (2021) Energy-aware marine predators algorithm for task scheduling in IoT-based fog computing applications. IEEE Trans Industr Inf 17(7):5068–5076. https://doi.org/10.1109/tii.2020.3001067

** C, Bai X, Yang C, Mao W, Xu X (2020) A review of power consumption models of servers in data centers. Appl Energy 265:114806. https://doi.org/10.1016/j.apenergy.2020.114806

Hsieh SY, Liu CS, Buyya R, Zomaya AY (2020) Utilization-prediction-aware virtual machine consolidation approach for energy-efficient cloud data centers. J Parallel and Distribut Comput 139:99–109. https://doi.org/10.1016/j.jpdc.2019.12.014

Vakilinia S (2018) Energy efficient temporal load aware resource allocation in cloud computing datacenters. J Cloud Comput. https://doi.org/10.1186/s13677-017-0103-2

Garí Y, Monge DA, Pacini E, Mateos C, García Garino C (2021) Reinforcement learning-based application autoscaling in the cloud: a survey. Eng Appl Artif Intell 102:104288. https://doi.org/10.1016/j.engappai.2021.104288

Radhika E, Sudha Sadasivam G (2021) A review on prediction based autoscaling techniques for heterogeneous applications in cloud environment. Mater Today: Proceed 45:2793–2800. https://doi.org/10.1016/j.matpr.2020.11.789

Singh P, Kaur A, Gupta P, Gill SS, Jyoti K (2020) RHAS: robust hybrid auto-scaling for web applications in cloud computing. Clust Comput 24(2):717–737. https://doi.org/10.1007/s10586-020-03148-5

Ghobaei-Arani M, Rezaei M, Souri A (2021) An auto-scaling mechanism for cloud-based multimedia storage systems: a fuzzy-based elastic controller. Multimed Tools and Appl. https://doi.org/10.1007/s11042-021-11021-9

Golshani E, Ashtiani M (2021) Proactive auto-scaling for cloud environments using temporal convolutional neural networks. J Parallel Distribut Comput 154:119–141. https://doi.org/10.1016/j.jpdc.2021.04.006

Saxena D, Singh AK (2021) A proactive autoscaling and energy-efficient VM allocation framework using online multi-resource neural network for cloud data center. Neurocomputing 426:248–264. https://doi.org/10.1016/j.neucom.2020.08.076

Dang-Quang NM, Yoo M (2021) Deep learning-based autoscaling using bidirectional long short-term memory for kubernetes. Appl Sci 11(9):3835. https://doi.org/10.3390/app11093835

Raouf AEA, Abo-Alian A, Badr NL (2021) A predictive multi-tenant database migration and replication in the cloud environment. IEEE Access 9:152015–152031. https://doi.org/10.1109/access.2021.3126582

Rampérez V, Soriano J, Lizcano D, Lara JA (2021) FLAS: A combination of proactive and reactive auto-scaling architecture for distributed services. Futur Gener Comput Syst 118:56–72. https://doi.org/10.1016/j.future.2020.12.025

Amiri M, Mohammad-Khanli L, Mirandola R (2018) A sequential pattern mining model for application workload prediction in cloud environment. J Netw Comput Appl 105:21–62. https://doi.org/10.1016/j.jnca.2017.12.015

Ruan L, Bai Y, Li S, He S, **ao L (2021) Workload time series prediction in storage systems: a deep learning based approach. Clust Comput. https://doi.org/10.1007/s10586-020-03214-y

Bi J, Li S, Yuan H, Zhou M (2021) Integrated deep learning method for workload and resource prediction in cloud systems. Neurocomputing 424:35–48. https://doi.org/10.1016/j.neucom.2020.11.011

Singh P, Gupta P, Jyoti K (2018) TASM: technocrat ARIMA and SVR model for workload prediction of web applications in cloud. Clust Comput 22(2):619–633. https://doi.org/10.1007/s10586-018-2868-6

Aslanpour MS, Toosi AN, Taheri J, Gaire R (2021) AutoScaleSim: A simulation toolkit for auto-scaling Web applications in clouds. Simul Model Pract Theory 108:102245. https://doi.org/10.1016/j.simpat.2020.102245

Ruiz AP, Flynn M, Large J, Middlehurst M, Bagnall A (2020) The great multivariate time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 35(2):401–449. https://doi.org/10.1007/s10618-020-00727-3

Shih SY, Sun FK, Lee HY (2019) Temporal pattern attention for multivariate time series forecasting. Mach Learn 108(8–9):1421–1441. https://doi.org/10.1007/s10994-019-05815-0

Patel YS, Jaiswal R, Misra R (2021) Deep learning-based multivariate resource utilization prediction for hotspots and coldspots mitigation in green cloud data centers. J Supercomput. https://doi.org/10.1007/s11227-021-04107-6

Gupta S, Dileep AD, Gonsalves TA (2018) A joint feature selection framework for multivariate resource usage prediction in cloud servers using stability and prediction performance. J Supercomput 74(11):6033–6068. https://doi.org/10.1007/s11227-018-2510-7

Huang H, Cressie N (2016) Spatio-temporal prediction of snow water equivalent using the Kalman filter. Comput Stat Data Anal 22(2):159–175. https://doi.org/10.1016/0167-9473(95)00047-X

Ho SL, **e M, Goh TN (2002) A comparative study of neural network and Box-Jenkins ARIMA modeling in time series prediction. Comput Ind Eng 42(2–4):371–375. https://doi.org/10.1016/S0360-8352(02)00036-0

Calheiros RN, Masoumi E, Ranjan R, Buyya R (2015) Workload prediction Using ARIMA model and its impact on cloud applications’ QoS. IEEE Trans Cloud Comput 3(4):449–458. https://doi.org/10.1109/TCC.2014.2350475

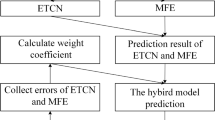

Chen J, Wang Y (2019) A hybrid method for short-term host utilization prediction in cloud computing. J Electric Comput Eng. https://doi.org/10.1155/2019/2782349

Yao F, Yao Y, **ng L, Chen H, Lin Z, Li T (2019) An intelligent scheduling algorithm for complex manufacturing system simulation with frequent synchronizations in a cloud environment. Memetic Comput 11(4):357–370. https://doi.org/10.1007/s12293-019-00284-3

Nehra P, Nagaraju A (2021) Host utilization prediction using hybrid kernel based support vector regression in cloud data centers. J King Saud Univ- Comput Inform Sci. https://doi.org/10.1016/j.jksuci.2021.04.011

Sharifian S, Barati M (2019) An ensemble multi-scale wavelet-GARCH hybrid SVR algorithm for mobile cloud computing workload prediction. Int J Mach Learn Cybern 10(11):3285–3300. https://doi.org/10.1007/s13042-019-01017-1

Zhong W, Zhuang Y, Sun JJ, Gu J (2018) A load prediction model for cloud computing using PSO-based weighted wavelet support vector machine. Appl Intell 48(11):4072–4083. https://doi.org/10.1007/s10489-018-1194-2

Barati M, Sharifian S (2015) A hybrid heuristic-based tuned support vector regression model for cloud load prediction. J Supercomput 71(11):4235–4259. https://doi.org/10.1007/s11227-015-1520-y

Jeddi S, Sharifian S (2019) A water cycle optimized wavelet neural network algorithm for demand prediction in cloud computing. Clust Comput 22(4):1397–1412. https://doi.org/10.1007/s10586-019-02916-2

Shishira SR, Kandasamy A (2020) BeeM-NN: an efficient workload optimization using Bee mutation neural network in federated cloud environment. J Ambient Intell Humaniz Comput 12(2):3151–3167. https://doi.org/10.1007/s12652-020-02474-1

Pushpalatha R, Ramesh B (2021) Amalgamation of neural network and genetic algorithm for efficient workload prediction in data center. Adv VLSI, Signal Process, Power Electron, IoT, Commun Embedded Syst. https://doi.org/10.1007/978-981-16-0443-0_6

Ouhame S, Hadi Y, Akhiat F, El Hassan Elkafssaoui EH (2019) Workload Multivariate Prediction By Vector Autoregressive and The Stacked Lstm Models, Int J Adv Comput Sci Cloud Comput (IJACSCC), 7(1), DOIONLINE NO: IJACSCC-IRAJ-DOIONLINE-16659.

Nguyen HM, Kalra G, Kim D (2019) Host load prediction in cloud computing using long short-term memory encoder–decoder. J Supercomput 75(11):7592–7605. https://doi.org/10.1007/s11227-019-02967-7

Singh AK, Saxena D, Kumar J, Gupta V (2021) A Quantum approach towards the adaptive prediction of cloud workloads. IEEE Trans Parallel Distrib Syst 32(12):2893–2905. https://doi.org/10.1109/tpds.2021.3079341

Ouhame S, Hadi Y, Ullah A (2021) An efficient forecasting approach for resource utilization in cloud data center using CNN-LSTM model. Neural Comput Appl 33(16):10043–10055. https://doi.org/10.1007/s00521-021-05770-9

Dang-Quang N-M, Yoo M (2021) Multivariate deep learning model for workload prediction in cloud computing. Int Conf Inform Commun Technol Convergence (ICTC) 2021:858–862. https://doi.org/10.1109/ICTC52510.2021.9620931

Xu M, Song C, Wu H, Gill S, Ye K, Xu C (2022) esDNN: deep neural network based multivariate workload prediction in cloud computing environments. ACM Trans Internet Technol 22:1–24

Peng H, Wen WS, Tseng ML, Li LL (2021) A cloud load forecasting model with nonlinear changes using whale optimization algorithm hybrid strategy. Soft Comput 25(15):10205–10220. https://doi.org/10.1007/s00500-021-05961-5

Shahbaz M, Shahzad SJH, Mahalik MK, Sadorsky P (2017) How strong is the causal relationship between globalization and energy consumption in developed economies? A country-specific time-series and panel analysis. Appl Econ 50(13):1479–1494. https://doi.org/10.1080/00036846.2017.1366640

Hlavackovaschindler K, Palus M, Vejmelka M, Bhattacharya J (2007) Causality detection based on information-theoretic approaches in time series analysis. Phys Rep 441(1):1–46. https://doi.org/10.1016/j.physrep.2006.12.004

Stern DI (2011) From correlation to granger causality. SSRN Electron J. https://doi.org/10.2139/ssrn.1959624

Liu CL, Hsaio WH, Tu YC (2019) Time series classification with multivariate convolutional neural network. IEEE Trans Industr Electron 66(6):4788–4797. https://doi.org/10.1109/tie.2018.2864702

Dhillon A, Verma GK (2019) Convolutional neural network: a review of models, methodologies and applications to object detection. Prog Artificial Intell 9(2):85–112. https://doi.org/10.1007/s13748-019-00203-0

Nair V., & Hinton G.E. (2010). Rectified linear units improve restricted Boltzmann machines, in: Proceedings of the 27th International Conference on Machine Learning (ICML-10), Israel, Haifa, 2010, 807–814

Sherstinsky A (2020) Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D 404:132306. https://doi.org/10.1016/j.physd.2019.132306

Zia T, Zahid U (2018) Long short-term memory recurrent neural network architectures for Urdu acoustic modeling. Int J Speech Technol 22(1):21–30. https://doi.org/10.1007/s10772-018-09573-7

Lien MD, Sadeghi-Niaraki A, Huy DMinMoon HKH (2018) Deep learning approach for short-term stock trends prediction based on two-stream gated recurrent unit network. IEEE Access 6:55392–55404. https://doi.org/10.1109/access.2018.2868970

Ran X, Shan Z, Fang Y, Lin C (2019) An LSTM-based method with attention mechanism for travel time prediction. Sensors 19(4):861. https://doi.org/10.3390/s19040861

Nair V, Hinton GE (2010) Rectified linear units improve restricted Boltzmann machines, In: Proceedings of the 27th International Conference on Machine Learning (ICML-10), Israel, Haifa, 2010, pp. 807–814

Author information

Contributions

JD contributed to methodology, software, simulation, and writing—original draft. FK contributed to conceptualization, validation, methodology, and writing—review and editing. MRM contributed to statistical analysis, validation, and methodology. MS contributed to conceptualization, software, and writing—review and editing.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Dogani, J., Khunjush, F., Mahmoudi, M.R. et al. Multivariate workload and resource prediction in cloud computing using CNN and GRU by attention mechanism. J Supercomput 79, 3437–3470 (2023). https://doi.org/10.1007/s11227-022-04782-z

DOI: https://doi.org/10.1007/s11227-022-04782-z