Abstract

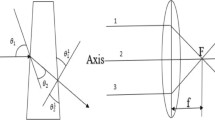

This paper proposes a non-triangulation method for estimating the depth of a person standing at a slant relative to the camera lens center. The influence of three parameters (lens aperture radius, focal length, and object size) on the person's depth along the axial line is investigated by recording the in-focus portions of the person at various distances along that line. This investigation serves as the basis for estimating the person's axial depth for different combinations of these parameters within a machine learning framework. To estimate the slanting depth, a geometrical relation is then derived from the predicted axial depth and the deviation of the person to the left or right of the axial line. The model is validated against ground-truth depth data collected in the 3 to 6 m range with a Nikon D5300. The camera-to-object distance (depth) predicted along the axial line is 99.06% correlated with the actual camera-to-object distance at a 95% confidence level, with an RMSE of 17.36.
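The abstract does not state the geometrical relation itself, so the following is only a minimal sketch under a stated assumption: that the lateral deviation is measured perpendicular to the axial line, which makes the slanting depth the hypotenuse of a right triangle. The function names `slanting_depth`, `rmse`, and `pearson_r` are hypothetical, and the two metric helpers use the standard textbook definitions rather than the authors' evaluation code.

```python
import math


def slanting_depth(axial_depth, lateral_deviation):
    """Slanting depth under the ASSUMED right-triangle geometry:
    the deviation is taken perpendicular to the axial line."""
    return math.hypot(axial_depth, lateral_deviation)


def rmse(actual, predicted):
    """Root-mean-square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def pearson_r(actual, predicted):
    """Pearson correlation coefficient (standard definition)."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mean_a = sum(actual) / len(actual)
    mean_p = sum(predicted) / len(predicted)
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)


if __name__ == "__main__":
    # Illustrative values only; these are not measurements from the paper.
    print(slanting_depth(3.0, 4.0))
    print(rmse([3.0, 4.0, 5.0], [3.1, 3.9, 5.2]))
    print(pearson_r([3.0, 4.0, 5.0], [3.1, 3.9, 5.2]))
```

If the deviation were instead measured as an angle from the axial line, the relation would change accordingly; the right-triangle form is used here only because it is the simplest reading of "deviation to the left or right of the axial line".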

Acknowledgements

This work is supported under the Visvesvaraya Ph.D. Scheme for Electronics & IT [Order No: PhD-MLA/4(16)/2014], a division of the Ministry of Electronics & IT, Govt. of India. The authors gratefully acknowledge the association of Sica Southern Indian Cinematographers, Chennai, for creating the dataset used to validate the proposed theory. The authors also thank the Student Society, Department of Computer Applications, for its support in creating the dataset, and the faculty in charge of the Sensors & Security Lab for providing experimental validation facilities.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Sriharsha, K.V., Alphonse, P. A computational geometric learning approach for person axial and slanting depth prediction using single RGB camera. Multimed Tools Appl 83, 14133–14149 (2024). https://doi.org/10.1007/s11042-023-15970-1

DOI: https://doi.org/10.1007/s11042-023-15970-1