Abstract

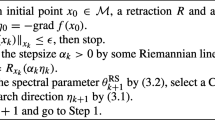

In this paper, we present conjugate gradient methods for multiobjective optimization on Riemannian manifolds. The concepts of optimality and the Wolfe conditions, as well as Zoutendijk’s theorem, are redefined in this setting. We show that, under some standard assumptions, a sequence generated by these algorithms converges to a critical Pareto point whenever the step sizes satisfy the multiobjective Wolfe conditions. In particular, we propose the Fletcher–Reeves, Dai–Yuan, Polak–Ribière–Polyak, and Hestenes–Stiefel parameters, analyze the convergence behavior of the first two methods in further detail, and test their performance against the steepest descent method.
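The ingredients named above (a common steepest descent direction, a conjugate gradient parameter, and a multiobjective Wolfe line search) can be illustrated with a small sketch. It rests on stated assumptions and is not the authors' algorithm. The manifold is flat Euclidean space R^n, so the retraction is simply x + t·d and vector transport is the identity. The direction v(x) uses a closed form that is valid only for two objectives. The quantity f(x, d) = max_i ⟨∇F_i(x), d⟩, the Fletcher–Reeves and Dai–Yuan parameters, and the Wolfe conditions follow our reading of the vector-optimization formulas of Lucambio Pérez and Prudente (listed in the references), which reduce to the classical ones for a single objective. The function names (`steepest_direction`, `wolfe_step`, `mo_cg`), the bisection line search, and the restart safeguard are hypothetical choices made only for this illustration.

```python
import math
from typing import List, Sequence

Vec = List[float]


def dot(u: Sequence[float], w: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, w))


def step(x: Sequence[float], t: float, d: Sequence[float]) -> Vec:
    # x + t*d. On a general manifold this would be a retraction R_x(t*d).
    return [xi + t * di for xi, di in zip(x, d)]


def f_max(grads: Sequence[Sequence[float]], d: Sequence[float]) -> float:
    # f(x, d) = max_i <grad F_i(x), d>; f(x, d) < 0 means d decreases every F_i to first order.
    return max(dot(g, d) for g in grads)


def steepest_direction(g1: Sequence[float], g2: Sequence[float]) -> Vec:
    # v(x) = -(lam*g1 + (1 - lam)*g2), with lam in [0, 1] minimizing the norm of the
    # convex combination. This closed form is valid for exactly two objectives only.
    diff = [a - b for a, b in zip(g1, g2)]
    denom = dot(diff, diff)
    lam = 0.5 if denom == 0.0 else min(1.0, max(0.0, -dot(g2, diff) / denom))
    return [-(lam * a + (1.0 - lam) * b) for a, b in zip(g1, g2)]


def wolfe_step(objs, grads, x, d, c1=1e-4, c2=0.1, max_iter=60) -> float:
    # Bisection/expansion search for a step t satisfying
    #   F_j(x + t d) <= F_j(x) + c1 t f(x, d)  for every objective j, and
    #   f(x + t d, d) >= c2 f(x, d).
    fx = [F(x) for F in objs]
    slope = f_max([g(x) for g in grads], d)  # assumed < 0 (d is a descent direction)
    lo, hi, t = 0.0, math.inf, 1.0
    for _ in range(max_iter):
        y = step(x, t, d)
        if any(F(y) > Fx + c1 * t * slope for F, Fx in zip(objs, fx)):
            hi = t  # sufficient decrease fails for some objective: shrink
        elif f_max([g(y) for g in grads], d) < c2 * slope:
            lo = t  # curvature condition fails: expand
        else:
            return t
        t = 2.0 * lo if math.isinf(hi) else 0.5 * (lo + hi)
    return t


def mo_cg(objs, grads, x0, rule="FR", tol=1e-8, max_iter=200) -> Vec:
    x = list(x0)
    gs = [g(x) for g in grads]
    v = steepest_direction(*gs)
    d = v
    for _ in range(max_iter):
        if math.sqrt(dot(v, v)) < tol:  # v(x) = 0 at a critical Pareto point
            break
        t = wolfe_step(objs, grads, x, d)
        x_new = step(x, t, d)
        gs_new = [g(x_new) for g in grads]
        v_new = steepest_direction(*gs_new)
        if rule == "FR":
            beta = f_max(gs_new, v_new) / f_max(gs, v)
        else:  # "DY"; the denominator is positive whenever the Wolfe conditions hold
            denom = f_max(gs_new, d) - f_max(gs, d)
            beta = -f_max(gs_new, v_new) / denom if denom > 0.0 else 0.0
        # On R^n the previous direction needs no vector transport.
        d = [vi + beta * di for vi, di in zip(v_new, d)]
        if f_max(gs_new, d) >= 0.0:  # hypothetical safeguard: restart with v(x)
            d = v_new
        x, gs, v = x_new, gs_new, v_new
    return x
```

For F_1(x) = ½‖x − a‖² and F_2(x) = ½‖x − b‖², the Pareto set is the segment [a, b], and v(x) points from x to its projection onto that segment. With a = (0, 0), b = (1, 0), and x0 = (3, 2), the unit step already satisfies both Wolfe conditions and lands on b, where v vanishes; objectives with anisotropic curvature are needed to see the conjugate gradient terms at work.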

References

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Bello Cruz, J.Y., Lucambio Pérez, L.R., Melo, J.G.: Convergence of the projected gradient method for quasiconvex multiobjective optimization. Nonlinear Anal. Theory Methods Appl. 74(16), 5268–5273 (2011)

Bento, G.C., Ferreira, O.P., Oliveira, P.R.: Unconstrained steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 154(1), 88–107 (2012)

Bento, G.C., Neto, J.C.: A subgradient method for multiobjective optimization on Riemannian manifolds. J. Optim. Theory Appl. 159(1), 125–137 (2013)

Bento, G.C., Neto, J.C., Meireles, L.V.: Proximal point method for locally Lipschitz functions in multiobjective optimization of Hadamard manifolds. J. Optim. Theory Appl. 179(1), 37–52 (2018)

Bento, G.C., Neto, J.C., Santos, P.: An inexact steepest descent method for multicriteria optimization on Riemannian manifolds. J. Optim. Theory Appl. 159, 108–124 (2013)

Bonnel, H., Iusem, A.N., Svaiter, B.F.: Proximal methods in vector optimization. SIAM J. Optim. 15, 953–970 (2005)

Boumal, N.: An Introduction to Optimization on Smooth Manifolds. Cambridge University Press, Cambridge (2023)

Cai, T., Song, L., Li, G., Liao, M.: Multi-task learning with Riemannian optimization. In: ICIC 2021: Intelligent Computing Theories and Application. Lecture Notes in Computer Science 12837, 499–509 (2021)

Carrizo, G.A., Lotito, P.A., Maciel, M.C.: Trust-region globalization strategy for the nonconvex unconstrained multiobjective optimization problem. Math. Program. 159, 339–369 (2016)

Carrizosa, E., Frenk, J.B.G.: Dominating sets for convex functions with some applications. J. Optim. Theory Appl. 96(2), 281–295 (1998)

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Das, I., Dennis, J.E.: A closer look at drawbacks of minimizing weighted sums of objectives for Pareto set generation in multicriteria optimization problems. Struct. Optim. 14, 63–69 (1997)

Das, I., Dennis, J.E.: Normal-boundary intersection: a new method for generating the Pareto surface in nonlinear multicriteria optimization problems. SIAM J. Optim. 8(3), 631–657 (1998)

Deb, K.: Multiobjective Optimization using Evolutionary Algorithms. Wiley, New York (2001)

Eschenauer, H., Koski, J., Osyczka, A.: Multicriteria Design Optimization. Springer, Berlin (1990)

Eslami, N., Najafi, B., Vaezpour, S.M.: A trust-region method for solving multicriteria optimization problems on Riemannian manifolds. J. Optim. Theory Appl. 196(1), 212–239 (2023)

Evans, G.W.: An overview of techniques for solving multiobjective mathematical programs. Manage. Sci. 30(11), 1268–1282 (1984)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Fliege, J.: OLAF—a general modeling system to evaluate and optimize the location of an air polluting facility. OR Spektrum 23, 117–136 (2001)

Fliege, J., Graña Drummond, L.M., Svaiter, B.F.: Newton’s method for multiobjective optimization. SIAM J. Optim. 20(2), 602–626 (2009)

Fliege, J., Svaiter, B.F.: Steepest descent methods for multicriteria optimization. Math. Methods Oper. Res. 51(3), 479–494 (2000)

Fliege, J., Vicente, L.N.: Multicriteria approach to bilevel optimization. J. Optim. Theory Appl. 131(2), 209–225 (2006)

Fukuda, E.H., Graña Drummond, L.M.: On the convergence of the projected gradient method for vector optimization. Optimization 60(8–9), 1009–1021 (2011)

Fukuda, E.H., Graña Drummond, L.M.: Inexact projected gradient method for vector optimization. Comput. Optim. Appl. 54(3), 473–493 (2013)

Gass, S., Saaty, T.: The computational algorithm for the parametric objective function. Naval Res. Logist. Quart. 2(1–2), 39–45 (1955)

Geoffrion, A.M.: Proper efficiency and the theory of vector maximization. J. Math. Anal. Appl. 22(3), 618–630 (1968)

Graña Drummond, L.M., Iusem, A.: A projected gradient method for vector optimization problems. Comput. Optim. Appl. 28, 5–29 (2004)

Graña Drummond, L.M., Raupp, F.M.P., Svaiter, B.F.: A quadratically convergent Newton method for vector optimization. Optimization 63(5), 661–677 (2014)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49, 409–435 (1952)

Lu, F., Chen, C.R.: Newton-like methods for solving vector optimization problems. Appl. Anal. 93(8), 1567–1586 (2014)

Lucambio Pérez, L.R., Prudente, L.F.: Nonlinear conjugate gradient methods for vector optimization. SIAM J. Optim. 28(3), 2690–2720 (2018)

Polak, E., Ribière, G.: Note sur la convergence de méthodes de directions conjuguées. Revue française d’informatique et de recherche opérationnelle. Série rouge 3(R1), 35–43 (1969)

Ring, W., Wirth, B.: Optimization methods on Riemannian manifolds and their application to shape space. SIAM J. Optim. 22(2), 596–627 (2012)

Sato, H.: Riemannian Optimization and its Applications. Springer, New York (2021)

Sato, H., Iwai, T.: A new, globally convergent Riemannian conjugate gradient method. Optimization 64(4), 1011–1031 (2015)

Zitzler, E., Deb, K., Thiele, L.: Comparison of multiobjective evolutionary algorithms: Empirical results. Evol. Comput. 8(2), 173–195 (2000)

Acknowledgements

The authors are deeply grateful to the editor and anonymous referees, whose patience and numerous detailed comments greatly enhanced the quality of this paper.


Additional information

Communicated by Sándor Zoltán Németh.

About this article

Cite this article

Najafi, S., Hajarian, M. Multiobjective Conjugate Gradient Methods on Riemannian Manifolds. J Optim Theory Appl 197, 1229–1248 (2023). https://doi.org/10.1007/s10957-023-02224-1
