Abstract

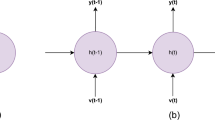

In recent years, neural topic modeling has attracted increasing attention owing to its capacity to generate coherent topics and its flexible deep neural structures. However, the Dirichlet distribution widely used in shallow topic models is difficult to reparameterize. Therefore, most existing neural topic models assume a Gaussian prior over topic proportions so that reparameterization is possible. The Gaussian distribution lacks the sparsity of the Dirichlet distribution, which limits the model’s topic extraction ability. To address this issue, we propose a novel neural topic model that approximates the Dirichlet prior with the reparameterizable Kumaraswamy distribution, namely the Kumaraswamy Neural Topic Model (KNTM). Specifically, we adopt the stick-breaking process for posterior inference, with the Kumaraswamy distribution as the base distribution. In addition, to capture the dependencies among topics, we propose the Kumaraswamy Recurrent Neural Topic Model (KRNTM), which is based on the recurrent stick-breaking construction, so that the model can still generate coherent topical words in a high-dimensional topic space. We evaluated our method on five widely used benchmark datasets in comparison with six Dirichlet-approximating neural topic models; KNTM achieves the lowest perplexity, and KRNTM performs best on topic coherence and topic uniqueness. Qualitative analysis of the top topical words verifies that our proposed models extract more semantically coherent topics than state-of-the-art models, further demonstrating the effectiveness of our method. This work contributes to the broader application of variational autoencoders (VAEs) with Dirichlet priors.
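
To make the construction named in the abstract concrete, the following is a minimal sketch of reparameterized Kumaraswamy sampling followed by stick-breaking. It is an illustration under stated assumptions, not the authors' implementation: the function names `sample_kumaraswamy` and `stick_breaking` are hypothetical, and the parameters `a` and `b` are fixed constants here, whereas in a neural topic model they would typically be produced by an encoder network.

```python
import numpy as np


def sample_kumaraswamy(a, b, rng):
    """Draw Kumaraswamy(a, b) samples through the inverse CDF (hypothetical helper).

    The CDF on (0, 1) is F(x) = 1 - (1 - x**a)**b, so for u ~ Uniform(0, 1)
    the sample is x = (1 - (1 - u)**(1/b))**(1/a). The sample is a
    deterministic, differentiable function of (a, b) given u, which is
    what makes the distribution reparameterizable.
    """
    u = rng.uniform(size=np.shape(a))
    return (1.0 - (1.0 - u) ** (1.0 / b)) ** (1.0 / a)


def stick_breaking(v):
    """Map K-1 stick fractions in (0, 1) to K proportions on the simplex.

    theta_k = v_k * prod_{j<k} (1 - v_j) for k < K, and the last
    component receives whatever length of the stick remains.
    """
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - v)))  # stick left before each break
    pieces = np.concatenate((v, [1.0]))  # the final piece takes all that remains
    return pieces * remaining


# Assumed example values: 4 stick fractions give 5 topic proportions.
rng = np.random.default_rng(0)
a = np.full(4, 1.0)
b = np.full(4, 3.0)
theta = stick_breaking(sample_kumaraswamy(a, b, rng))
```

The resulting `theta` is non-negative and sums to one, so it can serve as a vector of topic proportions. Larger values of `b` relative to `a` push the stick fractions toward zero, which moves mass toward later components.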

Availability of data and materials

The data and materials are available

Code availability

The code is also available.

Acknowledgements

We would like to acknowledge support for this project from the National Natural Science Foundation of China (NSFC) [No. 62006094, No. 61876071], the China Postdoctoral Science Foundation [No. 2019M661210], the Scientific and Technological Developing Scheme of Jilin Province [No. 20180201003SF, No. 20190701031GH], and the Energy Administration of Jilin Province [No. 3D516L921421].

Funding

This work is supported by the National Natural Science Foundation of China (NSFC) [No. 62006094, No. 61876071], the China Postdoctoral Science Foundation [No. 2019M661210], the Scientific and Technological Developing Scheme of Jilin Province [No. 20180201003SF, No. 20190701031GH], and the Energy Administration of Jilin Province [No. 3D516L921421].

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Jihong Ouyang, Teng Wang, Jingyue Cao, and Yiming Wang. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflict of interest.

Ethical approval

This research does not involve human participants or animals.

Consent to participate

Tacit consent to submit this manuscript has been received from the authors’ institution, Jilin University.

Consent for publication

All authors consent to publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ouyang, J., Wang, T., Cao, J. et al. Applying Kumaraswamy distribution on stick-breaking process: a Dirichlet neural topic model approach. Neural Comput & Applic (2024). https://doi.org/10.1007/s00521-024-09783-y

DOI: https://doi.org/10.1007/s00521-024-09783-y