Abstract

Large language models (LLMs) have demonstrated promising in-context learning capabilities, especially with instructive prompts. However, recent studies have shown that existing large models still face challenges in specific information extraction (IE) tasks. Moreover, they could make more effective use of prompting resources such as instruction tuning, diverse in-context learning demonstrations, and long-range token sequences to help the language model understand context. In this study, we propose DILUIE, a unified information extraction framework based on in-context learning with diverse demonstration examples. DILUIE encodes its input with an EVA attention mechanism and an incremental encoding technique. Based on the constructed diverse demonstrations, we efficiently expand the number of instances in both instruction tuning and in-context learning to gain insights into the potential benefits of utilizing diverse information extraction datasets. To deepen the understanding of context, we further design three auxiliary tasks that assist in aligning contextual semantics. Experimental results demonstrate that DILUIE achieves average improvements of 2.23% and 2.53% in Micro-/Macro-F1 over the current state-of-the-art baseline and also significantly outperforms GPT-3.5-turbo in zero-shot settings; the average token length at which the best performance is reached across tasks is around 15k. Furthermore, we observe that in-context learning performs better when provided with more demonstrations during multiple-shot instruction tuning (8k). Additionally, increasing the length of instructions (10k) can yield a more substantial improvement in the upper limits of scaling for in-context learning. Code is available at https://github.com/Phevos75/DILUIE.
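Since the reported gains are stated in Micro- and Macro-F1, the following minimal sketch illustrates how the two averages differ on extraction output. It is not taken from the DILUIE code base: the function names, the `(span, label)` tuple representation, and the toy data are assumptions made purely for illustration, and the paper's own evaluation script may match spans differently.

```python
from collections import Counter


def f1(tp, fp, fn):
    """F1 from raw counts; returns 0.0 when nothing was predicted or expected."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(gold, pred):
    """Compute (micro_f1, macro_f1) over exact-match extractions.

    gold, pred: lists of sets of (span, label) tuples, one set per sentence.
    This representation is hypothetical, chosen only for the example.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        for item in p:
            if item in g:
                tp[item[-1]] += 1  # correct extraction for this label
            else:
                fp[item[-1]] += 1  # spurious extraction
        for item in g:
            if item not in p:
                fn[item[-1]] += 1  # missed extraction
    labels = set(tp) | set(fp) | set(fn)
    # Micro: pool counts over all labels, then compute a single F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: compute F1 per label, then take the unweighted mean.
    macro = (
        sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
        if labels
        else 0.0
    )
    return micro, macro


# Toy data (invented): three sentences, one spurious LOC prediction.
gold = [{("Paris", "LOC"), ("Alice", "PER")}, {("Bob", "PER")}, {("Carol", "PER")}]
pred = [
    {("Paris", "LOC"), ("Alice", "PER")},
    {("Bob", "PER")},
    {("Carol", "PER"), ("Rome", "LOC")},
]
micro, macro = micro_macro_f1(gold, pred)
print(f"micro={micro:.4f} macro={macro:.4f}")
```

In this toy case the single error falls on the rarer label, so Macro-F1 is penalized more than Micro-F1, which is why the two numbers are usually reported together.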

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Al-Rfou R, Kulkarni V, Perozzi B, Skiena S (2015) Polyglot-ner: massive multilingual named entity recognition. In: In: Proceedings of the 2015 SIAM international conference on data mining. SIAM, pp 586–594

Chen W, Ma X, Wang X, Cohen WW (2022) Program of thoughts prompting: disentangling computation from reasoning for numerical reasoning tasks. ar**v preprint ar**v:2211.12588

Chen P, Xu H, Zhang C, Huang R (2022) Crossroads, buildings and neighborhoods: a dataset for fine-grained location recognition. In: Proceedings of the 2022 conference of the North American chapter of the association for computational linguistics: human language technologies, pp 3329–3339

Chen X, Ye J, Zu C, Xu N, Zheng R, Peng M, Zhou J, Gui T, Zhang Q, Huang X (2023) How robust is gpt-3.5 to predecessors? A comprehensive study on language understanding tasks. ar**v preprint ar**v:2303.00293

Chia YK, Bing L, Poria S, Si L (2022) Relationprompt: leveraging prompts to generate synthetic data for zero-shot relation triplet extraction. ar**v preprint ar**v:2203.09101

Chowdhery A, Narang S, Devlin J, Bosma M, Mishra G, Roberts A, Barham P, Chung HW, Sutton C, Gehrmann S, Schuh P (2022) Palm: scaling language modeling with pathways. ar**v preprint ar**v:2204.02311

Chung HW, Hou L, Longpre S, Zoph B, Tay Y, Fedus W, Li Y, Wang X, Dehghani M, Brahma S, Webson A (2022) Scaling instruction-finetuned language models. ar**v preprint ar**v:2210.11416

Wang X, Zhu W, Wang WY (2023) Large language models are implicitly topic models: Explaining and finding good demonstrations for incontext learning. corr, abs/2301.11916. https://doi.org/10.48550/ar**v.2301.11916

Derczynski L, Bontcheva K, Roberts I (2016) Broad twitter corpus: a diverse named entity recognition resource. In: Proceedings of COLING 2016, the 26th international conference on computational linguistics: technical papers, pp 1169–1179

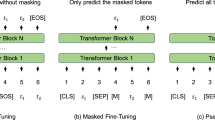

Devlin J, Chang MW, Lee K, Toutanova K (2018) Bert: pre-training of deep bidirectional transformers for language understanding. ar**v preprint ar**v:1810.04805

Dong Q, Li L, Dai D, Zheng C, Wu Z, Chang B, Sun X, Xu J, Sui Z (2022) A survey for in-context learning. ar**v preprint ar**v:2301.00234

Fei H, Wu S, Li J, Li B, Li F, Qin L, Zhang M, Zhang M, Chua TS (2022) Lasuie: unifying information extraction with latent adaptive structure-aware generative language model. Adv Neural Inf Process Syst 35:15460–15475

Guan R, Man KL, Chen F, Yao S, Hu R, Zhu X, Smith J, Lim EG, Yue Y (2023) Findvehicle and vehiclefinder: a ner dataset for natural language-based vehicle retrieval and a keyword-based cross-modal vehicle retrieval system. ar**v preprint ar**v:2304.10893

Gurulingappa H, Rajput AM, Roberts A, Fluck J, Hofmann-Apitius M, Toldo L (2012) Development of a benchmark corpus to support the automatic extraction of drug-related adverse effects from medical case reports. J Biomed Informat 45(5):885–892

Hendrickx I, Kim SN, Kozareva Z, Nakov P, Séaghdha DO, Padó S, Pennacchiotti M, Romano L, Szpakowicz S (2019) Semeval-2010 task 8: multi-way classification of semantic relations between pairs of nominals. ar**v preprint ar**v:1911.10422

He J, Wang L, Hu Y, Liu N, Liu H, Xu X, Shen HT (2023) Icl-d3ie: in-context learning with diverse demonstrations updating for document information extraction. ar**v preprint ar**v:2303.05063

Hovy E, Marcus M, Palmer M, Ramshaw L, Weischedel R (2006) Ontonotes: the 90% solution. In: Proceedings of the human language technology conference of the NAACL, companion volume: short papers, pp 57–60

Jat S, Khandelwal S, Talukdar P (2018) Improving distantly supervised relation extraction using word and entity based attention. ar**v preprint ar**v:1804.06987

Jordan Michael I, Kearns Michael J, Solla Sara A (1998) Advances in Neural Information Processing Systems 10: Proceedings of the 1997 Conference, vol 10. MIT Press

Kim J-D, Ohta T, Tateisi Y, Tsujii J (2003) Genia corpus-a semantically annotated corpus for bio-textmining. Bioinformatics, 19(suppl_1):i180–i182

Kingma Diederik P, Ba Jimmy (2014) Adam: a method for stochastic optimization. ar**v preprint ar**v:1412.6980

Kocaman V, Talby D (2022) Accurate clinical and biomedical named entity recognition at scale. Softw Impacts 13:100373

Kocaman V, Talby D (2021) Biomedical named entity recognition at scale. In: Pattern recognition. ICPR international workshops and challenges: virtual event, January 10–15, 2021, proceedings, part I. Springer, pp 635–646

Krallinger M, Rabal O, Leitner F, Vazquez M, Salgado D, Lu Z, Leaman R, Lu Y, Ji D, Lowe DM, Sayle RA (2015) The chemdner corpus of chemicals and drugs and its annotation principles. J Cheminformat 7(1):1–17

Abramski K, Citraro S, Lombardi L, Rossetti G, Stella M (2023) Cognitive network science reveals bias in gpt3, gpt3.5 turbo, and gpt4 mirroring math anxiety in highschool students. Big Data and Cognitive Computing 7(3):124

Li M, Gong S, Feng J, Xu Y, Zhang J, Wu Z, Kong L (2023) In-context learning with many demonstration examples. ar**v preprint ar**v:2302.04931

Li J, Sun Y, Johnson RJ, Sciaky D, Wei CH, Leaman R, Davis AP, Mattingly CJ, Wiegers TC, Lu Z (2016) Biocreative v cdr task corpus: a resource for chemical disease relation extraction. In: Database

Liu Z, Xu Y, Yu T, Dai W, Ji Z, Cahyawijaya S, Madotto A, Fung P (2021) Crossner: evaluating cross-domain named entity recognition. Proc AAAI Confer Artif Intell 35:13452–13460

Liu Y, Meng F, Zhang J, Xu J, Chen Y, Zhou J (2019) Gcdt: a global context enhanced deep transition architecture for sequence labeling. ar**v preprint ar**v:1906.02437

Liu J, Shen D, Zhang Y, Dolan B, Carin L, Chen W (2021) What makes good in-context examples for gpt-3? ar**v preprint ar**v:2101.06804

Lou J, Lu Y, Dai D, Jia W, Lin H, Han X, Sun L, Wu H (2023) Universal information extraction as unified semantic matching. ar**v preprint ar**v:2301.03282

Luan Y, He L, Ostendorf M, Hajishirzi H (2018) Multi-task identification of entities, relations, and coreference for scientific knowledge graph construction. ar**v preprint ar**v:1808.09602

Lu Y, Bartolo M, Moore A, Riedel S, Stenetorp P (2021) Fantastically ordered prompts and where to find them: overcoming few-shot prompt order sensitivity. ar**v preprint ar**v:2104.08786

Lu Y, Lin H, Xu J, Han X, Tang J, Li A, Sun L, Liao M, Chen S (2021) Text2event: controllable sequence-to-structure generation for end-to-end event extraction. ar**v preprint ar**v:2106.09232

Lu Y, Liu Q, Dai D, **ao X, Lin H, Han X, Sun L, Wu H (2022) Unified structure generation for universal information extraction. ar**v preprint ar**v:2203.12277

Min S, Lewis M, Zettlemoyer L, Hajishirzi H (2021) Metaicl: learning to learn in context. ar**v preprint ar**v:2110.15943

Mirowski P, Steck H, Whiting P, Palaniappan R, MacDonald M, Ho TK (2011) Kl-divergence kernel regression for non-gaussian fingerprint based localization. In: 2011 international conference on Indoor positioning and Indoor navigation. IEEE, pp 1–10

Mitchell A, Strassel S, Huang S, Zakhary R (2005) Ace 2004 multilingual training corpus. Linguist Data Consort, Phila 1:1

Ott M, Edunov S, Baevski A, Fan A, Gross S, Ng N, Grangier D, Auli M (2019) fairseq: a fast, extensible toolkit for sequence modeling. ar**v preprint ar**v:1904.01038

Ouyang L, Wu J, Jiang X, Almeida D, Wainwright C, Mishkin P, Zhang C, Agarwal S, Slama K, Ray A, Schulman J (2022) Training language models to follow instructions with human feedback. Adv Neural Inf Process Syst 35:27730–27744

Pan X, Zhang B, May J, Nothman J, Knight K, Ji H (2017) Cross-lingual name tagging and linking for 282 languages. In: Proceedings of the 55th annual meeting of the association for computational linguistics (volume 1: long papers), pp 1946–1958

Poolsawad N, Kambhampati C, Cleland JGF (2014) Balancing class for performance of classification with a clinical dataset. Proc World Congr Eng 1:1–6

Riedel S, Yao L, McCallum A (2010) Modeling relations and their mentions without labeled text. In: Machine learning and knowledge discovery in databases: European conference, ECML PKDD 2010, Barcelona, Spain, September 20–24, 2010, proceedings, part III, vol 21. Springer, pp 148–163

Roth D, Yih W-T (2004) A linear programming formulation for global inference in natural language tasks. In: Proceedings of the 8th conference on computational natural language learning (CoNLL-2004) at HLT-NAACL 2004, pp 1–8

Sang EF, De Meulder F (2003) Introduction to the conll-2003 shared task: Language-independent named entity recognition. ar**v preprint ar**v:cs/0306050

Shaikh O, Zhang H, Held W, Bernstein M, Yang D (2022) On second thought, let’s not think step by step! bias and toxicity in zero-shot reasoning. ar**v preprint ar**v:2212.08061

Sun Z, Li J, Pergola G, Wallace BC, John B, Greene N, Kim J, He Y (2022) Phee: a dataset for pharmacovigilance event extraction from text. ar**v preprint ar**v:2210.12560

Takanobu R, Zhang T, Liu J, Huang M (2019) A hierarchical framework for relation extraction with reinforcement learning. Proc AAAI Confer Artif Intell 33:7072–7079

Tedeschi S, Navigli R (2022) Multinerd: a multilingual, multi-genre and fine-grained dataset for named entity recognition (and disambiguation). In: Findings of the association for computational linguistics: NAACL 2022, pp 801–812

Tedeschi S, Maiorca V, Campolungo N, Cecconi F, Navigli R (2021) Wikineural: combined neural and knowledge-based silver data creation for multilingual NER. In: Findings of the association for computational linguistics: EMNLP 2021, pp 2521–2533

Ushio A, Neves L, Silva V, Barbieri F, Camacho-Collados J (2022) Named entity recognition in twitter: a dataset and analysis on short-term temporal shifts. ar**v preprint ar**v:2210.03797

Walker C, Strassel S, Medero J, Maeda K (2006) Ace 2005 multilingual training corpus. Linguist Data Consort, Phila 57:45

Wan Z, Cheng F, Mao Z, Liu Q, Song H, Li J, Kurohashi S (2023) Gpt-re: in-context learning for relation extraction using large language models. ar**v preprint ar**v:2305.02105

Wang X, Dou S, **ong L, Zou Y, Zhang Q, Gui T, Qiao L, Cheng Z, Huang X (2022) Miner: Improving out-of-vocabulary named entity recognition from an information theoretic perspective. ar**v preprint ar**v:2204.04391

Wang B, Min S, Deng X, Shen J, Wu Y, Zettlemoyer L, Sun H (2022) Toward understanding chain-of-thought prompting: an empirical study of what matters. ar**v preprint ar**v:2212.10001

Wang X, Zhou W, Zu C, **a H, Chen T, Zhang Y, Zheng R, Ye J, Zhang Q, Gui T, Kang J (2023) Instructuie: Multi-task instruction tuning for unified information extraction. ar**v preprint ar**v:2304.08085

Wang X, Zhu W, Saxon M, Steyvers M, Wang WY (2023) Large language models are implicitly topic models: explaining and finding good demonstrations for in-context learning. ar**v preprint ar**v:2301.11916

Wei J, Wang X, Schuurmans D, Bosma M, **a F, Chi E, Le QV, Zhou D (2022) Chain-of-thought prompting elicits reasoning in large language models. Adv Neural Inf Process Syst 35:24824–24837

Wu Z, Wang Y, Ye J, Kong L (2022) Self-adaptive in-context learning. ar**v preprint ar**v:2212.10375

**e SM, Raghunathan A, Liang P, Ma T (2021) An explanation of in-context learning as implicit bayesian inference. ar**v preprint ar**v:2111.02080

**e SM, Raghunathan A, Liang P, Ma T (2023) Efficient attention via control variates. ar**v preprint ar**v:2302.04542

Yan H, Dai J, Qiu X, Zhang Z (2021) A unified generative framework for aspect-based sentiment analysis. ar**v preprint ar**v:2106.04300

Ye J, Chen X, Xu N, Zu C, Shao Z, Liu S, Cui Y, Zhou Z, Gong C, Shen Y, Zhou J (2023) A comprehensive capability analysis of gpt-3 and gpt-3.5 series models. ar**v preprint ar**v:2303.10420

Zeng A, Attarian M, Ichter B, Choromanski K, Wong A, Welker S, Tombari F, Purohit A, Ryoo M, Sindhwani V, Lee J (2022) Socratic models: Composing zero-shot multimodal reasoning with language. ar**v preprint ar**v:2204.00598

Zhang S, Roller S, Goyal N, Artetxe M, Chen M, Chen S, Dewan C, Diab M, Li X, Lin XV, Mihaylov T (2022) Opt: open pre-trained transformer language models. ar**v preprint ar**v:2205.01068

Zhang D, Wang D (2015) Relation classification via recurrent neural network. ar**v preprint ar**v:1508.01006

Zhang Y, Zhong V, Chen D, Angeli G, Manning CD (2017) Position-aware attention and supervised data improve slot filling. In: Conference on empirical methods in natural language processing

Zheng L, Wang C, Kong L (2022) Linear complexity randomized self-attention mechanism. In: International conference on machine learning. PMLR, pp 27011–27041

Zhengyuan Yang, Zhe Gan, Jianfeng Wang, **aowei Hu, Yumao Lu, Zicheng Liu, Lijuan Wang (2022) An empirical study of gpt-3 for few-shot knowledge-based VQA. Proc AAAI Confer Artif Intell 36:3081–3089

Zhou D, Scharli N, Hou L, Wei J, Scales N, Wang X, Schuurmans D, Cui C, Bousquet O, Le Q, Chi E (2022) Least-to-most prompting enables complex reasoning in large language models. ar**v preprint ar**v:2205.10625

Acknowledgements

This research was financially supported by the Science and Technology Committee of Shanghai Municipality (STCSM) (Science and Technology Program Grants 22511104800 and 22DZ1204903).

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

About this article

Cite this article

Guo, Q., Guo, Y. & Zhao, J. Diluie: constructing diverse demonstrations of in-context learning with large language model for unified information extraction. Neural Comput & Applic (2024). https://doi.org/10.1007/s00521-024-09728-5

DOI: https://doi.org/10.1007/s00521-024-09728-5