Abstract

Pests significantly reduce product yield and quality in agricultural production. Agricultural producers may not accurately identify pests and signs of pest damage, so incorrect or excessive insecticides may be used. Excessive use of insecticides not only harms human health and pollutes the environment, but also increases input costs. Therefore, early detection and diagnosis of pests is extremely important. In this study, the effectiveness of instance segmentation, a deep learning-based method, was investigated for the early detection of the damage caused by the T. absoluta pest on the leaves of tomato plants under greenhouse conditions. An original dataset was created by acquiring 800 images of healthy and damaged leaves under greenhouse conditions. The acquired images were labelled with bounding boxes, which were automatically converted to mask labels with the Segment Anything Model (SAM). YOLOv8(n/s/m/l/x)-Seg models were trained on the created dataset. As a result of the training, the box performance of the proposed YOLOv8l-Seg model was measured as 0.924 in the mAP0.5 metric. The YOLOv8l-Seg model showed the best mask performance, with mAP0.5, mAP0.5–0.95, Precision and Recall values of 0.935, 0.806, 0.956 and 0.859, respectively. When trained with different input sizes, the YOLOv8l-Seg model showed the best performance at 640 × 640 and the lowest performance, 0.699 in the mAP0.5 metric, at 80 × 80. The same dataset was also used to train YOLOv7, YOLOv5l, YOLACT and Mask R-CNN instance segmentation models, and their performance was compared with that of the YOLOv8l-Seg model. The model that best detected T. absoluta damage in tomato plants was the YOLOv8l-Seg model, whereas the Mask R-CNN model showed the lowest performance, with an mAP0.5 of 0.806. The results obtained from this study revealed that the proposed model and method can be used effectively in detecting the damage caused by the T. absoluta pest.

Introduction

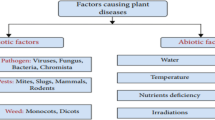

Agriculture is a complex activity that combines biological, physical, and chemical processes. In recent years, due to many factors, such as weather and climate conditions, soil degradation, diseases and pests, and environmental pollution, the agricultural sector has faced significant difficulties in feeding the world population with food of sufficient quantity and quality [6, 44]. As a result of climate change, the number of various plant diseases and pest species is increasing, and the losses are reaching increasingly serious levels. Coping with plant diseases and pests is a fundamental requirement to ensure agricultural crop productivity [25]. One of these pests, T. absoluta, is considered one of the most serious invasive pests, originating from South America and causing great damage to tomato production in Mediterranean Basin countries [9]. The pest can cause damage to many different parts of the plant, from leaves to flowers, from stems to fruits [1]. Therefore, real-time and early identification of T. absoluta may play an important role in improving farmers' pest management decisions [47]. However, detecting plant diseases and pest damage accurately and objectively, and identifying affected areas, are still challenging processes [58]. The use of technological methods that allow rapid detection of the severity and development of a disease offers many advantages in diagnosis [43]. Image analysis tools developed to detect plant diseases and pest damage accurately and effectively can help prevent the loss of labour and time that may occur during evaluations made by experts with the naked eye [53]. For this reason, technological approaches that diagnose plant diseases and pests independently of humans are being intensively studied with techniques such as image processing and deep learning [42].
Diagnosing diseases in plants can be done based on visual symptoms, such as different coloured spots, lines or dots that appear on the stems, leaves or different organs of the plant. These symptoms can be analysed using image processing techniques and differentiated according to the types of plant diseases. Deep learning methods are a powerful tool used to recognise these visual symptoms and diagnose plant diseases [41]. One of the deep learning methods that has recently attracted great attention in various computer vision applications is instance segmentation. Semantic segmentation, which is similar to the instance segmentation method, provides precise inferences by predicting the labels for each pixel in the input image, and each pixel is labelled according to the object class it is in. Instance segmentation, on the other hand, is designed to identify and segment the pixels of each object instance [16, 17]. Therefore, the instance segmentation method not only detects the position of the object but also marks the edges for each instance [49]. Due to this feature, it also performs object detection and semantic segmentation operations at the same time [38].

In agriculture, state-of-the-art (SOTA) artificial intelligence methods are used in many applications, such as monitoring plant development [12], determining plant nutritional needs [50], detecting plant diseases [2], and yield estimation [32, 55], in order to increase efficiency and quality and contribute to reducing losses. There are also studies on the detection of many agricultural pests [29, 62] and the damage they cause [15, 65] using SOTA models. The detection of one of these pests, T. absoluta, has been a topic of interest in the field of deep learning. However, whilst most of these studies focussed on classifying T. absoluta impacts, only a limited number specifically addressed T. absoluta detection using object detection techniques. Detection poses a more complex challenge than classification and is of greater importance in real-world applications [15]. Several studies have explored the application of artificial intelligence (AI) and deep learning models in detecting and managing T. absoluta damage. Mkonyi et al. [37] introduced an AI-powered tool using Convolutional Neural Networks (CNNs) that detects T. absoluta damage with 91.9% accuracy. Loyani et al. [35] used U-Net and Mask R-CNN models to precisely identify damaged parts in tomato leaves. Georgantopoulos et al. [14] highlighted the importance of Faster R-CNN in detecting T. absoluta and presented a comprehensive dataset for localization and classification. The researchers stated that they used the Feature Pyramid Networks (FPN) module with the Faster R-CNN architecture to address the challenge of detecting small damages and made significant progress in early pest detection. However, the authors of [15] noted a decrease in performance when models such as Faster R-CNN and RetinaNet were used alone for T. absoluta detection in tomato plants, highlighting the difficulty of addressing plant health scenarios with real-world datasets.
For this reason, in the study, it was stated that ensemble techniques, such as Non-Maximum Suppression (NMS), Soft Non-Maximum Suppression (Soft NMS), Non-Maximum Weighted (NMW), and Weighted Boxes Fusion (WBF), were applied to improve Faster R-CNN performance. At the end of the study, it was reported that a 20% improvement was achieved in the mAP metric. Şahin et al. [51] studied the impact of T. absoluta on agricultural production. In the study, it was reported that a single-stage YOLOv5 model was used to classify leaves and detect galleries formed by larvae and pests, and an 80% accuracy rate was achieved. In addition, the findings of the study emphasised the potential of YOLO deep learning algorithms to reduce pesticide use in agriculture. Yue et al. [61], in their study where they detected tomato diseases with the YOLOv8-Seg instance segmentation model, stated that YOLOv8 adopted an innovative anchor-free method, as opposed to the anchor-based detection head of YOLOv5. It has been stated that with this method, the number of hyperparameters is reduced and the segmentation performance is improved whilst increasing the scalability of the model.

In this study, the proposed method is successful in capturing complex spatial relationships and patterns in 800 tomato leaf images obtained from greenhouse conditions with natural illumination, making it possible to determine the damage caused by the tomato leafminer, T. absoluta (Meyrick) (Lepidoptera: Gelechiidae), on the leaves of the tomato plant by the instance segmentation method. Compared to methods using traditional bounding boxes and classification tools in detecting agricultural pest damage, the instance segmentation method is expected to provide convenience to experts and growers and contribute to increasing efficiency as it increases classification accuracy. The featured aspects of the study and its contributions to the literature are highlighted below:

1. When training deep learning models, the dataset plays one of the most critical roles in pest damage prediction. The most widely used public datasets for tomato disease and pest damage detection are PlantVillage and PlantDoc. However, these datasets were created in a laboratory/controlled environment and the models trained on them do not perform well on real-world images [13]. For the proposed method to perform well under real growing conditions, an original dataset of 800 tomato leaf images with healthy and pest damage was created under naturally illuminated greenhouse conditions (different illumination, shadows and complex backgrounds). The number of images was increased to 1680 with data augmentation techniques.

2. The created dataset was first labelled with the help of the bounding box labelling tool. These labels were then automatically converted into mask labels using the Segment Anything Model (SAM) [27] algorithm. In this way, the difficult process of mask labelling was completed more quickly. Additionally, mask labelling helped the model perform better than traditional bounding box labelling.

3. In real-time applications, single-stage deep learning models are frequently preferred because they make faster inferences [21, 36, 48, 56]. For this reason, single-stage YOLOv8(n/s/m/l/x)-Seg models with different sizes were preferred in the study. The YOLOv8l-Seg model proposed in the study showed the best performance in training results.

4. Experiments with different input sizes are important to improve the overall performance of the model and obtain better results. In this context, tests were conducted to evaluate how the proposed model responds to input data at various resolutions and to determine the input size that gives the best performance. The proposed model showed the best performance with an input size of 640.

5. Finally, performance comparisons of the proposed model with two-stage and single-stage instance segmentation models, such as Mask R-CNN [19], YOLACT [7], YOLOv5l-Seg [23] and YOLOv7-Seg [54], were made. As a result of the comparison, it was determined that the model that best detected T. absoluta damage on tomato plants was the proposed YOLOv8l-Seg model.

Materials and methods

Tomato leafminer—Tuta absoluta (Meyrick) (Lepidoptera: Gelechiidae)

Tomato cultivation is a sector where human health and environmental risks increase due to excessive use of insecticides to combat various pests [14]. T. absoluta (Meyrick) is a pest species belonging to the Gelechiidae family of the Lepidoptera order and is also known as the tomato leafminer [45]. The pest produces approximately 12 generations per year. The mature female of the pest can lay 250–300 eggs at a time. An important invader of tomato plants, this pest has a life cycle with four developmental stages: egg (Fig. 1A), larva (Fig. 1B), pupa (Fig. 1C) and adult (Fig. 1D) [37]. T. absoluta larvae hatch from the eggs and begin to feed by entering fruits, leaves, stems, and trunks. The larvae can damage all parts of the tomato plant except the roots, and this damage occurs at all stages of growth. The larva feeds by opening galleries between the two epidermises of tomato leaves. Over time, these galleries dry out and turn into necrotic brown spots. Due to this damage, the green parts of the plant may dry out and serious losses may occur [26]. The pupa of the pest is 6 mm in size; it is greenish at first and later turns light brown. The adult of the pest is slender, 6 mm long, with a wingspan of approximately 10 mm.

The developmental stages of the tomato leafminer—T. absoluta: A egg, B larva, C pupa and D adult [11]

T. absoluta can cause different severities of damage on tomato leaves (Fig. 2). Since the economic threshold value is exceeded when a high damage rate (Fig. 2c) occurs, the damage must be detected in the early period (Fig. 2b).

YOLOv8l-Seg instance segmentation model

YOLO models do not include a region proposal stage, unlike two-stage detectors such as the R-CNN family [46]. Unlike two-stage methods, single-stage methods predict the object's location and class simultaneously, and they can achieve faster detection with high accuracy. At the same time, single-stage models are more suitable for real-time applications than two-stage models as they provide lower training times and faster inference. Due to these advantages, the YOLOv8 model [24], the most current version of the YOLO family, was preferred in the study. The YOLOv8 model is used in various object detection tasks. The YOLOv8 architecture is developed from YOLOv5 by integrating important design aspects of newer algorithms, such as YOLOX, YOLOv6 and YOLOv7 [63]. However, in the YOLOv8 model, significant updates have been made to the layer and block structures compared to previous versions. The C2f module, created by combining the C3 module in the backbone and neck networks of the YOLOv5 model and the ELAN structure in the YOLOv7 model, has been integrated into the YOLOv8 model [34]. The YOLOv8s network consists of backbone (Fig. 3a), neck (Fig. 3b) and head (Fig. 3c) parts, as shown in the architecture in Fig. 3. The C2f module (Fig. 3e), located in the backbone and neck, facilitates a more robust gradient information flow by integrating two parallel gradient flows [5]. The Spatial Pyramid Pooling Fast (SPPF) module (Fig. 3f), located in the backbone section, performs serial and parallel spatial pyramid pooling, increasing the coverage area of feature maps and integrating multi-scale information. YOLOv8 can be used for instance segmentation as well as object detection [40]. Since YOLOv8 is an anchor-free model, unlike previous YOLO models, it has been made suitable for instance segmentation tasks under the name YOLOv8-Seg [3].
YOLOv8-Seg shares the same basic architecture as YOLOv8 with an additional output module in the head that produces mask coefficients, and an additional Fully Convolutional Networks (FCN) layer called the Proto module (Fig. 3d). This module outputs masks that help segmentation.
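The way mask coefficients and prototype masks combine can be illustrated with a minimal sketch. It assumes the YOLACT-style assembly, in which an instance mask is the sigmoid of a linear combination of prototype masks weighted by that instance's coefficients. The function name `assemble_mask`, the 2 × 2 prototypes and the coefficient values are hypothetical and chosen only for illustration; a real model uses many more prototypes at a much higher resolution.

```python
import math


def assemble_mask(coefficients, prototypes, threshold=0.5):
    """Combine prototype masks with one instance's mask coefficients.

    coefficients: list of k floats predicted for one detected instance.
    prototypes:   list of k prototype masks, each an H x W nested list.
    Returns an H x W binary mask as a nested list of 0/1 values.
    """
    height = len(prototypes[0])
    width = len(prototypes[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Linear combination of all prototypes at this pixel.
            logit = sum(c * p[y][x] for c, p in zip(coefficients, prototypes))
            probability = 1.0 / (1.0 + math.exp(-logit))  # sigmoid
            row.append(1 if probability > threshold else 0)
        mask.append(row)
    return mask


# Hypothetical toy input: two 2 x 2 prototypes and one instance's coefficients.
prototypes = [
    [[1.0, 0.0], [1.0, 0.0]],  # prototype 0 responds to the left column
    [[0.0, 0.0], [1.0, 1.0]],  # prototype 1 responds to the bottom row
]
coefficients = [2.0, -3.0]
instance_mask = assemble_mask(coefficients, prototypes)
print(instance_mask)
```

In this toy case the positive coefficient keeps the left column and the negative coefficient subtracts the bottom row, so only the top-left pixel remains in the mask.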

YOLOv8-Seg is divided into five models: YOLOv8n-Seg, YOLOv8s-Seg, YOLOv8m-Seg, YOLOv8l-Seg and YOLOv8x-Seg [59]. The scaling parameters of these models are given in Table 1. Since each model contains different layers, blocks, modules and channel numbers, training was conducted with all YOLOv8-Seg models to determine the model that will show the best performance in the study.

Data acquisition

To create the dataset used in the study, images of 800 tomato leaves, healthy and with T. absoluta damage, were acquired from the Gümenek village of the Central district of Tokat province, Turkey, on different dates (August 10 and September 7, 2023) under greenhouse conditions with natural lighting. Two smartphones (iPhone XS and iPhone 8, Apple Inc., California, USA) were used to acquire images. Images were acquired in .jpeg format at 1173 × 1173 pixels (1:1 aspect ratio) with 2× optical zoom. Each image in the dataset has a complex background. To create diversity in the dataset, images were acquired to cover different levels of damage caused by the pest on the leaf. This diversity allows the damage caused by the pest to the leaves to be represented in varying intensity and extent, from the lowest to the highest levels. The images acquired in this way can be used to evaluate the model's ability to detect different levels of damage. The images were obtained under the supervision of a plant protection specialist, and care was taken to ensure that there were no signs of other diseases or pests in the greenhouse and that no pesticides had been applied beforehand.

Data preprocessing, annotation, and augmentation

The Roboflow platform was used for image pre-processing, labelling and data augmentation. The dataset labels belong to 2 classes: “Healthy” and “Tuta absoluta”. Images with a size of 1173 × 1173 pixels were resized to 640 × 640 pixels on the Roboflow platform. In the literature, in deep learning studies where plant disease and pest damage detection is performed, datasets are generally divided according to the cross-validation methodology in ratios of 8:1:1 [60], 7:3 [61], 7:2:1 [31], 9:1 [8] and 7:1:2 [4]. Based on these studies, the dataset was divided in the ratio of 7:1:2 as given in Table 2.
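As a worked illustration of the 7:1:2 ratio, the sketch below splits 800 items into three subsets. It assumes that the ratio is ordered as training, validation and test; the function `split_dataset`, the fixed seed and the file names are hypothetical, and the actual split in the study was performed on the Roboflow platform.

```python
import random


def split_dataset(items, ratios=(7, 1, 2), seed=0):
    """Shuffle items and split them into train/validation/test by integer ratios."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test subset
    return train, val, test


# Hypothetical file names standing in for the 800 acquired images.
image_names = [f"leaf_{i:04d}.jpeg" for i in range(800)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))
```

With 800 images this gives 560 training images, which matches the number of training images reported before augmentation, plus 80 and 160 images for the other two subsets.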

Segment anything model (SAM)

The Segment Anything Model (SAM), introduced in April 2023, is used as a benchmark model to segment various natural images [20]. SAM specifically employs a vision transformer-based image encoder to extract image features and incorporates prompt encoders to integrate user interactions. Subsequently, it utilises a mask decoder to generate segmentation results and confidence scores based on the image embedding, prompt embedding, and output token. It is capable of achieving zero-shot generalisation to unfamiliar objects and images without the need for additional training [10]. Regional labelling required for instance segmentation is a time-consuming and challenging process. In the study, the general architecture showing the transformation stages of images reduced in size and labelled as bounding box into mask labels is given in the flow chart in Fig. 4. With the SAM algorithm using the smart polygon labelling tool, mask borders were automatically drawn around the areas where pest damage occurs. After labelling, data augmentation was applied. Data augmentation is a method used to effectively solve problems in cases where insufficient and unbalanced samples are available. In this method, similar but different samples are created by changing the original samples. By further improving the dataset with the examples created, the generalisation ability of the model is increased and helps to avoid overfitting [18]. The structure and the quality of the data are very important in a training dataset. In the study, data augmentation with rotation methods was preferred to avoid affecting the structure and quality of the data. The number of 560 images allocated for training was increased to 1680 with "Clockwise" and "Counter-clockwise" data augmentation methods. For training the models of the dataset whose labelling was completed, an export API code was created via the Roboflow platform in YOLOv5 PyTorch format for YOLO models and in COCO JSON format for YOLACT and Mask R-CNN models.
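The growth of the training set from 560 to 1680 images is consistent with keeping each original image and adding one clockwise and one counter-clockwise copy. The sketch below illustrates this under the assumption that the rotations are by 90°, which the text does not state explicitly. It also shows how normalised mask polygon points (origin at the top-left corner, y pointing down) would have to be rotated together with the image. All names and the sample polygon are hypothetical.

```python
def rotate_polygon_90(points, clockwise=True):
    """Rotate normalised (x, y) polygon points by 90 degrees with the image.

    Coordinates lie in [0, 1] with the origin at the top-left corner.
    """
    if clockwise:
        # The top-left corner of the image moves to the top-right corner.
        return [(1.0 - y, x) for x, y in points]
    # Counter-clockwise: the top-left corner moves to the bottom-left corner.
    return [(y, 1.0 - x) for x, y in points]


def augment(sample):
    """Return the original sample plus its two rotated copies."""
    name, polygon = sample
    return [
        (name, polygon),
        (name + "_cw", rotate_polygon_90(polygon, clockwise=True)),
        (name + "_ccw", rotate_polygon_90(polygon, clockwise=False)),
    ]


# Hypothetical training set: 560 images, each with one triangular mask label.
training_set = [
    (f"leaf_{i:04d}", [(0.1, 0.2), (0.4, 0.2), (0.4, 0.6)]) for i in range(560)
]
augmented = [copy for sample in training_set for copy in augment(sample)]
print(len(augmented))
```

Each of the 560 samples yields three entries, so the augmented list has 560 × 3 = 1680 entries.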

The labels of the images were made manually in the first stage with the bounding box label tool. To check the accuracy of the bounding box label in the created dataset, the statistical results shown in Fig. 5 were obtained. Here, Fig. 5a shows the number of labels in the created dataset. This provides information about the diversity and distribution of labels used in training, validation and testing of the model. “Tuta absoluta” class has the highest number of labels due to its smaller label size compared to the “Healthy” class. Figure 5b shows the bounding box size used for labels. Figure 5c shows the normalised position map of the labels on the data. This shows the distribution and density of locations on the images based on the size of the labels. Figure 5d is the normalised label size map. In this map, which is based on the width and height of the labels, it is seen that the distribution progresses linearly and labels with low width and height are more dense.

The accuracy of bounding box labels on the data was also evaluated from another perspective. The distribution and the correlation of the labels are shown in the correlogram distribution graph created in Fig. 6. When the label position (x,y) and label size (width, height) are compared in rows and columns according to the histogram and correlation graphs, it is seen that there is a clear similarity, closeness to 1 and a positive correlation. As the label position (x,y) and label size (width, height) variables increase or decrease, other variables also move in the same direction. These visual results mean that labels often grow or shrink proportionally. These presented graphs show that the label sizes and their positions on the data have a regular distribution and do not contain any irregular data. These results indicate that the quality of the dataset to be used for training the model is high and the labels are placed correctly.
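The kind of relationship read from the correlogram can also be checked numerically. The sketch below computes the Pearson correlation coefficient between label widths and heights. The label rows are hypothetical values in the YOLO layout (class, x centre, y centre, width, height) and are not taken from the study's dataset.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical label rows: class_id, x_center, y_center, width, height (normalised).
labels = [
    (1, 0.30, 0.40, 0.10, 0.12),
    (1, 0.55, 0.35, 0.08, 0.09),
    (0, 0.50, 0.50, 0.60, 0.70),
    (1, 0.70, 0.65, 0.15, 0.18),
    (0, 0.45, 0.55, 0.55, 0.62),
]
widths = [row[3] for row in labels]
heights = [row[4] for row in labels]
r_wh = pearson(widths, heights)
print(f"width-height correlation: {r_wh:.3f}")
```

A coefficient close to 1 corresponds to the situation described above, in which labels grow or shrink proportionally in width and height.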

Model training

A Google Colab notebook was used for training the YOLOv8(n/s/m/l/x)-Seg models, and the model software was run on this notebook. The dataset and its label files, prepared on the Roboflow platform, were imported into the notebook through the export API code in YOLOv5 PyTorch format. In the study, a computer (MacBook Air 2017, Apple Inc., California, USA) with the macOS Monterey operating system was used to run the Colab notebook. An NVIDIA Tesla A100 GPU (virtual GPU, 40 GB) was used to train the model on the Colab notebook. There are studies in the literature that use instance segmentation and object detection models, such as improved YOLOv8-Seg [61], YOLOv5n-Seg [64], MHSA-YOLOv8 [31] and Alpha-EIOU-YOLOv8 [52], in detecting plant disease and pest damage. In these studies, it was observed that the hyperparameters (learning rate, batch size, momentum, etc.) were left at the standard, model-optimised values. For this reason, the standard training hyperparameters given in Table 3 were used in training the model.
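A training run of this kind could be set up roughly as follows with the Ultralytics Python package. This is a sketch under assumptions rather than the authors' notebook: `data.yaml` is a hypothetical path to the exported dataset description, only the input size (640) and the number of epochs (100) are taken from the text, and all other hyperparameters are left at the library defaults.

```python
# The five YOLOv8-Seg variants named in the study, mapped to Ultralytics weight files.
VARIANTS = {size: f"yolov8{size}-seg.pt" for size in "nsmlx"}

# Settings taken from the text: 640 x 640 inputs and 100 epochs.
# "data.yaml" is a hypothetical path to the exported dataset description.
TRAIN_ARGS = {"data": "data.yaml", "imgsz": 640, "epochs": 100}


def train_variant(size, **overrides):
    """Train one YOLOv8-Seg variant; requires the ultralytics package and a GPU runtime."""
    from ultralytics import YOLO  # imported lazily so the sketch loads without the package

    model = YOLO(VARIANTS[size])
    return model.train(**{**TRAIN_ARGS, **overrides})


print(VARIANTS["l"])
```

Calling `train_variant("l")` would start training the large variant, and passing for example `imgsz=80` as an override would repeat the run at a smaller input size.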

Model evaluation indicators

In the experiments, the mAP0.5 and mAP0.5–0.95 values, calculated with Eq. (1) and Eq. (2), respectively, were used to measure the box and mask accuracy of the model [59, 62].

In the formula, "nc" indicates the number of classes, "P" represents precision and "R" represents recall. P is calculated as shown in Eq. (3) and R is calculated as shown in Eq. (4) [62].
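For reference, the standard definitions of these metrics, which Eqs. (1)–(4) are assumed to follow, can be written as below. The per-class average precision $AP_i$ (the area under the precision-recall curve of class $i$) and the threshold index $t$ are notation introduced here for illustration.

```latex
\begin{align}
\mathrm{mAP}_{0.5} &= \frac{1}{nc}\sum_{i=1}^{nc} AP_i\Big|_{\mathrm{IoU}=0.5},
  \qquad AP_i = \int_0^1 P_i(R)\,dR \tag{1}\\
\mathrm{mAP}_{0.5\text{--}0.95} &= \frac{1}{10}\sum_{t \in \{0.50,\,0.55,\,\ldots,\,0.95\}}
  \frac{1}{nc}\sum_{i=1}^{nc} AP_i\Big|_{\mathrm{IoU}=t} \tag{2}\\
P &= \frac{TP}{TP+FP} \tag{3}\\
R &= \frac{TP}{TP+FN} \tag{4}
\end{align}
```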

TP is the number of True Positives, that is, correctly detected results, counted as prediction boxes with IoU > 0.5. FP is the number of False Positives, that is, incorrectly detected results, counted as prediction boxes with IoU ≤ 0.5. FN is the number of False Negatives, that is, ground-truth labels that the model failed to predict and therefore treated as background. These metrics measure the efficiency of the model in correctly identifying and segmenting objects in images [39].
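How TP, FP and FN follow from the IoU threshold can be sketched in a few lines. The example below uses a simplified greedy one-to-one matching between predicted and ground-truth boxes. Real evaluators additionally sort predictions by confidence and handle classes separately, so this is an illustration of the definitions rather than the evaluation code used in the study. The box coordinates are hypothetical.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truths, iou_threshold=0.5):
    """Greedily match each prediction to its best unmatched ground truth."""
    matched = set()
    tp = 0
    for pred in predictions:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            iou = box_iou(pred, gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou > iou_threshold:
            matched.add(best_idx)  # true positive: IoU above the threshold
            tp += 1
    fp = len(predictions) - tp    # predictions without a matching label
    fn = len(ground_truths) - tp  # labels the model missed
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truths else 0.0
    return precision, recall


# Hypothetical boxes: one good prediction, one spurious prediction, one missed label.
ground_truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(0, 0, 10, 8), (50, 50, 60, 60)]
precision, recall = precision_recall(predictions, ground_truths)
print(precision, recall)
```

Here the first prediction overlaps its label with an IoU of 0.8 and counts as a TP, the second prediction is an FP, and the second label is an FN, which gives a precision and a recall of 0.5 each.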

Experimental results and discussion

Model results

In the study, training was carried out using YOLOv8-Seg models of different sizes and the parameters in Table 3. A comparison of the model performances is given in Table 4. The criteria in Table 4 include the number of parameters, the number of layers, the training time, the GFLOPs (a measure of the computational intensity of a model), the GPU use on the Tesla A100, and the mask mAP values at different IoU thresholds (0.5 and 0.5–0.95). As the number of model parameters increases, the training time and the GPU use also increase. Although the smallest model, YOLOv8s-Seg, has a low number of parameters, number of layers, training time and GPU use, it showed the lowest performance when evaluated in the mask mAP0.5 metric. YOLOv8x-Seg is the model with the highest number of parameters, number of layers, training time and GPU use of the family. However, in the experiments performed, it was not the model with the highest mask mAP values. This shows that the added complexity and training cost of this model did not translate into higher accuracy. Although YOLOv8l-Seg is the mid-level model in terms of number of parameters, number of layers, training time, GFLOPs value and GPU use, it outperformed all other models. These results show that it is more computationally appropriate to use less complex models for detection on agricultural data. When the mask performance of each model is evaluated, it is seen that the mAP0.5, mAP0.5–0.95 and precision values of the YOLOv8l-Seg model are higher than those of the other models. For this reason, the study was continued with the YOLOv8l-Seg model.

The box and mask performance of the YOLOv8l-Seg model was evaluated on a class basis. When Tables 5 and 6 are examined, it is seen that the “Tuta absoluta” mask mAP0.5 and precision values are better than the box values. Whilst the model detected the “Tuta absoluta” class with a value of 0.862 in the mAP0.5 metric on a bounding box basis, it performed better on a mask basis, with a value of 0.883. The model showed the same performance, 0.986 in the mAP0.5 metric, in detecting the “Healthy” class on both the bounding box and mask basis. The results show that the regional automatic labelling method proposed in the study, together with the instance segmentation method, improves the “Tuta absoluta” detection performance of the proposed model. When evaluated in terms of class label sizes, masks performed better than bounding boxes for the class with smaller labels. Additionally, these findings show that mask labelling provides more detailed and sensitive detection.

As a result of the training, the confusion matrix shown in Fig. 7 was created to better understand the “Healthy” and “Tuta absoluta” detection performance of the YOLOv8l-Seg model. The YOLOv8l-Seg model trained to detect “Healthy” and “Tuta absoluta” has the best recognition performance for the “Healthy” class, followed by “Tuta absoluta”. The “Tuta absoluta” class was the most confused with the background, with a value of 0.18. The “Healthy” class was the least confused with the background, with a value of 0.04. The reason for this difference is that the “Tuta absoluta” class has smaller labels.

In addition, to evaluate the reliability of the model training process, the box and mask loss graphs for the training and validation phases of the model are given in Fig. 8. The bounding box (box_loss), mask (seg_loss), classification (cls_loss) and distribution focal (dfl_loss) loss curves follow similar trends in training and validation. When the results are examined in more detail, it is seen that the bounding box (box_loss) loss values decreased to 0.4 in 100 epochs during both the training and validation processes. Likewise, the mask (seg_loss) loss values are between 0.7 and 0.6 in both the training and validation processes. Similarly, the classification (cls_loss) and distribution focal (dfl_loss) loss values are comparable in both processes. These results show that the loss values in the training and validation processes are close to each other, which indicates that the model has a good generalisation ability. Good generalisation ability suggests that the model can be successful in dealing with different agricultural pests that may be encountered in real-world conditions and in adapting to various agricultural environments.

Likewise, the box and mask performance graphs for the mAP0.5, mAP0.5–0.95, recall and precision metrics during the model training and validation process are given in Fig. 9. Over the 100 epochs, the model showed its best performance with masks in the mAP0.5 metric. In the mAP0.5–0.95 metric, the bounding box performed better. This shows that the bounding box method is more successful when the stricter IoU thresholds (0.5–0.95) are taken into account. In the precision metric, the mask method performed better than the bounding box method. In addition, the fact that the precision and recall metrics show similar results in both methods suggests that the model does not overfit and can adapt well to new data.

The YOLOv8l-Seg model is trained on 4 different input sizes. The performance result of the model varied in parallel with the input sizes. The model showed the best performance with an input size of 640. It showed the lowest performance at 80 input size. Figure 10 shows the performance metric chart of the model at different input sizes. These findings helped select an appropriate input size to optimise both detection speed and accuracy of the model.

Training time and GPU use were measured during training with Tesla A100 GPU at different input sizes of the model. Figure 11 shows the training time and GPU use graph. In the experiments, it was seen that increasing input sizes increased both training time and GPU memory use. The model showed the highest training time and the highest GPU use at the input size of 640.

Sample outputs from the test dataset of the YOLOv8l-Seg model are given in Fig. 12. Here are the original images along with the model outputs (Healthy, Healthy—Tuta absoluta and Tuta absoluta). When we examined the test data images that the model had never encountered during the training and validation processes, it was seen that the model detected even tomato leaves with low T. absoluta damage with high accuracy. These visual results show that the model can accurately detect new and unseen data samples, that the model has high generalisation ability, and that it has the potential to obtain reliable results in real-world conditions.

Comparison with different instance segmentation algorithms

The same dataset used in training the YOLOv8l-Seg model was also used to train other instance segmentation models with the same input size (640), number of epochs, batch size and Tesla A100 GPU. In the experiments carried out with different instance segmentation models (Table 7), care was taken to include both single- and two-stage models. For this reason, the two-stage model Mask R-CNN was also included in the experiment. Since the Mask R-CNN model includes a region proposal network (RPN) in its architecture and performs feature extraction and instance segmentation in separate stages, it had the longest training time compared to the single-stage models in the experiment. The lowest mask performance was also shown by the Mask R-CNN model. YOLACT, which has the lowest number of parameters at 36 M and a GFLOPs value of 118.5, is a single-stage segmentation model suitable for real-time systems. Its architecture uses a Proto module and a feature pyramid network, like the YOLOv8l-Seg model proposed in the study. The YOLACT model was included in the experiment due to these similarities. In the experimental results, the YOLACT model outperformed the Mask R-CNN and YOLOv5l-Seg models in all metrics and stood out with the shortest training time in the experiment. Finally, the YOLOv5l-Seg and YOLOv7-Seg single-stage models, which are earlier members of the YOLO family than the proposed YOLOv8-Seg model, were also included in the experiment. As a result of the training, the YOLOv8l-Seg model showed the best mask performance, followed by the YOLOv7-Seg model. Additionally, the YOLOv8l-Seg model had the highest GFLOPs value in the experiments, 220.1. GFLOPs counts the floating-point operations required for a forward pass, so a higher value indicates a greater computational load rather than a faster inference; the proposed model therefore achieves its accuracy at the highest computational cost amongst the compared models.

Comparison with similar algorithms available in the literature

The performance of the algorithm used in this study was compared with that reported in agricultural studies available in the literature. Compared with the YOLO-based models in the literature, the proposed model performs similarly on some metrics and better on others (Table 8). Studies in the literature mainly used existing, ready-made datasets, whereas in this study a new dataset was created. The results showed that the YOLOv8l-Seg model performed best among the compared models, with a mask mAP0.5 of 0.935. Taken together, these values show that the algorithm used in the study, the proposed dataset and the labelling method give successful results on the performance metrics. It was therefore concluded that the proposed method can be used reliably to detect T. absoluta pest damage.
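The mask-based mAP0.5 value can be read as follows: a predicted mask counts as a correct detection when its intersection over union (IoU) with a ground-truth mask is at least 0.5. The sketch below illustrates this check on toy binary masks in plain Python. The function names and the masks are hypothetical, and the code is not the evaluation routine used in the study.

```python
def mask_iou(mask_a, mask_b):
    """IoU of two binary masks given as equally sized 2D lists of 0/1."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    # Two empty masks have no overlap to measure; return 0.0 by convention here.
    return intersection / union if union else 0.0


def is_true_positive(pred_mask, gt_mask, iou_threshold=0.5):
    """A prediction matches the ground truth if IoU reaches the threshold."""
    return mask_iou(pred_mask, gt_mask) >= iou_threshold


# Toy example: a 2x2 ground-truth region and a slightly wider prediction.
gt = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
print(round(mask_iou(pred, gt), 3), is_true_positive(pred, gt))
```

In this toy case the masks share 4 pixels out of a union of 6, so the prediction is accepted at the 0.5 threshold but would be rejected at a stricter threshold of 0.75.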

Conclusion

In this study, the YOLOv8l-Seg model was proposed to detect the leaf damage caused by the T. absoluta pest, which greatly affects the development and yield of tomato plants. To reduce the influence of the complex background in agricultural images, the model uses the instance segmentation method with regional mask labels instead of the bounding box methods used in the literature, which enabled pest damage to be detected more successfully. Additionally, because the mask labelling required by the instance segmentation method is time-consuming, an automatic mask labelling method was implemented with the support of the SAM algorithm. The key findings of the study are summarised below:
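The study does not state the file format of the mask labels produced from the SAM output. As one possible illustration, the sketch below writes a mask outline in the polygon text format commonly used for YOLO segmentation training, where each line holds a class index followed by polygon coordinates normalised by the image size. The function name, the class index 0 and the outline points are hypothetical.

```python
def polygon_to_yolo_seg_line(class_id, polygon_px, image_width, image_height):
    """Format one instance as a YOLO segmentation label line.

    polygon_px: list of (x, y) pixel coordinates outlining the mask.
    Coordinates are normalised to [0, 1] by the image width and height.
    """
    if len(polygon_px) < 3:
        raise ValueError("a polygon needs at least three points")
    values = []
    for x, y in polygon_px:
        values.append(f"{x / image_width:.6f}")
        values.append(f"{y / image_height:.6f}")
    return f"{class_id} " + " ".join(values)


# Hypothetical outline of one damaged leaf region in a 640 x 640 image.
outline = [(160, 160), (320, 160), (320, 480)]
print(polygon_to_yolo_seg_line(0, outline, 640, 640))
```

For the outline above, the resulting line is `0 0.250000 0.250000 0.500000 0.250000 0.500000 0.750000`. In practice the outline would be traced from the binary mask that SAM returns for each bounding box.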

- An original dataset was created from images acquired in a farmer's greenhouse. The images were labelled with bounding boxes, and mask labels were then generated automatically with the SAM model.

- YOLOv8-Seg models of different sizes were trained on the dataset with the determined parameters. The highest performance was achieved with the YOLOv8l-Seg model, and mask performance was higher than box performance. This result showed that the instance segmentation method, which performs regional detection, is more successful than the object detection method in images with complex backgrounds. In addition, the YOLOv8l-Seg model was trained with different input sizes and showed the highest performance at an input size of 640. The measured speed values per image were 2.5 ms for pre-processing, 8.3 ms for inference and 8.7 ms for post-processing. These values show that the model can be used effectively in real-time applications.

- Training was also carried out with the Mask R-CNN, YOLACT, YOLOv5l-Seg and YOLOv7-Seg instance segmentation models, and their performance was compared with that of the YOLOv8l-Seg model. In these comparisons, the YOLOv8l-Seg model showed the highest performance.
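To relate the reported per-image timings to real-time use, the stages can be summed and converted to a throughput figure. The sketch below assumes that the three stages run one after another for each image and that all three reported values are in milliseconds; the function names are illustrative.

```python
def per_image_latency_ms(preprocess_ms, inference_ms, postprocess_ms):
    """Total time for one image when the stages run sequentially."""
    return preprocess_ms + inference_ms + postprocess_ms


def images_per_second(latency_ms):
    """Throughput implied by a per-image latency given in milliseconds."""
    return 1000.0 / latency_ms


# Timings reported for the YOLOv8l-Seg model (assumed to be in ms per image).
total_ms = per_image_latency_ms(2.5, 8.3, 8.7)
fps = images_per_second(total_ms)
print(f"{total_ms:.1f} ms per image, about {fps:.1f} images per second")
```

With the reported values this gives 19.5 ms per image, or roughly 51 images per second, which is above the frame rate of a typical 30 frames-per-second video stream.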

The experimental results show that the dataset, labelling method, model parameters and YOLOv8l-Seg model have great application potential for detecting damage caused by the T. absoluta pest on the leaves of tomato plants. In terms of plant protection applications, the proposed method is expected to contribute to the development of systems that help plant protection experts and farmers detect leaf damage caused by pests at an early stage. Such systems could allow effective control measures to be taken quickly, making pest control more effective and minimising the use of pesticides. In the future, as algorithms are developed further with larger datasets, better detection systems can be created and integrated with agricultural robots or smart spraying machines. Our next goals include agricultural early warning systems that use the DeepSORT algorithm to detect visible pests such as T. absoluta in many plant species before they damage the plant, and real-time pest counting to determine the economic threshold value for plant protection practices.

Data availability

The dataset used in this study will be shared upon request.

Abbreviations

- AP: Average precision
- API: Application Programming Interface
- DFL: Distribution focal loss
- ELAN: Efficient layer aggregation network
- FCN: Fully convolutional networks
- FN: False negative
- FP: False positive
- GPU: Graphics processing unit
- IoU: Intersection over union
- lr: Learning rate
- mAP: Mean average precision
- R-CNN: Region-based convolutional neural network
- RPN: Region proposal network
- SAM: Segment anything model
- SGD: Stochastic gradient descent
- SiLU: Sigmoid Linear Unit
- SPPF: Spatial pyramid pooling fast
- TP: True positive
- YOLO: You only look once
- YOLACT: You only look at coefficients

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Ethics declarations

Conflict of interest

The authors declare that they have no known conflicts of interest or personal relationships that could have appeared to influence the work reported in this paper.

Compliance with ethics requirements

No ethical compliance is required within the scope of this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Uygun, T., Ozguven, M.M. Determination of tomato leafminer: Tuta absoluta (Meyrick) (Lepidoptera: Gelechiidae) damage on tomato using deep learning instance segmentation method. Eur Food Res Technol 250, 1837–1852 (2024). https://doi.org/10.1007/s00217-024-04516-w

DOI: https://doi.org/10.1007/s00217-024-04516-w