Abstract

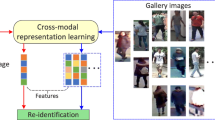

Visible-infrared person re-identification (VI-ReID) searches for images of a person across cameras operating in different modalities, which addresses the limitation of visible-only ReID in dark environments. Intra-class discrepancy and feature-expression discrepancy caused by modality changes are two major problems in VI-ReID. To address them, a VI-ReID model based on feature consistency and modal indistinguishability is proposed. Specifically, image features of the two modalities are extracted by a one-stream network. To reduce intra-class discrepancy, a class-level central consistency loss is developed, which pulls the two modality-specific feature centroids of each identity toward that identity's class centroid. To reduce feature-expression discrepancy, a modal adversarial learning strategy is designed in which a discriminator judges whether features are consistent with their modality attributes. The generator is trained to produce features whose modality the discriminator cannot distinguish, thereby reducing the modality discrepancy and improving modal indistinguishability. The generator network is optimized by the cross-entropy loss, the triplet loss, the proposed central consistency loss, and the modal adversarial loss; the discriminator network is optimized by the modal adversarial loss. Experiments on two datasets, SYSU-MM01 and RegDB, demonstrate that the proposed method improves VI-ReID performance. The code has been released at https://github.com/Nicholasxin/FCMI_VI_reid.
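The abstract does not state the exact form of the class-level central consistency loss, so the following is only a minimal sketch of one plausible reading: for each identity, the visible and infrared feature centroids are pulled toward the centroid of all features of that identity, using a squared Euclidean distance. The function names (`centroid`, `squared_distance`, `central_consistency_loss`), the distance measure, and the averaging over identities are assumptions made for illustration and are not taken from the paper or the released code.

```python
from collections import defaultdict


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def central_consistency_loss(features, identities, modalities):
    """Assumed form of the loss (hypothetical, not the paper's formula):
    for every identity, sum the squared distances from each modality
    centroid to the class centroid, then average over identities."""
    # Group feature vectors by identity, then by modality.
    groups = defaultdict(lambda: defaultdict(list))
    for feature, pid, modality in zip(features, identities, modalities):
        groups[pid][modality].append(feature)

    total = 0.0
    for by_modality in groups.values():
        # Class centroid: mean of all features of this identity.
        all_features = [f for feats in by_modality.values() for f in feats]
        class_center = centroid(all_features)
        # Pull each modality centroid toward the class centroid.
        for feats in by_modality.values():
            total += squared_distance(centroid(feats), class_center)
    return total / len(groups)


# Toy batch: identity 1 differs across modalities, identity 2 does not.
features = [[0.0, 0.0], [2.0, 0.0], [1.0, 1.0], [1.0, 1.0]]
identities = [1, 1, 2, 2]
modalities = ["visible", "infrared", "visible", "infrared"]
loss = central_consistency_loss(features, identities, modalities)
```

In this toy batch only identity 1 contributes to the loss, because its visible and infrared centroids differ; identity 2 already has coinciding centroids. In a real training pipeline the same computation would be written with differentiable tensor operations and added to the generator's other loss terms.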

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 62172029 and No. 61872030).

About this article

Cite this article

Sun, J., Li, Y., Chen, H. et al. Visible-infrared person re-identification model based on feature consistency and modal indistinguishability. Machine Vision and Applications 34, 14 (2023). https://doi.org/10.1007/s00138-022-01368-w

DOI: https://doi.org/10.1007/s00138-022-01368-w