Abstract

Large-scale surveys are common in social and behavioral science research. Missing data often occur at item levels due to nonresponses or planned missing data designs. In practice, the item scores are typically aggregated into scale scores (i.e., sum or mean scores) for further analyses. Although several strategies to handle item-level missing data have been proposed, most of them are not easy to implement, especially for applied researchers. Using Monte Carlo simulations, we examined a practical hybrid approach to deal with item-level missing data in Likert scale items with a varying number of categories (i.e., four, five, and seven) and missing data mechanisms. Specifically, the examined approach first uses proration to calculate the scale scores for a participant if a certain proportion of item scores is available (a cutoff criterion of proration) and then use full information maximum likelihood to deal with missing data at the scale level when scale scores cannot be computed due to the selected proration cutoff criterion. Our simulation results showed that the hybrid approach was generally acceptable when the missing data were randomly spread over the items, even when they had different thresholds/means and loadings, with caution to be taken when the missingness is determined by one of the scale items. Based on the results, we recommend using the cutoff of 30% or 40% for proration when the sample size is small and the cutoff of 40% or 50% when the sample size is moderate or large.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Questionnaires with multi-item scales are commonly used in social and behavioral science to measure constructs that cannot be directly observed. In practice, missing data can occur on the items due to nonresponse or planned missing data designs (Graham et al., 2006), raising questions on how to appropriately handle the item-level missing data. Optimal ways of handling missing data may vary depending on the target analyses. For example, if the target analysis is to examine the psychometric properties of a scale using a factor analysis model that incorporates individual item scores within the analysis model, full information maximum likelihood (FIML) would be a convenient and appropriate approach. However, in many studies, analyses (e.g., regression analyses) are not performed directly on item scores but on scale scores. Scale scores are typically calculated as a linear combination (i.e., average or sum) of the corresponding item scores and represent the corresponding individuals’ standing on the latent construct’s distribution. Dealing with item-level missing data in this scenario is more complicated because there are multiple strategies for handling the problem, and they all have their pros and cons.

As further explained below, some strategies discard the item-level information from an individual for a whole scale if he/she had incomplete item scores. In contrast, others attempt to utilize all or part of the information available at the item level. Although methods that preserve all available information in the data may sound optimal, they could be challenging to implement and are not always practical for applied researchers (see detail below). This article proposes and examines a practical approach to deal with item-level missing data when the items are to be aggregated to scale scores for further analysis. We term this approach a hybrid approach. In the following, we describe five existing strategies to handle item-level missing data, along with the proposed hybrid approach.

Strategies to deal with item-level missing data

The first strategy is to treat scale scores as missing for an individual whenever there are missing item scores. Valid missing data treatment techniques such as FIML and multiple imputation (MI) can be used to deal with the missing data at the scale-score level when data are missing at random (MAR). This strategy will result in missing scale scores for all incomplete cases regardless of the number of items with missing data. Gottschall et al. (2012) examined this strategy with FIML and referred to it as FIML at the scale level (FIMLscale). This strategy is easy to implement. However, there are two problems. First, it discards any available item scores from incomplete cases, resulting in a substantial loss of item-level information and the power for the analysis conducted at the scale level, particularly when the incomplete cases have only a small proportion of missing item scores. Second, suppose missingness on an item score is dependent on the score of another item from the same individual. In that case, this strategy could also introduce bias in parameter estimates because the missing data predictor is not accounted for in the subsequent analyses (Gottschall et al., 2012).

Mazza et al. (2015) developed a method to mitigate the problems of FIMLscale mentioned above. This method follows the same process as the first strategy except that all but one item for each scale will be included as auxiliary variables when analyzing the scale scores using the saturated model approach with FIML (Graham, 2003). Mazza et al. (2015) found that this approach produced unbiased parameter estimates while preventing the information loss problem of the FIMLscale strategy. However, including item-level variables as auxiliary variables can drastically increase the number of variables in the analysis, which may create convergence problems, particularly when the proportion of incomplete cases is high and the sample size is relatively small. For instance, Mazza et al. (2015) reported severe convergence problems in 30% of the replications in one of their simulation conditions (i.e., 25% missing and sample size = 200). Note that their study considered only two multiple-item scales. If more scales are involved in target analyses, more auxiliary variables need to be incorporated in their strategy, and the convergence problem could be more severe. Because of the convergence problem, Mazza et al. (2015) suggested ways to reduce the number of auxiliary variables by aggregating items or including only incomplete variables. However, these suggestions only serve as general principles. Researchers will still need to tailor their analytical plan based on their data characteristics (e.g., missing data patterns and sample size). This creates another layer of complexity and uncertainty when implementing Mazza et al. (2015)’s strategy.

The third strategy is to use multiple imputation (MI) at the item level (MIitem, see Gottschall et al., 2012; van Buuren, 2010; van Ginkel, 2010). MIitem will result in complete item scores and scale scores for each imputed dataset. Target analysis is performed on each imputed dataset, and the estimated parameters are aggregated following the Rubin’s rule (Rubin, 1987). Note that there are different algorithms for implementing the MIitem: joint distribution approach and fully conditional specification or univariate distribution approach (see van Buuren, 2010; Wu et al., 2015). The latter is deemed more flexible if the Likert scale items are imputed using a categorical model (Wu et al., 2015). Gottschall et al. (2012) found that MIitem outperformed FIMLscale by utilizing all available information at the item level. However, the method shares similar problems as the second strategy (i.e., FIMLscale with auxiliary variables, see Mazza et al., 2015). With multiple scales in target analyses, a large number of variables should be incorporated in the imputation model, which may cause severe convergence problems. Such problems can be substantial when the sample size is relatively small. In extreme cases, the number of variables in the model may exceed the sample size, making data imputations impossible. Gottschall et al. (2012) mentioned a potential solution to the problem by imputing data sequentially (e.g., one scale at a time). This idea of sequential imputation is basically the same as the nested multiple imputation approach proposed by Rubin (2003). However, the procedure has not been automated by any software package to date. Thus it is still challenging for applied researchers to use. It also involves extra steps and decision rules (e.g., the imputation order), and the method has not yet been systematically and empirically evaluated.

Note that there are variants of the imputation strategy for survey items. For example, Bernaards and Sijtsma (2000) proposed a two-way imputation method to impute unidimensional scale data. This method will impute missing item scores using person and item effects based on a two-way ANOVA model. van Ginkel et al. (2007) extended this approach to multidimensional data, requiring researchers to apply a principal component analysis with varimax rotation on the scale and weight the items based on their loadings obtained from the analysis (see also van Ginkel, 2010). Although this method has merits, a later study by van Buuren (2010) suggests that it was inferior to and less flexible than the MIitem based on fully conditional specifications. In addition, this method has not been automated in commonly used software packages, making it challenging for applied researchers to use.

The fourth strategy is the two-stage ML (TSML) developed by Savalei and Rhemtulla (2017). This method will first use FIML to estimate the mean and covariance matrix of item variables. The summary data will then be used to calculate the mean and covariance matrix of the corresponding scale scores, based on which analysis on the scale scores (e.g., regression) will be performed. This approach is comparable to MIitem and superior to FIMLscale because it can preserve all available item-level information. One downside of TSML is that it may fail to converge under small sample sizes (Chen et al., 2020). In addition, it relies on sophisticated matrix algebra and has not been automated by any software package to date, making it challenging for applied researchers to implement at this point. Note that researchers are develo** user-friendly interfaces for TSML. For example, a Shiny app has been developed to implement TSML for univariate regression (Chen et al., 2020). Thus this approach may become more accessible to applied researchers for multiple regression or more complex analyses in the foreseeable future.

The last and probably the simplest strategy is proration, which uses the mean of the available item scores for a participant to impute the scale scores for the participant when a certain proportion of item scores are available (a cutoff criterion for proration). Proration is equivalent to person-mean imputation (i.e., single mean imputation within a person) with a cutoff criterion. Depending on the applied cutoff criterion, this strategy may result in scale-level missing data for some but not all of the incomplete cases. Proration has been a common approach to deal with item-level missing data in practice. A literature review on the wide use of proration in practice can be found in Mazza et al. (2015). Their review reveals that a cutoff criterion of 50% or 80% is frequently used in practice. In other words, scale scores for an individual will be calculated using proration if at least 50% or 80% item scores are available for the individual. Otherwise, the scale score will be deemed missing. For the missing scale scores, missing data techniques such as listwise deletion, FIML, or MI could be potentially used. We have chosen to consider FIML for its ease in implementation, given that FIML is generally comparable to MI (Graham et al., 2007) and is superior to listwise deletion (Enders, 2010). We view the approach that combines proration at the item level and FIML at the scale level (proration + FIMLscale) as a hybrid approach. This hybrid approach is the focus of the current study.

When proration and FIMLscale are implemented separately, they both have their own limitations. As mentioned above, FIMLscale allows information to be borrowed from other scales to recover missing information. Nevertheless, it fails to account for item-level information for incomplete cases. Proration is the opposite. It imputes missing item scores from a participant by using available item scores within the same scale from the same participant. Because the scale items for the same participants are usually highly correlated, proration has its utility in leveraging within-scale information (Siddiqui, 2015). However, it cannot borrow information outside of the scale or from other participants. In addition, proration treats all items the same without accounting for the potential differences in means and correlations among the items, based on which researchers have speculated that proration could only work when items are highly homogeneous (Graham, 2009; Schafer & Graham, 2002). Despite its wide use in practice (see Mazza et al., 2015), systematic evaluations on proration are limited, and findings on the proration performance are mixed. For example, in the context of analysis of variance, Siddiqui (2015) found that proration was a robust method to deal with item-level missing data regardless of whether items were missing at random or not at random. However, Lee et al. (2014) showed that proration could produce biased parameter estimates, especially when items with higher means had more missing data.

Mazza et al. (2015) conducted a systematic simulation study to examine proration. They demonstrated that proration could produce biased results even under the missing completely at random (MCAR) mechanism when items with missing data had differential means or correlations than those with complete data. However, their study has the following limitations. First, they imposed missing data only on the items with higher means (.5 vs. 0) or loadings (.75 vs. .5) than the rest of the items. Thus, the MCAR mechanism they simulated produced random missingness across individuals but not items. Second, they applied person-mean imputations on all incomplete cases. Therefore, there were complete data at the scale level, which is often not the case in practice. Third, they did not explore cutoff criteria for proration. Fourth, they only considered ordinal items with seven categories, which would restrict the application in practice.

We argue that the hybrid approach combines the strengths of both proration and FIMLscale while complementing their identified limitations. Thus, we expect it to perform better than proration or FIMLscale alone. Specifically, using proration may carry forward some of the item-level information to FIMLscale. Additionally, using FIMLscale could complement proration by borrowing information from other scales. One important consideration of the hybrid approach is to what extent one method will have more influence on the result. This will depend on the adopted cutoff criteria for proration. For example, a low cutoff will decrease the amount of missing data at the scale level, shifting the hybrid approach towards proration. In contrast, a high cutoff criterion would increase the amount of missing data at the scale level, thus shifting the approach towards FIMLscale. Accordingly, evaluating the effect of cutoff criteria for proration in the context of the hybrid approach is critical for its application in practice.

The current study aims to evaluate the performance of the hybrid approach using Monte Carlo simulations. The primary motivation for studying the hybrid approach is that it may provide a practical alternative to other existing item-level missing data strategies for applied researchers. The hybrid approach is much easier to implement and requires little effort from researchers relative to the other proposed methods. The hybrid approach could also substantially reduce the number of variables that need to be integrated into the missing data handling processes. Given that the cutoff for proration is critical in applying the hybrid approach, one main focus is to explore the appropriate cutoffs so that guidance on what cutoff to use in practice can be developed. The existing literature has suggested 50% or 80% cutoff criteria for proration (Mazza et al., 2015), yet neither has been supported by rigorous research.

To achieve the above goal, we conducted three simulation studies. These studies were differentiated by three possible missing data mechanisms for the missingness in item scores within a scale: the missingness is determined by an observed external variable, by the latent factor underlying the items, or by one of the scale items. As further explained below, these mechanisms can be characterized as missing at random (MAR), missing not at random (MNAR), or a mix of both. Given that survey items are often ordinal in nature (e.g., Likert scale), we consider ordinal items in the simulations. In addition, we assume that the scales are unidimensional given that it is a general requirement for valid scale scores (Peters, 2014).

In the remainder of the article, the designs and main findings of the simulation studies are first presented. Because the designs of the simulation studies are mostly identical, the design of Study 1 is described in detail, and the differences of the other two studies from the first study are mentioned briefly. The results from the three studies are then synthesized and discussed. We conclude the study by offering some general recommendations for applied researchers in implementing the proposed hybrid approach.

Simulation Study 1

Data generation

To generate data, we assumed that there were two unidimensional scales (A and B scales) with ten Likert scale (i.e., ordinal) items for each (A1–A10 and B1–B10). There were also two observed continuous variables named X1 and X2. The target analysis examines to what extent A scale scores can be predicted by B scale scores along with X1 and X2. The data were generated using the following procedure.

We first generated four standard normal variables: ηA, ηB, X1, and X2, where ηA and ηB are scores for the latent factors underlying the two scales. Specifically, we generated ηB, X1, and X2 first with the correlations among the three predictors set at .3. We then calculated ηA using the following equation:

where we fixed β2 at .3, β3 at .5, and varied β1 at two levels (0 or .3). We fixed the variance of the residual e at a value so that the variance of ηA will be equal to 1. Based on ηA and ηB, we then generated the data for the items in each scale (A1–A10 and B1–B10, respectively) using a single-factor model. The loadings for the two constructs were varied at two levels to represent different scale reliabilities (explained below). All variances of the unique factors were fixed at specific values so that all item scores will have a variance of 1.

After generating the continuous item scores, we categorized them into ordinal data using K − 1 thresholds with K = 4, 5, or 7. Given that the ordinal items will likely have different thresholds, we set the thresholds for half of the items to be symmetric and the other half to be asymmetric. Because item thresholds vary across applied studies and they are not routinely reported in practice, we borrowed the thresholds used by Rhemtulla et al. (2012) to generate the ordinal data with a different number of categories (see Table 1). Different thresholds resulted in different means of the items. Note that we only used the symmetric and moderately asymmetric thresholds in their study to simulate the items.

Missing data generation

We imposed missing data on some of the A and B items. In this study, missingness in the A items was determined by X1 and missingness in the B items was determined by X2. Because both missing data predictors are observed variables correlated with the A and B items, the missing data mechanism is MAR according to Rubin’s (1976) definition. Specifically, missing data on the A items were created in two steps. First, a certain proportion (e.g., 30%) of cases are selected to have missing data on some of the A items based on the X1 variable. In other words, the probability of having missing observations on any of the ordinal indicators in the A scale for a participant was determined by the individual’s score on X1. This relationship is expressed using the following logistic regression equation (Muthén & Muthén, 2002):

The intercepts (B0) were varied to manipulate the proportion of missing data (B0 was 2.60 for 10%, −1.00 for 30%, and 0 for 50% missing data). The regression weight (B1) in the logistic regression equation was also varied (explained further below) to manipulate the strength of the missing data mechanism, with a larger B1 indicating a stronger missing data mechanism. Second, for each participant selected to have missing data, we randomly selected one to ten items to have missing observations in order to reflect the reality that the number of missing items can vary across participants. On average, five out of ten items had missing observations for the cases with incomplete data. Missing data on the B scale items were generated using the same process except that the probability of have missing observations on any of the B scale items was determined by X2. This way of assigning missing data is different from Mazza et al. (2015) in two aspects: (1) the missing data assignment was random across items but not across individuals, and (2) the number of incomplete items ranged from one to ten in our study while it ranged from one to five in Mazza et al. (2015). Thus, our study simulated more item-level missing data, given the number of incomplete cases being equal.

Design factors

To examine the performance of the approach under different conditions, we varied the following factors.

-

1)

Sample size (N = 100, 200, or 500). These Ns fall in the range of the typical sample sizes in social and behavioral science research. For ease of presentation, we call N = 100, 200, and 500 a small, moderate, and large sample size, respectively.

-

2)

Proportion of cases with missing data (i.e., 10%, 30%, or 50%). These missing data proportions represent small, moderate, and large proportions examined in the previous literature (Wu et al., 2015). Note that 50% missing is higher than the missing data proportions considered in many of the previous studies; for example, the highest missing data proportions considered in both Mazza et al. (2015) and Chen et al. (2020) were lower than 30%). However, echoing van Buuren (2010), we want to use a high missing data rate to see whether the method can still hold as uncertainty grows.

-

3)

Strength of missing data mechanism. As mentioned above, the strength of the missing data mechanism is reflected by B1 in Equation 2. We varied B1 at two levels: B1 = 1 or 2, which was translated to an odds ratio of 2.7 or 7.39, representing a small to moderate or large effect size according to Chen, Cohen, and Chen (2010). The McKelvey and Zavoina’s pseudo R2 values (McKelvey & Zavoina, 1975) corresponding to the two levels, measuring explained variance in missingness, were 23% and 55%, respectively. For ease of presentation, we refer to the two levels as weak and strong mechanisms.

-

4)

Factor loadings. For both A and B scales, we varied the factor loadings of the data generation model at two levels: .7 for all items, which led to a Cronbach alpha of .90. In addition, to reflect the reality where indicators are likely to have differential contributions to the latent factor, we included a condition in which the loadings varied across the indicators (i.e., three indicators had loadings of .4, four indicators had loadings of .6, and three indicators had loadings of .8). The mixed loadings led to a Cronbach alpha of .81.

-

5)

Cutoff criterion for proration. We varied the cutoff criteria for proration from 10% to 90% in increments of 10% (i.e., 10%, 20%, …, 80%, 90%). With cutoff = 10%, the scale scores for an individual were calculated using the mean of the available items if there were at least one available item score; otherwise, the scale scores were left as missing. As the cutoff increases, scale scores are calculated for fewer cases, resulting in more missing data at the scale score level. With cutoff = 90%, a scale score was only calculated if 90% of item scores were available, almost equivalent to the FIMLscale strategy.

-

6)

Size of the regression coefficient for predicting ηA from the scale score of ηB (see Equation 1) at two levels: 0 or .3. The two effect sizes allow us to examine both type I error rates and power associated with the proposed approach. Note that for effect = .3 condition, the regression slope of predicting A scale scores (i.e., an average of A scale items, denoted by YA) from B scale scores (i.e., an average of B scale items, denoted by XB) was not exactly .3 and could not be determined analytically because of categorizing and averaging item scores. Thus, we used the averaged regression slope from a large number of simulated complete data for each combination to approximate the true value of the regression slope.

In sum, there are 3 (sample size) × 2 (effect size) × 3 (number of categories) × 2 (strength of missing data mechanism) × 3 (proportion of missing data) × 2 (factor loadings) × 9 (cutoff criterion for proration) = 1944 combinations. We created 1000 replications for each combination. The data were generated using SAS PROC IML. After generating the data, we applied the regression analysis of YA on XB, X1, and X2 to each replication (see Equation 3). The regression analyses were conducted using SAS PROC CALIS with the FIML estimator. Example SAS code for simulating the data and implementing the examined approach can be downloaded from http://hdl.handle.net/1805/24059.

Evaluation criteria

To evaluate the method, we focused on the regression slopes (i.e., b1–b3) in Equation 3. The following criteria were used to evaluate the method.

Relative bias in point estimate

We used % relative bias to quantify the bias in a nonzero parameter (θ).

where \( {\hat{\uptheta}}_i \) is the parameter estimate for the ith replication, q is the number of replications, and θ0 is the population parameter value. Following Muthén et al. (1987), we deemed % relative bias ≤10% acceptable. For parameters with zero population value, the bias is calculated by the same formula without the denominator.

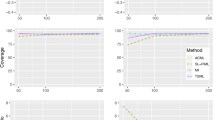

95% CI coverage

We also reported the 95% CI coverage for the regression slopes. The 95% CI coverage for each parameter was calculated as the proportion of the 95% CIs that covered the population value across replications. The upper and lower limits of the CI for the regression slope were computed based on the corresponding standard error estimate. Following Enders (2001), a CI coverage value below 90% was deemed problematic.

Type I error rate and power

We reported power to detect a nonzero effect/regression slope and Type I error rate to detect a significant effect when the true effect was zero. Type I error rate and power were quantified as the percentage of the 1000 replications that gave a significant result on the regression coefficient. Following Bradley’s (1978) “liberal criterion,” Type I error rates between 2.5% and 7.5% were deemed acceptable.

Root mean squared error (RMSE)

RMSE is the square root of the averaged squared discrepancy between the parameter estimate and the population parameter value.

RMSE measures overall inaccuracy (the extent to which sample estimates deviate from the population parameter value), which is a function of both bias and variance of estimation. A cutoff with a smaller RMSE leads to more accurate estimates thus is preferred. Because the raw RMSE values can be influenced by various factors such as sample size, missing data, and effect size, they are not directly interpretable. However, holding other factors equal, we can facilitate the comparisons of RMSEs from multiple cutoffs by calculating a ratio of RMSE from each cutoff relative to the lowest RMSE. For example, a cutoff with an RMSE ratio of two means that RMSE from the cutoff is twice the lowest RMSE. The raw RMSE values under missing data and complete data can be found in the appendix.

Appropriate cutoff criteria

Appropriate cutoffs for proration under a specific condition were identified using the following evaluation criteria: relative bias ≤ 10%, CI coverage ≥ 90%, and type I error rate is between 2.5% and 7.5%. Note that the RMSE ratio is not included in this set of criteria because there is no clear guideline for acceptable RMSE ratios. Nevertheless, if multiple appropriate cutoffs are available, the one with a lower RMSE ratio would lead to more accurate results.

Study 1 result

The models for all conditions in the study converged properly except for some replications when the cutoffs were extremely high (i.e., 90% or 80%) and the missing proportions were high (i.e., 50%). The non-convergence rates were generally less than 10%; however, when the sample size was small (N = 100), particularly with four-category data, the non-convergence rates could reach up to 21%. Given that the results were unlikely valid with a substantial non-convergence rate, we decided to treat the result as missing when the non-convergence rate was above 20%.

Given that the results showed similar patterns across the two conditions for factor loadings (i.e., same loadings and mixed loadings), we collapsed the results over the two conditions. In addition, the results were all acceptable when the missing data proportion was low (i.e., 10%) regardless of the cutoff used for proration. They were also similar between weak and strong missing data mechanism conditions or between 30% and 50% missing data, with the results slightly worse under the strong missing data mechanism, or 50% missing data. Furthermore, the results for b2 and b3 were either better than or led to the same conclusions as the results for b1. Thus, for simplicity, we only reported the results for b1 under the worst scenario: 50% missing data with a strong MAR mechanism. The results for the other conditions and for b2 and b3 can be requested from the corresponding author. In the following, we organized the results by the number of categories in the ordinal items. Because the results for the five- and seven-category data showed very similar patterns, we described the results together.

Results for four-category items

Relative bias

The biases tended to be all acceptable when the true effect was zero. However, for nonzero effects, extremely low (i.e., 10%) and high cutoff criteria (i.e., 90%) resulted in substantial biases in the regression slope (see Table 2). With an extremely low cutoff (e.g., 10%), scale scores could be calculated when observations were available on only one item, potentially decreasing the validity of the scale scores. On the other hand, when the cutoff was too high, minimal item-level information will be utilized, which also could increase the bias.

95% CI coverage

CI coverage rates were above 90% across all conditions, except for a few conditions with very low cutoffs for proration (i.e., 10% and 20%, see Table 2).

RMSE ratio

For RMSE, holding other factors equal, lower cutoffs tended to yield lower RMSEs. The relative performance of the different cutoffs was contingent on sample size. With a smaller sample size, there was less tolerance on information loss, thus increasing the impact of the cutoff criterion on the RMSE ratio.

Type I error rate and power

Type I error rates were only evaluated for b1 because we set b1 to be either zero or nonzero. The Type I error rates appeared to be higher with smaller sample sizes and higher cutoffs. The Type I error rates were all acceptable under the large sample size. With a small or moderate sample size (i.e., N = 100 or 200), the Type I error rates under higher cutoffs could be higher than 7.5% (see Table 2). In terms of power, power tended to be lower with extremely low (e.g., 10%) or high cutoffs (e.g., 80% and 90%) for proration. For the other cutoffs, power rates were generally comparable.

Appropriate cutoffs

Taking all the criteria (relative biases ≤ 10%, CI coverage rate ≥ 90%, type I error rate is between 2.5% and 7.5%) together, appropriate cutoffs were identified. As mentioned above, any cutoff was acceptable with a small amount of missing data (i.e., 10%). We broke down the acceptable cutoffs across the moderate and large amount of missing data conditions (i.e., 30% or 50%) by sample size. The range of acceptable cutoffs was wider with a larger sample size. With a small sample size, cutoffs between 20% and 50% would satisfy all the criteria (see Table 2). With a moderate or large sample size, cutoffs between 30% and 70% seemed appropriate. Within the range of appropriate cutoffs, lower cutoffs were preferable because they led to smaller RMSEs.

Results for five- or seven-category items

The results for items with five categories are summarized in Table 3 for strong MAR and 50% missing data. Note that the general pattern of the results applies to seven-category data. For the MAR mechanism, the results for items with five- and seven-category data seemed better than those with four-category data, with slightly higher CI coverages and lower Type I error rates in many conditions. However, the patterns were quite similar. Specifically, very low or high cutoffs could produce problematic results. High cutoffs tended to increase biases in parameter estimates and inflate type I errors, and low cutoffs could increase biases in parameter estimates and lower the CI coverage.

Appropriate cutoffs

Again, any cutoff was acceptable with the small amount of missing data. Comparing to the four-category data, a wider range of cutoffs turned out to be appropriate for five- or seven-category data when the amount of missing data was moderate or large. Cutoffs between 20% and 70% worked for the small sample size (i.e., N = 100), and between 20% and 90% were acceptable for moderate or large sample size (see Table 3).

Simulation Study 2

In the second simulation study, we examined a missing data mechanism where the probability of a participant having missing data on the ordinal indicators in A or B scale was determined by the corresponding latent factor underlying the indicators. Because the latent factor was not observed, this is an MNAR mechanism (Rubin, 1976). The same data generation process in Study 1 was adopted except that X1 in Equation 2 was replaced by ηA for scale A or ηB for scale B. As in Study 1, 1944 conditions were examined.

Study 2 result

The results from the MNAR mechanism are presented in Tables 4 and 5 for four- and five-category data, respectively. Again, the results for the seven-category data are not presented because they were very close to those from the five-category data. Similar to Study 1, the models for all conditions converged properly except for some replications when the cutoff was extremely high (i.e., 90% or 80%) and the missing proportion was 50%. The non-convergence rates ranged from 1% to 18%.

The MNAR results were comparable to the MAR results in general. For some conditions, the results were worse under MNAR (e.g., the Type I error rates were higher), particularly when the small sample size was combined with a high cutoff. However, the differences were small. As in Study 1, all cutoffs for proration were acceptable when the amount of missing data was 10%. With a moderate or large amount of missing data, cutoffs of 20% and 30% appeared acceptable with N = 100, and cutoffs between 20% and 80% were appropriate with N = 200 or 500, regardless of the number of categories (see Tables 4 and 5).

Simulation Study 3

In the third simulation study, we examined a missing data mechanism where the missingness in item scores in scale A or B was determined by one of the items in the scale. A similar mechanism has been examined in past research (e.g., Chen et al., 2020; Gottschall et al., 2012). Again, the same data generation process was adopted except that the missing data predictor in Equation 2 for the A or B scale was the 10th item in the scale (i.e., A10 or B10). Note that A10 or B10 had a loading of .7 or .8; thus it was one of the most informative items. Because items were randomly selected to have missing data, A10 and B10 could have missing observations by themselves. Although this is different from the past research in which the item that determines the missingness had complete data, we argue that the scenario examined in the study could be more realistic. Consequently, the simulated missing data mechanism could be deemed a mix of MAR and MNAR. Specifically, for the cases with missing observations on A10 or B10, the mechanism was MNAR, while for those with observed scores on A10 and B10 and missing observations on any of the other indicators, the mechanism would be MAR.

We included all conditions examined in the first two studies except that we only simulated strong missing data mechanism (i.e., B1 = 2 in Equation 2) given that the strong missing data mechanism would be a worse scenario. In sum, there are 3 (sample size) × 2 (effect size) × 3 (number of categories) × 3 (proportion of missing data) × 2 (factor loadings) × 9 (cutoff criterion for proration) = 972 combinations. The same set of criteria was used to evaluate the performance of the method.

Study 3 result

Similar to the first two studies, some of the replications failed to converge when the cutoff was extremely high (i.e., 90% or 80%). However, the convergence problem was much more severe in this study. The non-convergence rate could reach as high as 88% when the sample size was small and the amount of missing data was high for the two extreme cutoffs. In addition, the results were no longer all acceptable for the 10% missing data conditions. Thus, we presented the results under 10% and 50% missing data for this study (see Tables 6, 7 and 8). We did not report the 30% missing data results, given that they were close to and slightly better than the 50% missing data results.

The main trends observed from the previous two studies remained in Study 3. For example, extremely high and low cutoffs were problematic; higher cutoffs were associated with higher RMSEs and lower power; and there was a wider range of appropriate cutoffs, less inflated Type I error rates, and higher CI coverage rates with a larger sample size. Nevertheless, the results from this study were all notably worse than those from the previous two studies, indicating that the missing data mechanism considered in the study can be more challenging for the hybrid approach. Consequently, the range of acceptable cutoffs became narrower than those identified in the previous studies. Furthermore, as the number of categories increased, the results became worse instead of better, which was also different from the previous two studies.

Regarding acceptable cutoffs, when the amount of missing data was low (i.e., 10%), cutoffs of 30% and 40% were acceptable for the small sample size (i.e., N = 100) except for the seven-category data, cutoffs between 30% and 50% were acceptable for the moderate sample size (i.e., N = 200), and cutoffs between 40% and 70% were appropriate for the large sample size (i.e., N = 500), regardless of the number of categories (see Tables 6, 7 and 8). When the amount of missing data was moderate or high, we failed to find a cutoff that satisfied all criteria for the small sample size, regardless of the number of categories, although cutoffs of 30 and 40% appeared to be close in terms of our criteria. The cutoffs of 40 and 50% were appropriate for the moderate sample size, and the cutoffs between 40 and 60% were acceptable for the large sample size (see Tables 6, 7 and 8).

Conclusion and discussion

In the current article, we conducted simulation studies to examine a hybrid approach to deal with item-level missing data for Likert scales when the items are to be aggregated into scale scores for further analyses (e.g., regression analysis). This approach combines proration at the item level and FIML at the scale level. We varied the type and strength of missing data mechanisms, the number of item categories, sample sizes and the amount of missing data, as well as the size of target effects. We also examined the performance of a wide range of cutoffs for proration (i.e., the proportion of available items required for scale score calculation). The simulation results suggest that the hybrid approach could be a practical alternative to deal with item-level missing data when the missing data are randomly spread over the items, even when the items have different thresholds/means and loadings. When appropriate proration cutoffs are used, it could yield acceptable parameter estimates and valid statistical inferences for effects examined at the scale score level.

The hybrid approach, however, did not perform equally well across all missing data mechanisms examined in the article. Its performance was the best under the MAR mechanism (i.e., an observed variable outside of the scale determined the missingness in item scores). It is interesting to see that the performance of the hybrid approach under the MNAR mechanism (i.e., missing data predictor was the latent factor underlying each of the scales) was similar to that under the MAR mechanism. This is probably because the latent factors, although not directly observed, had substantial redundancy with the observed items and external variables (i.e., X1 and X2). Through the redundancy, the hybrid method could utilize the information from these observed variables to reduce the bias. The performance of the hybrid approach was worst when the missingness was determined by one of the scale items. One possible explanation is that the missing data predictors in this scenario (i.e., the 10th item in each scale) were one of the most informative items directly used to form the scale scores. Thus they could have a stronger impact on the result than the other two types of missing data predictors (i.e., external variables and latent factors). The fact that the missing data predictors could have missing observations by themselves likely exaggerated the problem. Furthermore, the FIMLscale component of the hybrid method might not contribute as much as in the previous two scenarios because FIMLscale can only utilize information outside of the scales, and the external variables had weaker correlations with the individual items than with the latent factors in the simulation.

As mentioned previously, the main goal of the current study was to explore appropriate cutoff criteria for proration. As we expected, it is problematic to have too high or too low cutoffs. The range of appropriate cutoff criteria seemed to vary by sample size and the amount of missing data, because these factors influence the amount of available information in the dataset. It appears that less information (as a result of a smaller sample size or higher amount of missing data) calls for a lower cutoff criterion to compensate for the loss of information. The range of appropriate cutoff criteria also differed across missing data mechanisms, with the range narrowest for the last mechanism (i.e., missingness in items is determined by one of the scale items). For the last mechanism, the appropriate cutoff could also vary substantially across the number of categories.

General recommendations

Because it is usually challenging to differentiate the different missing data mechanisms in practice (Enders, 2010), we provide the following general recommendations based on the cutoffs that performed well across all or most of the missing data mechanisms. Nevertheless, if the missing data mechanism (e.g., MAR) in a study is known, then the appropriate cutoffs identified in the result section for the specific mechanism may be used. If the sample size is small and the amount of missing data is low (i.e., ≤10% of the cases have missing data), we would recommend the cutoff of 30% or 40%. These cutoffs worked well across all conditions except that they may inflate Type I error rates for seven-category data when the missingness is determined by one of the scale items. If the sample size is small and the amount of missing data is moderate or large, we would still recommend the cutoff of 30 or 40%, with a warning that the cutoffs could result in slightly biased parameter estimates and/or inflated type I error rates regardless of the number of categories when the missingness is determined by one of the items. If the sample size is moderate or large and the amount of missing data is small, we recommend the cutoffs between 40% and 60%. If both the sample size and amount of missing data are at least moderate, the cutoffs of 40% and 50% are recommended. Overall, the cutoff of 40% appears to be a good choice across all the combinations.

Given our recommended cutoffs above, the cutoff of 50% adopted in the existing literature seems appropriate when the sample size is at least moderate. The cutoff of 80% appears too high, although it may be acceptable under limited conditions such as when the sample size is at least moderate, and the missingness is unlikely determined by one of the items. Researchers should be cautious about using such a high cutoff because (1) it could lead to a high RMSE, and (2) it is associated with a higher risk of non-convergence.

Our study shows that the appropriate cutoffs for proration can be affected by various factors, and there could be multiple appropriate cutoffs based on the study conditions. Also, because of the ad-hoc nature of the hybrid approach, our recommendations are limited to the conditions examined in the study and may not be generalizable to other conditions (explained further below). Given these considerations, we encourage researchers to preregister their adopted cutoff rules and include sensitivity analysis for alternative cutoffs. If the conclusions from different cutoffs are consistent, then stronger evidence can be obtained supporting the conclusion. Furthermore, we used a liberal threshold for acceptable type I error rates (i.e., less than 7.5%). Researchers are advised to acknowledge this fact in their report, especially in areas where this threshold may be deemed too high. In addition, sample size plays an important role in the hybrid approach. With a larger sample size, there is more flexibility in selecting cutoffs.

Limitations and future research

Some limitations of the current study are worth mentioning. First, although we considered varying factor loadings and thresholds across items as well as the number of missing items across individuals, the items were randomly assigned to have missing data for each participant. As a result, missing data had an equal probability of occurring on more important items (with higher loadings) and less important items. If the missing data concentrate on more informative items or less informative items, the results may change. We would expect the bias to increase for the former, and a higher cutoff criterion will likely be preferred because there is more harm than gain in utilizing the available item scores if they are less predictive of the latent construct. In severe cases, the bias may increase to the degree that no cutoff would work, and other missing data methods such as MIitem or TSML may be better used to deal with the missing data. In contrast, lower cutoffs may work better for the latter because missing data on less informative items are expected to have less impact, and lower cutoffs can increase the power and decrease RMSEs. Similarly, the missing data simulated in our study had an equal probability of occurring on items with higher or lower means. If the missing data concentrate on items with very different means from those of the other items, then bias would increase, and in severe cases, our proposed approach may also fail.

Second, we only examined items with four, five, or seven response categories. Thus, our results may not be generalizable to items with fewer than four or more than seven categories (e.g., ten). However, given the pattern of the current results, we predict that the outcomes will likely be worse for the former and will improve for the latter for the first two missing data mechanisms examined in the study (i.e., MAR and MNAR). If the missingness is determined by one of the items within the scale, the expected direction is likely the opposite.

Third, the goal of the study was to evaluate whether the hybrid approach would be acceptable in dealing with item-level missing data by referring to some absolute criteria such as relative bias, CI coverage, and type I error rate. In the future, it would be interesting to examine whether the performance of our proposed approach would be close to the other existing item-level missing data handling strategies for the same conditions examined in the current study. We did not include these strategies in our simulation because they would not influence our conclusion about the hybrid approach. Note that some of the missing data approaches (e.g., MIitem and TSML) are developed for MAR data and have been mainly studied under the MAR assumption (e.g., Chen et al., 2020; Gottschall et al., 2012). Thus, it is unclear how they would perform under the last two missing data mechanisms involving MNAR data.

Fourth, although we examined three missing data mechanisms and varied the strength of the mechanisms, we used a specific parametric approach (i.e., a logistic regression) to simulate missing data. In reality, the models to determine missingness may be different or more complex than what we have examined in the study. For example, the probability of missingness may be high at two tails of the distribution of a missing data predictor and low in the middle. This kind of relationship is nonlinear and cannot be characterized by a monotonic function such as the logistic function (Chen et al., 2020). Thus, we cannot confidently say that the hybrid approach would show similar performance beyond the missing data mechanisms simulated in the study.

Finally, we only considered cross-sectional data in the current study. Due to attrition in longitudinal data collection, there are likely more missing data, at both the item and wave levels, in longitudinal studies. However, attrition typically causes missing data on all item scores for a participant at a specific wave. For this kind of missing data pattern, treating the scale scores as missing would be an unavoidable choice. For the other missing data patterns, the hybrid approach could still be applicable if the missing data occur randomly across items.

In sum, our study provides evidence that with appropriate cutoff criteria for proration, combining proration and FIML at the scale level could be an acceptable strategy to deal with item-level missing data in Likert scales when missing data occur randomly across items, with caution to be taken when missingness is determined by one of the items with a small sample size. Our intention is not to suggest that the hybrid approach can replace more theoretically sound strategies such as multiple imputation at the item level and two-stages ML, but to demonstrate that it could be a practical alternative to those methods when they are not convenient to use or fail to converge. In other words, these methods should be still preferred when they can be appropriately used. In practice, researchers may also combine the hybrid approach with other strategies in their studies to deal with missing data when it is appropriate. For example, in studies that involve many multiple item scales, one could apply the hybrid approach to some of the scales which have a relatively small amount of missing data and are more in line with the conditions examined in the study so that the number of variables can be significantly reduced for the other strategies.

References

Bernaards, C. A., & Sijtsma, K. (2000). Influence of imputation and EM methods on factor analysis when item nonresponse in questionnaire data is nonignorable. Multivariate Behavioral Research, 35, 321–364.

Bradley, J. V. (1978). Robustness? British Journal of Mathematical and Statistical Psychology, 31(2), 144-152. https://doi.org/10.1111/j.2044-8317.1978.tb00581.x

Chen, H., Cohen, P., & Chen, S. (2010). How Big is a Big Odds Ratio? Interpreting the Magnitudes of Odds Ratios in Epidemiological Studies. Communications in Statistics - Simulation and Computation, 39(4), 860-864. https://doi.org/10.1080/03610911003650383

Chen, L., Savalei, V., & Rhemtulla, M. (2020). Two-stage maximum likelihood approach for itemlevel missing data in regression. Behavior Research Methods, 52(6), 2306-2323.

Enders, C. K. (2001). The impact of nonnormality on full information maximum-likelihood estimation for structural equation models with missing data. Psychological Methods, 6(4), 352–370.

Enders, C. K. (2010). Applied missing data analysis. The Guilford Press.

Gottschall, A. C., West, S. G., & Enders, C. K. (2012). A Comparison of Item-Level and Scale-Level Multiple Imputation for Questionnaire Batteries. Multivariate Behavioral Research, 47(1), 1-25.

Graham, J. W. (2003). Adding missing-data-relevant variables to FIML-based structural equation models. Structural Equation Modeling, 10(1), 80-100.

Graham, J. W. (2009). Missing data analysis: Making it work in the real world. Annual Review of Psychology, 60, 549–576.

Graham, J. W., Taylor, B. J., Olchowski, A. E., & Cumsille, P. E. (2006). Planned missing data designs in psychological research. Psychological Methods, 11(4), 323-343.

Graham, J. W., Olchowski, A. E., & Gilreath, T. D. (2007). How many imputations are really needed? Some practical clarifications of multiple imputation theory. Prevention Science, 8(3), 206–213.

Lee, M. R., Bartholow, B. D., McCarthy, D. M., Pederson, S. L., & Sher, K. J. (2014). Two alternative approaches to conventional person-mean imputation scoring of the Self-Rating of the Effects of Alcohol Scale (SRE). Psychology of Addictive Behaviors, 29(1), 231–236.

Mazza, G. L., Enders, C. K., & Ruehlman, L. S. (2015). Addressing item-level missing data: A comparison of proration and full information maximum likelihood estimation. Multivariate Behavioral Research, 50(5), 504-519. https://doi.org/10.1080/00273171.2015.1068157

McKelvey, R., & Zavoina, W. (1975). A Statistical Model for the Analysis of Ordinal Level Dependent Variables, Journal of Mathematical Sociology, 4, 103–120.

Muthén, L. K., & Muthén, B. O. (2002). How to use a Monte Carlo study to decide on sample size and determine power. Structural Equation Modeling, 9(4), 599–620.

Muthén, B., Kaplan, D., & Hollis, M. (1987). On structural equation modeling with data that are not missing completely at random. Psychometrika, 52(3), 431-462.

Peters, G.-J. Y. (2014). The alpha and the omega of scale reliability and validity: Why and how to abandon Cronbach’s alpha and the route towards more comprehensive assessment of scale quality. European Health Psychologist, 16(2), 56–69.

Rhemtulla, M., Brosseau-Liard, P. É., & Savalei, V. (2012). When can categorical variables be treated as continuous? A comparison of robust continuous and categorical SEM estimation methods under suboptimal conditions. Psychological Methods, 17, 354 - 373.

Rubin, D. B. (1976). Inference and missing data. Biometrika, 63, 581–592.

Rubin, D. B. (1987). Multiple Imputation for Nonresponse in Surveys. Wiley

Rubin, D. B. (2003). Nested multiple imputation of NMES via partially incompatible MCMC. Statistica Neerlandica, 57(1), 3-18.

Savalei, V., & Rhemtulla, M. (2017). Normal theory two-stage ML estimator when data are missing at the item level. Journal of Educational and Behavioral Statistics, 42(4), 405-431. https://doi.org/10.3102/1076998617694880

Schafer, J. L., & Graham, J. W. (2002). Missing data: Our view of the state of the art. Psychological Methods, 7, 147–177.

Siddiqui, O. I. (2015). Methods for computing missing item response in psychometric scale construction. American Journal of Biostatistics, 5(1), 1.

van Buuren, S. (2010). Item imputation without specifying scale structure. Methodology. 6(1), 31–36. https://doi.org/10.1027/1614-2241/a000004

Van Ginkel, J. R. (2010). Investigation of multiple imputation in low-quality questionnaire data. Multivariate Behavioral Research, 45, 574–598.

van Ginkel, J. R., van der Ark, L.A. and Sijtsma, K. (2007), Multiple imputation for item scores when test data are factorially complex. British Journal of Mathematical and Statistical Psychology, 60: 315-337. https://doi.org/10.1348/000711006X117574

Wu, W., Jia, F., & Enders, C. (2015). A Comparison of Imputation Strategies for Ordinal Missing Data on Likert Scale Variables. Multivariate Behavioral Research, 50(5), 484-503.

Author information

Authors and Affiliations

Corresponding author

Additional information

A working example of the examined approach can be downloaded from http://hdl.handle.net/1805/24059. All the simulation results can be requested from the corresponding author.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Rights and permissions

About this article

Cite this article

Wu, W., Gu, F. & Fukui, S. Combining proration and full information maximum likelihood in handling missing data in Likert scale items: A hybrid approach. Behav Res 54, 922–940 (2022). https://doi.org/10.3758/s13428-021-01671-w

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-021-01671-w