Abstract

Artificial intelligence (AI)-based radiomics has attracted considerable research attention in the field of medical imaging, including ultrasound diagnosis. Ultrasound imaging has unique advantages such as high temporal resolution, low cost, and no radiation exposure. This renders it a preferred imaging modality for several clinical scenarios. This review includes a detailed introduction to imaging modalities, including Brightness-mode ultrasound, color Doppler flow imaging, ultrasound elastography, contrast-enhanced ultrasound, and multi-modal fusion analysis. It provides an overview of the current status and prospects of AI-based radiomics in ultrasound diagnosis, highlighting the application of AI-based radiomics to static ultrasound images, dynamic ultrasound videos, and multi-modal ultrasound fusion analysis.

Introduction

Ultrasound has become an indispensable tool for medical diagnosis and treatment because of its non-invasiveness, portability, and real-time imaging capabilities [1]. Artificial intelligence (AI)-based radiomics uses machine learning algorithms to extract and analyze quantitative features (such as texture, shape, and intensity) from medical images to help clinicians diagnose diseases and predict prognosis, and it has attracted research attention in the field of medical imaging, including ultrasound diagnosis [2,3,4]. It has been extensively studied using various medical imaging modalities, including computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography [5,6,7,8,9,10,11,12,13]. Compared with these modalities, ultrasound imaging has unique advantages such as high temporal resolution, low cost, and no radiation exposure, which make it a preferred imaging modality for several clinical scenarios [14]. Consequently, the application of AI-based radiomics in ultrasound diagnosis has recently attracted increasing attention from researchers and clinicians.

This review includes a detailed introduction to imaging modalities, including B-mode ultrasound (BUS) [15], color Doppler flow imaging (CDFI) [16], ultrasound elastography (UE) [17], contrast-enhanced ultrasound (CEUS) [18], and multi-modal fusion analysis of BUS, CDFI, UE, and CEUS. It aims to provide an overview of the current status and prospects of AI-based radiomics in ultrasound diagnosis by highlighting the application of AI-based radiomics to static ultrasound images, dynamic ultrasound videos, multi-modal ultrasound fusion analysis, and advanced methods of intelligent ultrasound analysis.

AI has three fundamental tasks in the field of ultrasound: classification, segmentation, and detection. Static ultrasound involves two-dimensional imaging. BUS images reflect the anatomical structure of lesions and are commonly used for classification and segmentation tasks. CDFI images reflect the blood flow information of lesions, whereas UE images reflect the tissue hardness of lesions; these two modalities are often used for classification tasks. Dynamic ultrasound involves videos. BUS videos dynamically reflect changes in the anatomical structure and position of lesions and are frequently used for tasks such as classification, segmentation, detection, and tracking, whereas CEUS reflects dynamic changes in blood flow and is commonly used for classification. The relationships between AI tasks and different ultrasound modalities are shown in Table 1.

In the next few sections, the applications of AI in ultrasound diagnosis will be discussed in detail. In the static ultrasound image section, the application of AI-based radiomics to extract quantitative features (such as tumor size, shape, texture, and echogenicity) from ultrasound images to assist in the diagnosis and differentiation of various diseases is discussed. The application of AI-based radiomics to analyze changes in the intensity and texture of lesions during the CEUS process is introduced in the dynamic ultrasound video section. This can provide valuable information for the diagnosis and treatment of diseases.
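To make the idea of quantitative feature extraction concrete, the following is a minimal sketch of a few first-order features (area, mean echo intensity, variance, and histogram entropy) computed inside a region of interest. It is illustrative only: the function name `first_order_features`, the toy image, and the toy mask are assumptions introduced here, and the studies reviewed in this article use far richer feature sets and dedicated software.

```python
import math
from collections import Counter


def first_order_features(image, mask):
    """Compute simple first-order features inside a binary ROI mask.

    image: 2D list of grey levels (e.g. 0-255)
    mask:  2D list of 0/1 with the same shape; 1 marks ROI pixels
    """
    pixels = [image[r][c]
              for r in range(len(image))
              for c in range(len(image[0]))
              if mask[r][c]]
    if not pixels:
        raise ValueError("ROI mask is empty")
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Shannon entropy (in bits) of the grey-level histogram inside the ROI
    counts = Counter(pixels)
    entropy = -sum((k / n) * math.log2(k / n) for k in counts.values())
    return {"area_px": n, "mean": mean, "variance": variance, "entropy": entropy}


# Toy 3x4 "image": the ROI covers the two right-hand columns (hypothetical data)
image = [[10, 10, 200, 200],
         [10, 10, 200, 200],
         [10, 10, 50, 50]]
mask = [[0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1]]
features = first_order_features(image, mask)
```

Here the area stands in for lesion size, the mean for echogenicity, and the variance and entropy for very coarse texture descriptors; shape features and higher-order texture matrices are omitted for brevity.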

In the multi-modal ultrasound fusion analysis section, the use of AI-based radiomics to integrate information from multiple ultrasound modalities, such as BUS, CDFI, UE, and CEUS, and thereby improve the accuracy of disease diagnosis and treatment planning is discussed. Tables 2, 3 and 4 present the related studies, their aims, and the performance of the AI algorithms, respectively. In addition, the challenges and limitations of AI-based radiomics in ultrasound diagnosis, such as the lack of standardization in feature extraction and the need for large-scale multicenter studies to validate its clinical efficacy, are discussed.

AI-based radiomics has the potential to revolutionize the field of ultrasound diagnosis by providing objective and quantitative analyses of ultrasound images. This can improve the accuracy and efficiency of disease diagnosis and treatment. This review provides valuable insights into the current status and future prospects of AI-based radiomics in ultrasound diagnosis and serves as a useful reference for researchers and clinicians in this field.

Application of AI-based radiomics in ultrasound diagnosis

Static ultrasound

Static ultrasound refers to the use of BUS, CDFI, and UE to generate static images of tissues and organs within the body. These modalities are widely used in medical diagnosis and research because they provide detailed spatial information on the morphology, vascularity, and elasticity of various tissues. Owing to advances in technology and an increased understanding of its clinical utility, static ultrasound plays an important role in AI-based radiomics in ultrasound diagnosis (Fig. 1).

Fig. 1 Static ultrasound analysis model for diagnosis and prediction. Static ultrasound reflects the spatial characteristics of lesions. It involves two-dimensional imaging. The BUS images provide information on the anatomical structure, shape, texture, and position of lesions. Moreover, the CDFI images reveal the direction of blood flow in the lesions. Additionally, UE images reflect the tissue hardness of the lesions. The existing intelligent analysis methods for static ultrasound include SVM, lasso regression, CNNs, and transformers. These are commonly used for the diagnosis and prognosis of diseases such as breast cancer, ovarian cancer, lymph node disease, and liver fibrosis.

BUS

BUS is the most common type of ultrasonography and is used in various examinations. AI analysis of BUS images differs across examination sites and purposes, and it mainly involves classification, segmentation, and detection tasks.

The most common classification task is diagnosis. Both traditional machine learning and deep learning have been used to diagnose specific diseases, such as hepatocellular carcinoma (HCC) [50], triple negative breast cancer [19], cervical tuberculous lymphadenitis [51], and v-raf murine sarcoma viral oncogene homolog B (BRAF) mutation in thyroid cancer [52]. These are generally binary tasks (a positive or negative diagnosis). Staging or typing of the same lesion, such as liver fibrosis staging [41], lung B-line severity assessment [53], classification of COVID-19 markers [54], and determination of the type of liver lesion [55], is generally a multi-class task. Additional classification tasks include the preoperative prediction of Ki-67 expression levels in breast cancer patients [56], prediction of axillary lymph node metastasis in breast cancer patients [20], and prediction of distant metastasis in follicular thyroid cancer [21]. Classification models usually extract features of BUS images from the region of interest (ROI) selected by the doctor, and some add other relevant data features to inform the judgment.

Some models also generate heatmaps after the judgment, which explain the AI's decision and help doctors summarize their experience. A heatmap is an image that shows which regions of an input image a model focuses on; the color intensity indicates the level of attention the model gives to the corresponding area. Doctors can use heatmaps to understand which regions the model prioritizes and to observe and study those areas in a targeted way, thereby accumulating experience for diagnosis and prognosis prediction.
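One simple way to obtain such a heatmap is occlusion sensitivity: hide one patch of the image at a time and record how much the model score drops. The sketch below illustrates that idea under stated assumptions. The reviewed studies may use other techniques (for example, class activation mapping), and `toy_score` is a hypothetical stand-in for a trained classifier, not a real model.

```python
def occlusion_heatmap(image, score_fn, patch=2, fill=0):
    """Occlusion sensitivity: score drop when each patch of the image is hidden.

    image:    2D list of grey levels
    score_fn: callable returning the model score for an image (assumed)
    patch:    side length of the square occlusion patch, in pixels
    fill:     grey level used to hide the patch
    """
    rows, cols = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy, then hide one patch
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            drop = base - score_fn(occluded)
            # Every pixel of the patch receives the same importance value
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    heat[r][c] = drop
    return heat


def toy_score(img):
    # Hypothetical classifier: mean brightness of the top-left 2x2 block
    return (img[0][0] + img[0][1] + img[1][0] + img[1][1]) / 4.0


image = [[9, 9, 1, 1],
         [9, 9, 1, 1],
         [1, 1, 1, 1],
         [1, 1, 1, 1]]
heat = occlusion_heatmap(image, toy_score)
```

In this toy case only the top-left patch affects the score, so it is the only region with a non-zero heat value; with a real model the map would show graded importance across the lesion and its surroundings.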

The classification task makes a judgment on the entire image, whereas the detection task adds localization to the judgment. Detection models can localize the carotid artery cross-section in ultrasound images [57], lesions on abdominal ultrasound images [58], and thyroid nodules [59]. The detection task can generate multiple labels on a single BUS image, using rectangular boxes to mark the positions corresponding to the labels, and can therefore handle multiple ROIs in a single image.

The segmentation task is a finer-grained classification task. It classifies each pixel, after which information such as the boundary, size, and location of different regions in the ultrasound image becomes clear. AI can segment arterial plaques [22, 60, 61] and breast tumors.

Wang et al. [23] proposed the use of a newly developed deep learning radiomics of elastography (DLRE) model to assess liver fibrosis stages. DLRE adopts a radiomic strategy for the quantitative analysis of heterogeneity in two-dimensional shear wave elastography (SWE) images. This study aimed to address the clinical problem of accurately diagnosing the stages of liver fibrosis in hepatitis B virus-infected patients using noninvasive methods, a significant challenge for conventional approaches. Zhou et al. [26] proposed a DLRE model that adopted a CNN based on transfer learning as a noninvasive method to assess liver fibrosis stages, which is essential for the prognosis and surveillance of patients with chronic hepatitis B. Lu et al. [25] proposed an updated DLRE model (DLRE2.0) to discriminate significant fibrosis (≥ F2) in patients with chronic liver disease. The dataset used in their study included 807 patients and 4842 images from three hospitals. The DLRE2.0 model showed significantly improved performance compared with the previous DLRE model, with an area under the receiver operating characteristic curve (AUC) of 0.91 for evaluating ≥ F2. The radiomics models showed good robustness in an independent external test cohort. Destrempes et al. [84] proposed a machine learning model based on random forests to select combinations of quantitative ultrasound features and SWE stiffness for the classification of steatosis grade, inflammation grade, and fibrosis stage in patients with chronic liver disease.
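Because AUC is the headline metric for the fibrosis models above, a short sketch of how it can be computed may help. The function below uses the rank-based (Mann-Whitney) definition: the AUC is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The labels and scores are invented toy values, not data from any cited study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # tie counts as half
    return wins / (len(pos) * len(neg))


# Toy example: 1 = significant fibrosis (>= F2), 0 = otherwise (hypothetical values)
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)  # 8 of the 9 positive/negative pairs are ordered correctly
```

The pairwise loop is quadratic in the number of cases, which is acceptable for a sketch; library implementations use sorting to achieve the same result more efficiently.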

UE-based AI can also improve the classification and diagnosis of thyroid nodules and lymph node disease. Zhang et al. [85] used a random forest model for the differential diagnosis of thyroid nodules based on conventional ultrasound and real-time UE. Qin et al. [86] proposed a CNN-based method that combined the characteristics of conventional ultrasound and ultrasound elasticity images to form a hybrid feature space for the classification of benign and malignant thyroid nodules. Zhao et al. [87] developed machine learning-assisted radiomics approaches that incorporated radiomic features extracted from ultrasound and SWE images. Liu et al. [88] proposed a radiomic approach using BUS and strain elastography to estimate the lymph node status in patients with papillary thyroid carcinoma.

UE is an adjunct tool for improving the identification and noninvasive characterization of breast lesions, helping radiologists improve patient care. Adding SWE to BUS has shown the potential to provide supplementary diagnostic insights and to reduce the number of unnecessary biopsies [89]. Li et al. [90] proposed an innovative dual-mode AI architecture that could automatically integrate information from ultrasonography and SWE to assist in breast tumor classification. Kim et al. [91] used deep learning-based computer-aided diagnosis and SWE with BUS to evaluate breast masses detected by screening ultrasound and addressed the low specificity and operator dependency of breast ultrasound screening.

UE is also widely used to diagnose other diseases. For example, UE-based AI can improve lymph node classification compared with radiologist evaluation alone. Tahmasebi et al. [24] evaluated an AI system for the classification of axillary lymph nodes on UE. They aimed to compare the performance of the AI system with that of experienced radiologists in predicting the presence of metastasis to the axillary lymph nodes on ultrasound.

The combination of AI and UE has shown considerable potential for improving medical diagnosis. It offers a partial solution to the limitations of UE, enhances diagnostic accuracy, and enables individualized classification and predictive models for liver diseases. UE-based AI can also aid in lymph node disease classification and in the noninvasive characterization of breast lesions, reducing the need for unnecessary biopsies and improving patient care. Furthermore, its application to tasks such as lymph node classification highlights its potential to match or exceed radiologist evaluations. The combination of AI and UE can thus advance medical imaging and patient outcomes.

Waveform graph

Doppler ultrasound can measure blood flow velocity, producing a waveform graph (spectrogram) that shows the blood supply. Studies using AI to analyze Doppler flow spectrograms are scarce. However, a study on the diagnosis of early allograft dysfunction in liver transplant patients showed that a deep learning model could be used to analyze and interpret blood flow spectrograms. AI analysis of flow velocity spectrograms can support medical conclusions related to blood flow, such as determining the cause of a disease, to assist the physician's treatment. Where the results learned by AI are better than the empirical results of doctors, the generated heatmaps can help doctors understand the information contained in blood flow spectrograms.

Dynamic ultrasound

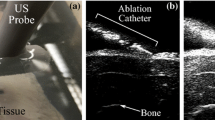

Dynamic ultrasound, particularly CEUS, is an effective imaging tool for analyzing the spatiotemporal characteristics of lesions and diagnosing or predicting diseases. Real-time high-resolution images produced by CEUS are often comparable to those obtained by CT or MRI [92]. Thus, CEUS, which is quick, reliable, and relatively inexpensive, may reduce the need for additional testing. CEUS is routinely used worldwide to detect heart disease and stratify the risk of heart attack or stroke. It is also used to identify, characterize, and stage tumors of the liver [93], kidney [94], prostate [95], breast [96], and other organ systems and to monitor the effectiveness of cancer therapies. However, because CEUS is considerably affected by operator variability, the image quality is relatively unstable and the tumor boundary (TB) is often unclear. These disadvantages often limit the accuracy of direct analysis by radiologists. Therefore, several recent studies have used deep learning methods to extract spatiotemporal features from CEUS sequences to assist treatment (Fig. 2).

Fig. 2 Dynamic ultrasound analysis model for diagnosis and prediction. Dynamic ultrasound reflects the spatiotemporal characteristics of lesions. It involves videos. Common ultrasound modalities include BUS, CDFI, UE, and CEUS. Current studies on ultrasound videos primarily focus on BUS and CEUS videos. The commonly used intelligent analysis methods for ultrasound videos include three-dimensional CNN, CNN + RNN, R(2 + 1)D, dual-stream CNN, and transformers. These are frequently employed for the diagnosis and prognosis of diseases such as breast cancer, liver cancer, thyroid nodules, kidney disease, and diabetes.

Deep learning models can aid diagnostic classification. Tong et al. [27] developed an end-to-end deep learning radiomics (DLR) model based on CEUS to assist radiologists in distinguishing pancreatic ductal adenocarcinoma from chronic pancreatitis. To train and test the model, 558 patients with pancreatic lesions were included. The DLR model achieved an AUC of 0.986 (95%CI: 0.975–0.994), 0.978 (95%CI: 0.950–0.996), 0.967 (95%CI: 0.917–1.000), and 0.953 (95%CI: 0.877–1.000) in the training, internal validation, and external validation cohorts 1 and 2, respectively. Similarly, Chen et al. [28] proposed a three-dimensional CNN model based on CEUS videos. This model was validated using a Breast-CEUS dataset comprising 221 cases and achieved a sensitivity of 97.2% and an accuracy of 86.3%. The incorporation of domain knowledge led to improvements of 3.5% in sensitivity and 6.0% in specificity.

Liu et al. [29] established and validated an AI-based radiomics strategy for predicting personalized responses of patients with HCC to the first transarterial chemoembolization (TACE) session by quantitatively analyzing CEUS cines. One hundred and thirty patients with HCC (89 for training and 41 for validation) who underwent ultrasound examinations (CEUS and BUS) within one week before the first TACE session were retrospectively enrolled. In the validation cohort, the AUCs of the deep learning radiomics-based CEUS model (R-DLCEUS), the machine learning radiomics-based time-intensity curve of the CEUS model (R-TIC), and the machine learning radiomics-based BUS image model (R-BMode) were 0.93 (95%CI: 0.80–0.98), 0.80 (95%CI: 0.64–0.90), and 0.81 (95%CI: 0.67–0.95), respectively.

In addition, deep learning algorithms can be used to select treatment methods and predict treatment effects. Liu et al. [30] evaluated the performance of a deep learning-based radiomics strategy for analyzing CEUS to predict the progression-free survival (PFS) after radiofrequency ablation (RFA) and surgical resection (SR) and to optimize the treatment selection for patients with very early or early stage HCC. Their study retrospectively enrolled 419 patients examined using CEUS within one week before receiving RFA or SR (RFA: 214, SR: 205) between January 2008 and 2016. The R-RFA and R-SR models showed remarkable discrimination (C-index: 0.726 and 0.741 for RFA and SR, respectively). The models identified that 17.3% of the RFA patients and 27.3% of the SR patients should swap their treatment, which would increase their average probability of two-year PFS by 12% and 15%, respectively.

Similarly, Sun and Lu [31] used CEUS based on a three-dimensional reconstruction algorithm to evaluate the efficacy of atorvastatin in the treatment of patients with diabetic nephropathy (DN). One hundred and fifty-six DN patients were divided into experimental (conventional treatment + atorvastatin) and control (conventional treatment) groups. The kidney volume and hemodynamic parameters, including the maximal kidney volume, minimal kidney volume, and resistance index, of all patients were measured and recorded before and after treatment. After treatment, the kidney volume in the experimental group (136.07 ± 22.16 cm³) was smaller than that in the control group (159.11 ± 31.79 cm³) (P < 0.05).
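The C-index reported for the survival models above measures how well predicted risks order patients by time to progression. The following is a minimal sketch of Harrell's concordance index, assuming right-censored data and ignoring tied event times for simplicity; the follow-up times, event flags, and risk scores are invented toy values, not data from the cited study.

```python
def concordance_index(times, events, risks):
    """Harrell's C-index for right-censored survival data.

    times:  follow-up time for each patient
    events: 1 if progression was observed, 0 if the patient was censored
    risks:  predicted risk score (higher means earlier progression expected)
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # A pair is comparable when patient i progressed before
            # patient j's last recorded follow-up time.
            if events[i] == 1 and times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy cohort (hypothetical): months of follow-up, event flag, model risk
times = [6, 12, 18, 24]
events = [1, 1, 0, 1]
risks = [0.9, 0.4, 0.5, 0.1]
c_index = concordance_index(times, events, risks)  # 4 of 5 comparable pairs agree
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering, which gives some context for the reported values of 0.726 and 0.741.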

Because delineating the ROI containing the lesion and the surrounding microvasculature frame-by-frame in CEUS is a time-consuming task [32], some AI methods have been proposed to segment lesions automatically. Meng et al. [32] proposed a novel U-net-like network with dual top-down branches and residual connections known as CEUSegNet. CEUSegNet uses the US and CEUS parts of a dual-amplitude CEUS image as inputs. The lesion position can be determined accurately under US guidance, and the ROI can then be delineated in the CEUS image. On the CMLN dataset, CEUSegNet achieved 91.05% Dice and 80.06% intersection over union (IOU). On the BL dataset, CEUSegNet achieved 89.97% Dice and 75.62% IOU.

Iwasa et al. [33] evaluated the capability of the deep learning model U-Net for automatic segmentation of pancreatic tumors on CEUS video images and the possible factors affecting automatic segmentation. This retrospective study included 100 patients who underwent CEUS for pancreatic tumors. The degree of respiratory movement and the clarity of the TB were each graded into three levels for each patient and were evaluated as possible factors affecting the segmentation. The concordance rate was calculated using IOU. The median IOU for all the cases was 0.77. The median IOU for TB-1 (approximately clear), TB-2, and TB-3 (more than half unclear) were 0.80, 0.76, and 0.69, respectively. The IOU of TB-1 was significantly higher than that of TB-3 (P < 0.01).
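The Dice coefficient and IOU used to score these segmentation models both compare a predicted mask with a reference mask. The sketch below computes them for binary masks stored as nested lists; the function name and the toy masks are assumptions made for illustration only.

```python
def dice_and_iou(pred, truth):
    """Return (Dice, IOU) for two binary masks given as 2D lists of 0/1."""
    inter = pred_area = truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            pred_area += 1 if p else 0
            truth_area += 1 if t else 0
            inter += 1 if (p and t) else 0
    union = pred_area + truth_area - inter
    if union == 0:
        # Both masks are empty: treat as perfect agreement
        return 1.0, 1.0
    dice = 2.0 * inter / (pred_area + truth_area)
    iou = inter / union
    return dice, iou


# Toy masks (hypothetical): two 2x2 squares that overlap in one column
pred = [[1, 1, 0],
        [1, 1, 0],
        [0, 0, 0]]
truth = [[0, 1, 1],
         [0, 1, 1],
         [0, 0, 0]]
dice, iou = dice_and_iou(pred, truth)
```

For a single pair of masks the two metrics are linked by Dice = 2·IOU / (1 + IOU), so Dice is never lower than IOU; values averaged over a dataset need not satisfy this relation exactly.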

Thus, CEUS is a powerful tool for diagnosing and predicting various diseases. It offers real-time high-resolution images comparable to those of CT or MRI, thereby reducing the need for additional tests. Although CEUS is affected by operator variability and unclear tumor boundaries, deep learning models can assist radiologists in achieving precise diagnosis and treatment selection. AI models have achieved impressive results in classifying pancreatic lesions, predicting liver fibrosis stages, and optimizing the treatment choices for patients with HCC. Additionally, AI can aid in lesion segmentation, automate time-consuming processes, and improve accuracy. Integrating AI with CEUS has considerable potential for advancing medical imaging and improving patient outcomes.

Dual-/multi-modal ultrasound and AI-powered ultrasound image analysis

Various ultrasound modalities depict lesions from different aspects, enabling clinicians to understand lesions more comprehensively. Naturally, better performance is expected from intelligent fusion analysis of combinations of ultrasound modalities. Existing studies on dual-/multi-modal ultrasound fusion analysis can be divided into two groups: clinical application studies, which focus on performance on specific clinical problems, and algorithm studies, which focus on the development of fusion methods. Figure 3 summarizes the related studies in recent years.

Fig. 3 Research ideas for dual-/multi-modal ultrasound fusion analysis. The fusion analysis of different ultrasound modalities is of significant clinical importance. Each ultrasound modality has its own advantages and limitations, and an efficient fusion method allows the modalities to complement one another. This improves the accuracy of ultrasound AI diagnosis. Currently, there are various modality combinations such as BUS + CDFI, BUS + UE, BUS + CDFI + UE + CEUS, and US + CT/MRI. Common fusion methods include logistic regression, machine learning, and deep learning. These are frequently used to diagnose diseases such as breast cancer, liver cancer, thyroid nodules, and COVID-19.
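As an illustration of the simplest of these fusion methods, the sketch below performs a logistic-regression-style late fusion: each modality contributes a malignancy probability, the probabilities are converted to logits, and a weighted sum is passed through a sigmoid. This is a sketch under stated assumptions rather than the method of any cited study. The function name, the weights, and the example probabilities are hypothetical; in practice the weights and bias would be fitted on training data.

```python
import math


def fuse_probabilities(modality_probs, weights, bias=0.0):
    """Late fusion: weighted sum of per-modality logits passed through a sigmoid.

    modality_probs: dict mapping modality name to predicted probability
    weights:        dict mapping modality name to its fusion weight (assumed)
    bias:           intercept of the fusion model (assumed)
    """
    z = bias
    for name, p in modality_probs.items():
        p = min(max(p, 1e-6), 1.0 - 1e-6)  # clip to avoid infinite logits
        z += weights[name] * math.log(p / (1.0 - p))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical weights and per-modality outputs for one lesion
weights = {"BUS": 1.0, "CDFI": 0.5, "UE": 0.8}
case = {"BUS": 0.70, "CDFI": 0.55, "UE": 0.85}
fused = fuse_probabilities(case, weights)

# When every modality is undecided (0.5), the fused output is also 0.5
neutral = fuse_probabilities({"BUS": 0.5, "CDFI": 0.5, "UE": 0.5}, weights)
```

Late fusion keeps each modality's model independent, which is convenient when some modalities are missing for a patient; deep learning approaches instead tend to fuse intermediate feature maps so that the modalities can interact earlier.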

Conclusions

Ultrasound diagnosis is widely used in clinical practice because of its noninvasive and real-time imaging capabilities. Radiomics, an emerging field in medical imaging, can extract quantitative features from medical images and provide a comprehensive analysis of image data. The application of AI in radiomics has significantly improved the accuracy and efficiency of ultrasound diagnosis.

Static ultrasound images such as BUS, CDFI, and UE are widely used in clinical practice. AI-based radiomics can analyze the texture, shape, and other quantitative features of these images to identify patterns and provide diagnostic information. For example, AI-based radiomics has been used to differentiate benign from malignant thyroid nodules using BUS images with high accuracy. Dynamic ultrasound videos, such as CEUS, provide additional information on the blood flow and perfusion of the imaged tissue. AI-based radiomics can be used to analyze temporal changes in contrast enhancement and provide a more accurate diagnosis of liver tumors, breast lesions, and other diseases. Multi-modal ultrasound fusion analysis combines multiple ultrasound imaging modalities (such as BUS, CDFI, UE, and CEUS) to provide a comprehensive analysis of the imaged tissue, and AI-based radiomics can analyze the quantitative features of these images to provide a more accurate and comprehensive diagnosis. For example, AI-based radiomics has been used to differentiate malignant and benign breast lesions using a combination of BUS, CDFI, and CEUS images.

In conclusion, AI-based radiomics has considerable potential for improving the accuracy and efficiency of ultrasound diagnosis. By analyzing the quantitative features of static ultrasound images, dynamic ultrasound videos, and multi-modal ultrasound fusion, AI-based radiomics can provide a more accurate diagnosis of various diseases (including liver tumors, breast lesions, and thyroid nodules). However, some challenges (such as the need for large-scale datasets and standardized imaging protocols) must be addressed. With further research and development, AI-based radiomics is expected to play an increasingly important role in ultrasound diagnosis.

Availability of data and materials

Not applicable.

Abbreviations

- B-mode: Brightness-mode
- AI: Artificial intelligence
- CT: Computed tomography
- MRI: Magnetic resonance imaging
- BUS: B-mode ultrasound
- CDFI: Color Doppler flow imaging
- UE: Ultrasound elastography
- CEUS: Contrast-enhanced ultrasound
- ROI: Region of interest
- CNN: Convolutional neural network
- DLRE: Deep learning radiomics of elastography
- SWE: Shear wave elastography
- HCC: Hepatocellular carcinoma
- TACE: Transarterial chemoembolization
- R-DLCEUS: Radiomics-based CEUS model
- R-TIC: Radiomics-based time intensity curve of the CEUS model
- R-BMode: Radiomics-based BUS image model
- PFS: Progression-free survival
- RFA: Radiofrequency ablation
- SR: Surgical resection
- IOU: Intersection over union
- TB: Tumor boundary
- AGI: Artificial general intelligence
- DLR: Deep learning radiomics
- SVM: Support vector machine

References

Ma LF, Wang R, He Q, Huang LJ, Wei XY, Lu X et al (2022) Artificial intelligence-based ultrasound imaging technologies for hepatic diseases. iLIVER 1(4):252–264. https://doi.org/10.1016/j.iliver.2022.11.001

Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M et al (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88. https://doi.org/10.1016/j.media.2017.07.005

Janiesch C, Zschech P, Heinrich K (2021) Machine learning and deep learning. Electron Markets 31(3):685–695. https://doi.org/10.1007/s12525-021-00475-2

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Ozsahin I, Sekeroglu B, Musa MS, Mustapha MT, Ozsahin DU (2020) Review on diagnosis of COVID-19 from chest CT images using artificial intelligence. Comput Math Methods Med 2020:9756518. https://doi.org/10.1155/2020/9756518

Lin A, Kolossváry M, Motwani M, Išgum I, Maurovich-Horvat P, Slomka PJ et al (2021) Artificial intelligence in cardiovascular CT: Current status and future implications. J Cardiovasc Comput Tomogr 15(6):462–469. https://doi.org/10.1016/j.jcct.2021.03.006

Blanc D, Racine V, Khalil A, Deloche M, Broyelle JA, Hammouamri I et al (2020) Artificial intelligence solution to classify pulmonary nodules on CT. Diagn Interventional Imaging 101(12):803–810. https://doi.org/10.1016/j.diii.2020.10.004

Sheth D, Giger ML (2020) Artificial intelligence in the interpretation of breast cancer on MRI. J Magn Reson Imaging: JMRI 51(5):1310–1324. https://doi.org/10.1002/jmri.26878

Jiang YL, Edwards AV, Newstead GM (2021) Artificial intelligence applied to breast MRI for improved diagnosis. Radiology 298(1):38–46. https://doi.org/10.1148/radiol.2020200292

Codari M, Schiaffino S, Sardanelli F, Trimboli RM (2019) Artificial intelligence for breast MRI in 2008-2018: a systematic mapping review. AJR Am J Roentgenol 212(2):280–292. https://doi.org/10.2214/AJR.18.20389

Rauschecker AM, Rudie JD, Xie L, Wang JC, Duong MT, Botzolakis EJ et al (2020) Artificial intelligence system approaching neuroradiologist-level differential diagnosis accuracy at brain MRI. Radiology 295(3):626–637. https://doi.org/10.1148/radiol.2020190283

Wei JW, Jiang HY, Gu DS, Niu M, Fu FF, Han YQ et al (2020) Radiomics in liver diseases: Current progress and future opportunities. Liver Int 40(9):2050–2063. https://doi.org/10.1111/liv.14555

Hu WM, Yang HY, Xu HF, Mao YL (2020) Radiomics based on artificial intelligence in liver diseases: where are we?. Gastroenterol Rep 8(2):90–97. https://doi.org/10.1093/gastro/goaa011

Colombo M (2015) Diagnosis of liver nodules within and outside screening programs. Ann Hepatol 14(3):304–309

Smith SE, Salanitri J, Lisle D (2007) Ultrasound evaluation of soft tissue masses and fluid collections. Semin Musculoskelet Radiol 11(2):174–191. https://doi.org/10.1055/s-2007-1001882

Jansson T, Persson HW, Lindström K (1999) Estimation of blood perfusion using ultrasound. Proc Inst Mech Eng, Part H: J Eng Med 213(2):91–106. https://doi.org/10.1243/0954411991534834

Sigrist RMS, Liau J, Kaffas AE, Chammas MC, Willmann JK (2017) Ultrasound elastography: review of techniques and clinical applications. Theranostics 7(5):1303–1329. https://doi.org/10.7150/thno.18650

Quaia E (2007) Microbubble ultrasound contrast agents: an update. Eur Radiol 17(8):1995–2008. https://doi.org/10.1007/s00330-007-0623-0

Ye H, Hang J, Zhang MM, Chen XW, Ye XH, Chen J et al (2021) Automatic identification of triple negative breast cancer in ultrasonography using a deep convolutional neural network. Sci Rep 11(1):20474. https://doi.org/10.1038/s41598-021-00018-x

Zhou WJ, Zhang YD, Kong WT, Zhang CX, Zhang B (2021) Preoperative prediction of axillary lymph node metastasis in patients with breast cancer based on radiomics of gray-scale ultrasonography. Gland Surg 10(6):1989–2001. https://doi.org/10.21037/gs-21-315

Kwon MR, Shin JH, Park H, Cho H, Kim E, Hahn SY (2020) Radiomics based on thyroid ultrasound can predict distant metastasis of follicular thyroid carcinoma. J Clin Med 9(7):2156. https://doi.org/10.3390/jcm9072156

Meshram NH, Mitchell CC, Wilbrand S, Dempsey RJ, Varghese T (2020) Deep learning for carotid plaque segmentation using a dilated U-net architecture. Ultrason Imaging 42(4–5):221–230. https://doi.org/10.1177/0161734620951216

Wang K, Lu X, Zhou H, Gao YY, Zheng J, Tong MH et al (2019) Deep learning Radiomics of shear wave elastography significantly improved diagnostic performance for assessing liver fibrosis in chronic hepatitis B: a prospective multicentre study. Gut 68(4):729–741. https://doi.org/10.1136/gutjnl-2018-316204

Tahmasebi A, Qu EZ, Sevrukov A, Liu JB, Wang S, Lyshchik A et al (2021) Assessment of axillary lymph nodes for metastasis on ultrasound using artificial intelligence. Ultrason Imaging 43(6):329–336. https://doi.org/10.1177/01617346211035315

Lu X, Zhou H, Wang K, Jin JY, Meng FK, Mu XJ et al (2021) Comparing radiomics models with different inputs for accurate diagnosis of significant fibrosis in chronic liver disease. Eur Radiol 31(11):8743–8754. https://doi.org/10.1007/s00330-021-07934-6

Zhou H, Wang K, Tian J (2020) The accurate non-invasive staging of liver fibrosis using deep learning radiomics based on transfer learning of shear wave elastography. In: Proceedings of the SPIE 11319, medical imaging 2020: ultrasonic imaging and tomography, SPIE, Houston, 16 March 2020

Tong T, Gu JH, Xu D, Song L, Zhao QY, Cheng F et al (2022) Deep learning radiomics based on contrast-enhanced ultrasound images for assisted diagnosis of pancreatic ductal adenocarcinoma and chronic pancreatitis. BMC Med 20(1):74. https://doi.org/10.1186/s12916-022-02258-8

Chen C, Wang Y, Niu JW, Liu XF, Li QF, Gong XT (2021) Domain knowledge powered deep learning for breast cancer diagnosis based on contrast-enhanced ultrasound videos. IEEE Trans Med Imaging 40(9):2439–2451. https://doi.org/10.1109/TMI.2021.3078370

Liu D, Liu F, Xie XY, Su LY, Liu M, Xie XH et al (2020) Accurate prediction of responses to transarterial chemoembolization for patients with hepatocellular carcinoma by using artificial intelligence in contrast-enhanced ultrasound. Eur Radiol 30(4):2365–2376. https://doi.org/10.1007/s00330-019-06553-6

Liu F, Liu D, Wang K, Xie XH, Su LY, Kuang M et al (2020) Deep learning radiomics based on contrast-enhanced ultrasound might optimize curative treatments for very-early or early-stage hepatocellular carcinoma patients. Liver Cancer 9(4):397–413. https://doi.org/10.1159/000505694

Sun XY, Lu QL (2022) Contrast-enhanced ultrasound in optimization of treatment plans for diabetic nephropathy patients based on deep learning. J Supercomput 78(3):3539–3560. https://doi.org/10.1007/s11227-021-04002-0

Meng ZL, Zhu YY, Fan X, Tian J, Nie F, Wang K (2022) CEUSegNet: a cross-modality lesion segmentation network for contrast-enhanced ultrasound. In: Proceedings of the IEEE 19th international symposium on biomedical imaging, IEEE, Kolkata, 28–31 March 2022. https://doi.org/10.1109/ISBI52829.2022.9761594

Iwasa Y, Iwashita T, Takeuchi Y, Ichikawa H, Mita N, Uemura S et al (2021) Automatic segmentation of pancreatic tumors using deep learning on a video image of contrast-enhanced endoscopic ultrasound. J Clin Med 10(16):3589. https://doi.org/10.3390/jcm10163589

Zhang Q, Song S, Xiao Y, Chen S, Shi J, Zheng HR (2019) Dual-mode artificially-intelligent diagnosis of breast tumours in shear-wave elastography and B-mode ultrasound using deep polynomial networks. Med Eng Phys 64:1–6. https://doi.org/10.1016/j.medengphy.2018.12.005

Jiang M, Li CL, Chen RX, Tang SC, Lv WZ, Luo XM et al (2021) Management of breast lesions seen on US images: dual-model radiomics including shear-wave elastography may match performance of expert radiologists. Eur J Radiol 141:109781. https://doi.org/10.1016/j.ejrad.2021.109781

Misra S, Jeon S, Managuli R, Lee S, Kim G, Yoon C et al (2022) Bi-modal transfer learning for classifying breast cancers via combined B-mode and ultrasound strain imaging. IEEE Trans Ultrason, Ferroelectr, Freq Control 69(1):222–232. https://doi.org/10.1109/TUFFC.2021.3119251

Qian XJ, Pei J, Zheng H, Xie XX, Yan L, Zhang H et al (2021) Prospective assessment of breast cancer risk from multimodal multiview ultrasound images via clinically applicable deep learning. Nat Biomed Eng 5(6):522–532. https://doi.org/10.1038/s41551-021-00711-2

Chen Y, Jiang JW, Shi J, Chang WY, Shi J, Chen M et al (2020) Dual-mode ultrasound radiomics and intrinsic imaging phenotypes for diagnosis of lymph node lesions. Ann Transl Med 8(12):742. https://doi.org/10.21037/atm-19-4630

Zhang Q, Suo JF, Chang WY, Shi J, Chen M (2017) Dual-modal computer-assisted evaluation of axillary lymph node metastasis in breast cancer patients on both real-time elastography and B-mode ultrasound. Eur J Radiol 95:66–74. https://doi.org/10.1016/j.ejrad.2017.07.027

Zhu YY, Meng ZL, Fan X, Duan Y, Jia YY, Dong TT et al (2022) Deep learning radiomics of dual-modality ultrasound images for hierarchical diagnosis of unexplained cervical lymphadenopathy. BMC Med 20(1):269. https://doi.org/10.1186/s12916-022-02469-z

Xue LY, Jiang ZY, Fu TT, Wang QM, Zhu YL, Dai M et al (2020) Transfer learning radiomics based on multimodal ultrasound imaging for staging liver fibrosis. Eur Radiol 30(5):2973–2983. https://doi.org/10.1007/s00330-019-06595-w

Yao Z, Dong Y, Wu GQ, Zhang Q, Yang DH, Yu JH et al (2018) Preoperative diagnosis and prediction of hepatocellular carcinoma: Radiomics analysis based on multi-modal ultrasound images. BMC Cancer 18(1):1089. https://doi.org/10.1186/s12885-018-5003-4

Tao Y, Yu YY, Wu T, Xu XL, Dai Q, Kong HQ et al (2022) Deep learning for the diagnosis of suspicious thyroid nodules based on multimodal ultrasound images. Front Oncol 12:1012724. https://doi.org/10.3389/fonc.2022.1012724

Yuan HX, Wang CY, Tang CY, You QQ, Zhang Q, Wang WP (2023) Differential diagnosis of gallbladder neoplastic polyps and cholesterol polyps with radiomics of dual modal ultrasound: a pilot study. BMC Med Imaging 23(1):26. https://doi.org/10.1186/s12880-023-00982-y

Zhong X, Peng JY, Xie YH, Shi YF, Long HY, Su LY et al (2022) A nomogram based on multi-modal ultrasound for prediction of microvascular invasion and recurrence of hepatocellular carcinoma. Eur J Radiol 151:110281. https://doi.org/10.1016/j.ejrad.2022.110281

Xiang Z, Zhuo QL, Zhao C, Deng XF, Zhu T, Wang TF et al (2022) Self-supervised multi-modal fusion network for multi-modal thyroid ultrasound image diagnosis. Comput Biol Med 150:106164. https://doi.org/10.1016/j.compbiomed.2022.106164

Huang RB, Lin ZH, Dou HR, Wang J, Miao JZ, Zhou GQ et al (2021) AW3M: An auto-weighting and recovery framework for breast cancer diagnosis using multi-modal ultrasound. Med Image Anal 72:102137. https://doi.org/10.1016/j.media.2021.102137

Meng ZL, Zhu YY, Pang WJ, Tian J, Nie F, Wang K (2023) MSMFN: an ultrasound based multi-step modality fusion network for identifying the histologic subtypes of metastatic cervical lymphadenopathy. IEEE Trans Med Imaging 42(4):996–1008. https://doi.org/10.1109/TMI.2022.3222541

Gao Y, Fu XL, Chen YP, Guo CY, Wu J (2023) Post-pandemic healthcare for COVID-19 vaccine: Tissue-aware diagnosis of cervical lymphadenopathy via multi-modal ultrasound semantic segmentation. Appl Soft Comput 133:109947. https://doi.org/10.1016/j.asoc.2022.109947

Mitrea DA, Brehar R, Nedevschi S, Lupsor-Platon M, Socaciu M, Badea R (2023) Hepatocellular carcinoma recognition from ultrasound images using combinations of conventional and deep learning techniques. Sensors 23(5):2520. https://doi.org/10.3390/s23052520

Yang GY, Zhang Y, Yu TZ, Chen MH, Chen PJ (2022) Exploratory study on the predictive value of ultrasound radiomics for cervical tuberculous lymphadenitis. Clin Imaging 86:61–66. https://doi.org/10.1016/j.clinimag.2022.03.005

Kwon MR, Shin JH, Park H, Cho H, Hahn SY, Park KW (2020) Radiomics study of thyroid ultrasound for predicting BRAF mutation in papillary thyroid carcinoma: preliminary results. Am J Neuroradiol 41(4):700–705. https://doi.org/10.3174/ajnr.A6505

Baloescu C, Toporek G, Kim S, McNamara K, Liu R, Shaw MM et al (2020) Automated lung ultrasound B-line assessment using a deep learning algorithm. IEEE Trans Ultrason, Ferroelectr, Freq Control 67(11):2312–2320. https://doi.org/10.1109/TUFFC.2020.3002249

Roy S, Menapace W, Oei S, Luijten B, Fini E, Saltori C et al (2020) Deep learning for classification and localization of COVID-19 markers in point-of-care lung ultrasound. IEEE Trans Med Imaging 39(8):2676–2687. https://doi.org/10.1109/TMI.2020.2994459

Peng JB, Peng YT, Lin P, Wan D, Qin H, Li X et al (2022) Differentiating infected focal liver lesions from malignant mimickers: value of ultrasound-based radiomics. Clin Radiol 77(2):104–113. https://doi.org/10.1016/j.crad.2021.10.009

Liu JJ, Wang XC, Hu MS, Zheng Y, Zhu L, Wang W et al (2022) Development of an ultrasound-based radiomics nomogram to preoperatively predict Ki-67 expression level in patients with breast cancer. Front Oncol 12:963925. https://doi.org/10.3389/fonc.2022.963925

Jain PK, Gupta S, Bhavsar A, Nigam A, Sharma N (2020) Localization of common carotid artery transverse section in B-mode ultrasound images using faster RCNN: a deep learning approach. Med Biol Eng Comput 58(3):471–482. https://doi.org/10.1007/s11517-019-02099-3

Dadoun H, Rousseau AL, de Kerviler E, Correas JM, Tissier AM, Joujou F et al (2022) Deep learning for the detection, localization, and characterization of focal liver lesions on abdominal US images. Radiol: Artif Intell 4(3):e210110. https://doi.org/10.1148/ryai.210110

Zhang L, Zhuang Y, Hua Z, Han L, Li C, Chen K et al (2021) Automated location of thyroid nodules in ultrasound images with improved YOLOV3 network. J X-ray Sci Technol 29(1):75–90. https://doi.org/10.3233/XST-200775

Zhou R, Azarpazhooh MR, Spence JD, Hashemi S, Ma W, Cheng XY et al (2021) Deep learning-based carotid plaque segmentation from B-mode ultrasound images. Ultrasound Med Biol 47(9):2723–2733. https://doi.org/10.1016/j.ultrasmedbio.2021.05.023

Jain PK, Sharma N, Giannopoulos AA, Saba L, Nicolaides A, Suri JS (2021) Hybrid deep learning segmentation models for atherosclerotic plaque in internal carotid artery B-mode ultrasound. Comput Biol Med 136:104721. https://doi.org/10.1016/j.compbiomed.2021.104721

Zhang YT, Xian M, Cheng HD, Shareef B, Ding JR, Xu F et al (2022) BUSIS: a benchmark for breast ultrasound image segmentation. Healthcare 10(4):729. https://doi.org/10.3390/healthcare10040729

Liao WX, He P, Hao J, Wang XY, Yang RL, An D et al (2020) Automatic identification of breast ultrasound image based on supervised block-based region segmentation algorithm and features combination migration deep learning model. IEEE J Biomed Health Inf 24(4):984–993. https://doi.org/10.1109/JBHI.2019.2960821

Kumar V, Webb J, Gregory A, Meixner DD, Knudsen JM, Callstrom M et al (2020) Automated segmentation of thyroid nodule, gland, and cystic components from ultrasound images using deep learning. IEEE Access 8:63482–63496. https://doi.org/10.1109/access.2020.2982390

Akkus Z, Kim BH, Nayak R, Gregory A, Alizad A, Fatemi M (2020) Fully automated segmentation of bladder sac and measurement of detrusor wall thickness from transabdominal ultrasound images. Sensors 20(15):4175. https://doi.org/10.3390/s20154175

Jain PK, Sharma N, Kalra MK, Johri A, Saba L, Suri JS (2022) Far wall plaque segmentation and area measurement in common and internal carotid artery ultrasound using U-series architectures: An unseen Artificial Intelligence paradigm for stroke risk assessment. Comput Biol Med 149:106017. https://doi.org/10.1016/j.compbiomed.2022.106017

Zhou R, Guo FM, Azarpazhooh MR, Hashemi S, Cheng XY, Spence JD et al (2021) Deep learning-based measurement of total plaque area in B-mode ultrasound images. IEEE J Biomed Health Inf 25(8):2967–2977. https://doi.org/10.1109/JBHI.2021.3060163

Jain PK, Sharma N, Saba L, Paraskevas KI, Kalra MK, Johri A et al (2022) Automated deep learning-based paradigm for high-risk plaque detection in B-mode common carotid ultrasound scans: an asymptomatic Japanese cohort study. Int Angiol 41(1):9–23. https://doi.org/10.23736/S0392-9590.21.04771-4

del Mar Vila M, Remeseiro B, Grau M, Elosua R, Betriu À, Fernandez-Giraldez E et al (2020) Semantic segmentation with DenseNets for carotid artery ultrasound plaque segmentation and CIMT estimation. Artif Intell Med 103:101784. https://doi.org/10.1016/j.artmed.2019.101784

Meiburger KM, Marzola F, Zahnd G, Faita F, Loizou CP, Lainé N et al (2022) Carotid Ultrasound Boundary Study (CUBS): Technical considerations on an open multi-center analysis of computerized measurement systems for intima-media thickness measurement on common carotid artery longitudinal B-mode ultrasound scans. Comput Biol Med 144:105333. https://doi.org/10.1016/j.compbiomed.2022.105333

Cunningham RJ, Loram ID (2020) Estimation of absolute states of human skeletal muscle via standard B-mode ultrasound imaging and deep convolutional neural networks. J R Soc, Interface 17(162):20190715. https://doi.org/10.1098/rsif.2019.0715

Leblanc T, Lalys F, Tollenaere Q, Kaladji A, Lucas A, Simon A (2022) Stretched reconstruction based on 2D freehand ultrasound for peripheral artery imaging. Int J Comput Assisted Radiol Surg 17(7):1281–1288. https://doi.org/10.1007/s11548-022-02636-w

Tang SY, Yang X, Shajudeen P, Sears C, Taraballi F, Weiner B et al (2021) A CNN-based method to reconstruct 3-D spine surfaces from US images in vivo. Med Image Anal 74:102221. https://doi.org/10.1016/j.media.2021.102221

Ahn SS, Ta K, Lu A, Stendahl JC, Sinusas AJ, Duncan JS (2020) Unsupervised motion tracking of left ventricle in echocardiography. In: Proceedings of the SPIE 11319, medical imaging 2020: ultrasonic imaging and tomography, SPIE, Houston, 16 March 2020

Yagasaki S, Koizumi N, Nishiyama Y, Kondo R, Imaizumi T, Matsumoto N et al (2020) Estimating 3-dimensional liver motion using deep learning and 2-dimensional ultrasound images. Int J Comput Assisted Radiol Surg 15(12):1989–1995. https://doi.org/10.1007/s11548-020-02265-1

Evain E, Faraz K, Grenier T, Garcia D, De Craene M, Bernard O (2020) A pilot study on convolutional neural networks for motion estimation from ultrasound images. IEEE Trans Ultrason, Ferroelectr, Freq Control 67(12):2565–2573. https://doi.org/10.1109/TUFFC.2020.2976809

Liang JM, Yang X, Huang YH, Li HM, He SC, Hu XD et al (2022) Sketch guided and progressive growing GAN for realistic and editable ultrasound image synthesis. Med Image Anal 79:102461. https://doi.org/10.1016/j.media.2022.102461

Guo Q, Dong ZW, Jiang LX, Zhang L, Li ZY, Wang DM (2022) Assessing whether morphological changes in axillary lymph node have already occurred prior to metastasis in breast cancer patients by ultrasound. Medicina 58(11):1674. https://doi.org/10.3390/medicina58111674

Yu TF, He W, Gan CG, Zhao MC, Zhu Q, Zhang W et al (2021) Deep learning applied to two-dimensional color Doppler flow imaging ultrasound images significantly improves diagnostic performance in the classification of breast masses: a multicenter study. Chin Med J 134(4):415–424. https://doi.org/10.1097/CM9.0000000000001329

Shen YQ, Shamout FE, Oliver JR, Witowski J, Kannan K, Park J et al (2021) Artificial intelligence system reduces false-positive findings in the interpretation of breast ultrasound exams. Nat Commun 12(1):5645. https://doi.org/10.1038/s41467-021-26023-2

Sultan LR, Schultz SM, Cary TW, Sehgal CM (2018) Machine learning to improve breast cancer diagnosis by multimodal ultrasound. In: Proceedings of the 2018 IEEE international ultrasonics symposium, IEEE, Kobe, 22–25 October 2018. https://doi.org/10.1109/ultsym.2018.8579953

Wang B, Perronne L, Burke C, Adler RS (2021) Artificial intelligence for classification of soft-tissue masses at US. Radiol: Artif Intell 3(1):e200125. https://doi.org/10.1148/ryai.2020200125

Wu XL, Li MY, Cui XW, Xu GP (2022) Deep multimodal learning for lymph node metastasis prediction of primary thyroid cancer. Phys Med Biol 67(3):035008. https://doi.org/10.1088/1361-6560/ac4c47

Destrempes F, Gesnik M, Chayer B, Roy-Cardinal MH, Olivié D, Giard JM et al (2022) Quantitative ultrasound, elastography, and machine learning for assessment of steatosis, inflammation, and fibrosis in chronic liver disease. PLoS One 17(1):e0262291. https://doi.org/10.1371/journal.pone.0262291

Zhang B, Tian J, Pei SF, Chen YB, He X, Dong YH et al (2019) Machine learning-assisted system for thyroid nodule diagnosis. Thyroid 29(6):858–867. https://doi.org/10.1089/thy.2018.0380

Qin PL, Wu K, Hu YS, Zeng JC, Chai XF (2020) Diagnosis of benign and malignant thyroid nodules using combined conventional ultrasound and ultrasound elasticity imaging. IEEE J Biomed Health Inf 24(4):1028–1036. https://doi.org/10.1109/JBHI.2019.2950994

Zhao CK, Ren TT, Yin YF, Shi H, Wang HX, Zhou BY et al (2021) A comparative analysis of two machine learning-based diagnostic patterns with thyroid imaging reporting and data system for thyroid nodules: diagnostic performance and unnecessary biopsy rate. Thyroid 31(3):470–481. https://doi.org/10.1089/thy.2020.0305

Liu TT, Ge XF, Yu JH, Guo Y, Wang YY, Wang WP et al (2018) Comparison of the application of B-mode and strain elastography ultrasound in the estimation of lymph node metastasis of papillary thyroid carcinoma based on a radiomics approach. Int J Comput Assisted Radiol Surg 13(10):1617–1627. https://doi.org/10.1007/s11548-018-1796-5

Park SY, Kang BJ (2021) Combination of shear-wave elastography with ultrasonography for detection of breast cancer and reduction of unnecessary biopsies: a systematic review and meta-analysis. Ultrasonography 40(3):318–332. https://doi.org/10.14366/usg.20058

Li CX, Li JJ, Tan T, Chen K, Xu Y, Wu R (2021) Application of ultrasonic dual-mode artificially intelligent architecture in assisting radiologists with different diagnostic levels on breast masses classification. Diagn Interv Radiol 27(3):315–322. https://doi.org/10.5152/dir.2021.20018

Kim MY, Kim SY, Kim YS, Kim ES, Chang JM (2021) Added value of deep learning-based computer-aided diagnosis and shear wave elastography to b-mode ultrasound for evaluation of breast masses detected by screening ultrasound. Medicine 100(31):e26823. https://doi.org/10.1097/MD.0000000000026823

Chung YE, Kim KW (2015) Contrast-enhanced ultrasonography: advance and current status in abdominal imaging. Ultrasonography 34(1):3–18. https://doi.org/10.14366/usg.14034

Qu MJ, Jia ZH, Sun LP, Wang H (2021) Diagnostic accuracy of three-dimensional contrast-enhanced ultrasound for focal liver lesions: A protocol for systematic review and meta-analysis. Medicine 100(51):e28147. https://doi.org/10.1097/MD.0000000000028147

Kadyrleev RA, Busko EA, Kostromina EV, Shevkunov LN, Kozubova KV, Bagnenko SS (2021) Diagnostic algorithm of solid kidney lesions with contrast-enhanced ultrasound. Diagn Radiol Radiother 12(1):14–23

Ashrafi AN, Nassiri N, Gill IS, Gulati M, Park D, de Castro Abreu AL (2018) Contrast-enhanced transrectal ultrasound in focal therapy for prostate cancer. Curr Urol Rep 19(10):87. https://doi.org/10.1007/s11934-018-0836-6

Zhou SC, Le J, Zhou J, Huang YX, Qian L, Chang C (2020) The role of contrast-enhanced ultrasound in the diagnosis and pathologic response prediction in breast cancer: a meta-analysis and systematic review. Clin Breast Cancer 20(4):e490–e509. https://doi.org/10.1016/j.clbc.2020.03.002

Maghsoudinia F, Tavakoli MB, Samani RK, Hejazi SH, Sobhani T, Mehradnia F et al (2021) Folic acid-functionalized gadolinium-loaded phase transition nanodroplets for dual-modal ultrasound/magnetic resonance imaging of hepatocellular carcinoma. Talanta 228:122245. https://doi.org/10.1016/j.talanta.2021.122245

Lin HM, Chen Y, Xie SY, Yu MM, Deng DQ, Sun T et al (2022) A dual-modal imaging method combining ultrasound and electromagnetism for simultaneous measurement of tissue elasticity and electrical conductivity. IEEE Trans Biomed Eng 69(8):2499–2511. https://doi.org/10.1109/TBME.2022.3148120

Zhang YC, Wang LD (2022) Adaptive dual-speed ultrasound and photoacoustic computed tomography. Photoacoustics 27:100380. https://doi.org/10.1016/j.pacs.2022.100380

Han M, Choi W, Ahn J, Ryu H, Seo Y, Kim C (2020) In vivo dual-modal photoacoustic and ultrasound imaging of sentinel lymph nodes using a solid-state dye laser system. Sensors 20(13):3714. https://doi.org/10.3390/s20133714

Zhang YC, Wang Y, Lai PX, Wang LD (2022) Video-rate dual-modal wide-beam harmonic ultrasound and photoacoustic computed tomography. IEEE Trans Med Imaging 41(3):727–736. https://doi.org/10.1109/TMI.2021.3122240

Aimacaña CMC, Perez DAQ, Pinto SR, Debut A, Attia MF, Santos-Oliveira R et al (2021) Polytetrafluoroethylene-like nanoparticles as a promising contrast agent for dual modal ultrasound and X-ray bioimaging. ACS Biomater Sci Eng 7(3):1181–1191. https://doi.org/10.1021/acsbiomaterials.0c01635

Ke HT, Yue XL, Wang JR, Xing S, Zhang Q, Dai ZF et al (2014) Gold nanoshelled liquid perfluorocarbon nanocapsules for combined dual modal ultrasound/CT imaging and photothermal therapy of cancer. Small 10(6):1220–1227. https://doi.org/10.1002/smll.201302252

Song YX, Zheng J, Lei L, Ni ZP, Zhao BL, Hu Y (2022) CT2US: Cross-modal transfer learning for kidney segmentation in ultrasound images with synthesized data. Ultrasonics 122:106706. https://doi.org/10.1016/j.ultras.2022.106706

Acknowledgements

Not applicable.

Funding

This study was supported by the National Natural Science Foundation of China, Nos. 92159305, 92259303, 62027901, 81930053, and 82272029; Beijing Science Fund for Distinguished Young Scholars, No. JQ22013; and Excellent Member Project of the Youth Innovation Promotion Association CAS, No. 2016124.

Author information

Contributions

HYZ designed the study and completed the final manuscript; ZLM wrote the dual-/multimodal ultrasound and AI-powered ultrasound image analysis; JYR wrote the dynamic ultrasound; YQM wrote the BUS; and KW supervised the study. All the authors have read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, H., Meng, Z., Ru, J. et al. Application and prospects of AI-based radiomics in ultrasound diagnosis. Vis. Comput. Ind. Biomed. Art 6, 20 (2023). https://doi.org/10.1186/s42492-023-00147-2

DOI: https://doi.org/10.1186/s42492-023-00147-2