Abstract

This paper presents a mathematical analysis of the synchronization problem for stochastic reaction-diffusion Cohen-Grossberg neural networks with Neumann boundary conditions. By introducing several important inequalities and using the Lyapunov functional technique, new synchronization criteria in terms of the p-norm are derived under periodically intermittent control. Some previously known results in the literature are improved, and some restrictions on the mixed time-varying delays are removed. The obtained criteria are used to analyze the influence of diffusion coefficients, diffusion space, stochastic perturbation and control width on synchronization. Numerical simulations are presented to show the feasibility of the theoretical results.

1 Introduction

Synchronization, introduced by Pecora and Carroll [1], means that two or more systems, each either chaotic or periodic, share a common dynamic behavior. Synchronization problems of Cohen-Grossberg neural networks have been widely studied because of their extensive applications in secure communication, information processing and the design of chaos generators. To date, various control methods have been introduced to achieve synchronization of neural networks, including sampled-data control [2], pinning control [3], adaptive control [4], sliding mode control [5], impulsive control [6], periodically intermittent control [7–10], and so on.

In the process of signal transmission, an external control should be loaded when the signal becomes weak due to diffusion, and be unloaded when the signal strength reaches the upper bound considering the cost. Hence, discontinuous control methods including impulsive control and intermittent control are more economical and effective than continuous control methods. For impulsive control, the external control is added only at certain points and the control width is zero, but the control width of the intermittent control is non-zero. Therefore, intermittent control can be seen as the transition from impulsive control to continuous control, and it has the advantages of these two methods.

Many results with respect to the synchronization of Cohen-Grossberg neural networks have been obtained based on periodically intermittent control in recent years (see, for example, [11–18]). In [15], the exponential synchronization of Cohen-Grossberg neural networks with time-varying delays was discussed based on periodically intermittent control:

where \(u(t)=(u_{1}(t),u_{2}(t),\ldots,u_{n}(t))^{\mathrm{T}}\) denotes the state of the drive system at time t; \(v(t)=(v_{1}(t),v_{2}(t),\ldots ,v_{n}(t))^{\mathrm{T}}\) denotes the state of the response system at time t; \(\alpha_{i}(\cdot)\) represents the amplification function of the ith neuron; \(\beta_{i}(\cdot)\) is the appropriately behaved function of the ith neuron; \(f_{j}(\cdot)\) denotes the activation function of the jth neuron; \(a_{ij}\) is the connection strength between the jth neuron and the ith neuron; \(b_{ij}\) is the discrete time-varying delay connection strength of the jth neuron on the ith neuron; \(0<\tau_{j}(t)\leq\tau\) is the discrete time-varying delay of jth neuron and corresponds to finite speed of axonal signal transmission at time t; \(J_{i}\) denotes the input from outside of the networks; \(K_{i}(t)\) is a periodically intermittent controller. The exponential synchronization criteria were obtained by using some analysis techniques.

Only discrete time-varying delays were considered in [15]. In fact, neural signals propagate along a multitude of parallel pathways with a variety of axon sizes and lengths over a period of time, and distributed delays are introduced to describe this property and to reduce the influence of the distant past behavior of the state. In addition, stochastic effects on synchronization should be considered in real neural networks, since synaptic transmission is a noisy process brought about by random fluctuations in neurotransmitter release and other random causes [16]. Moreover, the dynamic behavior of neural networks derives from the interactions of neurons, which depend not only on time but also on the spatial position of each neuron [17]. Hence, it is essential to study state variables that vary with both time and space, especially when electrons are moving in nonuniform electromagnetic fields. Such phenomena can be described by reaction-diffusion equations. In conclusion, more realistic neural network models should account for the effects of mixed time-varying delays, stochastic perturbation and spatial diffusion.

In [18], Gan studied the synchronization problem for Cohen-Grossberg neural networks with mixed time-varying delays, stochastic noise disturbance and spatial diffusion:

where \(u(t,x)=(u_{1}(t,x),u_{2}(t,x),\ldots,u_{n}(t,x))^{\mathrm{T}}\) denotes the state of the drive system at time t and in space x; \(v(t,x)=(v_{1}(t,x),v_{2}(t,x),\ldots,v_{n}(t,x))^{\mathrm{T}}\) denotes the state of the response system at time t and in space x; \(\Omega=\{ x=(x_{1}, x_{2}, \ldots, x_{l^{\ast}})^{\mathrm{T}}| \vert x_{k} \vert < m_{k}, k=1,2,\ldots , l^{\ast}\}\subset R^{l^{\ast}}\) is a bounded compact set with smooth boundary ∂Ω and mes \(\Omega>0\); \(e(t,x)=(e_{1}(t,x),e_{2}(t,x),\ldots, e_{n}(t,x))=v(t,x)-u(t,x)\) is the synchronization error signal; \(d_{ij}\) is the distributed delay connection strength between the jth neuron and the ith neuron; \(f_{j}(\cdot)\), \(g_{j}(\cdot)\) and \(h_{j}(\cdot)\) denote the activation functions; \(D_{ik}>0\) is the diffusion coefficient along the ith neuron; \(0<\tau_{ij}^{\ast}(t)\leq\tau^{\ast}\) is the distributed time-varying delay between the jth neuron and the ith neuron; \(\sigma=(\sigma_{ij})_{n\times n}\) is the noise intensity matrix; \(K_{i}(t,x)\) is an intermittent controller; \(\omega(t)=(\omega _{1}(t),\omega_{2}(t),\ldots,\omega_{n}(t))^{T}\in\mathbb{R}^{n}\) is the stochastic disturbance, which is a Brownian motion defined on the complete probability space \((\Omega,\mathcal{F},\mathcal{P})\) (where Ω is the sample space, \(\mathcal{F}\) is the σ-algebra of subsets of the sample space and \(\mathcal{P}\) is the probability measure on \(\mathcal{F}\)), and

where \(\mathbf{E}\{\cdot\}\) is the mathematical expectation operator with respect to the given probability measure \(\mathcal{P}\). By using Lyapunov theory and stochastic analysis methods, sufficient conditions were given to realize the exponential synchronization based on p-norm.

The exponential synchronization criteria obtained in [18] assumed that \(\dot{\tau}_{ij}(t)\leq\varrho<1\) and \(\dot{\tau}^{\ast}_{ij}(t)\leq\varrho^{\ast}<1\) for all t, that is, the time-varying delays were slowly varying. In practice, delays may vary slowly or quickly, so these restrictions are unnecessary and impractical. Furthermore, the boundary conditions in [18] are assumed to be Dirichlet boundary conditions. In engineering applications such as thermodynamics, Neumann boundary conditions need to be considered. As far as we know, there are few results concerning the synchronization of stochastic reaction-diffusion Cohen-Grossberg neural networks with Neumann boundary conditions.

Based on the above discussion, we are concerned with the combined effects of mixed time-varying delays, stochastic perturbation and spatial diffusion on the exponential synchronization of Cohen-Grossberg neural networks with Neumann boundary conditions in terms of p-norm via periodically intermittent control to improve the previous results. To this end, we discuss the following neural networks:

where \(\Delta=\sum_{k=1}^{l^{\ast}}\frac{\partial^{2}}{\partial x_{k}^{2}}\) is the Laplace operator; \(D_{i}>0\) is the diffusion coefficient along the ith neuron.
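For readers who want to experiment numerically, the diffusion term \(D_{i}\Delta u_{i}\) with zero-flux (Neumann) boundary conditions can be approximated on a uniform grid. The following is a minimal sketch for one space dimension, assuming a second-order central difference with mirrored ghost points; the function name `neumann_laplacian_1d` and the grid are illustrative choices and are not taken from the paper.

```python
import math


def neumann_laplacian_1d(u, h):
    """Second-order finite-difference Laplacian of grid values u (spacing h).

    Zero-flux boundaries are imposed with mirrored ghost points,
    i.e. u[-1] := u[1] and u[n] := u[n-2] (an assumed discretization).
    """
    n = len(u)
    lap = [0.0] * n
    for j in range(n):
        left = u[j - 1] if j > 0 else u[1]
        right = u[j + 1] if j < n - 1 else u[n - 2]
        lap[j] = (left - 2.0 * u[j] + right) / (h * h)
    return lap


# Illustrative check on [-5, 5]: cos(pi * (x + 5) / 10) has zero normal
# derivative at both ends and satisfies -u'' = (pi / 10)^2 * u.
n_points = 201
h = 10.0 / (n_points - 1)
xs = [-5.0 + j * h for j in range(n_points)]
u = [math.cos(math.pi * (x + 5.0) / 10.0) for x in xs]
lap = neumann_laplacian_1d(u, h)
max_residual = max(abs(l + (math.pi / 10.0) ** 2 * v) for l, v in zip(lap, u))
```

The residual `max_residual` measures how closely the discrete operator reproduces the continuous eigenvalue relation on this grid; it shrinks as the grid is refined.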

The boundary conditions and the initial values of system (1.6) take the form

and

where \(\bar{\tau}=\max\{\tau,\tau^{*}\}\), \(\phi(s,x)=(\phi _{1}(s,x),\phi_{2}(s,x),\ldots,\phi_{n}(s,x))^{\mathrm{T}}\in\mathcal {C}\triangleq\mathcal{C}([-\bar{\tau},0)\times\Omega, \mathbb{R}^{n})\) which denotes continuous functions with p-norm:

System (1.6) is called the drive system. The response system is described as follows:

The boundary conditions and initial values of the response system (1.9) are given in the following forms:

and

where \(\psi(s,x)=(\psi_{1}(s,x), \psi_{2}(s,x),\ldots, \psi_{n}(s,x))\in \mathcal{C}\) is bounded and continuous.

Let \(K(t,x)=(K_{1}(t,x),K_{2}(t,x),\ldots,K_{n}(t,x))\) be an intermittent controller defined by

where \(m\in N=\{0,1,2,\ldots\}\), \(k_{i}>0\) (\(i\in\ell\)) denote the control strength, \(T>0\) denotes the control period and \(0<\delta<T\) denotes the control width.
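The switching rule behind a periodically intermittent controller can be sketched in a few lines. The sketch assumes the common feedback form in which \(-k_{i}(v_{i}-u_{i})\) acts on the work intervals \([mT, mT+\delta)\) and the control is zero on the rest intervals \([mT+\delta,(m+1)T)\); this form should be checked against (1.12), and the function names are hypothetical.

```python
def control_is_active(t, period, width):
    """Return True when t lies in a work interval [m*T, m*T + delta)."""
    if not 0.0 < width < period:
        raise ValueError("control width must satisfy 0 < delta < T")
    return (t % period) < width


def intermittent_control(t, error, gains, period, width):
    """Assumed controller: K_i = -k_i * e_i on work intervals, 0 otherwise."""
    if control_is_active(t, period, width):
        return [-k * e for k, e in zip(gains, error)]
    return [0.0 for _ in error]


# Example with the control parameters used later in Section 4.
active = intermittent_control(3.0, [0.5, -0.2], [10.0, 10.0], 10.0, 9.8)
resting = intermittent_control(9.9, [0.5, -0.2], [10.0, 10.0], 10.0, 9.8)
```

Here `active` carries the proportional feedback because \(t=3\) falls inside the first work interval, while `resting` is zero because \(t=9.9\) falls inside the rest interval \([9.8, 10)\).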

In this paper, the intermittent controller \(K(t,x)\) is designed to achieve exponential synchronization of systems (1.6) and (1.9). The model is derived under the following assumptions.

(H1) There exist positive constants \(L_{i}\), \(L_{i}^{\ast}\), \(M_{i}\), \(M_{i}^{\ast}\), \(N_{i}\), and \(N_{i}^{\ast}\) such that:

where \(\hat{v}_{i}\), \(\check{v}_{i}\in\mathbb{R}\), \(i\in\ell\).

(H2) There exist positive constants \(\bar{\alpha}_{i}\) and \(\alpha_{i}^{\ast}\) such that

for all \(\hat{v}_{i}\), \(\check{v}_{i}\in\mathbb{R}\), \(i\in\ell\).

(H3) There exist positive constants \(\gamma_{i}\) such that

for all \(\hat{v}_{i}, \check{v}_{i}\in\mathbb{R}\), and \(\hat{v}_{i}\neq \check{v}_{i}\), \(i\in\ell\).

(H4) There exist positive constants \(\eta_{ij}\) such that

for all \(\tilde{v}_{1},\tilde{v}_{2},\hat{v}_{1},\hat{v}_{2},\check {v}_{1},\check{v}_{2}\in\mathbb{R}\), and \(\sigma_{ij}(0,0,0)=0\), \(i,j\in \ell\).

The organization of this paper is as follows. In Section 2, some definitions and lemmas which will be essential to our derivation are introduced. In Section 3, by using Lyapunov functional technique, some new criteria are obtained to achieve the exponential synchronization of systems (1.6) and (1.9). Some numerical examples are given to verify the feasibility of the theoretical results in Section 4. This paper ends with a brief conclusion in Section 5.

2 Preliminaries

In this section, we present some definitions and lemmas used in the proof of the main results.

Definition 2.1

The response system (1.9) and the drive system (1.6) are said to be exponentially synchronized under the periodically intermittent controller (1.12) based on the p-norm if there exist constants \(\mu>0\) and \(M\geq1\) such that

where \(u(t,x)\) and \(v(t,x)\) are solutions of systems (1.6) and (1.9) with different initial functions \(\phi, \psi\in\mathcal{C}\), respectively, and

Lemma 2.1

Wang [19], Itô’s formula

Let \(x(t)\) (\(t\geq 0\)) be an Itô process, and

where \(f\in\mathcal{L}^{1}(R^{+},R^{n})\) (\(\mathcal{L}^{1}\) is the space of absolutely integrable functions) and \(g\in\mathcal{L}^{2}(R^{+},R^{n\times m})\) (\(\mathcal{L}^{2}\) is the space of square integrable functions). If \(V(x,t)\in C^{2,1}(R^{n}\times R^{+};R)\) (\(C^{2,1}(R^{n}\times R^{+};R)\) is the family of all nonnegative functions on \(R^{n}\times R^{+}\) which are twice continuously differentiable in x and once differentiable in t), then \(V(x(t),t)\) is again an Itô process, and

where

Lemma 2.2

Gu [20]

Suppose that Ω is a bounded domain of \(R^{l^{\ast}}\) with a smooth boundary ∂Ω, and let \(u(x)\), \(v(x)\) be real-valued functions belonging to \(\mathcal{C}^{2}(\Omega\cup \partial\Omega)\). Then

where \(\nabla=(\frac{\partial}{\partial x_{1}}, \frac{\partial }{\partial x_{2}}, \ldots,\frac{\partial}{\partial x_{l^{\ast}}})^{\mathrm{T}}\) is the gradient operator.

Lemma 2.3

Let \(p\geq2\) be a positive integer and Ω be a bounded domain of \(R^{l^{\ast}}\) with a smooth boundary ∂Ω. Let \(\varphi(x)\in\mathcal{C}^{1}(\Omega)\) be a real-valued function with \(\frac{\partial\varphi(x)}{\partial\mathbf{n}}| _{\partial\Omega }=\mathbf{0}\). Then

where \(\lambda_{1}\) is the smallest positive eigenvalue of the Neumann boundary problem

Proof

According to the eigenvalue theory of elliptic operators, the Laplacian −Δ on Ω with Neumann boundary conditions is a self-adjoint operator with compact resolvent, so there exists a sequence of nonnegative eigenvalues \(0=\lambda_{0}<\lambda_{1}<\lambda_{2}<\cdots\) (with \(\lim_{i\to\infty}\lambda_{i}=+\infty\)) and a sequence of corresponding eigenfunctions \(\vartheta_{0}(x), \vartheta_{1}(x), \vartheta_{2}(x), \ldots\) for the Neumann boundary problem (2.4), that is,

Integrate the second equation of (2.5) over Ω after multiplying by \(\vartheta_{m}^{p-1}(x)\) (\(m=1,2,\ldots\)). Then, by Green’s formula (2.2), we obtain

It is easy to show that (2.6) is also true for \(m=0\).

The sequence of eigenfunctions \(\{\vartheta_{i}(x)\}_{i\geq0}\) contains an orthonormal basis of \(\mathcal{L}^{2}(\Omega)\). Hence, for any \(\varphi(x)\in\mathcal{L}^{2}(\Omega)\), there exists a sequence of constants \(\{c_{m}\}_{m\geq0}\) such that

It follows from (2.6) and (2.7) that

□

Remark 1

If \(p=2\), the integral inequality (2.3) is the Poincaré integral inequality in [21]. The smallest positive eigenvalue \(\lambda_{1}\) of the Neumann boundary problem (2.4) is determined by the boundary of Ω [21]. If \(\Omega=\{ x=(x_{1}, x_{2}, \ldots, x_{l^{\ast}})^{\mathrm{T}}| m_{k}^{-}\leq x_{k}\leq m_{k}^{+}, k=1,2,\ldots, l^{\ast}\}\subset R^{l^{\ast}}\), then

Lemma 2.4

Mei [22]

Let \(p\geq2\) and \(a,b,h>0\). Then

3 Exponential synchronization criterion

In this section, the exponential synchronization criterion for the drive system (1.6) and the response system (1.9) is obtained by suitably designing T, δ and \(k_{i}\). For convenience, the following notation is introduced.

Denote

where \(\xi_{i}\), \(\zeta_{i}\), \(\rho_{i}\), \(\varrho_{i}\), \(\epsilon_{i}\) and \(\varsigma _{i}\) are positive constants.

Consider the following function:

where \(\varepsilon\geq0\), \(w=w_{1}\tau^{\ast}+w_{2}+w_{3}\), \(\kappa=\min_{i\in\ell}\{\kappa_{i}\}\). If the following holds:

(H5) \(\kappa>w\), then \(F(0)<0\), and \(F(\varepsilon)\rightarrow+\infty\) as \(\varepsilon\rightarrow+\infty\). Since \(F(\varepsilon)\) is continuous on \([0,+\infty)\) and \(F'(\varepsilon)>0\), the intermediate value theorem implies that there exists a unique positive constant \(\bar{\varepsilon}\) such that \(F(\bar{\varepsilon})=0\).
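Because \(F\) is continuous and strictly increasing with \(F(0)<0\), the root \(\bar{\varepsilon}\) can be located numerically by bisection. The sketch below illustrates the idea; the function `example_F` is a hypothetical stand-in with the same qualitative properties (it is not the paper's \(F\)), and its constants are arbitrary.

```python
import math


def unique_positive_root(F, tol=1e-12):
    """Bisection for the unique root of a continuous, increasing F with F(0) < 0."""
    if F(0.0) >= 0.0:
        raise ValueError("requires F(0) < 0, which is what (H5) guarantees")
    lo, hi = 0.0, 1.0
    while F(hi) <= 0.0:  # expand the bracket until F changes sign
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if F(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def example_F(eps, kappa=2.0, w=1.0, delay=1.0):
    """Hypothetical stand-in: increasing in eps, negative at 0 when kappa > w."""
    return eps - kappa + w * math.exp(eps * delay)


eps_bar = unique_positive_root(example_F)
```

Monotonicity is what makes the bracket expansion safe: once a point with \(F>0\) is found, the root is guaranteed to lie between it and the previous point.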

Theorem 3.1

Under assumptions (H1)-(H5), the response system (1.9) and the drive system (1.6) are exponentially synchronized under the periodically intermittent controller (1.12) based on the p-norm if the following condition holds:

(H6) \(\theta>0\), \(\bar{\varepsilon}-\frac{(T-\delta )\theta}{T}>0\), where \(\theta=\kappa+\max_{i\in\ell}\{ -\kappa_{i}+pk_{i}\}\).

Proof

Subtracting (1.6) from (1.9), we obtain the error system

where

Define

For \((t,x)\in[mT, mT+\delta)\times\Omega\), by (1.5), Itô’s differential formula (2.1) and the Dini right-upper derivative, we get

If (H1)-(H4) hold, it is easy to show that

From the boundary conditions (1.7), (1.10) and Lemma 2.3, we get

It follows from Lemma 2.4 that

Substituting (3.6)-(3.7) into (3.5), we have

where

Similarly, for \((t,x)\in[mT+\delta, (m+1)T)\times\Omega\), we derive that

Denote \(Q(t)=H(t)-hU\), where

Evidently,

Now, we prove that

Otherwise, there exists \(t_{0}\in[0,\delta)\) such that

It follows from (3.8) that

By (3.12), we conclude that

Hence, we know from (3.13) and (3.14) that

which contradicts (3.12). Then (3.11) holds.

Next, we show that

Otherwise, there exists \(t_{1}\in[\delta,T)\) such that

By (3.17), we have

For \(\bar{\tau}>0\), if \((t_{1}-\bar{\tau})\in[\delta, t_{1})\), we derive from (3.18) that

If \(t_{1}-\bar{\tau}\in[-\bar{\tau},\delta)\), by (3.11) and (3.18), we see that

Therefore, for any \(\bar{\tau}>0\),

Then, we conclude from (3.9), (3.18) and (3.19) that

which contradicts (3.17). Then inequality (3.16) holds. That is, for \(t\in[\delta, T)\),

On the other hand, it follows from (3.10) and (3.11) that for \(t\in [-\bar{\tau}, \delta)\),

Therefore, for all \(t\in[-\bar{\tau}, T)\),

Similar to the proof of (3.11) and (3.16), respectively, we can show that

Using the mathematical induction method, the following inequalities can be proved to be true for any nonnegative integer l.

If \(t\in[lT, lT+\delta)\), then \(l\leq t/T\), we derive from (3.20) that

If \(t\in[lT+\delta,(l+1)T)\), then \(l+1> t/T\), we conclude from (3.21) that

Hence, for any \(t\in[0,+\infty)\),

Note that

From (3.22) and (3.23), we have

where

Hence, the response system (1.9) and the drive system (1.6) are exponentially synchronized under the periodically intermittent controller (1.12) based on the p-norm. This completes the proof of Theorem 3.1. □

Remark 2

In this paper, by introducing the important inequality (2.3) in Lemma 2.3 and using Lyapunov functional theory, exponential synchronization criteria that depend on the diffusion coefficients and the diffusion space are derived for the proposed Cohen-Grossberg neural networks with Neumann boundary conditions under periodically intermittent control. References [23–26] also studied the synchronization of reaction-diffusion neural networks with Neumann boundary conditions. However, the synchronization criteria obtained in these papers are all independent of the diffusion coefficients and the diffusion space, so the influence of the reaction-diffusion terms on synchronization cannot be seen from them. Hence, our results have wider application prospects.

Remark 3

In [13, 14] and [18], the authors have obtained the exponential synchronization criteria for neural networks by assuming that \(\dot{\tau}_{ij}(t)\leq\varrho<1\) and \(\dot{\tau}^{\ast}_{ij}(t)\leq\varrho^{\ast}<1\) for all t. These restrictions are removed in this paper. Therefore, the synchronization criteria obtained in this paper are less conservative.

4 Numerical simulations

In this section, some examples are given to demonstrate the feasibility of the proposed synchronization criteria in Theorem 3.1.

System (1.6) with \(n=2\), \(k=1\) takes the form

where \(\alpha_{1}(u_{1}(t,x))=0.7+\frac{0.2}{1+u_{1}^{2}(t,x)}\), \(\alpha_{2}(u_{2}(t,x))=1+\frac{0.1}{1+u_{2}^{2}(t,x)}\), \(\beta_{1}(u_{1}(t,x))=1.4u_{1}(t,x)\), \(\beta_{2}(u_{2}(t,x))=1.6u_{2}(t,x)\), \(f_{j}(u_{j}(t,x))=g_{j}(u_{j}(t,x))=h_{j}(u_{j}(t,x))=\mathrm{tanh}(u_{j}(t,x))\), \(\tau(t)=0.55\pi+0.1\pi\cos t\), \(\tau^{\ast}(t)=0.102+0.01\sin (t-0.1)\). The parameters of (4.1) are assumed to be \(D_{1}=0.1\), \(D_{2}=0.1\), \(a_{11}=1.5\), \(a_{12}=-0.25\), \(a_{21}=3.2\), \(a_{22}=1.9\), \(b_{11}=-1.8\), \(b_{12}=-1.3\), \(b_{21}=-0.2\), \(b_{22}=2.5\), \(d_{11}=0.9\), \(d_{12}=-0.15\), \(d_{21}=0.2\), \(d_{22}=-0.2\), \(x\in\Omega=[-5,5]\). The initial conditions of system (4.1) are chosen as

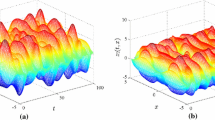

where \((s,x)\in[-0.65\pi,0]\times\Omega\). Numerical simulation illustrates that system (4.1) with boundary condition (1.7) and initial condition (4.2) shows chaotic phenomenon (see Figure 1).

Figure 1. Chaotic behavior of the Cohen-Grossberg neural network (4.1).

The response system takes the form

where

The initial conditions for response system (4.3) are chosen as

where \((s,x)\in[-0.65\pi,0]\times\Omega\).

It is easy to verify that \(L_{i}^{*}=M_{i}^{\ast}=N_{i}^{\ast}=L_{i}=M_{i}=N_{i}=1\), \(i=1,2\), \(\bar{\alpha}_{1}=0.2\), \(\bar{\alpha}_{2}=0.1\), \(\alpha_{1}^{\ast}=0.9\), \(\alpha_{2}^{\ast}=1.1\), \(\gamma_{1}=0.84\), \(\gamma_{2}=1.52\), \(\eta_{11}=0.12\), \(\eta_{12}=0\), \(\eta_{21}=0\), \(\eta_{22}=0.03\), \(\tau=0.65\pi\), \(\tau^{\ast}=0.112\), \(\lambda_{1}=0.0987\). Therefore, assumptions (H1)-(H4) hold for systems (4.1) and (4.3).
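Several of the constants above can be reproduced directly. The sketch below assumes the standard formula \((\pi/L)^{2}\) for the smallest positive Neumann eigenvalue of an interval of length \(L\), and takes the delay bounds as the maxima of \(\tau(t)\) and \(\tau^{\ast}(t)\); the variable names are illustrative.

```python
import math

# Smallest positive Neumann eigenvalue on Omega = [-5, 5] (interval length 10).
length = 5.0 - (-5.0)
lambda_1 = (math.pi / length) ** 2

# Upper bounds of the delays tau(t) = 0.55*pi + 0.1*pi*cos(t) and
# tau*(t) = 0.102 + 0.01*sin(t - 0.1): cosine and sine are at most 1.
tau = 0.55 * math.pi + 0.1 * math.pi
tau_star = 0.102 + 0.01

# Upper bounds of the amplification functions, attained at u = 0.
alpha_1_star = 0.7 + 0.2 / (1.0 + 0.0 ** 2)
alpha_2_star = 1.0 + 0.1 / (1.0 + 0.0 ** 2)

print(round(lambda_1, 4), round(tau / math.pi, 2), round(tau_star, 3))
```

The printed values agree with \(\lambda_{1}=0.0987\), \(\tau=0.65\pi\) and \(\tau^{\ast}=0.112\) as stated in the text.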

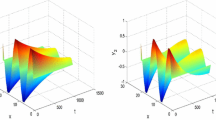

Let \(p=2\), \(\xi_{i}=\zeta_{i}=\rho_{i}=\varrho_{i}=\epsilon _{i}=\varsigma_{i}=1\) for \(i=1,2\), \(l=1,2\), and choose the control parameters \(k_{1}=10\), \(k_{2}=10\), \(\delta=9.8\), \(T=10\), then \(\kappa=10.0527\), \(w=3.2258\), \(\theta=20.0000\). Therefore, \(\bar{\varepsilon}= 0.5301\). Obviously, systems (4.1) and (4.3) satisfy assumptions (H5)-(H6). Hence, by Theorem 3.1, systems (4.1) and (4.3) are exponentially synchronized as shown in Figure 2 by numerical simulation.
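Conditions (H5) and (H6) can be checked numerically from the reported values. The following sketch takes \(\kappa\), \(w\), \(\theta\) and \(\bar{\varepsilon}\) as given above (they are not recomputed here) and also rearranges (H6) into a lower bound on the control width, \(\delta>T(1-\bar{\varepsilon}/\theta)\), which is valid because \(\theta>0\) and \(T>0\).

```python
# Values reported for the example (taken as given, not recomputed).
kappa, w, theta, eps_bar = 10.0527, 3.2258, 20.0, 0.5301
period, width = 10.0, 9.8  # T and delta

h5_holds = kappa > w
h6_margin = eps_bar - (period - width) * theta / period
h6_holds = theta > 0 and h6_margin > 0

# (H6) rearranged: delta > T * (1 - eps_bar / theta).
min_width = period * (1.0 - eps_bar / theta)

print(h5_holds, h6_holds, round(h6_margin, 4), round(min_width, 3))
```

With these values the margin in (H6) is about 0.13, and the rearranged bound indicates that, for \(T=10\), control widths below roughly 9.735 would no longer satisfy the sufficient condition (H6).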

Remark 4

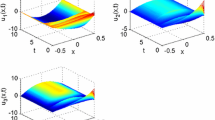

Clearly, the larger the control width δ is, the more easily assumption (H6) is satisfied. Hence, exponential synchronization of Cohen-Grossberg neural networks is more easily realized with a larger control width. Dynamic behaviors of the synchronization errors between systems (4.1) and (4.3) with different control widths are shown in Figure 3.

Remark 5

It follows from (H5) that the larger the stochastic perturbation is, the more difficult it is to satisfy (H5). Hence, exponential synchronization of Cohen-Grossberg neural networks is more easily achieved with a smaller stochastic perturbation. Dynamic behaviors of the synchronization errors between systems (4.1) and (4.3) with different stochastic perturbations are shown in Figure 4.

Remark 6

For a given control strength \(k_{i}=k\) (\(i\in\ell\)), as long as \(D_{i}\) is large enough or \(\vert x_{k} \vert \) is small enough, assumptions (H5) and (H6) can always be satisfied. Hence, increasing the diffusion coefficients or reducing the diffusion space is beneficial for the synchronization of reaction-diffusion Cohen-Grossberg neural networks. Dynamic behaviors of the errors between systems (4.1) and (4.3) with different diffusion coefficients and different diffusion spaces are shown in Figures 5 and 6.

Remark 7

In many cases, two-neuron networks show the same behavior as large-size networks, and many research methods used for two-neuron networks can be applied to large-size networks. Therefore, a two-neuron network can serve as an example to improve the understanding of our theoretical results. In addition, the parameter values are selected randomly, subject to the requirement that neural network (4.1) exhibits chaotic behavior.

5 Conclusion

In this paper, a periodically intermittent controller was designed to achieve exponential synchronization for stochastic reaction-diffusion Cohen-Grossberg neural networks with Neumann boundary conditions and mixed time-varying delays based on the p-norm. By constructing a Lyapunov functional, exponential synchronization criteria dependent on diffusion coefficients, diffusion space, stochastic perturbation and control width were obtained. Theoretical analysis revealed that stochastic reaction-diffusion Cohen-Grossberg neural networks can achieve exponential synchronization more easily by increasing diffusion coefficients and control width or reducing diffusion space and stochastic perturbation. Compared with the previous works [13, 14, 18, 23–26], the obtained synchronization criteria are less conservative and have wider application prospects.

Note that the important inequality (2.3) in Lemma 2.3 holds under the assumption that \(p\geq2\). Hence, our future work will study the exponential synchronization of stochastic reaction-diffusion Cohen-Grossberg neural networks with Neumann boundary conditions for \(p=1\) or \(p=\infty\).

References

Pecora, LM, Carroll, TL: Synchronization in chaotic systems. Phys. Rev. Lett. 64, 821-824 (1990)

Li, R, Wei, HZ: Synchronization of delayed Markovian jump memristive neural networks with reaction-diffusion terms via sampled data control. Int. J. Mach. Learn. Cyb. 7, 157-169 (2016)

Ghaffari, A, Arebi, S: Pinning control for synchronization of nonlinear complex dynamical network with suboptimal SDRE controllers. Nonlinear Dyn. 83, 1003-1013 (2016)

Wu, HQ, Zhang, XW, Li, RX: Synchronization of reaction-diffusion neural networks with mixed time-varying delays. Int. J. Control. Autom. Syst. 25, 16-27 (2015)

Cao, JB, Cao, BG: Neural network sliding mode control based on on-line identification for electric vehicle with ultracapacitor-battery hybrid power. Int. J. Control. Autom. Syst. 7, 409-418 (2009)

Zhao, H, Li, LX, Peng, HP: Impulsive control for synchronization and parameters identification of uncertain multi-links complex network. Nonlinear Dyn. 83, 1437-1451 (2016)

Mei, J, Jiang, MH, Wu, Z, Wang, XH: Periodically intermittent controlling for finite-time synchronization of complex dynamical networks. Nonlinear Dyn. 79, 295-305 (2015)

Liu, XW, Chen, TP: Cluster synchronization in directed networks via intermittent pinning control. IEEE Trans. Neural Netw. 22, 1009-1020 (2011)

Liu, XW, Chen, TP: Synchronization of nonlinear coupled networks via aperiodically intermittent pinning control. IEEE Trans. Neural Netw. Learn. Syst. 26, 113-126 (2015)

Liu, XW, Chen, TP: Synchronization of complex networks via aperiodically intermittent pinning control. IEEE Trans. Autom. Control 60, 3316-3321 (2015)

Yang, SJ, Li, CD, Huang, TW: Exponential stabilization and synchronization for fuzzy model of memristive neural networks by periodically intermittent control. Neural Netw. 75, 162-172 (2016)

Li, N, Cao, JD: Periodically intermittent control on robust exponential synchronization for switched interval coupled networks. Neurocomputing 131, 52-58 (2014)

Hu, C, Yu, J, Jiang, HJ, Teng, ZD: Exponential synchronization for reaction-diffusion networks with mixed delays in terms of p-norm via intermittent control. Neural Netw. 31, 1-11 (2012)

Gan, QT, Zhang, H, Dong, J: Exponential synchronization for reaction-diffusion neural networks with mixed time-varying delays via periodically intermittent control. Nonlinear Anal. Model. Control. 19, 1-25 (2014)

Yu, J, Hu, C, Jiang, HJ, Teng, ZD: Exponential synchronization of Cohen-Grossberg neural networks via periodically intermittent control. Neurocomputing 74, 1776-1782 (2011)

Haykin, S: Neural Networks. Prentice-Hall, Englewood Cliffs (1994)

Song, HH, Chen, DD, Li, WX, Qu, YB: Graph-theoretic approach to exponential synchronization of stochastic reaction-diffusion Cohen-Grossberg neural networks with time-varying delays. Neurocomputing 177, 179-187 (2016)

Gan, QT: Exponential synchronization of stochastic Cohen-Grossberg neural networks with mixed time-varying delays and reaction-diffusion via periodically intermittent control. Neural Netw. 31, 12-21 (2012)

Wang, K: Stochastic Biomathematics Model. The Science Publishing Company, Beijing (2010)

Gu, CH, Li, DQ, Chen, SX, Zheng, SM, Tan, YJ: Equations of Mathematical Physics. Higher Education Press, Beijing (2002)

Pan, J, Liu, XZ, Zhong, SM: Stability criteria for impulsive reaction-diffusion Cohen-Grossberg neural networks with time-varying delays. Math. Comput. Model. 51, 1037-1050 (2010)

Mei, J, Jiang, MH, Wang, B, Liu, Q, Xu, WM, Liao, T: Exponential p-synchronization of non-autonomous Cohen-Grossberg neural networks with reaction-diffusion terms via periodically intermittent control. Neural Process. Lett. 40, 103-126 (2014)

Yang, XY, Cui, BT: Asymptotic synchronization of a class of neural networks with reaction-diffusion terms and time-varying delays. Comput. Math. Appl. 52, 897-904 (2006)

Wang, YY, Cao, JD: Synchronization of a class of delayed neural networks with reaction-diffusion terms. Phys. Lett. A 369, 201-211 (2007)

Sheng, L, Yang, HZ, Lou, XY: Adaptive exponential synchronization of delayed neural networks with reaction-diffusion terms. Chaos Solitons Fractals 40, 930-939 (2009)

Liu, XW: Synchronization of linearly coupled neural networks with reaction-diffusion terms and unbounded time delays. Neurocomputing 73, 2681-2688 (2010)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (11371368).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors have read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, L., Xu, R. & Wang, Z. Synchronization analysis for stochastic reaction-diffusion Cohen-Grossberg neural networks with Neumann boundary conditions via periodically intermittent control. Adv Differ Equ 2017, 141 (2017). https://doi.org/10.1186/s13662-017-1193-3

DOI: https://doi.org/10.1186/s13662-017-1193-3