Abstract

The development of Open Source Software fundamentally depends on the participation and commitment of volunteer developers to progress on a particular task. Several works have presented strategies to increase the on-boarding and engagement of new contributors, but little is known about how these diverse groups of developers self-organise to work together. To understand this, one must consider that, on one hand, platforms like GitHub provide a virtually unlimited development framework: any number of actors can potentially join to contribute in a decentralised, distributed, remote, and asynchronous manner. On the other, however, it seems reasonable that some sort of hierarchy and division of labour must be in place to meet human biological and cognitive limits, and also to achieve some level of efficiency. These latter features (hierarchy and division of labour) should translate into detectable structural arrangements when projects are represented as developer-file bipartite networks. Thus, in this paper we analyse a set of popular open source projects from GitHub, focusing on three key properties: nestedness, modularity and in-block nestedness, which typify the emergence of heterogeneities among contributors, the emergence of subgroups of developers working on specific subgroups of files, and a mixture of the two, respectively. These analyses show that projects indeed evolve into internally organised blocks. Furthermore, the distribution of sizes of such blocks is bounded, connecting our results to the celebrated Dunbar number both in off- and on-line environments. Our conclusions create a link between bio-cognitive constraints, group formation and online working environments, opening up a rich scenario for future research on (online) work team assembly (e.g. size, composition, and formation). From a complex network perspective, our results pave the way for the study of time-resolved datasets, and the design of suitable models that can mimic the growth and evolution of OSS projects.

Introduction

Open Source Software (OSS) is a key actor in the current software market, and a major factor in the consistent growth of the software economy. The promise of OSS is better quality, higher reliability, more flexibility, lower cost, and an end to predatory vendor lock-in, according to the Open Source Initiative1. These goals are achieved thanks to the active participation of the community2: indeed, OSS projects depend on contributors to progress3,4.

The emergence of GitHub and other platforms as prominent public repositories, together with the availability of APIs to access comprehensive datasets on most projects’ history, has opened up opportunities for more systematic and inclusive analyses of how OSS communities operate. In recent years, research on OSS has left behind a rich trace of facts. For example, we now know that code contributions are highly skewed towards a small subset of projects5,6, with many projects quickly losing community interest and being abandoned at very early stages7. Moreover, most projects have a low truck factor, meaning that a small group of developers is responsible for a large share of code contributions8,9,10. This pushes projects to depend more and more on their ability to attract and retain occasional contributors (whose contributions are also known as “drive-by” commits11) who can complement the few core developers and help them to move the project forward. Along these lines, several works have focused on strategies to increase the on-boarding and engagement of such contributors (e.g., by using simple contribution processes12, extensive documentation13, gamification techniques14 or ad hoc on-boarding portals15, among others16). Other social, economic, and geographical factors affecting the development of OSS have been scrutinised as well; see Cosentino et al.17 for a thorough review.

Parallel to these macroscopic observations and statistical analyses, social scientists and complex network researchers have focused, in far fewer papers, on analysing how a diverse group of (distributed) contributors work together, i.e. the structural features of projects. Most often, these works pivot on the interactions between developers, building explicit or implicit collaborative networks, e.g. email exchanges18,19 and unipartite projections from the contributor-file bipartite network20, respectively. These developer social networks have been analysed to better understand the hierarchies that emerge among contributors, as well as to identify topical clusters, i.e. cohesive subgroups that manifest strongly in technical discussions. However, the behaviour of OSS communities cannot be fully understood by accounting only for the relations between project contributors, since their interactions are mostly mediated through the editing of project files (no direct communication is present between group members). To overcome this limitation, here we focus on studying the structural organisation of OSS projects as contributor-file bipartite graphs. Beyond the technical and methodological adaptations it requires, considering both elements of the OSS system allows us to retain valuable information (as opposed to collapsing it onto a unipartite network) and, above all, to recognise both classes as co-evolutionary units that place mutual constraints on each other.

Our interest in the structural features of OSS projects departs from some obvious, but worth highlighting, observations. First, public collaborative repositories place no limits, in principle, on the number of developers (and files) that a project can host. In this sense, platforms like GitHub resemble online social networks (e.g. Twitter or Facebook), in which the number of allowed connections is virtually unbounded. However, we know that other factors –biological, cognitive– set well-defined limits on the number of active social connections an individual can have21, also online22. But do these limits apply in collaborative networks, where contributors work remotely and asynchronously? Does a division of labour arise, even when interaction among developers is mostly indirect (that is, via the files that they edit in common)? And, even if specialised subgroups emerge (as some evidence already suggests, at least in developer social networks20), do these exhibit some sort of internal organisation?

To answer these questions, we will look at three structural arrangements which have been identified as signatures of self-organisation in both natural and artificial systems: nestedness23,24, modularity25,26,27, and in-block nestedness28,29,30. The first one, nestedness, is a suitable measure to quantify and visualise how the mentioned low truck factor, and the existence of core/drive-by developers31, translates into a project’s network structure. As for modularity, it provides a natural way to check whether OSS projects split into identifiable compartments, suggesting specialisation, and whether such compartments are subject to size limitations, in line with the mentioned bio-cognitive limits. Finally, modularity and nestedness are, to some extent, incompatible in the same network32,17.

These findings open up a rich scenario, with many questions lying ahead. On the OSS environment side, our results contribute to an understanding of how successful projects self-organise towards a modular architecture: large and complex tasks, involving hundreds (and even thousands) of files, appear to be broken down, presumably for the sake of efficiency and task specialisation (division of labour). Within this compartmentalisation, mature projects exhibit even further organisation, arranging the internal structure of subgroups in a nested way –something that is not captured by modularity optimisation alone. More broadly, our results demand further investigation, to understand their connection with the general topic of work team assembly (size, composition, and formation), and with the (urgent) issue of software sustainability38. OSS is a prominent example of the “tragedy of the commons”: companies and services benefit from the software, but there is a gross imbalance between those consuming the software and those building and maintaining it. Indeed, by being more aware of the internal self-organisation of their projects, owners and administrators may design strategies to optimise the collaborative efforts of the limited number (and availability) of project contributors. For instance, they can make efforts to drive the actual project’s block decomposition towards a pre-defined software architectural pattern; or ensure that, despite the nested organisation within blocks, all files in a block receive some minimal attention. More research on how to derive effective project management and leadership strategies from the current division of labour in a project is clearly needed and would be impactful.

Closer to the complex networks and data analysis tradition, our results leave room to widen the scope of this research. First, the present analysis could be complemented with weighted information. On first thought, this is within reach –one should just adapt the techniques and measurements to a weighted scenario. However, the problem is not so much methodological as semantic: the number of times that a contributor interacts with a file (commits, in Git jargon) is not necessarily an accurate measure of the amount of information contributed to the file. Second, future research should tackle a larger and more heterogeneous set of projects, including projects hosted on other platforms such as Bitbucket. Admittedly, this work has focused on successful projects, inasmuch as we only consider a few dozen among the most popular. Other sampling criteria could be discussed and considered in the future, to ensure a richer and more diverse project collection. Beyond the richness of the analysed dataset, the relationship between maturity and structural arrangement (especially in regard to the internal organisation of subgroups) clearly demands further work. Two obvious –and intimately related– lines of research concern time-resolved datasets, and the design of a suitable model that can mimic the growth and evolution of OSS projects. Regarding a temporal account of OSS projects, some challenges emerge due to the bursty development of projects in Git-like environments. For example, a fixed sliding-window scheme would probably harm, rather than improve, possible insights into software development. On the modelling side, further empirical knowledge is needed to better grasp the cooperative-competitive interactions within this type of project, which in turn determine the dynamical rules for contributors and files; these rules presumably differ largely between the two.

Material and Methods

Data

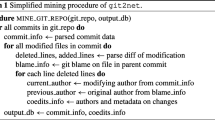

Our open source projects dataset was collected from GitHub39, a social coding platform which provides source code management and collaboration features such as bug tracking, feature requests, task management and wikis for every project. Given that GitHub users can star a project (to show interest in its development and follow its advances), we chose to measure the popularity of a GitHub project in terms of its number of stars (i.e. the more stars, the more popular the project is considered) and selected the 100 most popular projects. Other possible criteria –number of forks, open issues, watchers, commits and branches– are positively correlated with stars17, and so our proxy for mature, successful and active projects probably overlaps with other sampling procedures. The construction of the dataset involved three phases, namely: (1) cloning, (2) import, and (3) enrichment.

Cloning and import

After collecting the list of the 100 most popular projects in GitHub (at the moment of collecting the data) via its API40, we cloned them to obtain 100 Git repositories. We analysed the cloned repositories and discarded those not involving the development of a software artifact (e.g. collections of links or questions), rejecting 15 projects out of the initial 100. We then imported the remaining Git repositories into a relational database using the Gitana41 tool to facilitate the query and exploration of the projects for further analysis. In the Gitana database, Git repositories are represented in terms of users (i.e. contributors with a name and an email); files; commits (i.e. changes performed to the files); references (i.e. branches and tags); and file modifications. For two projects, the import process failed to complete due to missing or corrupted information in the source GitHub repository.
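As an illustration of the first step, the following Python sketch builds the search request reported in ref. 40 and extracts repository identifiers from a response body. The function names and the hand-written sample payload are assumptions made for this example, and no network call is performed; `items` and `full_name` are fields of the GitHub repository search response.

```python
import json
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://api.github.com/search/repositories"


def top_starred_url(per_page=100):
    """Build the search URL for the most-starred repositories."""
    params = {"q": "stars:>1", "sort": "stars", "order": "desc", "per_page": per_page}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def repository_names(response_text):
    """Extract 'owner/name' identifiers from a search response body."""
    payload = json.loads(response_text)
    return [item["full_name"] for item in payload.get("items", [])]


# Hypothetical response, written by hand only to exercise the parser offline.
sample = json.dumps(
    {"items": [{"full_name": "octocat/Hello-World", "stargazers_count": 3}]}
)

url = top_starred_url()
names = repository_names(sample)
```

The resulting list of names could then be passed to `git clone`; the 100-project cut-off is simply the `per_page` value of a single request.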

Enrichment

Our analysis needs a clear identification of the author of each commit so that we can properly link contributors and the files they have modified. Unfortunately, Git does not control the name and email that contributors indicate when pushing commits, resulting in clashing and duplicity problems in the data. Clashing appears when two or more contributors have set the same name value (in Git the contributor name is manually configured), so that commits actually coming from different contributors appear with the same author name (e.g., often when using common names such as “mike”). In addition, duplicity appears when a contributor has several emails: commits that come from the same person are linked to different emails, suggesting different contributors. We found that, on average, around 60% of the commits in each project were made by contributors involved in a clashing/duplicity problem (affecting a similar proportion of files). To address this problem, we relied on data provided by GitHub for each project (in particular, GitHub usernames, which are unique). By linking commits to unique usernames, we could disambiguate the contributors behind the commits. Thus, we enriched our repository data by querying the GitHub API to discover the actual username for each commit in our repository, and relied on those instead of on the information provided as part of the Git commit metadata. This method only failed for commits without an associated GitHub username (e.g. when the user that made the commit no longer existed in GitHub). In those cases we kept the email in the Git commit as the contributor identifier. This considerably reduced the clashing/duplicity problem in our dataset: the percentage of commits made by contributors that may involve a clashing/duplicity problem was reduced to 0.004% on average (σ = 0.011), and the percentage of files affected was reduced to 0.020% (σ = 0.042).
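The disambiguation rule described above (prefer the unique GitHub username, fall back to the commit email) can be sketched as follows. This is a minimal illustration, not the pipeline actually used: the commit dictionaries, the `username_by_sha` lookup (standing in for the result of the GitHub API queries) and the identifier prefixes are all hypothetical.

```python
from collections import defaultdict


def contributor_id(commit, username_by_sha):
    """Prefer the unique GitHub username; fall back to the commit email."""
    username = username_by_sha.get(commit["sha"])
    if username:
        return f"github:{username}"
    return f"email:{commit['email'].lower()}"


def files_per_contributor(commits, username_by_sha):
    """Group the files touched by each disambiguated contributor."""
    touched = defaultdict(set)
    for commit in commits:
        touched[contributor_id(commit, username_by_sha)].update(commit["files"])
    return dict(touched)


# Hypothetical commits: the Git name "mike" clashes, and one person uses two emails.
commits = [
    {"sha": "a1", "name": "mike", "email": "mike@home.example", "files": ["core.py"]},
    {"sha": "b2", "name": "mike", "email": "mike@work.example", "files": ["docs.md"]},
    {"sha": "c3", "name": "mike", "email": "other@mail.example", "files": ["ui.js"]},
    {"sha": "d4", "name": "ana", "email": "Ana@mail.example", "files": ["ui.js"]},
]
# Hypothetical username lookup; commit d4 has no associated GitHub account.
username_by_sha = {"a1": "mike-dev", "b2": "mike-dev", "c3": "mike42"}

links = files_per_contributor(commits, username_by_sha)
```

In this toy input the two emails of `mike-dev` collapse into one contributor (duplicity), while `mike42` stays separate despite sharing the Git name (clashing).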

At the end of this process, we had successfully collected a total of 83 projects, adding up to 48,015 contributors, 668,283 files and 912,766 commits. A further 18 projects were rejected due to other limitations, leaving the 65 projects reported in this work. On one hand, we discarded some projects that presented a very strong divergence between the number of nodes of the two sets, e.g. projects with a very large number of files but very few contributors. In these cases, although \({\mathscr{N}}\), Q and \( {\mathcal I} \) can be quantified, the outcome is hardly interpretable. An example of this is the project material-designs-icons, with 15 contributors involved in the development of 12,651 files. As mentioned above, we also discarded projects that are not devoted to software development, but are rather collections of useful resources (free programming books, coding courses, etc.). Finally, we considered only projects with a bipartite network size within the range \({10}^{1}\le S\le {10}^{4}\), as the computational costs to optimise in-block nestedness and modularity for larger sizes were too severe. The complete dataset with the final 65 projects is available at http://cosin3.rdi.uoc.edu, under the Resources section.

Matrix generation

We build a bipartite unweighted network as a rectangular N × M matrix, where rows and columns refer to contributors and source files of an OSS project, respectively. Cells therefore represent links in the bipartite network: the cell \({a}_{ij}\) is set to 1 if contributor i has modified file j at least once, and to 0 otherwise.
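A minimal sketch of this construction, assuming the file modifications are available as (contributor, file) pairs; the function name and the toy input are illustrative only.

```python
import numpy as np


def biadjacency(modifications):
    """Build the N x M 0/1 matrix from (contributor, file) modification pairs."""
    contributors = sorted({c for c, _ in modifications})
    files = sorted({f for _, f in modifications})
    row = {c: i for i, c in enumerate(contributors)}
    col = {f: j for j, f in enumerate(files)}
    A = np.zeros((len(contributors), len(files)), dtype=int)
    for c, f in modifications:
        A[row[c], col[f]] = 1  # repeated modifications still yield a single link
    return A, contributors, files


# Toy input: "ana" modifies a.py twice, which is recorded only once.
modifications = [("ana", "a.py"), ("ana", "b.py"), ("ana", "a.py"), ("bob", "a.py")]
A, contributors, files = biadjacency(modifications)
```

Because the matrix is unweighted, the number of commits per contributor-file pair is deliberately discarded at this step.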

We are aware that an unweighted scheme may be discarding important information, i.e. the heterogeneity of time and effort that developers devote to files. However, we stress that including weights in our analysis can introduce ambiguities in our results. In the GitHub environment, the size of a contribution could be regarded either as the number of times a developer commits to a file, or as the number of lines of code (LOC) that a developer modified when updating the file. Indeed, both could represent additional dimensions to our study. Furthermore, at least the first (number of commits) is readily available from the data collection methods. However, weighting the links of the network by the number of commits is risky. Consider for example a contributor who, after hours or days of coding and testing, performs a commit that substantially changes a file in a project. On the other side, consider a contributor who is simply documenting some code, thus committing many small comments to existing software –without changing its internal logic. There is no simple way to distinguish these cases. The second item (number of LOC modified) could be a proxy for such a distinction, but this information is not realistically accessible given the current limitations to data collection. Getting a precise number of LOC requires a deeper analysis of the Git repository associated with the GitHub project, parsing the commit change information one by one –an unfeasible task if we aim at analysing a large set of projects. The same scalability issue would appear if we relied on the GitHub API to get this information, which would additionally involve quota problems with that API. One might even consider a third argument: not every programming language “weighs” contributions in the same way. Many lines of HTML code may have a small effect on the actual advancement of a project, while two brief lines in C may completely change a whole algorithm.
In conclusion, we believe there is no generic solution for assessing the importance of a LOC variation in a contribution. This will depend first on the kind of file, then on the programming style of each project, and finally on an individual analysis of each change. Thus, adding informative and reliable weights to the network is semantically unclear (how should we interpret those weights?) and operationally out of reach.

Nestedness

The concept of nestedness appeared, in the context of complex networks, over a decade ago in Systems Ecology42. In structural terms, a perfectly nested pattern is observed when specialists (nodes with low connectivity) interact with proper nested subsets of those species interacting with generalists (nodes with high connectivity), see Fig. 2 (left). Several works have shown that a nested configuration is a signature feature of cooperative environments –those in which interacting species obtain some benefit42,43,44. Following this example in natural systems, scholars have sought (and found) this pattern in other kinds of systems32,45,46,47. In particular, measuring nestedness in OSS contributor-file bipartite networks helps to uncover patterns of file development. For instance, in a perfectly nested bipartite network the most generalist developer, i.e. a core developer, has contributed to every file in the project. Other contributors will exhibit decreasing numbers of edited files. On top of this hierarchical arrangement, we find asymmetry: specialist contributors (those working on a single file) develop precisely the generalist file, i.e. the file that every other developer also works on. Here, we quantify the amount of nestedness in our OSS networks by employing the global nestedness fitness \({\mathscr{N}}\) introduced by Solé-Ribalta et al.30:

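\[{\mathscr{N}}=\frac{2}{N+M}\left\{\sum _{i,j}^{N}\left[\frac{{O}_{i,j}-\langle {O}_{i,j}\rangle }{{k}_{j}(N-1)}\Theta ({k}_{i}-{k}_{j})\right]+\sum _{l,m}^{M}\left[\frac{{O}_{l,m}-\langle {O}_{l,m}\rangle }{{k}_{m}(M-1)}\Theta ({k}_{l}-{k}_{m})\right]\right\}\]

where \({O}_{i,j}\) (or \({O}_{l,m}\)) measures the degree of link overlap between pairs of row (or column) nodes; \({k}_{i}\), \({k}_{j}\) correspond to the degrees of nodes i and j; and Θ(·) is a Heaviside step function that guarantees that we only compute the overlap between a pair of nodes when \({k}_{i}\ge {k}_{j}\). Finally, \(\langle {O}_{i,j}\rangle \) represents the expected overlap between row nodes i and j in the null model, and is equal to \(\langle {O}_{i,j}\rangle =\frac{{k}_{i}{k}_{j}}{M}\). This measure is in the tradition of other overlap measures, e.g. NODF48,49.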
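To make the ingredients of this measure concrete, the sketch below evaluates it with NumPy under stated assumptions: each guild contributes the sum, over ordered pairs of distinct nodes with \({k}_{i}\ge {k}_{j}\), of the excess overlap divided by \({k}_{j}\) times the guild size minus one, and the two guild sums are normalised by 2/(N + M). Taking Θ(0) = 1 for nodes of equal degree follows the wording above literally and is an assumption; the reference implementation may treat ties differently.

```python
import numpy as np


def guild_sum(A):
    """Sum of the pairwise terms for the row guild of a 0/1 matrix A."""
    n_rows, n_cols = A.shape
    k = A.sum(axis=1).astype(float)             # degrees k_i
    overlap = (A @ A.T).astype(float)           # O_ij: number of shared neighbours
    expected = np.outer(k, k) / n_cols          # <O_ij> = k_i k_j / M (null model)
    valid = k[:, None] >= k[None, :]            # Theta(k_i - k_j), Theta(0) = 1 (assumption)
    valid = valid & (k[None, :] > 0)            # skip isolated nodes (no division by zero)
    np.fill_diagonal(valid, False)              # a node is not paired with itself
    denom = np.broadcast_to(k[None, :] * (n_rows - 1), overlap.shape)
    terms = np.zeros_like(overlap)
    terms[valid] = (overlap - expected)[valid] / denom[valid]
    return terms.sum()


def nestedness(A):
    """Global nestedness of a bipartite 0/1 matrix (rows and columns are the guilds)."""
    A = np.asarray(A, dtype=int)
    n_rows, n_cols = A.shape
    return 2.0 / (n_rows + n_cols) * (guild_sum(A) + guild_sum(A.T))


nested = np.array([[1, 1, 1], [1, 1, 0], [1, 0, 0]])        # triangular, nested
modular = np.kron(np.eye(2, dtype=int), np.ones((2, 2), dtype=int))  # two full blocks
n_nested = nestedness(nested)
n_modular = nestedness(modular)
```

On these toy matrices the triangular (nested) arrangement scores higher than the two-block one, whose cross-block pairs share fewer neighbours than the null model expects.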

Modularity

A modular network structure (Fig. 2, center) implies the existence of well-connected subgroups, which can be identified given the right heuristics. Unlike nestedness (which apparently emerges only in very specific circumstances), modularity has been reported in almost every kind of system: from food-webs50 to lexical networks51, to the Internet27 and social networks52. Applied to OSS developer-file networks, modularity helps to identify blocks of developers working together on a set of files. High Q values in OSS projects would reveal some level of specialisation (division of labour) in the development of the project. However, if an OSS project is only modular (i.e., any trace of nestedness is missing), it may reveal that, beyond compartmentalisation, no further organisational refinement is at work. Here, we search for a (sub)optimal modular partition of the nodes through a community detection analysis26,27. To this end, we apply the extremal optimisation algorithm53 (along with a Kernighan-Lin54 refinement procedure) to maximise Barber’s26 modularity Q,

\[Q=\frac{1}{L}\sum _{i=1}^{N}\sum _{j=N+1}^{N+M}({\tilde{a}}_{ij}-{\tilde{p}}_{ij})\,\delta ({\alpha }_{i},{\alpha }_{j})\]

where L is the number of interactions (links) in the network, \({\tilde{a}}_{ij}\) denotes the existence of a link between nodes i and j, \({\tilde{p}}_{ij}={k}_{i}{k}_{j}/L\) is the probability that a link exists by chance, and \(\delta ({\alpha }_{i},{\alpha }_{j})\) is the Kronecker delta function, which takes the value 1 if nodes i and j are in the same community, and 0 otherwise.
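For a given partition, the bipartite modularity defined by these terms can be evaluated directly. The sketch below only scores a partition supplied by hand; it does not perform the extremal optimisation or the Kernighan-Lin refinement, and the function name and toy labels are assumptions for illustration.

```python
import numpy as np


def barber_modularity(A, row_labels, col_labels):
    """Bipartite modularity Q of a 0/1 matrix A for given community labels."""
    A = np.asarray(A, dtype=float)
    L = A.sum()                                  # number of links
    k_rows = A.sum(axis=1)                       # contributor degrees
    k_cols = A.sum(axis=0)                       # file degrees
    p = np.outer(k_rows, k_cols) / L             # null-model term k_i k_j / L
    same = np.asarray(row_labels)[:, None] == np.asarray(col_labels)[None, :]
    return float(((A - p) * same).sum() / L)     # delta keeps same-community pairs only


# Two fully connected 2 x 2 blocks with no links between them.
A = np.kron(np.eye(2, dtype=int), np.ones((2, 2), dtype=int))
q_blocks = barber_modularity(A, [0, 0, 1, 1], [0, 0, 1, 1])
q_single = barber_modularity(A, [0, 0, 0, 0], [0, 0, 0, 0])
```

Placing every node in a single community gives Q = 0 by construction, which is why Q is informative only relative to the partition found by the optimiser.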

In-block nestedness

Nestedness and modularity are emergent properties in many systems, but it is rare to find them in the same system. This apparent incompatibility has been noticed and studied, and it can be explained by different evolutionary pressures: certain mechanisms favour the emergence of blocks, while others favour the emergence of nested patterns. Following this logic, if two such mechanisms are concurrent, then hybrid (nested-modular) arrangements may appear. Hence, the third architectural organisation that we consider in our work refers to a mesoscale hybrid pattern, in which the network presents a modular structure, but the interactions within each module are nested, i.e. an in-block nested structure, see Fig. 2 (right). This type of hybrid or “compound” architecture was first described in Lewinsohn et al.28. Although the literature covering this type of pattern is still scarce, the existence of such hybrid structures in empirical networks has been recently explored29,30,55, and the results from these works seem to indicate that combined structures are, in fact, a common feature in many systems from different contexts.

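In order to compute the amount of in-block nestedness present in networks, in this work we have adopted a new objective function30 that is capable of detecting these hybrid architectures, and employed the same optimisation algorithms used to maximise modularity. The in-block nestedness objective function can be written as,

\[ {\mathcal I} =\frac{2}{N+M}\left\{\sum _{i,j}^{N}\left[\frac{{O}_{i,j}-\langle {O}_{i,j}\rangle }{{k}_{j}({C}_{i}-1)}\Theta ({k}_{i}-{k}_{j})\delta ({\alpha }_{i},{\alpha }_{j})\right]+\sum _{l,m}^{M}\left[\frac{{O}_{l,m}-\langle {O}_{l,m}\rangle }{{k}_{m}({C}_{l}-1)}\Theta ({k}_{l}-{k}_{m})\delta ({\alpha }_{l},{\alpha }_{m})\right]\right\}\]

where the overlap, degree and step-function terms are defined as for \({\mathscr{N}}\), the Kronecker delta restricts the sums to pairs of nodes in the same block, and \({C}_{i}\) (\({C}_{l}\)) is the number of row (column) nodes in the block that contains node i (l).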

Note that, by definition, \( {\mathcal I} \) reduces to \({\mathscr{N}}\) when the number of blocks is 1. This explains why the right half of the ternary plot (Fig. 6) is necessarily empty: \( {\mathcal I} \ge {\mathscr{N}}\), and therefore \({f}_{ {\mathcal I} }\ge {f}_{{\mathscr{N}}}\). On the other hand, an in-block nested structure necessarily exhibits some level of modularity, but not the other way around. This explains why the lower-left area of the simplex in Fig. 6 is empty as well (see Palazzi et al.33 for details).
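The following sketch scores a given partition with an in-block nestedness function, under the assumption that it takes the form used in Solé-Ribalta et al.30: the same pairwise overlap terms as the global measure, restricted to pairs of nodes in the same block and normalised by the number of same-guild nodes in that block minus one. The function names, the Θ(0) = 1 convention and the toy partition are assumptions; no optimisation over partitions is attempted.

```python
import numpy as np


def guild_in_block_sum(A, labels):
    """Pairwise in-block terms for the row guild of a 0/1 matrix A."""
    n_cols = A.shape[1]
    labels = np.asarray(labels)
    k = A.sum(axis=1).astype(float)                        # degrees k_i
    overlap = (A @ A.T).astype(float)                      # O_ij
    expected = np.outer(k, k) / n_cols                     # <O_ij> = k_i k_j / M
    block_size = np.array([(labels == a).sum() for a in labels], dtype=float)  # C_i
    same = labels[:, None] == labels[None, :]              # delta(alpha_i, alpha_j)
    theta = k[:, None] >= k[None, :]                       # Theta(0) = 1 (assumption)
    valid = same & theta & (k[None, :] > 0)
    np.fill_diagonal(valid, False)                         # exclude i == j
    denom = k[None, :] * (block_size[:, None] - 1.0)       # k_j (C_i - 1)
    terms = np.zeros_like(overlap)
    terms[valid] = (overlap - expected)[valid] / denom[valid]
    return terms.sum()


def in_block_nestedness(A, row_labels, col_labels):
    """In-block nestedness of a 0/1 matrix for a given block assignment."""
    A = np.asarray(A, dtype=int)
    n_rows, n_cols = A.shape
    total = guild_in_block_sum(A, row_labels) + guild_in_block_sum(A.T, col_labels)
    return 2.0 / (n_rows + n_cols) * total


tri = np.array([[1, 1, 1], [1, 1, 0], [1, 0, 0]])          # one nested block
two_blocks = np.kron(np.eye(2, dtype=int), tri)            # two nested blocks
i_two = in_block_nestedness(two_blocks, [0, 0, 0, 1, 1, 1], [0, 0, 0, 1, 1, 1])
i_one = in_block_nestedness(tri, [0, 0, 0], [0, 0, 0])
```

With all nodes assigned to a single block, the block size equals the guild size and the delta is always 1, so the value coincides with the global nestedness of the same matrix, in line with the remark above.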

The corresponding software codes for nestedness measurement, and modularity and in-block nestedness optimisation (both for uni- and bipartite cases), can be downloaded from the web page http://cosin3.rdi.uoc.edu/, under the Resources section.

Stationarity test

Figures 1 and 5 visually suggest that some quantities do not vary as a function of project size –or vary very slowly. As convincing as this visual hint may be, a statistical test is necessary to confirm that, indeed, there is a limit on the quantity at stake. The idea of stationarity of a time series implies that summary statistics of the data, like the mean or variance, are approximately constant when measured from any two starting points in the series (different project sizes in our case). Typically, statistical stationarity tests are done by checking for the presence (or absence) of a unit root in the time series (null hypothesis). A time series is said to have a unit root if we can write it as

\[{y}_{t}=a\,{y}_{t-1}+{\varepsilon }_{t}\]

with a = 1, where \({y}_{t}\) is the value of the series at step t and \({\varepsilon }_{t}\) is an error term. If a = 1, the null hypothesis of non-stationarity cannot be rejected. On the contrary, if a < 1 there is no unit root, and the process is deemed stationary. In this work, we have employed the Augmented Dickey-Fuller (ADF) test56, as implemented in the statsmodels.tsa.stattools Python module. If the test statistic is lower than the critical values at the different significance levels, the null hypothesis of a unit root is rejected, and we can conclude that the data series is stationary.
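To illustrate what a unit root means in practice, the sketch below assumes the standard first-order autoregressive form, in which each value equals a times the previous value plus noise, and estimates the coefficient a by least squares for two synthetic series. It is not the ADF test itself (which adds lagged differences and compares a test statistic against tabulated critical values, as done by `adfuller` in statsmodels); the series, seed and function name are assumptions chosen for the example.

```python
import numpy as np


def ar1_coefficient(y):
    """Least-squares estimate of a in y_t = a * y_{t-1} + eps_t (no intercept)."""
    y = np.asarray(y, dtype=float)
    prev, curr = y[:-1], y[1:]
    return float(prev @ curr / (prev @ prev))


rng = np.random.default_rng(0)
noise = rng.normal(size=2000)

# Random walk: a = 1, i.e. a unit root (non-stationary).
random_walk = np.cumsum(noise)

# Stationary process with a = 0.5, driven by the same noise.
stationary = np.zeros(noise.size)
for t in range(1, noise.size):
    stationary[t] = 0.5 * stationary[t - 1] + noise[t]

a_walk = ar1_coefficient(random_walk)
a_stationary = ar1_coefficient(stationary)
```

The estimate for the random walk sits close to 1, while the stationary series yields a value near 0.5; the ADF test formalises how far below 1 the estimate must be before the unit-root hypothesis is rejected.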

References

Open source initiative, https://opensource.org/.

Schuwer, R., van Genuchten, M. & Hatton, L. On the impact of being open. IEEE Softw. 32, 81–83 (2015).

Dabbish, L., Stuart, C., Tsay, J. & Herbsleb, J. Social Coding in GitHub: Transparency and Collaboration in an Open Software Repository. In ACM Conf. on Computer-Supported Cooperative Work and Social Computing, 1277–1286 (2012).

Padhye, R., Mani, S. & Sinha, V. S. A Study of External Community Contribution to Open-source Projects on GitHub. In Working Conf. on Mining Software Repositories, 332–335 (2014).

Lima, A., Rossi, L. & Musolesi, M. Coding Together at Scale: GitHub as a Collaborative Social Network. In Int. Conf. on Weblogs and Social Media, 10 (2014).

Dabbish, L., Stuart, C., Tsay, J. & Herbsleb, J. Leveraging Transparency. IEEE Softw. 30, 37–43 (2013).

Fitz-Gerald, S. Book review of: ‘Internet success: a study of open-source software commons’ by C. M. Schweik and R. C. English. Int. J. Inf. Manag. 32, 596–597 (2012).

Cosentino, V., Izquierdo, J. L. C. & Cabot, J. Assessing the bus factor of git repositories. In Int. Conf. on Software Analysis, Evolution, and Reengineering, 499–503 (2015).

Yamashita, K., McIntosh, S., Kamei, Y., Hassan, A. E. & Ubayashi, N. Revisiting the Applicability of the Pareto Principle to Core Development Teams in Open Source Software Projects. In Int. Workshop on Principles of Software Evolution, 46–55 (2015).

Avelino, G., Passos, L., Hora, A. & Valente, M. T. A novel approach for estimating truck factors. In Int. Conf. on Program Comprehension, 1–10 (2016).

Pham, R., Singer, L., Liskin, O., Figueira Filho, F. & Schneider, K. Creating a Shared Understanding of Testing Culture on a Social Coding Site. In Int. Conf. on Software Engineering, 112–121 (2013).

Yamashita, K., Kamei, Y., McIntosh, S., Hassan, A. E. & Ubayashi, N. Magnet or Sticky? Measuring Project Characteristics from the Perspective of Developer Attraction and Retention. J. Inf. Process. 24, 339–348 (2016).

Hata, H., Todo, T., Onoue, S. & Matsumoto, K. Characteristics of Sustainable OSS Projects: a Theoretical and Empirical Study. In Int. Workshop on Cooperative and Human Aspects of Software Engineering, 15–21 (2015).

Bertholdo, A. P. O. & Gerosa, M. A. Promoting Engagement in Open Collaboration Communities by Means of Gamification. In Int. Conf. on Human-Computer Interaction, 15–20 (2016).

Steinmacher, I., Conte, T. U., Treude, C. & Gerosa, M. A. Overcoming open source project entry barriers with a portal for newcomers. In Int. Conf. on Software Engineering, 273–284 (2016).

Steinmacher, I., Silva, M. A. G., Gerosa, M. A. & Redmiles, D. F. A systematic literature review on the barriers faced by newcomers to open source software projects. Inf. & Softw. Technol. 59, 67–85 (2015).

Cosentino, V., Izquierdo, J. L. C. & Cabot, J. A systematic mapping study of software development with GitHub. IEEE Access 5, 7173–7192 (2017).

Valverde, S. & Solé, R. V. Self-organization versus hierarchy in open-source social networks. Phys. Rev. E 76, 046118 (2007).

Bird, C., Pattison, D., D’Souza, R., Filkov, V. & Devanbu, P. Latent social structure in open source projects. In Proceedings of the 16th ACM SIGSOFT International Symposium on Foundations of software engineering, 24–35 (2008).

Hong, Q., Kim, S., Cheung, S. C. & Bird, C. Understanding a developer social network and its evolution. In Software Maintenance (ICSM), 2011 27th IEEE International Conference on, 323–332 (IEEE, 2011).

Dunbar, R. Neocortex size as a constraint on group size in primates. J. Hum. Evol. 22, 469–493 (1992).

Gonçalves, B., Perra, N. & Vespignani, A. Modeling users’ activity on Twitter networks: Validation of Dunbar’s number. PloS One 6, e22656 (2011).

Patterson, B. D. & Atmar, W. Nested subsets and the structure of insular mammalian faunas and archipelagos. Biol. J. Linnean Soc. 28, 65–82 (1986).

Atmar, W. & Patterson, B. D. The measure of order and disorder in the distribution of species in fragmented habitat. Oecologia 96, 373–382 (1993).

Newman, M. E. & Girvan, M. Finding and evaluating community structure in networks. Phys. Rev. E 69, 026113 (2004).

Barber, M. J. Modularity and community detection in bipartite networks. Phys. Rev. E 76, 066102 (2007).

Fortunato, S. Community detection in graphs. Phys. Reports 486, 75–174 (2010).

Lewinsohn, T. M., Inácio Prado, P., Jordano, P., Bascompte, J. & Olesen, J. M. Structure in plant–animal interaction assemblages. Oikos 113, 174–184 (2006).

Flores, C. O., Valverde, S. & Weitz, J. S. Multi-scale structure and geographic drivers of cross-infection within marine bacteria and phages. The ISME journal 7, 520–532 (2013).

Solé-Ribalta, A., Tessone, C. J., Mariani, M. S. & Borge-Holthoefer, J. Revealing in-block nestedness: detection and benchmarking. Phys. Rev. E 97, 062302 (2018).

Lee, S. H. et al. Network nestedness as generalized core-periphery structures. Phys. Rev. E 93, 022306 (2016).

Borge-Holthoefer, J., Baños, R. A., Gracia-Lázaro, C. & Moreno, Y. Emergence of consensus as a modular-to-nested transition in communication dynamics. Sci. Reports 7, 41673 (2017).

Palazzi, M., Borge-Holthoefer, J., Tessone, C. & Solé-Ribalta, A. Antagonistic structural patterns in complex networks. arXiv preprint arXiv:1810.12785 (2018).

Dunbar, R. How many friends does one person need?: Dunbar’s number and other evolutionary quirks (Faber & Faber, 2010).

Derex, M. & Boyd, R. Partial connectivity increases cultural accumulation within groups. Proc. Natl. Acad. Sci. 113, 2982–2987 (2016).

Derex, M., Perreault, C. & Boyd, R. Divide and conquer: intermediate levels of population fragmentation maximize cultural accumulation. Phil. Trans. R. Soc. B 373, 20170062 (2018).

Olsson, U. Confidence intervals for the mean of a log-normal distribution. J. Stat. Educ. 13 (2005).

Penzenstadler, B. Towards a definition of sustainability in and for software engineering. In Proceedings of the 28th Annual ACM Symposium on Applied Computing, 1183–1185 (2013).

Using the request, https://api.github.com/search/repositories?q=stars:>1&sort=stars&order=desc&per_page=100.

Cosentino, V., Cánovas Izquierdo, J. L. & Cabot, J. Gitana: A SQL-Based Git Repository Inspector. In Int. Conf. on Conceptual Modeling, 329–343 (2015).

Bascompte, J., Jordano, P., Melián, C. J. & Olesen, J. M. The nested assembly of plant–animal mutualistic networks. Proc. Natl. Acad. Sci. 100, 9383–9387 (2003).

Bastolla, U. et al. The architecture of mutualistic networks minimizes competition and increases biodiversity. Nat. 458, 1018–1020 (2009).

Suweis, S., Simini, F., Banavar, J. R. & Maritan, A. Emergence of structural and dynamical properties of ecological mutualistic networks. Nat. 500, 449 (2013).

Saavedra, S., Stouffer, D. B., Uzzi, B. & Bascompte, J. Strong Contributors to Network Persistence Are the Most Vulnerable to Extinction. Nat. 478, 233–235 (2011).

Bustos, S., Gomez, C., Hausmann, R. & Hidalgo, C. A. The dynamics of nestedness predicts the evolution of industrial ecosystems. PloS One 7, e49393 (2012).

Kamilar, J. M. & Atkinson, Q. D. Cultural assemblages show nested structure in humans and chimpanzees but not orangutans. Proc. Natl. Acad. Sci. 111, 111–115 (2014).

Almeida-Neto, M., Guimarães, P., Guimarães, P. R., Loyola, R. D. & Ulrich, W. A consistent metric for nestedness analysis in ecological systems: reconciling concept and measurement. Oikos 117, 1227–1239 (2008).

Ulrich, W., Almeida-Neto, M. & Gotelli, N. J. A consumer’s guide to nestedness analysis. Oikos 118, 3–17 (2009).

Stouffer, D. B. & Bascompte, J. Compartmentalization increases food-web persistence. Proc. Natl. Acad. Sci. 108, 3648–3652 (2011).

Borge-Holthoefer, J. & Arenas, A. Navigating Word Association Norms to Extract Semantic Information. In An. Conf. of the Cognitive Science Society (2009).

Borge-Holthoefer, J. et al. Structural and dynamical patterns on online social networks: the Spanish May 15th movement as a case study. PloS One 6, e23883 (2011).

Duch, J. & Arenas, A. Community detection in complex networks using extremal optimization. Phys. Rev. E 72, 027104 (2005).

Kernighan, B. W. & Lin, S. An efficient heuristic procedure for partitioning graphs. The Bell System Technical Journal 49, 291–307 (1970).

Beckett, S. J. & Williams, H. T. Coevolutionary diversification creates nested-modular structure in phage–bacteria interaction networks. Interface Focus. 3, 20130033 (2013).

Dickey, D. A. & Fuller, W. A. Distribution of the estimators for autoregressive time series with a unit root. J. Am. Stat. Assoc. 74, 427–431 (1979).

Acknowledgements

M.J.P., A.S.-R. and J.B.-H. acknowledge the support of the Spanish MICINN project PGC2018-096999-A-I00 and the Cariparo Visiting Program 2018 (Padova, Italy). M.J.P. also acknowledges the support of a doctoral grant from the Universitat Oberta de Catalunya (UOC).

Contributions

All authors designed research. J.C. and J.C.I. collected and curated the data. M.J.P., A.S.-R. and J.B.-H. performed research. All authors analysed the results. J.B.-H. and A.S.-R. wrote the paper. All authors approved the final version.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Palazzi, M.J., Cabot, J., Cánovas Izquierdo, J.L. et al. Online division of labour: emergent structures in Open Source Software. Sci Rep 9, 13890 (2019). https://doi.org/10.1038/s41598-019-50463-y

DOI: https://doi.org/10.1038/s41598-019-50463-y
