Abstract

Point cloud segmentation is an essential task in three-dimensional (3D) vision and intelligence. It is a critical step in understanding 3D scenes with a variety of applications. With the rapid development of 3D scanning devices, point cloud data have become increasingly available to researchers. Recent advances in deep learning are driving progress in point cloud segmentation research and applications. This paper presents a comprehensive review of recent progress in point cloud segmentation for understanding 3D indoor scenes. First, we present public point cloud datasets, which are the foundation for research in this area. Second, we briefly review previous segmentation methods based on geometry. Then, learning-based segmentation methods with multi-views and voxels are presented. Next, we provide an overview of learning-based point cloud segmentation, ranging from semantic segmentation to instance segmentation. Based on the annotation level, these methods are categorized into fully supervised and weakly supervised methods. Finally, we discuss open challenges and future research directions.

1 Introduction

Understanding indoor scenes is one of the essential tasks for computer vision and intelligence. The rapid development of depth sensors and three-dimensional (3D) scanners, such as RGB-D cameras and LiDAR, has increased interest in 3D indoor scene comprehension for a variety of applications, such as robotics [1], navigation [2] and augmented/virtual reality [3, 4]. The objective of 3D indoor scene understanding is to discern the geometric and semantic context of each interior scene component. There are a variety of 3D data formats, including depth images, meshes, voxels, and point clouds. Among them, point clouds are the most common non-discretized data representation in 3D applications and can be acquired directly by 3D scanners or reconstructed from stereo or multi-view images.

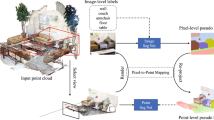

Point cloud segmentation, which attempts to decompose indoor scenes into meaningful parts and label each point, is a fundamental and indispensable step in understanding 3D indoor scenes. Point clouds preserve the original spatial information, making them the preferred data format for segmenting indoor scenes. Segmentation of indoor scene point clouds can be divided into semantic segmentation and instance segmentation. Semantic segmentation assigns each point a scene-level object category label. Instance segmentation is more difficult because it additionally requires identifying and localizing individual objects. Unlike outdoor point cloud segmentation, which addresses dynamic objects, indoor point cloud segmentation commonly handles cluttered man-made objects with regularly designed shapes. Indoor point cloud data are usually captured by consumer-level sensors with short ranges, while outdoor point clouds are commonly collected by LiDAR.

Indoor point cloud segmentation faces several challenges. First, point cloud data are typically voluminous, with quality varying across sensors, which makes the data difficult to process efficiently and annotate accurately. Second, indoor scenes are typically cluttered with severe occlusions, so it is challenging to accurately segment objects that are hidden or close together. Third, unlike the regular data structures of two-dimensional (2D) images, point cloud data are sparse and unorganized, making it difficult to apply sophisticated 2D segmentation methods directly to 3D point clouds. Moreover, annotating 3D data is time-consuming and labor-intensive, which limits the applicability of fully supervised learning. Existing indoor point cloud datasets are limited in size and suffer from long-tailed class distributions.

Much effort has been devoted to the task of point cloud segmentation. Traditional geometry-based solutions for point cloud segmentation mainly include clustering-based, model-based, and graph-based methods [5]. The majority of these methods rely on hand-crafted features with heuristic geometric constraints. Deep learning has made significant progress in 2D vision [6–8], leading to advances in point cloud segmentation. In recent years, point cloud based deep neural networks [9] have demonstrated the ability to extract more powerful features and provide more reliable geometric cues for better understanding 3D scenes. Learning from 3D data has become a reality with the availability of public datasets such as ShapeNet, ModelNet, PartNet, ScanNet, Semantic3D, and KITTI. Recently, weakly supervised learning for point cloud segmentation has become a popular research topic, because it attempts to learn features from limited annotated data.

This paper provides a comprehensive review of point cloud segmentation for indoor 3D scene understanding, especially methods based on deep learning. We will introduce the primary datasets and methods used for indoor scene point cloud segmentation, analyze the current research trends in this area, and discuss future directions for development. The structure of this paper is as follows. Section 2 begins by introducing 3D indoor datasets that are used for understanding 3D scenes. Section 3 presents a brief review of geometry-based point cloud segmentation methods. Section 4 reviews indirect learning approaches with structured data. Section 5 provides a comprehensive survey of existing point cloud based learning frameworks employed for 3D scene segmentation. Section 6 introduces recent learning-based segmentation methods with multimodal data. Section 7 summarizes the performance of indoor point cloud segmentation using different methods. Section 8 discusses open questions and future research directions. Section 9 concludes the paper.

2 3D indoor scene point cloud datasets

The emergence of 3D datasets has led to the development of deep learning-based segmentation methods, which play a crucial role in advancing the field and promoting progress in research and applications. Public benchmarks have proven to be highly effective in facilitating framework evaluation and comparison. By providing real-world data with ground truth annotations, these benchmarks offer a foundation for researchers to test their algorithms and enable fair comparisons between different approaches. The two most commonly used 3D indoor scene point cloud datasets are ScanNet [10] and S3DIS [11].

ScanNet. ScanNet [10] is an RGB-D video dataset encompassing more than 2.5 million views across more than 1,500 scans. The dataset is captured by RGB-D cameras and annotated with 3D camera poses, surface reconstructions, and instance-level semantic segmentations. It has led to remarkable advances in state-of-the-art performance across various 3D scene understanding tasks, including object detection, semantic segmentation, instance segmentation, and computer-aided design (CAD) model retrieval. ScanNet v2, the updated release, contains 1,513 annotated scans with high surface coverage. For the semantic segmentation task, ScanNet v2 is labeled with annotations for 20 classes of 3D voxelized objects. Each class corresponds to a specific furniture category or room layout element, allowing for a more granular understanding and analysis of the captured indoor scenes. This makes ScanNet v2 one of the most active online evaluation benchmarks for indoor scene semantic segmentation. Apart from the semantic segmentation benchmark, ScanNet v2 also provides benchmarks for instance segmentation and scene type classification.

ScanNet200. ScanNet200 [12] was developed on the basis of ScanNet v2 to overcome the limited set of 20 class labels. It expands the number of classes to 200, an order of magnitude more than the previous version. This annotation enables a better capture and understanding of real-world indoor scenes with a more diverse range of objects. The benchmark also allows for a more comprehensive analysis of performance across different object class distributions by splitting the 200 classes into three sets. Specifically, the “head” set comprises the 66 categories with the highest frequency, the “common” set consists of 68 categories with lower frequency, and the “tail” set contains the remaining 66 categories.
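The frequency-based split described above can be illustrated with a small sketch. It ranks categories by how often they occur and cuts the ranking into head, common, and tail groups. The function name `split_by_frequency` and the toy counts are illustrative assumptions; this is not the benchmark's own tooling, and the real split may rely on a different frequency statistic.

```python
from typing import Dict, List, Tuple


def split_by_frequency(
    counts: Dict[str, int], n_head: int, n_common: int
) -> Tuple[List[str], List[str], List[str]]:
    """Rank categories by count (descending) and cut into head / common / tail."""
    # Ties are broken by name so that the result is deterministic.
    ranked = sorted(counts, key=lambda name: (-counts[name], name))
    head = ranked[:n_head]
    common = ranked[n_head:n_head + n_common]
    tail = ranked[n_head + n_common:]  # everything that is left over
    return head, common, tail


# Toy example with made-up counts (not real dataset statistics).
toy_counts = {"wall": 900, "chair": 700, "table": 400,
              "lamp": 60, "plant": 40, "toaster": 5}
head, common, tail = split_by_frequency(toy_counts, n_head=2, n_common=2)
```

With 200 categories, `n_head=66`, and `n_common=68`, the tail group holds the remaining 66 categories.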

S3DIS. The Stanford large-scale 3D indoor spaces dataset [11], known as S3DIS, acquired through the Matterport scanner, is another highly popular dataset that has been extensively employed in point cloud segmentation. This dataset comprises 272 room scenes divided into 6 distinct areas. Each point within the scene is assigned a semantic label corresponding to one of the 13 pre-defined categories, such as walls, tables, chairs, cabinets, and others. This dataset is specifically curated for large-scale indoor semantic segmentation.

Cornell RGBD. This dataset [13] provides 52 labeled indoor scenes comprising point clouds with RGB values. It consists of 24 labeled office scenes and 28 labeled home scenes. The point cloud data are generated from the original RGB-D images via the RGBD-SLAM method. This dataset contains approximately 550 views with 2495 labeled segments across 27 object classes, providing valuable resources for previous research and development in indoor scene understanding.

Washington RGB-D dataset. This dataset [14] includes 14 indoor scene point clouds, which are obtained via RGB-D image registration and stitching. It provides annotations of 9 semantic category labels, such as sofas, teacups, and hats.

3 Geometry-based segmentation

Geometry-based solutions for understanding indoor scenes can be classified into clustering or region growing methods and model fitting methods. Most of these methods use handcrafted features together with heuristic geometric constraints. The intuition behind geometry-based methods is that man-made environments normally consist of many geometric structures.

Clustering or region growing. These approaches assume that points in close proximity to each other are more likely to belong to the same object or surface. By considering the geometric properties of these neighboring points, such as spatial coordinates and surface normals, these methods can identify regions that share similarities in these properties. Mattausch et al. [15] proposed a method for segmenting indoor scenes by identifying repeated objects from multi-room indoor scanning data. To represent the indoor scenes, they employed a collection of nearly planar patches. These patches were clustered based on a patch similarity matrix, which was constructed using shape geometrical descriptors. Using this approach, the researchers aimed to effectively segment indoor scenes by exploiting the inherent repeated object structures. Hu et al. [16] partitioned point clouds into surface patches using the dynamic region growing method to generate initial segmentation. By leveraging this intermediate data representation, the model can better account for shape variations and enhance its ability to classify objects.
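The general idea of region growing, in which nearby points with similar surface normals are merged into one region, can be sketched as follows. This is a minimal illustration rather than the method of any cited work: the function name `region_growing`, the brute-force neighbor search, and the two thresholds are assumptions, and the normals are assumed to be unit length.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def region_growing(points: Sequence[Vec3], normals: Sequence[Vec3],
                   radius: float, max_angle_deg: float) -> List[int]:
    """Assign a region id to each point by growing over close points with similar normals."""
    cos_thresh = math.cos(math.radians(max_angle_deg))
    labels = [-1] * len(points)  # -1 means "not yet assigned"
    region = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = region
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            # Brute-force neighbor search, O(n^2); a k-d tree would be used in practice.
            for j in range(len(points)):
                if labels[j] != -1:
                    continue
                close = math.dist(points[i], points[j]) <= radius
                dot = sum(a * b for a, b in zip(normals[i], normals[j]))
                # abs() treats flipped normals as the same orientation.
                if close and abs(dot) >= cos_thresh:
                    labels[j] = region
                    queue.append(j)
        region += 1
    return labels


# Three floor points (normal along z) next to two wall points (normal along x).
pts = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0),
       (0.3, 0.0, 0.1), (0.3, 0.0, 0.2)]
nrm = [(0.0, 0.0, 1.0)] * 3 + [(1.0, 0.0, 0.0)] * 2
labels = region_growing(pts, nrm, radius=0.15, max_angle_deg=20.0)
```

In this toy input the floor and wall points are spatially adjacent, but the normal constraint keeps them in separate regions.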

Model fitting. Model fitting is proposed as a more efficient and robust strategy, particularly in scenarios where noise and outliers are present. Nan et al. [...]

[...] 58], MPRM [44] transforms the input points into a latent representation. This transformation, known as x-conv, is implemented using MLPs. It allows for the application of traditional convolution, which is effective in capturing local and global patterns in regular data domains.

GCN-based methods. Recent studies have explored the application of a graph convolutional network (GCN) to point clouds, recognizing that the points and their neighboring points can form a graph structure [25, 46, 72]. The objective is to extract local geometric structure information while preserving permutation invariance. This is achieved by constructing a spatial or spectral adjacency graph using the features of vertices and their neighbors. DGCNN [46] employs MLPs to aggregate edge features, which consist of nodes and their spatial neighbors. The features of the nodes are then updated based on the edge features. RGCNN [45] considers the features of data points in a point cloud as graph signals and uses spectral-based graph convolution for point cloud classification and segmentation. The spectral-based graph convolution operation is defined using the approximation of the Chebyshev polynomial. Furthermore, the Laplacian matrix of the graph is updated at each layer of the network based on the learned depth features. This allows for the extraction of local structural information while accounting for the unordered nature of the data. DGCNN and RGCNN demonstrate different approaches to the use of GCN. DGCNN focuses on edge feature aggregation and node feature updates, while RGCNN uses spectral-based graph convolution and updates the Laplacian matrix based on learned depth features. SPG [25] is a deep learning framework specifically designed for the task of semantic segmentation in large-scale point clouds with millions of points. The framework introduces the concept of a superpoint graph (SPG), which effectively captures the inherent organization of 3D point clouds. By dividing the scanned scene into geometrically homogeneous elements, SPG provides a compact representation that captures the contextual relationships between different object parts within the point cloud. 
Leveraging this rich representation, GCN is employed to learn and infer semantic segmentation labels. The combination of the SPG structure and GCN enables the capture of contextual relationships, resulting in accurate semantic segmentation of complex and voluminous point cloud data. PointWeb [52] designs an adaptive feature extraction module to find the interaction between densely connected neighbors. Unlike most point-based deep learning methods, PGCNet [60] incorporates geometric information as a prior and uses surface patches for data representation. The idea behind this method is that man-made objects can be decomposed into a set of geometric primitives. The PGCNet framework first extracts surface patches from indoor scene point clouds using the region growing method. With surface patches and their geometric features as input, a GCN-based network is designed to explore patch features and contextual information. Specifically, a dynamic graph U-Net module, which employs dynamic edge convolution, aggregates hierarchical feature embeddings. Taking advantage of the surface patch representation, PGCNet can achieve competitive semantic segmentation performance with much less training.
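The edge feature aggregation described for DGCNN can be sketched in a few lines. The sketch below is a simplified illustration under stated assumptions, not the reference implementation: a single shared linear layer with ReLU stands in for the MLP, the edge feature is taken as the concatenation of a node feature and the neighbor offset, max pooling is assumed as the aggregation, and the helper names `knn_indices` and `edge_conv` are hypothetical.

```python
import math
from typing import List, Sequence


def knn_indices(points: Sequence[Sequence[float]], k: int) -> List[List[int]]:
    """Indices of the k nearest neighbors of each point (brute force, self excluded)."""
    result = []
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        others.sort(key=lambda j: math.dist(p, points[j]))
        result.append(others[:k])
    return result


def edge_conv(features: Sequence[Sequence[float]], neighbors: Sequence[Sequence[int]],
              weight: Sequence[Sequence[float]], bias: Sequence[float]) -> List[List[float]]:
    """Shared linear layer + ReLU on each edge feature, then max over the neighbors.

    Each node is assumed to have at least one neighbor (k >= 1).
    """
    out = []
    for i, nbrs in enumerate(neighbors):
        xi = features[i]
        pooled = [float("-inf")] * len(bias)
        for j in nbrs:
            xj = features[j]
            # Edge feature: node feature concatenated with the offset to the neighbor.
            edge = list(xi) + [b - a for a, b in zip(xi, xj)]
            for c, (row, b0) in enumerate(zip(weight, bias)):
                act = max(0.0, sum(w * e for w, e in zip(row, edge)) + b0)
                pooled[c] = max(pooled[c], act)  # max pooling ignores neighbor order
        out.append(pooled)
    return out


pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
nbrs = knn_indices(pts, k=2)
# Two output channels: one reads x_i[0], the other reads (x_j - x_i)[0].
W = [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]]
out = edge_conv(pts, nbrs, W, bias=[0.0, 0.0])
```

Because the aggregation is a maximum over neighbors, reordering the neighbor list leaves the output unchanged, which is the permutation invariance mentioned above.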

Transformer-based methods. The Transformer technique has revolutionized natural language processing (NLP) and 2D vision [73, [...]

[...] 77] introduced guided point contrastive learning, which improves feature representation in the semi-supervised network. Augmented point clouds generated from input point clouds are fed into an unsupervised branch for backbone network training. The backbone network, classifier, and projector are shared with the supervised branch to produce semantic scores. By incorporating self-supervised learning, Zhang et al. [71] utilizes a Transformer-based module to directly predict instance masks. Semantic and geometric information is encoded into point features through a stacked Transformer decoder, which provides an instance heatmap that indicates the similarities among the point clouds. Recently, SPFormer [86] has been developed to directly predict instances in an end-to-end manner based on superpoint cross-attention. Superpoint features are aggregated from point clouds and used as input to the Transformer decoder.
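The aggregation of point features into superpoint features mentioned above can be illustrated with a short sketch. Mean pooling is assumed here for simplicity, and the function name `pool_superpoints` and the toy inputs are hypothetical; the pooling used by a specific network may differ.

```python
from typing import Dict, List, Sequence


def pool_superpoints(point_features: Sequence[Sequence[float]],
                     superpoint_ids: Sequence[int]) -> Dict[int, List[float]]:
    """Average the features of all points that share a superpoint id."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for feat, sp in zip(point_features, superpoint_ids):
        if sp not in sums:
            sums[sp] = [0.0] * len(feat)
            counts[sp] = 0
        sums[sp] = [s + f for s, f in zip(sums[sp], feat)]
        counts[sp] += 1
    # One feature vector per superpoint, far fewer than the number of points.
    return {sp: [s / counts[sp] for s in total] for sp, total in sums.items()}


features = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0]]
ids = [0, 0, 1]  # the first two points belong to superpoint 0
pooled = pool_superpoints(features, ids)
```

Pooling reduces the sequence length seen by the decoder from the number of points to the number of superpoints, which is one reason such a representation is attractive for attention-based models.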

In recent years, detection-based methods, which attempt to perform the instance segmentation task by a separate detection step, have received less attention than detection-free approaches that aim for an end-to-end solution. Moreover, different backbones with varying levels of annotation have been explored. However, the accuracy of instance segmentation is still low, and the generality of existing methods lacks strong empirical evidence.

5.2.2 Weakly supervised instance segmentation

While fully supervised point cloud instance segmentation can suffer from performance degradation when dense annotations are unavailable, weakly supervised frameworks attempt to group points into objects using only a small number of labels.

Liao et al. [87] proposed a semi-supervised framework for point cloud instance segmentation that uses bounding boxes for supervision. The input point clouds were decomposed into subsets by bounding box proposals. Semantic information and consistency constraints were used to produce the final instance masks. Hou et al. [...]

6 Learning-based segmentation with multi-modality

Recent advances in foundation models of 2D vision and NLP have inspired the exploration of multi-modality methods in 3D models [12, 91–98]. For instance, Peng et al. [97] proposed a zero-shot approach that co-embeds point features with images and text. Rozenberszki et al. [12] presented a language-grounded method by discovering the joint embedding space of point features and text features. Liu et al. [91] transferred the knowledge from 2D to 3D for part segmentation. Wang et al. [92] trained a multi-modal model that learns from vision, language, and geometry to improve 3D semantic scene understanding. Xue et al. [93] introduced a unified representation of images, text, and 3D point clouds by aligning them during pre-training. Ding et al. [94] distilled knowledge from vision-language models for 3D scene understanding tasks. Zeng et al. [95] aligned 3D representations to open-world vocabularies via a cross-modal contrastive objective. Zhang et al. [98] performed text-scene paired semantic understanding with language-assisted learning. These methods utilize rich information from vision and text, enabling a more comprehensive representation of the indoor scene. However, these approaches require high computational resources, and pre-training is highly dependent on limited multi-modal datasets. How to facilitate and adapt multi-modalities with point clouds for better scene understanding is worth exploring.

7 Performance evaluation

7.1 Evaluation metrics

The widely adopted evaluation metrics for indoor point cloud semantic segmentation include overall accuracy (OA), mean intersection over union (mIoU), and mean accuracy (mAcc). The standard evaluation metric for indoor point cloud instance segmentation is mean average precision (mAP), averaged over IoU thresholds from 0.5 to 0.95. In particular, mAP@50 is the AP score at an IoU threshold of 0.5. Additionally, mean precision (mPrec) and mean recall (mRec) are frequently used criteria on the S3DIS dataset.

7.2 Results on public datasets

Semantic segmentation results. Tables 1 and 2 present indoor point cloud semantic segmentation results of different methods on S3DIS Area 5 and ScanNet v2, respectively. We can observe that the state-of-the-art methods outperform the pioneering work of PointNet [41] by more than 20% in mIoU. Transformer-based methods [48, 49, 65] have been the dominant methods in recent years, following their great success in NLP and image understanding. Meanwhile, several weakly supervised methods show the possibility of achieving semantic segmentation with fewer data, reaching more than 65% mIoU on S3DIS Area 5 and 70% on ScanNet. These results are encouraging, although there is still a gap between fully supervised and weakly supervised approaches. It is desirable to further improve the ability to extract features from limited annotated data.

Instance segmentation results. Tables 3 and 4 present indoor point cloud instance segmentation results of different methods on S3DIS Area 5 and ScanNet v2, respectively. Detection-free methods have received more attention than detection-based methods because they attempt to complete the instance segmentation task in an end-to-end manner. Several networks [31, 68, 70, 87, 89] have started to learn instance information from limited annotation. These results clearly show that there is still room for improvement in point cloud instance segmentation using weakly supervised learning.

8 Discussion

Point cloud segmentation is a crucial task in 3D indoor scene understanding. With the availability of 3D datasets, deep learning-based segmentation methods have gained significant attention and have contributed to the progress in this field. However, obtaining accurate segmentation results often requires dense annotations, which is a laborious and costly process. To mitigate the reliance on extensive annotations and enable learning from limited labeled data, the research focus has shifted towards weakly supervised approaches in recent years. By exploring weakly supervised frameworks, researchers aim to achieve satisfactory segmentation results while minimizing the annotation effort and cost involved. Despite the rapid development of point cloud segmentation, existing frameworks still face several challenges.

8.1 Datasets and representations

The size of annotated point cloud data is still limited compared to that of image datasets. Although acquiring point clouds has become affordable, annotating point clouds is still a time-consuming task. Since both fully supervised learning and pre-training [99, 100] require a large amount of data, larger datasets with more diverse scenes are desired to advance learning-based point cloud segmentation. Therefore, an efficient and user-friendly annotation method for large datasets is needed. This might be achieved by unsupervised approaches with geometric priors. Recently developed datasets, such as ScanNet200 [12], have drawn increasing attention to imbalanced learning [101] in point cloud segmentation.

Existing point cloud segmentation methods use different data formats, including point clouds, RGB-D images, voxels, and geometric primitives. Each data format has its advantages and drawbacks in different 3D scene understanding tasks. On the basis of point-based networks, we can now directly process point clouds for training and reasoning. However, not all points are needed for scene perception. For indoor scene point cloud data, finding a better representation is still a promising direction of research.

8.2 Data efficiency and multi-modality

Data-efficient learning frameworks are highly desirable because they alleviate the burden of collecting extensive dense annotations for training the model. Although current weakly supervised point cloud segmentation methods can achieve competitive performance with fully supervised learning, there are still gaps to be filled. More importantly, the generality and robustness of these data-efficient methods are not convincing, as they are mainly tested on public datasets of limited size rather than on open-world scenes. Therefore, further exploration of generalist models is the trend for the future.

One promising route is to integrate other modalities, such as images and natural language. Previous works [37, 102, 103] have explored the combination of 2D images and 3D point clouds for better understanding of scenes. Recent developments in foundation models of 2D vision and NLP have inspired the investigation of multi-modalities in 3D data [12, 91–98]. While these methods achieve impressive results in different 3D tasks, adapting knowledge from other modalities to indoor point cloud segmentation is still challenging. In addition, collecting adequate multi-modal pre-training data can be costly. How to facilitate and adapt multi-modalities with point clouds for a better understanding of indoor scenes is worth exploring.

8.3 Learning methods for dynamic scene segmentation

Current learning-based indoor point cloud segmentation methods are mostly designed for static scenes. Indoor objects can be moved around in real-world scenarios, allowing for a more comprehensive representation of the indoor scene. Moreover, annotating such dynamic scenes is even more costly than annotating 3D point clouds. 4D representation learning has become the core of dynamic feature exploitation. Recent work [104, 105] has explored 4D feature extraction and distillation to improve downstream tasks such as scene segmentation. Transferring such information to varying scales of indoor scenes is still challenging. The development of learning methods for dynamic scene segmentation is an interesting prospect for further investigation.

9 Conclusion

Point cloud segmentation plays a key role in 3D vision and intelligence. This paper aims to provide a concise overview of point cloud segmentation techniques for understanding 3D indoor scenes. First, we present public 3D point cloud datasets, which are the foundation of point cloud segmentation research, especially for deep learning-based methods. Second, we review representative approaches for indoor scene point cloud segmentation, including geometry-based and learning-based methods. Geometry-based approaches extract geometric information and can be combined with learning-based methods. Learning-based methods can be divided into structured data-based and point-based methods. We mainly consider point-based semantic and instance segmentation frameworks, including fully supervised networks and weakly supervised networks. Finally, we discuss the open problems in the field and outline future research directions. We expect that this review can provide insights into the field of indoor scene point cloud segmentation and stimulate new research.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Abbreviations

- CAD: computer-aided design
- CNN: convolutional neural network
- GCN: graph convolutional network
- MLP: multi-layer perceptron
- NLP: natural language processing
- RANSAC: random sample consensus
- SPG: superpoint graph

References

Rusu, R. B., Marton, Z. C., Blodow, N., Dolha, M. E., & Beetz, M. (2008). Towards 3D point cloud based object maps for household environments. Robotics and Autonomous Systems, 56(11), 927–941.

Zhu, Y., Mottaghi, R., Kolve, E., Lim, J. J., Gupta, A., Li, F., et al. (2017). Target-driven visual navigation in indoor scenes using deep reinforcement learning. In Proceedings of the IEEE international conference on robotics and automation (pp. 3357–3364). Piscataway: IEEE.

Wirth, F., Quehl, J., Ota, J., & Stiller, C. (2019). Pointatme: efficient 3D point cloud labeling in virtual reality. In Proceedings of the 2019 IEEE intelligent vehicles symposium (pp. 1693–1698). Piscataway: IEEE.

Li, J., Gao, W., Wu, Y., Liu, Y., & Shen, Y. (2022). High-quality indoor scene 3D reconstruction with RGB-D cameras: a brief review. Computational Visual Media, 8(3), 369–393.

Nguyen, A., & Le, B. (2013). 3D point cloud segmentation: a survey. In Proceedings of the 6th IEEE conference on robotics, automation and mechatronics (pp. 225–230). Piscataway: IEEE.

Long, J., Shelhamer, E., & Darrell, T. (2015). Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3431–3440). Piscataway: IEEE.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778). Piscataway: IEEE.

He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2017). Mask R-CNN. In Proceedings of the IEEE international conference on computer vision (pp. 2961–2969). Piscataway: IEEE.

Guo, Y., Wang, H., Hu, Q., Liu, H., Liu, L., & Bennamoun, M. (2020). Deep learning for 3D point clouds: a survey. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(12), 4338–4364.

Dai, A., Chang, A. X., Savva, M., Halber, M., Funkhouser, T., & Nießner, M. (2017). ScanNet: richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5828–5839). Piscataway: IEEE.

Armeni, I., Sener, O., Zamir, A. R., Jiang, H., Brilakis, I., Fischer, M., et al. (2016). 3D semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1534–1543). Piscataway: IEEE.

Rozenberszki, D., Litany, O., & Dai, A. (2022). Language-grounded indoor 3D semantic segmentation in the wild. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 125–141). Cham: Springer.

Anand, A., Koppula, H. S., Joachims, T., & Saxena, A. (2013). Contextually guided semantic labeling and search for three-dimensional point clouds. The International Journal of Robotics Research, 32(1), 19–34.

Lai, K., Bo, L., & Fox, D. (2014). Unsupervised feature learning for 3D scene labeling. In Proceedings of the IEEE international conference on robotics and automation (pp. 3050–3057). Piscataway: IEEE.

Mattausch, O., Panozzo, D., Mura, C., Sorkine-Hornung, O., & Pajarola, R. (2014). Object detection and classification from large-scale cluttered indoor scans. Computer Graphics Forum, 33(2), 11–21.

Hu, S.-M., Cai, J.-X., & Lai, Y.-K. (2018). Semantic labeling and instance segmentation of 3D point clouds using patch context analysis and multiscale processing. IEEE Transactions on Visualization and Computer Graphics, 26(7), 2485–2498.

Nan, L., Xie, K., & Sharf, A. (2012). A search-classify approach for cluttered indoor scene understanding. ACM Transactions on Graphics, 31(6), 1–10.

Li, Y., Dai, A., Guibas, L., & Nießner, M. (2015). Database-assisted object retrieval for real-time 3D reconstruction. Computer Graphics Forum, 34(2), 435–446.

Shi, Y., Long, P., Xu, K., Huang, H., & Xiong, Y. (2016). Data-driven contextual modeling for 3D scene understanding. Computers & Graphics, 55, 55–67.

Fischler, M. A., & Bolles, R. C. (1981). Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Communications of the ACM, 24(6), 381–395.

Monszpart, A., Mellado, N., Brostow, G. J., & Mitra, N. J. (2015). RAPter: rebuilding man-made scenes with regular arrangements of planes. ACM Transactions on Graphics, 34(4), 1–12.

Sun, Y., Miao, Y., Yu, L., & Renato, P. (2018). Abstraction and understanding of indoor scenes from single-view RGB-D scanning data. Journal of Computer-Aided Design & Computer Graphics, 30(6), 1046–1054.

Yu, L., Sun, Y., Zhang, X., Miao, Y., & Zhang, X. (2021). Point cloud instance segmentation of indoor scenes using learned pairwise patch relations. IEEE Access, 9, 15891–15901.

Huang, S.-S., Ma, Z.-Y., Mu, T.-J., Fu, H., & Hu, S.-M. (2021). Supervoxel convolution for online 3D semantic segmentation. ACM Transactions on Graphics, 40(3), 1–15.

Landrieu, L., & Simonovsky, M. (2018). Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4558–4567). Piscataway: IEEE.

Cheng, M., Hui, L., Xie, J., Yang, J., & Kong, H. (2020). Cascaded non-local neural network for point cloud semantic segmentation. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 8447–8452). Piscataway: IEEE.

Deng, S., Dong, Q., Liu, B., & Hu, Z. (2022). Superpoint-guided semi-supervised semantic segmentation of 3D point clouds. In Proceedings of the international conference on robotics and automation (pp. 9214–9220). Piscataway: IEEE.

Shi, X., Xu, X., Chen, K., Cai, L., Foo, C. S., & Jia, K. (2021). Label-efficient point cloud semantic segmentation: an active learning approach. arXiv preprint. arXiv:2101.06931.

Cheng, M., Hui, L., Xie, J., & Yang, J. (2021). SSPC-Net: semi-supervised semantic 3D point cloud segmentation network. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 1140–1147). Palo Alto: AAAI Press.

Liu, Z., Qi, X., & Fu, C.-W. (2021). One thing one click: a self-training approach for weakly supervised 3D semantic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1726–1736). Piscataway: IEEE.

Du, H., Yu, X., Hussain, F., Armin, M. A., Petersson, L., & Li, W. (2023). Weakly-supervised point cloud instance segmentation with geometric priors. In Proceedings of IEEE/CVF winter conference on applications of computer vision (pp. 4271–4280). Piscataway: IEEE.

Su, H., Maji, S., Kalogerakis, E., & Learned-Miller, E. (2015). Multi-view convolutional neural networks for 3D shape recognition. In Proceedings of the IEEE international conference on computer vision (pp. 945–953). Piscataway: IEEE.

Boulch, A., Guerry, J., Le Saux, B., & Audebert, N. (2018). SnapNet: 3D point cloud semantic labeling with 2D deep segmentation networks. Computers & Graphics, 71, 189–198.

Maturana, D., & Scherer, S. (2015). VoxNet: a 3D convolutional neural network for real-time object recognition. In Proceedings of the IEEE/RSJ international conference on intelligent robots and systems (pp. 922–928). Piscataway: IEEE.

Meng, H.-Y., Gao, L., Lai, Y.-K., & Manocha, D. (2019). VV-Net: voxel VAE net with group convolutions for point cloud segmentation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 8500–8508). Piscataway: IEEE.

Tchapmi, L., Choy, C., Armeni, I., Gwak, J., & Savarese, S. (2017). SEGCloud: semantic segmentation of 3D point clouds. In Proceedings of international conference on 3D vision (pp. 537–547). Piscataway: IEEE.

Hou, J., Dai, A., & Nießner, M. (2019). 3D-SIS: 3D semantic instance segmentation of RGB-D scans. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4421–4430). Piscataway: IEEE.

Riegler, G., Osman Ulusoy, A., & Geiger, A. (2017). Octnet: learning deep 3D representations at high resolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3577–3586). Piscataway: IEEE.

Wang, P.-S., Liu, Y., Guo, Y.-X., Sun, C.-Y., & Tong, X. (2017). O-CNN: octree-based convolutional neural networks for 3D shape analysis. ACM Transactions on Graphics, 36(4).

Wang, P.-S. (2023). OctFormer: octree-based transformers for 3D point clouds. ACM Transactions on Graphics, 42(4), 1–11.

Qi, C. R., Su, H., Mo, K., & Guibas, L. J. (2017). Pointnet: deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 652–660). Piscataway: IEEE.

Qi, C. R., Yi, L., Su, H., & Guibas, L. J. (2017). Pointnet++: deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 30th international conference on neural information processing systems (pp. 5099–5108). Red Hook: Curran Associates.

Hu, Q., Yang, B., Xie, L., Rosa, S., Guo, Y., Wang, Z., et al. (2020). RandLA-Net: efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 11108–11117). Piscataway: IEEE.

Li, Y., Bu, R., Sun, M., Wu, W., Di, X., & Chen, B. (2018). PointCNN: convolution on x-transformed points. In Proceedings of the 31st international conference on neural information processing systems (pp. 828–838). Red Hook: Curran Associates.

Te, G., Hu, W., Zheng, A., & Guo, Z. (2018). RGCNN: regularised graph CNN for point cloud segmentation. In Proceedings of the 26th ACM international conference on multimedia (pp. 746–754). New York: ACM.

Wang, Y., Sun, Y., Liu, Z., Sarma, S. E., Bronstein, M. M., & Solomon, J. M. (2019). Dynamic graph CNN for learning on point clouds. ACM Transactions on Graphics, 38(5).

Guo, M.-H., Cai, J.-X., Liu, Z.-N., Mu, T.-J., Martin, R. R., & Hu, S.-M. (2021). PCT: point cloud transformer. Computational Visual Media, 7(2), 187–199.

Zhao, H., Jiang, L., Jia, J., Torr, P. H., & Koltun, V. (2021). Point transformer. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 16259–16268). Piscataway: IEEE.

Wu, X., Lao, Y., Jiang, L., Liu, X., & Zhao, H. (2022). Point transformer v2: grouped vector attention and partition-based pooling. In Proceedings of the 35th international conference on neural information processing systems (pp. 33330–33342). Red Hook: Curran Associates.

Wang, W., Yu, R., Huang, Q., & Neumann, U. (2018). SGPN: similarity group proposal network for 3D point cloud instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2569–2578). Piscataway: IEEE.

Yang, J., Zhang, Q., Ni, B., Li, L., Liu, J., Zhou, M., et al. (2019). Modeling point clouds with self-attention and Gumbel subset sampling. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3323–3332). Piscataway: IEEE.

Zhao, H., Jiang, L., Fu, C.-W., & Jia, J. (2019). PointWeb: enhancing local neighborhood features for point cloud processing. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 5565–5573). Piscataway: IEEE.

Yi, L., Zhao, W., Wang, H., Sung, M., & Guibas, L. J. (2019). GSPN: generative shape proposal network for 3D instance segmentation in point cloud. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 3947–3956). Piscataway: IEEE.

Wang, X., Liu, S., Shen, X., Shen, C., & Jia, J. (2019). Associatively segmenting instances and semantics in point clouds. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4096–4105). Piscataway: IEEE.

Thomas, H., Qi, C. R., Deschaud, J.-E., Marcotegui, B., Goulette, F., & Guibas, L. J. (2019). KPConv: flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 6411–6420). Piscataway: IEEE.

Yang, B., Wang, J., Clark, R., Hu, Q., Wang, S., Markham, A., et al. (2019). Learning object bounding boxes for 3D instance segmentation on point clouds. In Proceedings of the 32nd international conference on neural information processing systems (pp. 6737–6746). Red Hook: Curran Associates.

Engelmann, F., Bokeloh, M., Fathi, A., Leibe, B., & Nießner, M. (2020). 3D-MPA: multi-proposal aggregation for 3D semantic instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9031–9040). Piscataway: IEEE.

Jiang, L., Zhao, H., Shi, S., Liu, S., Fu, C.-W., & Jia, J. (2020). PointGroup: dual-set point grouping for 3D instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4867–4876). Piscataway: IEEE.

Wei, J., Lin, G., Yap, K.-H., Hung, T.-Y., & Xie, L. (2020). Multi-path region mining for weakly supervised 3D semantic segmentation on point clouds. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4384–4393). Piscataway: IEEE.

Sun, Y., Miao, Y., Chen, J., & Pajarola, R. (2020). PGCNet: patch graph convolutional network for point cloud segmentation of indoor scenes. The Visual Computer, 36(10), 2407–2418.

Zhang, Y., Li, Z., Xie, Y., Qu, Y., Li, C., & Mei, T. (2021). Weakly supervised semantic segmentation for large-scale point cloud. In Proceedings of the 33rd AAAI conference on artificial intelligence (pp. 3421–3429). Palo Alto: AAAI Press.

He, T., Shen, C., & van den Hengel, A. (2021). DyCo3D: robust instance segmentation of 3D point clouds through dynamic convolution. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 354–363). Piscataway: IEEE.

Zhang, Y., Qu, Y., Xie, Y., Li, Z., Zheng, S., & Li, C. (2021). Perturbed self-distillation: weakly supervised large-scale point cloud semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 15520–15528). Piscataway: IEEE.

Liang, Z., Li, Z., Xu, S., Tan, M., & Jia, K. (2021). Instance segmentation in 3D scenes using semantic superpoint tree networks. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 2783–2792). Piscataway: IEEE.

Lai, X., Liu, J., Jiang, L., Wang, L., Zhao, H., Liu, S., et al. (2022). Stratified transformer for 3D point cloud segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8500–8509). Piscataway: IEEE.

Li, M., Xie, Y., Shen, Y., Ke, B., Qiao, R., Ren, B., et al. (2022). HybridCR: weakly-supervised 3D point cloud semantic segmentation via hybrid contrastive regularization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 14930–14939). Piscataway: IEEE.

Vu, T., Kim, K., Luu, T. M., Nguyen, T., & Yoo, C. D. (2022). Softgroup for 3D instance segmentation on point clouds. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2708–2717). Piscataway: IEEE.

Tao, A., Duan, Y., Wei, Y., Lu, J., & Zhou, J. (2022). SegGroup: seg-level supervision for 3D instance and semantic segmentation. IEEE Transactions on Image Processing, 31, 4952–4965.

Hu, Q., Yang, B., Fang, G., Guo, Y., Leonardis, A., Trigoni, N., et al. (2022). SQN: weakly-supervised semantic segmentation of large-scale 3D point clouds. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 600–619). Cham: Springer.

Tang, L., Hui, L., & Xie, J. (2022). Learning inter-superpoint affinity for weakly supervised 3D instance segmentation. In Proceedings of the 16th Asian conference on computer vision (Vol. 13841, pp. 176–192). Cham: Springer.

Schult, J., Engelmann, F., Hermans, A., Litany, O., Tang, S., & Leibe, B. (2023). Mask3D: mask transformer for 3D semantic instance segmentation. In Proceedings of the IEEE international conference on robotics and automation (pp. 8216–8223). Piscataway: IEEE.

Wang, L., Huang, Y., Hou, Y., Zhang, S., & Shan, J. (2019). Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10296–10305). Piscataway: IEEE.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., et al. (2017). Attention is all you need. In Proceedings of the 30th international conference on neural information processing systems. Red Hook: Curran Associates.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., et al. (2020). An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint. arXiv:2010.11929.

Xu, X., & Lee, G. H. (2020). Weakly supervised semantic point cloud segmentation: towards 10x fewer labels. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 13706–13715). Piscataway: IEEE.

Wu, Z., Wu, Y., Lin, G., Cai, J., & Qian, C. (2022). Dual adaptive transformations for weakly supervised point cloud segmentation. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 78–96). Cham: Springer.

Jiang, L., Shi, S., Tian, Z., Lai, X., Liu, S., Fu, C.-W., et al. (2021). Guided point contrastive learning for semi-supervised point cloud semantic segmentation. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 6423–6432). Piscataway: IEEE.

Wu, Y., Cai, S., Yan, Z., Li, G., Yu, Y., Han, X., et al. (2022). PointMatch: a consistency training framework for weakly supervised semantic segmentation of 3D point clouds. arXiv preprint. arXiv:2202.10705.

Liu, G., van Kaick, O., Huang, H., & Hu, R. (2022). Active self-training for weakly supervised 3D scene semantic segmentation. arXiv preprint. arXiv:2209.07069.

Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You only look once: unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 779–788). Piscataway: IEEE.

Liang, X., Lin, L., Wei, Y., Shen, X., Yang, J., & Yan, S. (2017). Proposal-free network for instance-level object segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(12), 2978–2991.

Liu, S.-H., Yu, S.-Y., Wu, S.-C., Chen, H.-T., & Liu, T.-L. (2020). Learning Gaussian instance segmentation in point clouds. arXiv preprint. arXiv:2007.09860.

Zhao, L., & Tao, W. (2020). JSNet: joint instance and semantic segmentation of 3D point clouds. In Proceedings of the 32nd AAAI conference on artificial intelligence (pp. 12951–12958). Palo Alto: AAAI Press.

Pham, Q.-H., Nguyen, T., Hua, B.-S., Roig, G., & Yeung, S.-K. (2019). JSIS3D: joint semantic-instance segmentation of 3D point clouds with multi-task pointwise networks and multi-value conditional random fields. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8827–8836). Piscataway: IEEE.

Liang, Z., Yang, M., Li, H., & Wang, C. (2020). 3D instance embedding learning with a structure-aware loss function for point cloud segmentation. IEEE Robotics and Automation Letters, 5(3), 4915–4922.

Sun, J., Qing, C., Tan, J., & Xu, X. (2023). Superpoint transformer for 3D scene instance segmentation. In Proceedings of the 37th AAAI conference on artificial intelligence (pp. 2393–2401). Palo Alto: AAAI Press.

Liao, Y., Zhu, H., Zhang, Y., Ye, C., Chen, T., & Fan, J. (2021). Point cloud instance segmentation with semi-supervised bounding-box mining. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12), 10159–10170.

Hou, J., Graham, B., Nießner, M., & Xie, S. (2021). Exploring data-efficient 3D scene understanding with contrastive scene contexts. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 15587–15597). Piscataway: IEEE.

Liu, Z., Qi, X., & Fu, C.-W. (2023). One Thing One Click++: self-training for weakly supervised 3D scene understanding. arXiv preprint. arXiv:2303.14727.

Zhang, Z., Ding, J., Jiang, L., Dai, D., & Xia, G.-S. (2023). FreePoint: unsupervised point cloud instance segmentation. arXiv preprint. arXiv:2305.06973.

Liu, M., Zhu, Y., Cai, H., Han, S., Ling, Z., Porikli, F., et al. (2023). PartSLIP: low-shot part segmentation for 3D point clouds via pretrained image-language models. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 21736–21746). Piscataway: IEEE.

Wang, Z., Cheng, B., Zhao, L., Xu, D., Tang, Y., & Sheng, L. (2023). VL-SAT: visual-linguistic semantics assisted training for 3D semantic scene graph prediction in point cloud. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 21560–21569). Piscataway: IEEE.

Xue, L., Gao, M., Xing, C., Martín-Martín, R., Wu, J., Xiong, C., et al. (2023). ULIP: learning a unified representation of language, images, and point clouds for 3D understanding. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1179–1189). Piscataway: IEEE.

Ding, R., Yang, J., Xue, C., Zhang, W., Bai, S., & Qi, X. (2023). PLA: language-driven open-vocabulary 3D scene understanding. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7010–7019). Piscataway: IEEE.

Zeng, Y., Jiang, C., Mao, J., Han, J., Ye, C., Huang, Q., et al. (2023). CLIP2: contrastive language-image-point pretraining from real-world point cloud data. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 15244–15253). Piscataway: IEEE.

Lu, Y., Xu, C., Wei, X., Xie, X., Tomizuka, M., Keutzer, K., et al. (2023). Open-vocabulary point-cloud object detection without 3D annotation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1190–1199). Piscataway: IEEE.

Peng, S., Genova, K., Jiang, C., Tagliasacchi, A., Pollefeys, M., Funkhouser, T., et al. (2023). Openscene: 3D scene understanding with open vocabularies. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 815–824). Piscataway: IEEE.

Zhang, J., Fan, G., Wang, G., Su, Z., Ma, K., & Yi, L. (2023). Language-assisted 3D feature learning for semantic scene understanding. In Proceedings of the 37th AAAI conference on artificial intelligence (pp. 3445–3453). Palo Alto: AAAI Press.

Xie, S., Gu, J., Guo, D., Qi, C. R., Guibas, L., & Litany, O. (2020). PointContrast: unsupervised pre-training for 3D point cloud understanding. In A. Vedaldi, H. Bischof, T. Brox, et al. (Eds.), Proceedings of the 16th European conference on computer vision (pp. 574–591). Cham: Springer.

Yang, Y.-Q., Guo, Y.-X., Xiong, J.-Y., Liu, Y., Pan, H., Wang, P.-S., et al. (2023). Swin3D: a pretrained transformer backbone for 3D indoor scene understanding. arXiv preprint. arXiv:2304.06906.

Zhong, Z., Cui, J., Yang, Y., Wu, X., Qi, X., Zhang, X., et al. (2023). Understanding imbalanced semantic segmentation through neural collapse. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 19550–19560). Piscataway: IEEE.

Yu, P.-C., Sun, C., & Sun, M. (2022). Data efficient 3D learner via knowledge transferred from 2D model. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 182–198). Cham: Springer.

Kweon, H., & Yoon, K.-J. (2022). Joint learning of 2D-3D weakly supervised semantic segmentation. In Proceedings of the 35th international conference on neural information processing systems (pp. 30499–30511). Red Hook: Curran Associates.

Chen, Y., Nießner, M., & Dai, A. (2022). 4DContrast: contrastive learning with dynamic correspondences for 3D scene understanding. In S. Avidan, G. J. Brostow, M. Cissé, et al. (Eds.), Proceedings of the 17th European conference on computer vision (pp. 543–560). Cham: Springer.

Zhang, Z., Dong, Y., Liu, Y., & Yi, L. (2023). Complete-to-partial 4D distillation for self-supervised point cloud sequence representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 17661–17670). Piscataway: IEEE.

Acknowledgements

The authors express their gratitude to the anonymous reviewers and the editor for their invaluable feedback, which greatly improved the quality of this manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 61972458) and Zhejiang Provincial Natural Science Foundation of China (No. LZ23F020002).

Author information

Contributions

All authors contributed to the idea for the article. Literature search and analysis were performed by YS. YS prepared the manuscript initially, and all authors participated in the comment and modification of the paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, Y., Zhang, X. & Miao, Y. A review of point cloud segmentation for understanding 3D indoor scenes. Vis. Intell. 2, 14 (2024). https://doi.org/10.1007/s44267-024-00046-x
