Abstract

Objective

The objective of this study was to assess the validity of the Cornerstone Diabetes Simulation (CDS), a Microsoft Excel®-based patient-level simulation for type 2 diabetes mellitus based on risk equations from the revised United Kingdom Prospective Diabetes Study Outcomes Model (UKPDS-OM2, also known as UKPDS 82).

Methods

Three levels of validation were conducted. Internal validation was assessed through independent review and model stress-testing. External validation was addressed by populating the CDS with baseline characteristics and treatment effects from three major diabetes clinical trials used in the Fifth Mount Hood Diabetes Challenge (MH5) for computer simulation models. Cross-validation of predicted outcomes was tested against eight models that participated in the MH5. Simulated results were compared with observed clinical outcomes via the coefficient of determination (R2) for both the absolute risk of each clinical outcome and the difference in absolute risk between the control and intervention arms in each trial. All model inputs and assumptions were reported transparently.

Results

The CDS could be used to predict 18 of 39 single and composite endpoints across the three trials. The model obtained an R2 of 0.637 for predicted versus observed absolute risks, and an R2 of 0.442 for predicted versus observed risk differences between control and intervention. Among the other eight models, only one obtained a higher R2 value under both definitions, albeit based on only four predicted endpoints.

Conclusions

The CDS provides good predictions of diabetes-related complications when compared to observed trial outcomes and previously validated models. The model has value as a validated tool in cost-effectiveness evaluations.

1 Introduction

Disease modeling is commonly employed to evaluate the clinical and economic outcomes associated with new medical interventions and provides useful information for decision makers seeking to optimize the allocation of limited health resources. In chronic and progressive diseases such as type 2 diabetes mellitus (T2DM), modeling is particularly helpful for extrapolating from clinical trials of typically short duration to the long-term clinical outcomes and costs of experimental interventions. Over the years, a variety of diabetes simulation models have been developed, and since 1999 the Mount Hood Diabetes Challenge Network has organized a meeting bi-annually to compare the structure and performance of participating health economic diabetes simulation models [1].

Health economic models need to gain the confidence of users for model predictions to be accepted in healthcare decision-making. The International Society for Pharmacoeconomics and Outcomes Research (ISPOR) and the Society for Medical Decision Making (SMDM) have jointly published recommendations for validation of health economic models [2]. The recommendations emphasize face validity (i.e., the model design, assumptions, data sources, and results are judged to be sound by experts), internal validity (i.e., model coding is correctly implemented), cross-validity (i.e., model predictions are similar to those of other models analyzing the same problem), external validity (i.e., model results are consistent with actual real-world outcomes), and predictive validity (i.e., model predictions are consistent with outcomes observed in prospective clinical studies), with the latter two highlighted as the strongest forms of validation.

Risk equations developed by the United Kingdom Prospective Diabetes Study (UKPDS) have been widely adopted by various diabetes modeling groups [3, 4]. Compared to the original UKPDS Outcomes Model (UKPDS-OM1), the latest, revised UKPDS Outcomes Model (UKPDS-OM2, also known as UKPDS 82) [5] risk equations incorporate a larger set of clinical risk factors as input to provide predictions for a greater number of outcomes for T2DM. The Cornerstone Diabetes Simulation (CDS) provides a user-friendly, Microsoft Excel®-based interface that implements the UKPDS-OM2 risk equations to predict clinical and cost outcomes associated with experimental treatments for T2DM. A unique feature of the CDS is that all calculations are implemented within a single Microsoft Excel® file, providing the user full transparency in terms of reviewing the model calculations as well as maximum flexibility in terms of changing all model input values independently. However, as with any new implementation of simulation models, it is important to demonstrate the predictive validity of the CDS for potential users seeking to adopt this model in health economic evaluations.

This analysis compared predictions by the CDS and those by eight well-established diabetes simulation models that participated in the Fifth Mount Hood Diabetes Challenge (MH5) in 2010 against actual outcomes observed in three major prospective diabetes clinical trials that were selected for the challenge [1]. This analysis thus allows assessment of both the cross-validity and external validity of the CDS.

2 Methods

2.1 Model Overview

The CDS is an individual patient-level Monte Carlo simulation model developed to estimate the long-term health outcomes and economic consequences of managing patients with T2DM. The model was programmed in Microsoft Excel® 2010. It uses risk equations from the UKPDS-OM2 [5] to estimate the incidence of diabetes complications and all-cause mortality in a cohort of patients with T2DM in 1-year time increments over a user-defined time horizon (maximum: 100 years). The outcomes predicted by the UKPDS-OM2 risk equations and their predictive risk factors (i.e., input variables) are summarized in Electronic Supplementary Material (ESM) Appendix Tables A1–A3. The CDS is designed to evaluate a control arm and an intervention arm, each of which consists of up to three lines of therapy. Each line of therapy may have a treatment effect that reduces glycated hemoglobin (HbA1C, hereafter A1C), body mass index (BMI), low-density lipoprotein (LDL) cholesterol, and/or systolic blood pressure (SBP). Switching to the next line of therapy is triggered by user-prespecified thresholds for A1C and/or time on therapy. The model allows the user to independently specify baseline values and time-path assumptions for all patient characteristics, treatment sequences and effects, and treatment-switching thresholds. In addition to predicting the cumulative incidence of diabetes-related complications and mortality over the user-specified time horizon, the model allows the user to specify cost and health utility inputs to generate cost-effectiveness outputs, including life-years gained, quality-adjusted life-years (QALYs), and the incremental cost-effectiveness ratio (ICER). A model structure diagram is presented in Fig. 1.
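To make the 1-year cycle logic concrete, the sketch below shows one common way a UKPDS-style parametric risk equation can be turned into an annual event probability and sampled in a Monte Carlo loop. It assumes a Weibull cumulative hazard H(t) = exp(lambda + x'beta) * t^rho and the conditional annual probability 1 - exp[H(t) - H(t+1)]. The function names, the single fixed linear predictor, and the example inputs are illustrative assumptions, not the CDS's Excel formulas or the published UKPDS-OM2 coefficients; in the actual model, risk factors (and therefore the linear predictor) are updated every cycle.

```python
import math
import random
from typing import Optional


def weibull_cumulative_hazard(t: float, linear_predictor: float, rho: float) -> float:
    """Weibull cumulative hazard H(t) = exp(linear_predictor) * t**rho."""
    return math.exp(linear_predictor) * t ** rho


def annual_event_probability(t: int, linear_predictor: float, rho: float) -> float:
    """Probability of an event in the cycle (t, t + 1], given no event by time t."""
    h_start = weibull_cumulative_hazard(t, linear_predictor, rho)
    h_end = weibull_cumulative_hazard(t + 1, linear_predictor, rho)
    return 1.0 - math.exp(h_start - h_end)


def simulate_first_event_cycle(
    linear_predictor: float, rho: float, horizon: int, rng: random.Random
) -> Optional[int]:
    """Return the 1-based cycle of the first event, or None if none occurs."""
    for t in range(horizon):
        # One uniform draw per patient per cycle decides whether the event occurs.
        if rng.random() < annual_event_probability(t, linear_predictor, rho):
            return t + 1
    return None


def cumulative_incidence(
    n_patients: int, linear_predictor: float, rho: float, horizon: int, seed: int = 2024
) -> float:
    """Share of a simulated cohort with at least one event within the horizon."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    events = sum(
        simulate_first_event_cycle(linear_predictor, rho, horizon, rng) is not None
        for _ in range(n_patients)
    )
    return events / n_patients


if __name__ == "__main__":
    # Hypothetical inputs: constant hazard of 0.02/year (rho = 1), 10-year horizon.
    lp = math.log(0.02)
    print(cumulative_incidence(20_000, lp, 1.0, 10))
```

With rho = 1 the hazard is constant, so the simulated share should approach the analytic value 1 - exp(-0.2), roughly 0.181, as the cohort grows.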

2.2 Validation

As suggested by the American Diabetes Association (ADA) guidelines for diabetes computer modeling [6], the CDS underwent an exhaustive validation exercise conducted in line with best validation practices [2, 6].

2.2.1 Internal Validation

The model was independently reviewed by LA, DTG, and LS, who were chosen for their expertise in health economic modeling and diabetes treatment outcomes. The reviewers assessed the validity of the CDS model assumptions and calculations; their final recommendations were discussed and incorporated into the final version. ZTS and SB completed model stress-testing, which included logic checks with extreme input values (e.g., hazard ratios [HRs] set to 1 or to extreme values, percentages set to 0% or 100%, and costs set to $0) and checks that varying input values changed the results in the direction expected on clinical grounds. To verify the coding of the UKPDS-OM2 risk equations, diabetes complications and all-cause mortality simulated by the CDS for the UKPDS population over 25 years were compared with predictions by the UKPDS-OM2 and with the outcomes observed in the UKPDS trial [5].
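Logic checks of this kind lend themselves to automated tests. The sketch below applies extreme-value and direction-of-effect checks to two hypothetical helper functions: one applies an HR to an annual probability on the rate scale (p' = 1 - (1 - p)^HR, a standard conversion assumed here rather than taken from the CDS), and the other sums discounted annual costs. Neither function is part of the CDS; they stand in for any model component that such checks would target.

```python
import math
from typing import Sequence


def apply_hazard_ratio(annual_prob: float, hazard_ratio: float) -> float:
    """Scale an annual event probability by a hazard ratio on the rate scale.

    r = -ln(1 - p); p' = 1 - exp(-r * HR), which simplifies to 1 - (1 - p) ** HR.
    """
    if not 0.0 <= annual_prob <= 1.0:
        raise ValueError("annual_prob must lie in [0, 1]")
    if hazard_ratio < 0.0:
        raise ValueError("hazard_ratio must be non-negative")
    return 1.0 - (1.0 - annual_prob) ** hazard_ratio


def discounted_total(annual_values: Sequence[float], discount_rate: float) -> float:
    """Sum yearly values discounted to year 0 (the first value is undiscounted)."""
    return sum(v / (1.0 + discount_rate) ** year for year, v in enumerate(annual_values))


def run_logic_checks() -> bool:
    base = 0.05
    # HR = 1 must leave the probability unchanged.
    assert math.isclose(apply_hazard_ratio(base, 1.0), base)
    # Boundary percentages (0% and 100%) must stay at their boundaries.
    assert apply_hazard_ratio(0.0, 2.5) == 0.0
    assert apply_hazard_ratio(1.0, 0.5) == 1.0
    # Direction of effect: HR > 1 raises risk, HR < 1 lowers it.
    assert apply_hazard_ratio(base, 1.5) > base
    assert apply_hazard_ratio(base, 0.7) < base
    # Monotonic response across a sweep that includes extreme HRs.
    sweep = [apply_hazard_ratio(base, hr) for hr in (0.0, 0.5, 1.0, 2.0, 10.0)]
    assert sweep == sorted(sweep)
    # Costs set to $0 must give a total of $0 at any discount rate.
    assert discounted_total([0.0] * 10, 0.035) == 0.0
    # A higher discount rate must not increase a stream of positive costs.
    costs = [1000.0] * 10
    assert discounted_total(costs, 0.05) < discounted_total(costs, 0.0)
    return True


if __name__ == "__main__":
    print("all logic checks passed:", run_logic_checks())
```

Encoding each check as an assertion means it can be rerun whenever formulas change, rather than being repeated by hand.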

2.2.2 External Validation

Three landmark prospective diabetes clinical trials selected for the MH5 [1] were used to assess the external and predictive validity of the CDS. Specifically, we simulated the lipid-lowering intervention from the ASPEN (Atorvastatin Study for Prevention of Coronary Heart Disease Endpoints in Non-Insulin-Dependent Diabetes Mellitus) trial [7], the blood glucose-lowering intervention from the ADVANCE (Action in Diabetes and Vascular Disease: Preterax and Diamicron Modified Release Controlled Evaluation) trial [8], and two interventions from the ACCORD (Action to Control Cardiovascular Risk in Diabetes) trial: blood pressure lowering (ACCORD BP) [9] and blood glucose lowering (ACCORD BG) [10]. A total of eight diabetes simulation models participated in the MH5 and provided predictions for endpoints in these trials [1]; the cross-validity of the CDS could thus be assessed by comparing its predictions with those of the other eight models. Because event definitions in the UKPDS-OM2 risk equations (and hence in the CDS) differ from those used in the selected clinical trials, additional calculations, assumptions, and exclusions were required. ESM Appendix Table A4 lists the endpoints of the three trials and, for those that could be estimated by the CDS, the definitions and assumptions used.

To run the validation simulations, we populated the CDS separately for each arm (i.e., control and intervention) of each trial using the means and standard errors of the predictor variables reported by the trial. Where a trial did not report the baseline value of a risk factor required as input by the CDS, we used the reported baseline value of the same risk factor from another source with a similar patient population (LDS [Lipids in Diabetes Study] [11] or intermediate-risk patients in the THIN [The Health Improvement Network] database [12]). For each arm of each trial, the simulated cohort comprised 250,000 patients, and the simulated time horizon equaled the reported median follow-up duration. Because the CDS runs in fixed 1-year time increments, durations that were not a whole number of years were rounded up to the next whole year. The model simulated baseline values for each patient using seeded random numbers drawn from a uniform distribution between 0 and 1 (inclusive). For binary variables, the patient was assigned the characteristic if the random number drawn was less than or equal to the population proportion. For continuous variables, the baseline value was obtained by evaluating an inverse cumulative distribution function at the drawn random number: the inverse gamma cumulative distribution function for A1C and the inverse normal cumulative distribution function for all other variables. If a sampled value was negative, the model assigned the population mean to that simulated patient.
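The sampling scheme described above can be sketched as follows. It draws one seeded uniform number per patient and variable, assigns binary traits by comparison with the population proportion, and maps the draw through an inverse cumulative distribution function for continuous traits (gamma for A1C, normal otherwise), replacing negative values with the mean. Several details are assumptions not stated in the text: the gamma shape and scale are matched to the mean and dispersion by the method of moments; the inverse gamma CDF is computed by bisection on a series expansion of the regularized incomplete gamma function; draws are clamped slightly inside (0, 1) because an inverse CDF is unbounded at 0 and 1; and the baseline values in `SPECS` are hypothetical. The argument `spread` stands for whichever dispersion input is entered (the text reports using trial standard errors).

```python
import math
import random
from statistics import NormalDist

EPS = 1e-12  # keeps draws strictly inside (0, 1) for the inverse CDFs


def _clamp(u: float) -> float:
    return min(max(u, EPS), 1.0 - EPS)


def regularized_lower_gamma(a: float, x: float, tol: float = 1e-12) -> float:
    """P(a, x) = e^-x x^a / Gamma(a) * sum_n x^n / (a (a+1) ... (a+n))."""
    if x <= 0.0:
        return 0.0
    term = total = 1.0 / a
    n = 0
    while term > total * tol and n < 100_000:
        n += 1
        term *= x / (a + n)
        total += term
    return min(1.0, math.exp(-x + a * math.log(x) - math.lgamma(a)) * total)


def gamma_inv_cdf(p: float, mean: float, spread: float, iterations: int = 40) -> float:
    """Invert the gamma CDF by bisection (shape/scale by method of moments)."""
    shape = (mean / spread) ** 2
    scale = spread ** 2 / mean
    lo, hi = 0.0, mean + 10.0 * spread
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if regularized_lower_gamma(shape, mid / scale) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def sample_value(u: float, kind: str, mean: float, spread: float = 0.0) -> float:
    if kind == "binary":
        return 1.0 if u <= mean else 0.0  # here `mean` is the population proportion
    if kind == "gamma":
        return gamma_inv_cdf(_clamp(u), mean, spread)
    if kind == "normal":
        value = NormalDist(mean, spread).inv_cdf(_clamp(u))
        return mean if value < 0.0 else value  # negative draws fall back to the mean
    raise ValueError(f"unknown kind: {kind}")


def sample_cohort(n: int, specs: dict, seed: int = 12345) -> list:
    rng = random.Random(seed)  # seeded for reproducibility
    return [
        {name: sample_value(rng.random(), *spec) for name, spec in specs.items()}
        for _ in range(n)
    ]


# Hypothetical baseline inputs (not taken from any of the trials).
SPECS = {
    "female": ("binary", 0.40),
    "a1c": ("gamma", 7.5, 1.0),
    "sbp": ("normal", 135.0, 15.0),
}

if __name__ == "__main__":
    cohort = sample_cohort(1000, SPECS)
    print(sum(p["a1c"] for p in cohort) / len(cohort))
```

Because every draw comes from a seeded generator, rerunning with the same seed reproduces the same simulated cohort.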

For the time-paths of risk factors, where a trial reported the value of a risk factor at the end of the trial, we used either a linear or an asymptotic function to model the reported change in that risk factor over the trial follow-up period. Generally, a linear function was used for BMI and the proportion of smokers, and an asymptotic function for all other clinical variables, unless the source publication reported a time-path curve for a variable that appeared largely linear. The efficacy of the treatment under investigation was therefore captured through the time-paths of the risk factors (e.g., A1C, SBP). Risk factors whose change over the trial follow-up period was not reported were assumed to remain static over the simulation's time horizon. Baseline values, time-path assumptions, and references specific to the simulation of each clinical trial are presented in full in ESM Appendix Tables A5–A8.
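The text does not specify the functional forms, so the sketch below assumes them. The linear path interpolates from baseline to the reported end-of-trial value; the asymptotic path is taken to be a normalized exponential approach, v(t) = v0 + (v_end - v0) * (1 - exp(-k*t)) / (1 - exp(-k*T)), which changes quickly at first and reaches the reported value at the end of follow-up T. The rate k, the function names, and the A1C values are hypothetical. Values are held at the end-of-trial level after T; this does not matter for the validation runs, whose horizon equaled the follow-up duration.

```python
import math


def static_path(t: float, baseline: float) -> float:
    """Risk factors without a reported change stay at their baseline value."""
    return baseline


def linear_path(t: float, baseline: float, end_value: float, duration: float) -> float:
    """Straight-line change from baseline to end_value over [0, duration]."""
    frac = min(t, duration) / duration
    return baseline + (end_value - baseline) * frac


def asymptotic_path(
    t: float, baseline: float, end_value: float, duration: float, rate: float = 1.0
) -> float:
    """Exponential approach that reaches end_value exactly at t = duration."""
    t = min(t, duration)
    frac = (1.0 - math.exp(-rate * t)) / (1.0 - math.exp(-rate * duration))
    return baseline + (end_value - baseline) * frac


if __name__ == "__main__":
    # Hypothetical A1C falling from 8.0% to 7.0% over a 5-year trial.
    for year in range(6):
        print(year,
              round(linear_path(year, 8.0, 7.0, 5), 3),
              round(asymptotic_path(year, 8.0, 7.0, 5), 3))
```

Both paths start at the baseline and end at the reported value, so they differ only in how quickly the treatment effect accrues during follow-up.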

We compared the outcomes predicted by the CDS and by the eight models that participated in the MH5 with the observed outcomes reported in the trial publications. We assessed the goodness-of-fit of each diabetes model's predictions by presenting scatterplots of observed versus predicted outcomes and calculating the coefficient of determination (R2). R2 was evaluated both for the absolute risk of each clinical outcome and for the difference in absolute risk between the control and intervention arms of each clinical trial.
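Assuming, as the scatterplot caption in the Results indicates, that R2 comes from an ordinary least-squares line fitted to predicted versus observed values, it equals the squared Pearson correlation between the two. The sketch below computes the fitted slope, intercept, and R2 in that way; the function name `linear_fit` is illustrative.

```python
from typing import Sequence, Tuple


def linear_fit(
    observed: Sequence[float], predicted: Sequence[float]
) -> Tuple[float, float, float]:
    """OLS fit of predicted (y) on observed (x); returns (slope, intercept, R^2)."""
    n = len(observed)
    if n != len(predicted) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(observed) / n
    mean_y = sum(predicted) / n
    sxx = sum((x - mean_x) ** 2 for x in observed)
    syy = sum((y - mean_y) ** 2 for y in predicted)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(observed, predicted))
    if sxx == 0.0 or syy == 0.0:
        raise ValueError("R^2 is undefined when either variable is constant")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy * sxy / (sxx * syy)  # squared Pearson correlation
    return slope, intercept, r_squared
```

Because this R2 measures how well a straight line fits the points rather than their closeness to the 45-degree identity line, a model that overestimates every endpoint by the same factor can still score highly; the fitted slope and intercept, and the scatterplots themselves, are therefore a useful complement to the R2 values.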

3 Results

3.1 Internal Validation

Cumulative incidence of diabetes complications and all-cause mortality predicted by the CDS for the UKPDS population over 25 years was compared graphically with the incidence curves reported in the UKPDS-OM2 source publication [5]. The predicted curves closely matched the reported curves for all first-time events, including mortality (ESM Appendix Figure A1), even though baseline values for some predictor variables, and the individual patient data needed to inform the time-paths of predictor variables and censoring, were not publicly accessible. Given these data limitations, the observed differences were considered small enough to support the internal validity of the CDS.

3.2 External Validation

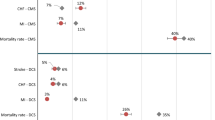

Figure 2 illustrates the relationship between observed versus predicted absolute risks of trial endpoints in control and intervention arms by the CDS and each of the diabetes simulation models that participated in the MH5. There were a total of 39 single and composite endpoints across the three clinical trials selected for the MH5. Overall, the CDS could be used to evaluate 18 of those endpoints. The R2 value between the predicted and observed absolute risks for the CDS was 0.637. Of the eight models that participated in the MH5, only two models achieved a higher R2 value: 0.724 for the Centers for Disease Control and Prevention-RTI Diabetes Cost-effectiveness Model (CDC-RTI), which predicted a total of 20 endpoints, and 0.733 for the UKPDS Risk Engine (UKPDS RE), which predicted only four endpoints (Table 1). The CDS had an improved fit compared to the UKPDS-OM1 model (R2 0.616), the predecessor to the UKPDS-OM2 model on which the CDS is based.

Scatterplots of observed absolute risks (on the horizontal axis) versus predicted absolute risks by each diabetes simulation model (on the vertical axis) for the control and intervention arm for each clinical trial selected for the Fifth Mount Hood Diabetes Challenge, along with fitted linear regression equations and calculated coefficients of determination (R2). CARDIFF Cardiff Research Consortium Model, CDC-RTI Centers for Disease Control and Prevention-RTI Diabetes Cost-effectiveness Model, CDS Cornerstone Diabetes Simulation, EBMI Evidence-Based Medicine Integrator Simulator, ECHO-T2DM Economics and Health Outcomes in Type 2 Diabetes Mellitus Model, IMS CORE IMS CORE Diabetes Model, MICHIGAN Michigan Model for Diabetes, UKPDS-OM1 United Kingdom Prospective Diabetes Study Outcomes Model, UKPDS RE United Kingdom Prospective Diabetes Study Risk Engine

For each diabetes model, the coefficient of determination was also calculated between predicted and observed risk differences. A risk difference was defined as the absolute risk of a given trial endpoint in the control arm minus that in the intervention arm. Under this definition, the R2 value was 0.442 for the CDS; only the UKPDS RE model had a higher value (R2 0.665), based on four predicted endpoints (Table 1). The R2 value under this definition for the UKPDS-OM1 was 0.290, again lower than that of the CDS.
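The risk-difference definition can be sketched in a few lines. The example below uses made-up arm-level absolute risks (not trial results) and an illustrative prediction that overestimates both arms of every endpoint by the same 3 percentage points: the predicted absolute risks are biased, yet the predicted risk differences, and hence their R2, are unaffected. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ArmRisks:
    """Absolute risk of one endpoint in each trial arm (proportions, 0-1)."""
    control: float
    intervention: float

    @property
    def risk_difference(self) -> float:
        # Control minus intervention, so a treatment benefit is positive.
        return self.control - self.intervention


def r_squared(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Squared Pearson correlation (R^2 of a simple linear regression)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


def risk_differences(endpoints: Sequence[ArmRisks]) -> List[float]:
    return [e.risk_difference for e in endpoints]


# Illustrative numbers only: predictions biased upward by 0.03 in both arms.
observed = [ArmRisks(0.10, 0.08), ArmRisks(0.05, 0.045), ArmRisks(0.20, 0.15)]
predicted = [ArmRisks(e.control + 0.03, e.intervention + 0.03) for e in observed]

if __name__ == "__main__":
    for obs, pred in zip(observed, predicted):
        print(f"control-arm error: {pred.control - obs.control:+.3f}  "
              f"risk-difference error: {pred.risk_difference - obs.risk_difference:+.3f}")
    print("R^2 of risk differences:",
          round(r_squared(risk_differences(observed), risk_differences(predicted)), 4))
```

A shared bias of this kind cancels exactly only in this idealized case; in a real simulation, errors in absolute risk need not be identical across arms.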

Detailed results of all the diabetes simulation models’ predicted outcomes versus actual trial outcomes are summarized in ESM Appendix Tables A9–A13 (also see ESM Appendix Figures A2–A11 for scatterplots). When comparing specific trial endpoints predicted by the CDS, several important findings were noted:

Although the CDS’s predicted absolute risks were consistent with observed absolute risks for most endpoints, the model overestimated the cumulative incidence of all-cause mortality in the ADVANCE trial and both the blood pressure and blood glucose interventions of the ACCORD trial. A similar finding was noted for the UKPDS-OM1.

Compared with absolute risks, the risk differences predicted by the CDS were generally more consistent with the risk differences observed in the trials. For example, although the CDS overestimated the absolute risk of all-cause mortality in both the control and intervention arms of the ADVANCE trial, the predicted risk difference in all-cause mortality was similar to the value observed in the trial (ESM Appendix Table A11).

Similarly, the relative risks predicted by the CDS were generally closer to those observed in the trials than were the predicted absolute risks.

4 Discussion

This study demonstrated the external and cross-validity of the CDS, which performed well in predicting absolute risks and risk differences in the three prospective diabetes clinical trials used in the MH5. The CDS, based on the UKPDS-OM2 risk equations, obtained a coefficient of determination for predicted absolute risks similar to that of the UKPDS-OM1, the predecessor of the UKPDS-OM2. This result is consistent with findings reported by the Cardiff Diabetes Model group, who found that the UKPDS-OM2 risk equations exhibit levels of external validity similar to those of the UKPDS-OM1 [4]. In our study, although the coefficient of determination achieved by the CDS was lower than that achieved by the UKPDS RE, the latter model's goodness-of-fit was assessed on only four predicted endpoints, which limits meaningful interpretation. The only other model evaluated on a comparable number of endpoints, the CDC-RTI model (20 endpoints vs 18 for the CDS), achieved a somewhat higher goodness-of-fit for absolute risks (R2 0.724 vs 0.637).

Most importantly, the results of our validation analysis suggest that the CDS has a high level of validity for predicting absolute risk reductions associated with diabetes treatments. When goodness-of-fit was assessed between predicted and observed risk differences between control and intervention arms, the CDS performed similarly to previously validated models, achieving a coefficient of determination higher than that of every other model except the UKPDS RE. Given this finding, the CDS may be suitable for cost-effectiveness evaluations, since ICERs are usually driven by the differences in outcomes between control and intervention rather than by absolute risks.
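The standard definition of the ICER makes this reasoning explicit, since only between-arm differences in expected costs (C) and effects (E, e.g., QALYs) enter the ratio, where the subscripts I and C denote the intervention and control arms:

```latex
\mathrm{ICER} = \frac{C_{\mathrm{I}} - C_{\mathrm{C}}}{E_{\mathrm{I}} - E_{\mathrm{C}}} = \frac{\Delta C}{\Delta E}
```

Arm-level costs and QALYs still depend on absolute event rates, so a bias in absolute risk cancels only to the extent that it affects both arms similarly.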

Our external validation results are also consistent with the main finding of the MH5: the participating models generally predicted the relative risks of clinical events better than the absolute risks [1], especially for mortality. Similarly, the CDS generally predicted risk differences in clinical events between control and intervention better than absolute risks of events. In particular, the CDS overestimated the absolute mortality risk for each trial while producing reasonable approximations of the relative and incremental risks of mortality between control and intervention arms. Additional research is needed to determine the reason(s) for the overestimation of all-cause mortality with the UKPDS risk equations, and whether any adjustment to the risk equations is warranted when using the CDS to simulate all-cause mortality in patient populations outside the UKPDS.

Our study has several limitations. The most significant was the aforementioned lack of reported baseline values from the selected clinical trials for all risk factors required as inputs by the CDS; the use of alternative values likely affected the accuracy of the model predictions. In addition, many of the trial endpoints were not direct matches for outcomes predicted by the UKPDS-OM2 risk equations, so we had to develop formulas that approximated these endpoints using combinations of outcomes calculable from the UKPDS-OM2 risk equations. This again likely contributed to some of the observed deviations from expected results. Furthermore, we assessed goodness-of-fit between observed and predicted outcomes by weighting all endpoints equally. However, depending on the specific needs and priorities of users of health economic models, accurate prediction of certain endpoints may matter more than others. We tried to address this issue by providing detailed summaries of all individual endpoint predictions by the CDS and the other models. Additionally, using the MH5 allowed us to cross-validate against established diabetes simulation models but limited the number of clinical trials we could simulate. Lastly, since the MH5 publication, several participating models have been updated to incorporate the UKPDS-OM2 risk equations, and new models such as the BRAVO (Building, Relating, Assessing, and Validating Outcomes) model, based on the ACCORD trial population, have been developed and validated (the BRAVO model achieved a coefficient of determination of 0.86 against 28 endpoints from trials used in the Fourth and Fifth Mount Hood Diabetes Challenges) [13]. However, predictions by these updated models for the landmark clinical trials used in the MH5 are not fully available, so updated comparisons with these models were not conducted in this analysis.

Our study highlights the importance of transparent documentation of model inputs and assumptions in disease modeling. In our validation of the CDS, the primary challenge was that existing publications did not fully document certain baseline values and time-paths, so we had to rely on external sources or develop our own assumptions. We were therefore unable to determine whether the input values and assumptions we employed matched those used by the other modeling groups when completing the MH5. Following the recommendations of the Eighth Mount Hood Challenge, held in 2016 [14], on transparency of model input documentation, we reported all baseline input values and time-paths used in our simulations. Because the CDS is also implemented in Microsoft Excel® in a fully transparent manner, with all calculations and assumptions visible to users and all inputs independently adjustable, our model inputs are fully documented and our simulation results can be reproduced in future investigations.

5 Conclusion

Following best practices for validating computer simulation models, our analysis demonstrated that the CDS is a user-friendly simulation tool that can be used to adequately predict clinical outcomes in managing patients with T2DM. The model may be particularly useful for predicting treatment effects and is potentially suitable for cost-effectiveness analyses. Future research to assess predictions of costs and cost effectiveness would complement this validation and provide useful information for decision makers to optimize health resource allocation.

References

Palmer AJ, Clarke P, Gray A, Leal J, Lloyd A, Mount Hood 5 Modeling Group, et al. Computer modeling of diabetes and its complications: a report on the Fifth Mount Hood challenge meeting. Value Health. 2013;16(4):670–85. https://doi.org/10.1016/j.jval.2013.01.002.

Eddy DM, Hollingworth W, Caro JJ, Tsevat J, McDonald KM, Wong JB, et al. Model transparency and validation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-7. Med Decis Making. 2012;32(5):733–43. https://doi.org/10.1177/0272989X12454579.

Asche CV, Hippler SE, Eurich DT. Review of models used in economic analyses of new oral treatments for type 2 diabetes mellitus. Pharmacoeconomics. 2014;32(1):15–27. https://doi.org/10.1007/s40273-013-0117-7.

McEwan P, Ward T, Bennett H, Bergenheim K. Validation of the UKPDS 82 risk equations within the Cardiff Diabetes Model. Cost Eff Resour Alloc. 2015;13:12. https://doi.org/10.1186/s12962-015-0038-8.

Hayes AJ, Leal J, Gray AM, Holman RR, Clarke PM. UKPDS outcomes model 2: a new version of a model to simulate lifetime health outcomes of patients with type 2 diabetes mellitus using data from the 30 year United Kingdom Prospective Diabetes Study: UKPDS 82. Diabetologia. 2013;56(9):1925–33. https://doi.org/10.1007/s00125-013-2940-y.

American Diabetes Association Consensus Panel. Guidelines for computer modeling of diabetes and its complications. Diabetes Care. 2004;27(9):2262–5.

Knopp RH, d’Emden M, Smilde JG, Pocock SJ. Efficacy and safety of atorvastatin in the prevention of cardiovascular end points in subjects with type 2 diabetes: the Atorvastatin Study for Prevention of Coronary Heart Disease Endpoints in non-insulin-dependent diabetes mellitus (ASPEN). Diabetes Care. 2006;29(7):1478–85. https://doi.org/10.2337/dc05-2415.

ADVANCE Collaborative Group, Patel A, MacMahon S, Chalmers J, Neal B, Billot L, et al. Intensive blood glucose control and vascular outcomes in patients with type 2 diabetes. N Engl J Med. 2008;358(24):2560–72. https://doi.org/10.1056/NEJMoa0802987.

Action to Control Cardiovascular Risk in Diabetes Study Group, Cushman WC, Evans GW, Byington RP, Goff DC Jr, Grimm RH Jr, et al. Effects of intensive blood-pressure control in type 2 diabetes mellitus. N Engl J Med. 2010;362(17):1575–85. https://doi.org/10.1056/NEJMoa1001286.

Action to Control Cardiovascular Risk in Diabetes Study Group, Gerstein HC, Miller ME, Byington RP, Goff DC Jr, Bigger JT, et al. Effects of intensive glucose lowering in type 2 diabetes. N Engl J Med. 2008;358(24):2545–59. https://doi.org/10.1056/NEJMoa0802743.

Clarke PM, Simon J, Cull CA, Holman RR. Assessing the impact of visual acuity on quality of life in individuals with type 2 diabetes using the short form-36. Diabetes Care. 2006;29(7):1506–11. https://doi.org/10.2337/dc05-2150.

McEwan P, Bennett H, Ward T, Bergenheim K. Refitting of the UKPDS 68 risk equations to contemporary routine clinical practice data in the UK. Pharmacoeconomics. 2015;33(2):149–61. https://doi.org/10.1007/s40273-014-0225-z.

Shao H, Fonseca V, Stoecker C, Liu S, Shi L. Novel risk engine for diabetes progression and mortality in USA: building, relating, assessing, and validating outcomes (BRAVO). Pharmacoeconomics. 2018;36(9):1125–34. https://doi.org/10.1007/s40273-018-0662-1.

Palmer AJ, Si L, Tew M, Hua X, Willis MS, Asseburg C, et al. Computer modeling of diabetes and its transparency: a report on the Eighth Mount Hood Challenge. Value Health. 2018;21(6):724–31. https://doi.org/10.1016/j.jval.2018.02.002.

Acknowledgements

The authors would like to thank Margaret Ainslie-Garcia of Cornerstone Research Group Inc. for assistance with manuscript formatting and revision and Evelyn Worthington and Joshua Gauci of Cornerstone Research Group Inc. for assistance with data gathering and manuscript editing.

Ethics declarations

Funding

This study was sponsored by Sanofi.

Conflict of interest

Zhuo T. Su, Stephen Brown, and Daniel T. Grima are employees of Cornerstone Research Group Inc., which was contracted by Sanofi to develop the model. Jose Bartelt-Hofer, Elisheva Lew, and Luc Sauriol are employees of Sanofi. Lieven Annemans has received grants for lectures and advisory boards from Sanofi.

Data availability statement

All data generated or analyzed during this study are included in this published article (and its online supplementary information files).

Author contributions

All authors contributed to the conception and design of the project, interpretation of results, and manuscript review. ZTS conducted data collection and statistical analysis. ZTS, SB, and DTG drafted the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Su, Z.T., Bartelt-Hofer, J., Brown, S. et al. The Use of Computer Simulation Modeling to Estimate Complications in Patients with Type 2 Diabetes Mellitus: Comparative Validation of the Cornerstone Diabetes Simulation Model. PharmacoEconomics Open 4, 37–44 (2020). https://doi.org/10.1007/s41669-019-0156-x

DOI: https://doi.org/10.1007/s41669-019-0156-x