Abstract

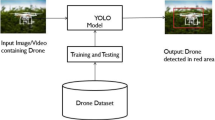

Object detection is frequently challenging because objects in an image often provide poor visual cues. In this paper, a new efficient deep learning-based detection method, named deeper and wider YOLO (DW-YOLO), is proposed for detecting objects of various sizes from various perspectives. DW-YOLO is based on YOLOv5, and two enhancements are developed to make the entire network deeper and wider. First, the residual blocks in each cross stage partial (CSP) structure are optimized to strengthen feature extraction from high-resolution drone images. Second, the entire network is widened by increasing the number of convolution kernels, aiming to obtain more discriminative features that fit complex data. The learning ability of a CNN model is related to its complexity. Making the network deeper increases its complexity, which improves feature extraction and makes the relationships between high-dimensional features easier to learn. Increasing the network width lets each layer learn richer features in different directions and frequencies. Furthermore, a new large and diverse drone dataset, named HDrone, is introduced for object detection in real drone-view scenarios. This dataset includes six types of annotations across a wide range of scenarios that are not limited to traffic. Experimental results on three datasets (HDrone and VisDrone for drone vision, and KITTI for self-driving) show that the proposed DW-YOLO achieves state-of-the-art results and detects small-scale objects well along with large-scale ones.
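The claim that both depth and width raise model complexity can be made concrete with YOLOv5-style model scaling, where a depth multiple sets how many residual blocks a stage repeats and a width multiple sets its convolution channel count. The sketch below is illustrative only and is not DW-YOLO's published configuration: it assumes YOLOv5's rounding conventions (repeats rounded to the nearest integer, channels rounded up to a multiple of 8), a plain 1×1 + 3×3 residual bottleneck, and hypothetical base values of 9 blocks and 256 channels. The multiples used (0.33/0.50 and 0.67/0.75) are the ones YOLOv5 applies to its s and m variants; all function names are hypothetical helpers.

```python
import math


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round a channel count up to the nearest multiple of `divisor` (YOLOv5 convention)."""
    return math.ceil(x / divisor) * divisor


def scaled_repeats(n: int, depth_multiple: float) -> int:
    """Residual-block count of a stage after depth scaling (never below 1)."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


def conv_weights(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution; bias and batch-norm parameters are ignored."""
    return k * k * c_in * c_out


def bottleneck_weights(c: int) -> int:
    """Assumed residual bottleneck: 1x1 conv then 3x3 conv, both c -> c.
    The identity shortcut adds no parameters."""
    return conv_weights(c, c, 1) + conv_weights(c, c, 3)


def stack_weights(base_channels: int, base_repeats: int,
                  depth_multiple: float, width_multiple: float) -> tuple[int, int, int]:
    """Return (blocks, channels, conv weights) for a hypothetical stack of bottlenecks."""
    c = make_divisible(base_channels * width_multiple)
    n = scaled_repeats(base_repeats, depth_multiple)
    return n, c, n * bottleneck_weights(c)


if __name__ == "__main__":
    baseline = stack_weights(256, 9, depth_multiple=0.33, width_multiple=0.50)
    deeper_wider = stack_weights(256, 9, depth_multiple=0.67, width_multiple=0.75)
    print("baseline     (blocks, channels, weights):", baseline)
    print("deeper+wider (blocks, channels, weights):", deeper_wider)
    print("weight ratio:", deeper_wider[2] / baseline[2])  # 4.5
```

Doubling the block count (3 to 6) doubles the weights, while widening from 128 to 192 channels multiplies them by 2.25 because each convolution scales with c_in × c_out. Together, the hypothetical deeper and wider stack carries 4.5 times the convolution weights of the baseline, which is the extra capacity the abstract trades for richer features.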

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Chen, Y., Zheng, W., Zhao, Y. et al. DW-YOLO: An Efficient Object Detector for Drones and Self-driving Vehicles. Arab J Sci Eng 48, 1427–1436 (2023). https://doi.org/10.1007/s13369-022-06874-7

DOI: https://doi.org/10.1007/s13369-022-06874-7