Abstract

Traditionally, colleges have relied on standalone, non-credit-bearing developmental education (DE) courses to support students academically and ensure their readiness for college-level courses. As emerging evidence has raised concerns about the effectiveness of DE courses, colleges and states have been experimenting with approaches that place students into credit-bearing coursework more quickly. To better understand which types of students might be most likely to benefit from placement into college-level math coursework, this study examines heterogeneity in the causal effects of placement into college-level courses using a regression discontinuity design and administrative data from the state of Texas. We focus on student characteristics that are related to academic preparation or that might signal a student’s likelihood of success or need for additional support, and that might therefore be considered in placement decisions under “holistic advising” or “multiple measures” initiatives. We find heterogeneity in outcomes for many of the measures we examined. Students who had declared an academic major, had bachelor’s degree aspirations, tested below college readiness in multiple subjects, were designated as Limited English Proficient (LEP), and/or were economically disadvantaged were more likely to benefit from placement into college-level math. Part-time enrollment and being over the age of 21 were each associated with smaller benefits from placement into college-level math. We do not find any heterogeneity in outcomes for our high school achievement measure, having taken three or more years of math in high school.


Notes

Toward the end of the study period, some colleges in Texas also began to pilot corequisite models, in which students who tested below college-ready could enroll directly in the college-level course and receive concurrent DE support rather than taking a standalone DE course.

We observe transfers to other Texas colleges (including private colleges and four-year universities), but we do not observe transfers to colleges outside of Texas, so out-of-state transfers are not captured in our persistence measure.

Other studies on this topic have used this approach, including Martorell and McFarlin (2011), Calcagno and Long (2008), and Boatman and Long (2018).

For instance, this can occur because the student obtained an exemption or waiver after initially testing, in which case the student appears below the cut score in our data but was able to enroll in a college course, or because the student was placed into a corequisite (immediate enrollment in a college course with concurrent DE support).

The Benjamini–Hochberg procedure provides modified standards for statistical significance that become more stringent as the number of statistical tests within the same domain rises. See Benjamini and Hochberg (1995).
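To illustrate how the Benjamini–Hochberg thresholds work (this is an illustrative sketch, not the authors' code), the Python function below applies the step-up rule to the p-values of the tests within one domain. The false discovery rate level q = 0.05 and the example p-values are assumptions chosen for illustration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Flag which hypotheses are rejected by the Benjamini-Hochberg step-up
    procedure at false discovery rate q (illustrative sketch)."""
    m = len(p_values)
    # Indices of the tests, ordered from smallest to largest p-value
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) whose p-value satisfies p_(k) <= (k / m) * q
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject the k_max tests with the smallest p-values, even if some of them
    # individually exceeded their own rank threshold (the "step-up" property)
    rejected = [False] * m
    for idx in order[:k_max]:
        rejected[idx] = True
    return rejected


# Hypothetical p-values for four tests within one domain
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))
# [True, False, False, False]
```

With these hypothetical p-values, three tests clear a conventional 0.05 cutoff, but only the smallest survives the Benjamini–Hochberg thresholds of 0.0125, 0.025, 0.0375, and 0.05. Because the threshold for the smallest p-value is q/m, it becomes stricter as more tests are added to the domain.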

This steepness reflects test retaking: many students who initially score near the TSIA cutoff meet the college-readiness standard on a later attempt.

Given the discrete running variable, we use a bin size of 1 for this analysis.
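Because the running variable takes only integer values, a bin size of 1 means each plotted point is the mean outcome among students with one particular score. The Python sketch below shows that binning; the cutoff of 350 and the scores and outcomes are hypothetical values chosen for illustration, not data from the study.

```python
from collections import defaultdict


def binned_means(scores, outcomes, cutoff, bin_size=1):
    """Mean outcome within bins of the running variable, centered at the cutoff.

    With integer scores and bin_size=1, each bin holds exactly one score value.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for score, outcome in zip(scores, outcomes):
        # Bin index relative to the cutoff; bin 0 starts at the cutoff itself
        b = (score - cutoff) // bin_size
        sums[b] += outcome
        counts[b] += 1
    return {b: sums[b] / counts[b] for b in sorted(sums)}


# Hypothetical scores and binary outcomes; the cutoff of 350 is an assumption
scores = [348, 349, 350, 350, 351]
outcomes = [0, 1, 1, 0, 1]
print(binned_means(scores, outcomes, cutoff=350))
# {-2: 0.0, -1: 1.0, 0: 0.5, 1: 1.0}
```

A larger bin size would pool adjacent scores into one point, which smooths the plot but can blur the discontinuity at the cutoff when the running variable is already coarse.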

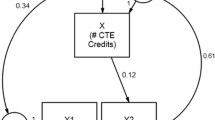

The full set of baseline covariates is included in each regression but is not reported. Each regression adds a single covariate interaction term to the analysis while retaining the baseline covariate set.

The total effects shown in Figs. 3 and 4 are obtained by adding the baseline effect φ and the interaction effect ϕ for the indicated group (with the corresponding variance addition for the standard error of the resulting estimate); for the non-indicated group in each regression, the baseline effect and its standard error are reported unchanged. Appendix 2 includes a table showing the regression results in their direct form: the baseline treatment effect, the additional interaction treatment effect, and the total effect, each with its standard error.
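The arithmetic behind these total effects can be sketched in Python as follows. The footnote does not spell out the variance formula, so the sketch assumes the standard result Var(φ + ϕ) = Var(φ) + Var(ϕ) + 2 Cov(φ, ϕ) for the estimated coefficients, with the covariance taken from the regression's estimated variance–covariance matrix. The function name and all numbers are hypothetical.

```python
import math


def total_effect(baseline, se_baseline, interaction, se_interaction, cov=0.0):
    """Total effect for the indicated group and its standard error.

    baseline (phi) and interaction (the interaction coefficient) are estimated
    coefficients; cov is their estimated covariance, assumed to come from the
    regression's variance-covariance matrix (the 0.0 default is illustrative).
    """
    estimate = baseline + interaction
    # Var(a + b) = Var(a) + Var(b) + 2 Cov(a, b)
    variance = se_baseline ** 2 + se_interaction ** 2 + 2 * cov
    return estimate, math.sqrt(variance)


# Hypothetical estimates: baseline 0.10 (SE 0.03), interaction 0.05 (SE 0.04)
est, se = total_effect(0.10, 0.03, 0.05, 0.04, cov=-0.0006)
print(round(est, 3), round(se, 4))
# 0.15 0.0361
```

For the non-indicated group, the reported effect is simply `baseline` with `se_baseline`. Omitting the covariance term (leaving `cov` at zero) would generally misstate the standard error whenever the two coefficient estimates are correlated.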

References

Bahr, P. R., Hetts, J., Hayward, C., Willett, T., Lamoree, D., Newell, M. A., Sorey, K., & Baker, R. B. (2017). Improving placement accuracy in California’s community colleges using multiple measures of high school achievement. The Research and Planning Group for California Community Colleges.

Bailey, T., Jeong, D. W., & Cho, S. W. (2010). Referral, enrollment, and completion in developmental education sequences in community colleges. Economics of Education Review, 29, 255–270.

Barnett, E., & Reddy, V. (2017). College Placement Strategies: Evolving Considerations and Practices. In Preparing Students for College and Careers (pp. 82–93). Routledge.

Barnett, E. A., Bergman, P., Kopko, E., Reddy, V., Belfield, C., & Roy, S. (2018). Multiple Measures Placement Using Data Analytics: An Implementation and Early Impacts Report. New York, NY: Center for the Analysis of Postsecondary Readiness (CAPR).

Belfield, C. R., Jenkins, D., & Fink, J. (2019). Early momentum metrics: Leading indicators for community college improvement (CCRC Research Brief). Columbia University, Teachers College, Community College Research Center.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B, 57(1), 289–300.

Boatman, A., & Long, B. T. (2013). The role of remediation and developmental courses in access and persistence. In A. Jones & L. Perna (Eds.), The state of college access and completion: Improving college success for students from underrepresented groups. New York: Routledge.

Boatman, A., & Long, B. T. (2018). Does remediation work for all students? How the effects of postsecondary remedial and developmental courses vary by level of academic preparation. Educational Evaluation and Policy Analysis, 40(1), 29–58.

Calcagno, J. C., & Long, B. T. (2008). The impact of postsecondary remediation using a regression discontinuity approach: Addressing endogenous sorting and noncompliance (No. w14194). New York: National Center for Postsecondary Research.

Cho, S. W., Kopko, E., Jenkins, D., & Jaggars, S. S. (2012). New evidence of success of community college remedial English students: Tracking the outcomes of students in the Accelerated Learning Program (ALP) (CCRC Working Paper No. 53). New York, NY: Columbia University, Teachers College, Community College Research Center.

Cuellar Mejia, C., Rodriguez, O., & Johnson, H. (2016). Preparing students for success in California’s community colleges. Public Policy Institute of California.

Cullinan, D., Barnett, E. A., Ratledge, A., Welbeck, R., Belfield, C., & Lopez, A. (2018). Toward better college course placement: A guide to launching a multiple measures assessment system. New York, NY: Center for the Analysis of Postsecondary Readiness (CAPR).

Daugherty, L., Gomez, C. J., Carew, D. G., Mendoza-Graf, A., & Miller, T. (2018). Designing and implementing corequisite models of developmental education: Findings from Texas community colleges. RAND Corporation.

Gehlhaus, D., Daugherty, L., Karam, R., Miller, T., & Mendoza-Graf, A. (2018). Practitioner Perspectives on Implementing Developmental Education Reforms: A Convening of Six Community Colleges in Texas (Working Paper). Santa Monica, CA: RAND Corporation.

Hodara, M. (2015). The effects of English as a second language courses on language minority community college students. Educational Evaluation and Policy Analysis, 37(2), 243–270.

Hodara, M., & Cox, M. (2016). Developmental education and college readiness at the University of Alaska (No. 2016-123). Portland, OR: Regional Educational Laboratory Northwest.

Hu, S., Park, T. J., Woods, C. S., Tandberg, D. A., Richard, K., & Hankerson, D. (2016). Investigating developmental and college-level course enrollment and passing before and after Florida’s developmental education reform (REL 2017–203). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory Southeast. Retrieved from http://ies.ed.gov/ncee/edlabs.

Jenkins, D., & Bailey, T. (2017). Early Momentum Metrics: Why They Matter for College Improvement (CCRC Research Brief No. 65). New York, NY: Columbia University, Teachers College, Community College Research Center.

Klasik, D., & Strayhorn, T. L. (2018). The complexity of college readiness: Differences by race and college selectivity. Educational Researcher, 47(6), 334–342.

Logue, A. W., Douglas, D., & Watanabe-Rose, M. (2019). Corequisite mathematics remediation: results over time and in different contexts. Educational Evaluation and Policy Analysis. https://doi.org/10.3102/0162373719848777

Martorell, P., & McFarlin, I. (2011). Help or hindrance? The effects of college remediation on academic and labor market outcomes. The Review of Economics and Statistics, 93(2), 436–454.

McCrary, J. (2008). Manipulation of the running variable in the regression discontinuity design: A density test. Journal of Econometrics, 142(2), 698–714.

Miller, T., Daugherty, L., Martorell, P., & Gerber, R. (2020). Assessing the Effect of Corequisite English Instruction Using a Randomized Controlled Trial. American Institutes for Research.

Ngo, F., & Kwon, W. W. (2015). Using multiple measures to make math placement decisions: Implications for access and success in community colleges. Research in Higher Education, 56(5), 442–470.

Ngo, F., Kwon, W., Melguizo, T., Prather, G., & Bos, J. M. (2013). Course placement in developmental mathematics: Do multiple measures work? Los Angeles, CA: University of Southern California Working Paper. Retrieved December 1, 2019, from https://pullias.usc.edu/wp-content/uploads/2013/10/Multiple_Measures_Brief.pdf.

Park, T., Woods, C., Tandberg, D., Richard, K., Cig, O., Hu, S., & Bertrand Jones, T. (2016). Examining student success following developmental education redesign in Florida. Teachers College Record.

Ran, F. X., & Lin, Y. (2019). The effects of corequisite remediation: evidence from a statewide reform in Tennessee. Columbia University, Teachers College, Community College Research Center.

Rutschow, E. Z., Cormier, M. S., Dukes, D., & Zamora, D. E. C. (2019). The Changing Landscape of Developmental Education Practices: Findings from a National Survey and Interviews with Postsecondary Institutions. Center for the Analysis of Postsecondary Readiness.

Scott-Clayton, J., & Rodriguez, O. (2012). Development, discouragement, or diversion? New evidence on the effects of college remediation (NBER Working Paper No. 18328).

Scott-Clayton, J., Crosta, P. M., & Belfield, C. R. (2014). Improving the targeting of treatment: evidence from college remediation. Educational Evaluation and Policy Analysis, 36(3), 371–393.

Acknowledgements

The research reported here was supported, in whole or in part, by the Institute of Education Sciences, U.S. Department of Education, through grant R305H150069 to the RAND Corporation. The opinions expressed are those of the authors and do not represent the views of the Institute or the U.S. Department of Education. The research benefited tremendously from helpful input, guidance, and support from Texas Higher Education Coordinating Board staff. In particular, we thank Julie Eklund, Suzanne Morales-Vale, Melissa Humphries, and David Gardner.


About this article

Cite this article

Daugherty, L., Gerber, R., Martorell, F. et al. Heterogeneity in the Effects of College Course Placement. Res High Educ 62, 1086–1111 (2021). https://doi.org/10.1007/s11162-021-09630-2


DOI: https://doi.org/10.1007/s11162-021-09630-2