Abstract

This paper presents a novel method for analyzing high-dimensional nonlinear stochastic dynamic systems. In particular, we attempt to obtain the solution of the Fokker–Planck–Kolmogorov (FPK) equation governing the response probability density of the system without using the FPK equation directly. The method consists of several important components, including radial basis function neural networks (RBFNN), the Feynman–Kac formula and the short-time Gaussian property of the response process. In the area of solving partial differential equations (PDEs) using neural networks, known as physics-informed neural networks (PINN), the proposed method presents an effective alternative for obtaining solutions of PDEs without directly dealing with the equation, thus avoiding the evaluation of the derivatives in the equation. This approach has the potential to make the neural network-based solution more efficient and accurate. Several highly challenging examples of nonlinear stochastic systems are presented in the paper to illustrate the effectiveness of the proposed method in comparison to the equation-based RBFNN approach.

1 Introduction

Partial differential equations (PDEs) are widely employed to describe the evolution of complex systems in various fields, including physics, engineering and finance. However, obtaining analytical solutions for PDEs is often impractical, especially in the case of strongly nonlinear and high-dimensional systems. Due to their robust fitting capability and adaptability to complex data relationships, artificial neural networks (ANNs) have been employed as a universal method to solve PDEs [1,2,3]. This leads to physics-informed neural networks, commonly known as PINN. ANNs transform the PDE solution process into an optimization problem whose objective is to minimize a suitably defined loss function, such that the neural network output approximates the solution to a high level of accuracy. The loss function of PINN usually consists of residuals of the PDE, boundary conditions and possibly other constraints, and therefore requires the computation of high-order derivatives of the network output. Computing these derivatives in the loss function during repeated training of the neural networks can substantially increase the CPU time and sacrifice the accuracy of the solution. Furthermore, this approach may also face challenges such as vanishing or exploding gradient problems, which can impede convergence and overall effectiveness [4]. A Monte Carlo method was proposed to compute second derivatives, which proves to be time-consuming [5]. The simplicity and flexibility of the PINN approach render it suitable for a wide range of PDEs. Some literature on solving the FPK equation using the PINN approach includes [6,7,8,9].

The Feynman–Kac formula gives a connection between a semilinear parabolic PDE and a stochastic process with the martingale property [10, 11]. The solution to the parabolic PDE is proven to be the expectation of the martingale process driven by a Brownian motion. The theory of backward stochastic differential equations (BSDEs) extends the application of the Feynman–Kac method from semilinear parabolic PDEs to fully nonlinear PDEs [12, 13]. Xu further applied the concepts of the Feynman–Kac formula and BSDE to the complex PDEs [14]. The Feynman–Kac formula provides a route to solve PDEs with the help of simulated random paths without the need for derivative calculations.

The Feynman–Kac formula can be used to relate a stochastic differential equation (SDE) to a corresponding PDE. An algorithm of variational quantum imaginary time evolution has been developed to solve the PDE in order to obtain the expectations of the SDEs [15]. The primary application of the Feynman–Kac formula remains providing a method for solving PDEs by simulating stochastic processes, thereby circumventing the need for derivative calculations. Monte Carlo simulations (MCS) can be employed to directly generate random trajectories in order to obtain the expectation of the stochastic process, which represents the solution to the PDE. However, direct simulations require significant computational effort [16,17,18]. To enhance the computational efficiency, the h-conditioned Green’s function, which enables the representation of the integral along random paths as a volume integral, is employed instead of directly simulating random trajectories to obtain the expectation of the stochastic process [19]. Dalang et al. established a probabilistic representation for PDEs that is different from the Feynman–Kac formula. The advantage of their method lies in deriving an analytical expression for the moments of the response process without the need for numerical simulations [20]. Computation of the expectation of the martingale process with direct simulations can be even more intensive for high-dimensional systems [16, 17]. Deep learning methods based on variational formulations for the associated stochastic differential equations are investigated extensively in [21]. The Feynman–Kac formula is also used in that study, leading to a very efficient computational algorithm.

The authors of [22] have developed a derivative-free method to solve a series of elliptic PDEs. Furthermore, this derivative-free deep neural network method has been extended to solve viscous incompressible fluid flow problems [23]. Han et al. solve a class of parabolic PDEs with deep neural networks by reformulating them as BSDEs [2].

Although the calculation of derivatives can be circumvented by the Feynman–Kac formula, the traditional neural network methods still require numerical simulations to compute the expectation of random paths of the martingale stochastic process, which remains time-consuming and inconvenient. This paper develops an effective way to compute the expectation of the martingale process by making use of the short-time Gaussian approximation of the response process. We also utilize the RBFNN to accurately approximate the PDF of the response process. RBFNN is an example of PINN and has been successfully applied to obtain transient and stationary PDFs of the FPK equation of nonlinear systems [24,25,26] and the reliability function of the first-passage problem [27]. Since the output of the RBFNN is a weighted sum of Gaussian activation functions, by applying the short-time Gaussian approximation (STGA) technique, we have derived the analytical expression for the expectation of the Feynman–Kac formula. This approach obviates the need for numerical simulations. Compared to directly solving PDEs using RBFNN, the method proposed in this paper additionally eliminates the need for derivative calculations, thereby reducing computational time. Three numerical examples, including a strongly nonlinear system, a non-smooth system, and a high-dimensional system, are employed to demonstrate the feasibility and effectiveness of our approach. Furthermore, the comparison between the Feynman–Kac formula-based solution and the solution from directly solving PDE is discussed. The FPK equation is the primary example in this paper, while the method is also applicable to other PDEs, such as the Hamilton–Jacobi–Bellman (HJB) equation and heat equation.

The remainder of the paper is organized as follows. Section 2 provides a brief description of the Feynman–Kac formula and the FPK equation. In Sect. 3, we elaborate on the implementation of the Feynman–Kac formula utilizing the RBFNN approach. We apply the STGA technique to derive the analytical expression for the expectation of the stochastic process, thereby circumventing both derivative calculations and numerical simulations. In Sect. 4, we briefly review an RBFNN approach for directly solving the FPK equation. In Sect. 5, three examples are presented, including a strongly nonlinear system, a non-smooth tri-stable system, and a high-dimensional system, to validate the feasibility and effectiveness of the proposed method. The computational time of the proposed method is compared with that of the equation-based RBFNN method in Sect. 6. Section 7 concludes the paper.

2 Feynman–Kac formula

In this section, we present an overview of the Feynman–Kac formula, which provides a connection between a second-order parabolic PDE and a stochastic process with the martingale property. However, we shall not show how to derive the FPK equation from the Feynman–Kac formula. The interested reader should refer to the reference [15].

Consider the following n-dimensional parabolic PDE,

where the differential operator \(\mathcal {L}_t [ p ]\) is defined as,

The coefficients \(a_i(\textbf{x},t)\), \(b_{ik}(\textbf{x},t)\) and \(c(\textbf{x},t)\) are known functions \(\mathbb {R}^n \times [0, T] \rightarrow \mathbb {R}\), \(\textbf{a}=[a_i] \in \mathbb {R}^{n\times 1}\) and \(\textbf{b}=[b_{ik}] \in \mathbb {R}^{n\times m}\). As discussed in the introduction [15], the Feynman–Kac formula can be used to derive PDE (1) governing the PDF of the n dimensional stochastic process \(\textbf{X}(t)=[X_j (t)] \in \mathbb {R}^n\) satisfying the following Itô differential equation,

where \(b_{ij} (\textbf{x},t) = \sum _{k=1}^{m} \sigma _{ik}(\textbf{x},t) \sigma _{jk}(\textbf{x},t)\) and \(B_j(t)\) \((j=1,2, \ldots , m)\) are independent unit Brownian motions. Hence, the FPK equation for the stochastic process \(\textbf{X}(t)\) satisfying Eq. (3) must be a special case of Eq. (1).

Consider the n-dimensional FPK equation with the initial condition.

where the deterministic functions \(m_{i}(\textbf{x})\) and \(b_{ij}(\textbf{x})\) are the drift and diffusion terms. The FPK operator can be expressed in the form of a linear parabolic equation as,

where \(\mathcal {L}_t [ p ]\) is defined in Eq. (2), and

Note that this is a special case when \(a_i(\textbf{x})\), \(b_{ij}(\textbf{x})\) and \(c(\textbf{x})\) are not explicit functions of time.

We now return to the parabolic PDE (1) and the stochastic process \(\textbf{X}(t)\), and define another stochastic process \(\mathcal {M}(t)\) as,

where \(p: \mathbb {R}^n \times [0, T] \rightarrow \mathbb {R}\) is an arbitrary function at this point. The differential of \(\mathcal {M}(t)\) can be obtained by Itô’s lemma as,

When \(p(\textbf{x}, t)\) is the solution of PDE (1), we take the expectation of Eq. (9) and obtain

Hence, \(\mathcal {M}(t)\) is a martingale process. Integrating this equation over [0, T] gives \(\mathbb {E}[\mathcal {M}(0)]=\mathbb {E}[\mathcal {M}(T)]\) where \(T>0\) is arbitrary. We have

The equation relates the initial distribution \(p(\textbf{x},0)\) to the PDF \(p(\textbf{x}, T)\) at an arbitrary time \(T>0\). This is an implicit integral equation for determining the PDF of the stochastic process \(\textbf{X}(t)\) without the differential operations of PDE (1). Therefore, the derivative calculations, which are typically time-consuming in computations for solving PDEs, are not required when \(p(\textbf{x},T)\) is obtained from the equation.

Equation (11) needs samples of the stochastic process \(\textbf{X}(t)\) over the time interval [0, T] to evaluate the expectation. For nonlinear systems, Monte Carlo simulations have been the only effective method to compute the expectation. In the following, we present a novel neural network method to obtain the PDF solution from Eq. (11) subject to the constraint of normalization.
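To make the cost of the simulation route concrete, the following is a minimal sketch of a Monte Carlo estimate of a conditional expectation \(\mathbb {E}[g(X(T)) | X(0)=x_0]\) along Euler–Maruyama paths of a scalar Itô equation. It is an illustration under stated assumptions, not the scheme used in the paper: the function name `mc_expectation`, the scalar setting, the step and path counts, and the omission of the exponential weighting by \(c\) are all choices made here for brevity.

```python
import numpy as np

def mc_expectation(g, drift, diffusion, x0, T, n_steps=200, n_paths=20000, seed=0):
    """Estimate E[g(X_T) | X_0 = x0] for the scalar SDE
    dX = drift(X) dt + diffusion(X) dB using Euler-Maruyama paths."""
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = np.full(n_paths, float(x0))          # all paths start at x0
    for _ in range(n_steps):
        dB = rng.normal(0.0, np.sqrt(dt), size=n_paths)   # Brownian increments
        x = x + drift(x) * dt + diffusion(x) * dB
    return float(np.mean(g(x)))              # sample mean over all paths

# Hypothetical usage: Ornstein-Uhlenbeck process, where E[X_T | X_0 = 1] = exp(-T).
estimate = mc_expectation(g=lambda x: x,
                          drift=lambda x: -x,
                          diffusion=lambda x: 0.5 * np.ones_like(x),
                          x0=1.0, T=1.0)
```

Every evaluation point requires its own batch of paths, which is why an analytical expression for the expectation is attractive.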

3 The proposed method

3.1 Outline of the method

The proposed method making use of the Feynman–Kac formula consists of a few steps. In the first step, we divide the time interval [0, T] into a collection of small time intervals \([k\Delta t, (k+1) \Delta t]\) (\(k=0, 1, \ldots \)). In each sub-interval, the martingale property of the stochastic process \(\mathcal {M}(t)\) holds

In the second step, we develop a way to compute the conditional expectation on the right hand side of Eq. (13). \(p( \textbf{x}, (k+1)\Delta t)\) is a short-time response starting from the deterministic initial condition \(p(\textbf{x}, k\Delta t)\). Because the stochastic process \(\textbf{X}(t)\) satisfies Eq. (3), the STGA of the PDF of the response \(\textbf{X}((k+1)\Delta t)\) is valid. The details of the STGA strategy can be found in [28]. The mathematical expression of the STGA approximation of the PDF will be presented later.

With the PDF of the response \(\textbf{X}((k+1)\Delta t)\), we can analytically evaluate the conditional expectation of the nonlinear and unknown function of \(\textbf{X}(t)\) in Eq. (13).

In the third step, we propose a neural network representation of the unknown function \(p(\textbf{x}, (k+1)\Delta t)\), which is a probability density function. From our previous experience [24,25,26,27], we find that the radial basis function neural networks (RBFNN) with Gaussian activation functions can be an excellent choice for modeling \(p( \textbf{x}, (k+1)\Delta t)\).

3.2 RBFNN

Let \(\bar{p}(\textbf{x}, \textbf{w}(k))\) be the function approximating \(p( \textbf{x}, k\Delta t)\) at the k th time step where \(\textbf{w}(k)\) denotes all the trainable coefficients of the neural networks. The trial solution \(\bar{p}(\textbf{x}, \textbf{w}(k))\) is written as the RBFNN with Gaussian activation functions

where \(N_{G}\) represents the total number of neurons. \(G_j(\textbf{x})\equiv G(\textbf{x},\varvec{\mu }_j, \varvec{\Sigma }_j)\) is an n-variate Gaussian radial basis function with the mean \(\varvec{\mu }_{j}\) and covariance matrix \(\varvec{\Sigma }_{j}=\textrm{diag}[\varvec{\sigma }^2_{j}]\). The n-variate Gaussian radial basis function is separable and can be expressed as a product of multiple uni-variate Gaussian functions as,

where \(\mu _{j k}\) is the k th component of the mean \(\varvec{\mu }_{j}\) and \(\sigma _{j, k}\) is the k th component of the standard deviation vector \(\varvec{\sigma }_{j}\). In this paper, the means \(\varvec{\mu }_j\) and standard deviations \(\varvec{\sigma }_j\) are taken as constants.

The RBFNN solution (14) is a neural network with a single hidden layer using the Gaussian activation function. The diagram of the RBFNN for \(n=4\) is shown in Fig. 1.

This shallow neural network has been proven to possess universal approximation capabilities [29]. Furthermore, the normalization condition (12) now reads,

Let \(D_G\) denote the domain where the system stays with probability not less than 99%. We discretize the domain into uniform grids, which represent the means of Gaussian neurons. The standard deviations for all the Gaussian functions are set equal to the grid size.
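The grid construction described above can be sketched as follows. This is a minimal illustration, assuming that each \(G_j\) is a normalized Gaussian density, that the grid includes the end points of the domain, and that the standard deviation in each coordinate equals the grid spacing in that coordinate. The function name `build_rbf_matrix` and its arguments are hypothetical.

```python
import numpy as np

def build_rbf_matrix(lows, highs, n_per_dim, samples):
    """Return (means, sigma, G) where G[j, i] = G_j(x_i) for separable,
    normalized Gaussian neurons centered on a uniform grid."""
    axes = [np.linspace(lo, hi, n) for lo, hi, n in zip(lows, highs, n_per_dim)]
    # Standard deviation in each coordinate = grid spacing in that coordinate.
    sigma = np.array([ax[1] - ax[0] for ax in axes])
    # Neuron means: all grid nodes, one row per neuron.
    means = np.stack([m.ravel() for m in np.meshgrid(*axes, indexing="ij")], axis=1)
    # Scaled differences between every neuron mean and every sampling point.
    diff = (means[:, None, :] - samples[None, :, :]) / sigma
    norm = np.prod(np.sqrt(2.0 * np.pi) * sigma)
    G = np.exp(-0.5 * np.sum(diff ** 2, axis=2)) / norm
    return means, sigma, G

# Hypothetical usage on a small 2D grid with 5 x 5 neurons.
xs, ys = np.linspace(-4, 4, 81), np.linspace(-8, 8, 81)
samples = np.stack([m.ravel() for m in np.meshgrid(xs, ys, indexing="ij")], axis=1)
means, sigma, G = build_rbf_matrix([-1, -2], [1, 2], [5, 5], samples)
```

With weights \(w_j\), the trial solution at the sampling points is then the product `w @ G`. The dense broadcast used here is only practical for small grids.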

3.3 Loss function

Let us consider the time-invariant case again. Assume that the duration of each sub-interval \(\Delta t\) is sufficiently small. Let \(\int _{(k-1)\Delta t}^{k\Delta t}c(\textbf{X}(s))ds = \bar{c}\Delta t\) where \(\bar{c}\) is determined from the trapezoidal rule. Before presenting the loss function, let us introduce a shorthand for the expectation with the help of Eq. (14)

\(e_j(\textbf{x}_i)\) is the expectation of the Gaussian activation function \(G_j(\textbf{x})\).

Define a loss function and make use of Eq. (18). We have

where \(r(\textbf{x}_i)\) measures the degree to which the trial solution satisfies the martingale property at the sampling point \(\textbf{x}_i\),

3.4 STGA technique for the expectation

The short-time probability density solution of the stochastic process, as defined in Eq. (3), starting from the initial condition \(\textbf{X}(k\Delta t)=\textbf{x}_i\), is approximately a Gaussian PDF with the mean \(\textbf{A}_i=\textbf{x}_i+ \textbf{a}(\textbf{x}_i)\Delta t\) and covariance \(\textbf{B}_i=\varvec{\sigma }\varvec{\sigma }^T(\textbf{x}_i)\Delta t\), as follows [30],

With this Gaussian PDF, the expectation \(\mathbb {E}\) \([G_j(\textbf{X}(k\Delta t)) | \textbf{X}((k-1)\Delta t)=\textbf{x}_i]\) can be calculated analytically. The calculation is detailed as follows:

The analytical expression for the function \(e_j(\textbf{x}_i)\) is obtained as,

Therefore, there is no longer a need for simulations to compute the expectation in the loss function (19) from the Feynman–Kac formula.
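The reason a closed form exists can be illustrated with a standard identity: if \(\textbf{X}\) is Gaussian with mean \(\textbf{A}_i\) and covariance \(\textbf{B}_i\), and \(G_j\) is a normalized Gaussian density with mean \(\varvec{\mu }_j\) and covariance \(\varvec{\Sigma }_j\), then \(\mathbb {E}[G_j(\textbf{X})]\) equals the Gaussian density with mean \(\varvec{\mu }_j\) and covariance \(\varvec{\Sigma }_j+\textbf{B}_i\) evaluated at \(\textbf{A}_i\). The sketch below implements this identity under the assumption that \(G_j\) is normalized; the function names are hypothetical, and the code is not claimed to reproduce the paper's expression term by term.

```python
import numpy as np

def gaussian_pdf(x, mean, cov):
    """Density of N(mean, cov) at a single point x (cov positive definite)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    mean = np.atleast_1d(np.asarray(mean, dtype=float))
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    d = x - mean
    _, logdet = np.linalg.slogdet(cov)
    quad = d @ np.linalg.solve(cov, d)
    return float(np.exp(-0.5 * (quad + logdet + d.size * np.log(2.0 * np.pi))))

def expected_rbf(A, B, mu, sigma2):
    """E[G(X)] for X ~ N(A, B) and G the normalized Gaussian N(mu, diag(sigma2)).
    Only the sum diag(sigma2) + B is inverted, so B itself may be singular."""
    cov_sum = np.diag(np.atleast_1d(np.asarray(sigma2, dtype=float))) + np.atleast_2d(B)
    return gaussian_pdf(A, mu, cov_sum)

# Hypothetical 2D usage: noise enters the second coordinate only, so B is singular.
value = expected_rbf(A=[0.1, -0.2],
                     B=[[0.0, 0.0], [0.0, 0.02]],
                     mu=[0.0, 0.0],
                     sigma2=[0.01, 0.04])
```

Because only \(\varvec{\Sigma }_j+\textbf{B}_i\) needs to be inverted, the formula remains usable when the short-time covariance is rank deficient, as can happen when noise acts on only some of the state variables.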

3.5 Transient response

With \(\textbf{w}(k)=[w_j(k)] \in \mathbb {R}^{N_G \times 1}\), \(\textbf{p}(k)=[p(\textbf{x}_i, k\Delta t)] \in \mathbb {R}^{N_s \times 1}\), \(\textbf{G}=[G_{ij}]=[G_i(\textbf{x}_j)] \in \mathbb {R}^{N_G \times N_s}\) and \(\textbf{E}=[e_{ij}]=[e_i(\textbf{x}_j)] \in \mathbb {R}^{N_G \times N_s}\), we can rewrite the loss function (19) together with the normalization condition (16) in matrix form as,

The optimal weight coefficients are determined to satisfy the necessary conditions for minimization of the loss:

where \(\textbf{e}_w \in \mathbb {R}^{N_G \times 1}\) is a column vector of ones with the same size as \(\textbf{w}\).

We should note that the optimal weight coefficients can also be obtained with the gradient-based search algorithms used in machine learning. This will be pursued in another paper.
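The direct route through the necessary conditions amounts to an equality-constrained least-squares problem. A generic sketch is given below, assuming a quadratic loss \(\Vert \textbf{M}\textbf{w}-\textbf{b}\Vert ^2\) and the constraint that the weights sum to one. Here `M` and `b` are hypothetical placeholders standing in for the matrix and vector of the matrix-form loss, and `constrained_lstsq` is a name introduced for illustration.

```python
import numpy as np

def constrained_lstsq(M, b):
    """Minimize ||M w - b||^2 subject to sum(w) = 1.
    Stationarity of the Lagrangian gives the linear (KKT) system
        [2 M^T M   1] [w     ]   [2 M^T b]
        [1^T       0] [lambda] = [1      ]."""
    n = M.shape[1]
    ones = np.ones(n)
    K = np.zeros((n + 1, n + 1))
    K[:n, :n] = 2.0 * M.T @ M
    K[:n, n] = ones
    K[n, :n] = ones
    rhs = np.concatenate([2.0 * M.T @ b, [1.0]])
    sol = np.linalg.solve(K, rhs)
    return sol[:n], sol[n]        # weights and Lagrange multiplier

# Hypothetical usage with random data in place of the RBFNN matrices.
rng = np.random.default_rng(1)
M = rng.normal(size=(20, 5))
b = rng.normal(size=20)
w, lam = constrained_lstsq(M, b)
```

The system is solved once per time step, so no iterative training is involved in this route. The sketch assumes `M` has full column rank.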

Remark 1

To extend the Feynman–Kac formula to the steady-state, we assume that \(T\rightarrow \infty \) and \(k\rightarrow \infty \) while \(\Delta t\) is kept finite. We further assume that the stationary solution of the PDF exists. Let \(p_{ss}(\textbf{x})\) denote the stationary PDF. Then, Eq. (13) reads,

Remark 2

Another way to reach the steady-state for autonomous or periodic systems is to consider the solution over consecutive finite time intervals \([k\Delta t, (k+1)\Delta t]\) (\(k=0,1,2,\ldots \)). This approach leads to mappings of \(p(\textbf{x},k\Delta t) \rightarrow p(\textbf{x},(k+1)\Delta t)\). Such a mapping described in the discretized state space is known as the generalized cell mapping (GCM) for stochastic systems [31]. In the framework of cell mapping, the stationary response can be obtained with the help of the one-step transition probability matrix, which we shall discuss later.
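The idea of reaching the stationary response by repeated one-step mappings can be sketched with a small discrete example. The code below is a generic illustration, assuming a column-stochastic one-step matrix `P` acting on a probability vector; it is not the GCM construction of [31], and the two-state matrix is a hypothetical example.

```python
import numpy as np

def stationary_distribution(P, tol=1e-12, max_iter=100000):
    """Iterate p <- P p for a column-stochastic matrix P until p stops changing."""
    n = P.shape[0]
    p = np.full(n, 1.0 / n)              # start from the uniform distribution
    for _ in range(max_iter):
        p_next = P @ p
        p_next /= p_next.sum()           # guard against round-off drift
        if np.max(np.abs(p_next - p)) < tol:
            return p_next
        p = p_next
    return p

# Hypothetical two-cell example; each column sums to one.
P = np.array([[0.9, 0.5],
              [0.1, 0.5]])
p_ss = stationary_distribution(P)
```

For this matrix the fixed point satisfies \(0.1\,p_1 = 0.5\,p_2\), so the iteration settles at \(p_1 = 5/6\) and \(p_2 = 1/6\).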

3.6 Computation of optimal weights

Let \(D_G\) denote the domain where the system stays with probability not less than 99%. We discretize the domain into uniform grids, which represent the means of Gaussian neurons. The standard deviations for all the Gaussian functions are set equal to the grid size.

The optimal weight coefficients \(\textbf{w}(k)\) can be computed in Algorithm 1.

4 Review of the equation-based RBFNN method

Before presenting the computational examples, we briefly review the method of directly solving the FPK equation using RBFNN, referred to as the equation-based RBFNN method. Let the RBFNN in Eq. (14) be the trial solution of the FPK equation at the \(k^{th}\) time step. Consider a finite difference scheme to approximate the time derivative as follows,

By substituting the RBFNN trial solution shown in Eq. (14) and the time derivative shown in Eq. (27) into the FPK Eq. (4), we obtain a local residual \(r(\textbf{x}, \textbf{w}(k))\) as a function of the weight parameters \(\textbf{w}(k)\),

We define the loss function as

where \(\textbf{S}=[s_{ij}]=[s_i(\textbf{x}_j)] \in \mathbb {R}^{N_G \times N_s}\). Although both methods employ the same RBFNN, they represent fundamentally distinct computational paradigms.

The effectiveness and accuracy of the equation-based RBFNN method have been well-documented in previous studies [24,25,26,27]. Therefore, the equation-based RBFNN method will serve as a substitute for MCS to evaluate the proposed RBFNN method based on the Feynman–Kac formula.

5 Examples

We apply the proposed method to obtain steady-state and transient solutions of the FPK equation for three distinct systems without directly using the FPK equation. These systems include a strongly nonlinear system, a vibro-impact non-smooth system and a high-dimensional system.

To evaluate the accuracy of the proposed method, we define two errors. The first is the root mean square (RMS) error of the FPK equation,

where \(\bar{p}_{\Delta t}( \textbf{x},t)\) represents the PDF derived from the proposed method with the time step size \(\Delta t\).

The second error metric, denoted as \(J_{PDF}\), represents the discrepancy between the PDFs derived from the Feynman–Kac formula and those obtained by the equation-based RBFNN method.

where \(p^*(\textbf{x},t)\) denotes the PDF derived via the equation-based RBFNN method. These errors are also valid for steady-state cases when \(t\rightarrow \infty \) and \(\frac{\partial \bar{p}_{\Delta t}( \textbf{x},t)}{\partial t} =0\).

Fig. 2. The PDFs obtained by the proposed method and the differences from the equation-based RBFNN result for the Van der Pol system in Eq. (33). a–d correspond to time steps \(\Delta t= [10^{-1}, 10^{-2}, 10^{-3}, 10^{-4}]\). e, f show the differences between the results obtained by the proposed method and the equation-based RBFNN result

5.1 Van der Pol system

We first consider a strongly nonlinear Van der Pol system subject to both additive and multiplicative Gaussian noises.

where \(W_i(t)\) represent independent Gaussian white noises with intensities \(2D_i\). The drift and diffusion terms of the FPK equation of the Van der Pol system are shown as follows,

The parameters are set as \(\beta =1\) and \(2D_i=0.2\). The domain for Gaussian neurons is \(D_G=[-3.2, 3.2]\times [-6.4, 6.4]\) divided into \(N_G=61\times 61\) grids, where \(N_G\) represents the total number of Gaussian neurons. The domain for sampling points is \(D_S=[-4, 4]\times [-8, 8]\) with \(N_s=81\times 81\) points uniformly distributed in the domain. For the sake of fair comparison, the equation-based RBFNN method uses the same parameters and settings.

The computation of expectation of the Feynman–Kac formula is over a time step \(\Delta t\). The integration of the function \(c(\textbf{X}(t))\) and short-time Gaussian approximation both introduce an error of order \(\Delta t\). A proper choice of \(\Delta t\) should balance the solution accuracy and computational cost. We shall examine the effect of \(\Delta t\) in the numerical studies.

5.1.1 Stationary response

We first report the stationary response of the Van der Pol system. Figure 2 presents the PDFs obtained using the proposed RBFNN method based on the Feynman–Kac formula for various time steps \(\Delta t = [10^{-1}, 10^{-2}, 10^{-3}, 10^{-4}]\), along with their discrepancies to the PDFs derived from the equation-based RBFNN method. It is noticeable that the difference decreases with the reduction in time step. Moreover, when \(\Delta t \le 10^{-3}\), the results of the proposed method exhibit good agreement with the equation-based RBFNN result.

Figure 3 shows the effect of the time step \(\Delta t\) on the errors defined earlier. It appears that \(\Delta t=10^{-3}\) is a turning point of \(J_{FPK}\) and can be considered as optimal in terms of the balance of accuracy and efficiency. This is also confirmed by the results in Fig. 2.

The total computational times for calculating the stationary responses with the proposed method and the equation-based RBFNN method are 1.7687 s and 2.3383 s, respectively.

5.1.2 Transient response analysis

Next, the RBFNN method based on the Feynman–Kac formula is applied to study the transient responses of the Van der Pol system. The time interval is set to [0, 10]. The time step is \(\Delta t=10^{-3}\). We take a Gaussian PDF with the mean \(\varvec{\mu }_0=[0, 0]\) and the standard deviation \(\varvec{\sigma }_0=[0.1, 0.1]\) as the initial condition.

Figures 4 and 5 show the evolution of the transient response through 3D surface plots and color contours, respectively. Figure 6 shows the marginal PDFs of the transient response of the Van der Pol system.

These results clearly demonstrate that the proposed method with a time step \(10^{-3}\) yields results that are in close agreement with those obtained from the equation-based RBFNN method. The total computational times for calculating the transient responses with the proposed method and the equation-based RBFNN method are 2393 s and 2386 s, respectively.

Fig. 4. 3D surface plots of PDFs and differences from the equation-based RBFNN result of the Van der Pol system. a–d are the transient PDFs at \(t=1.5\) s, 3 s, 5 s and the stationary PDF, respectively, obtained by the proposed method. e–h show the differences between the results obtained by the proposed method and the equation-based RBFNN results. The peaks of the error are of order \(10^{-4}\)

Fig. 5. Color contours of PDFs and differences from the equation-based RBFNN result of the Van der Pol system. a–d are the transient PDFs at \(t=1.5\) s, 3 s, 5 s and the stationary PDF, respectively, obtained by the proposed method. e–h show the differences between the results obtained by the proposed method and the equation-based RBFNN results

5.2 Nonlinear vibro-impact system

Next, we consider a tri-stable vibro-impact system subject to both additive and multiplicative Gaussian noises.

where \(W_i(t)\) represent independent Gaussian white noises with intensities \(2D_i\), and \(f(x_1)\) denotes the impact force. This force is described by the Hertz contact law when the mass collides with the barrier.

where \(\delta _r\) and \(\delta _l\) denote the distances from the equilibrium point of the impact oscillator to the right and left impact barriers, respectively. The constants \(B_r\) and \(B_l\) correspond to properties determined by the material composition and geometric characteristics of these impact barriers.

The drift and diffusion terms of the vibro-impact system are shown as follows,

The parameters are set as \(\beta _1=0.2\), \(\alpha _1=1\), \(\alpha _3=-4\), \(\alpha _5=1\) and \(2D_i=0.2\). The domain \(D_G=[-3.2, 3.2]\times [-6.4, 6.4]\) is divided into \(N_G=61\times 61\) grids. The domain of sampling points is \(D_S=[-4, 4]\times [-8, 8]\). \(N_s=81\times 81\) points are uniformly sampled in \(D_S\).

5.2.1 Stationary response analysis

Figure 7 presents the PDFs obtained using the proposed RBFNN method based on the Feynman–Kac formula for various time steps \(\Delta t = [10^{-1}, 10^{-2}, 10^{-3}, 10^{-4}]\). These results clearly demonstrate that for systems with multiple equilibrium states, the errors introduced by a larger \(\Delta t\) can lead to incorrect predictions, causing the steady-state response to erroneously converge to incorrect equilibrium states. This highlights the importance of selecting an appropriate \(\Delta t\) for accurate prediction in such complex systems.

The variation of errors with \(\Delta t\) is illustrated in Fig. 8. The result indicates again that \(\Delta t \le 10^{-3}\) is optimal with a good balance of accuracy and efficiency.

For the proposed method and the equation-based RBFNN method, the total computational times for calculating the stationary responses are 1.3746 s and 5.2292 s, respectively.

5.2.2 Transient response analysis

Next, we study the transient responses of the vibro-impact system. The time interval is set as [0, 10] and the time step is chosen as \(\Delta t=10^{-3}\). We consider a Gaussian PDF with the mean \(\varvec{\mu }_0=[0, 0]\) and the standard deviation \(\varvec{\sigma }_0=[0.1, 0.1]\) as the initial condition.

Figures 9 and 10 illustrate the transient response evolution using 3D surface plots and color contours, in comparison with the equation-based RBFNN result. Figure 11 shows the evolution of the marginal PDFs of the transient response of the vibro-impact system. These results clearly demonstrate that the proposed method, when employed with a time step size of \(10^{-3}\), yields results that are in close agreement with those obtained from the equation-based RBFNN method.

For the proposed method and the equation-based RBFNN method, the total computational times for calculating the transient responses are 2561 s and 2570 s, respectively.

Fig. 9. 3D surface plots of PDFs and differences from the equation-based RBFNN result for the vibro-impact system. a–d are the transient PDFs at \(t=3\) s, 6 s, 8 s and the stationary PDF, respectively, obtained by the proposed method. e–h show the differences between the results obtained by the proposed method and the equation-based RBFNN results. The peaks of the error are of order \(10^{-3}\)

Fig. 10. Color contours of PDFs and differences from the equation-based RBFNN result for the vibro-impact system. a–d are the transient PDFs at \(t=3\) s, 6 s, 8 s and the stationary PDF, respectively, obtained by the proposed method. e–h show the differences between the results obtained by the proposed method and the equation-based RBFNN results

5.3 System of two coupled Duffing oscillators

As the last example, we consider a 4D coupled-Duffing system to demonstrate the effectiveness of the proposed method in high-dimensional systems. The governing equations are given by,

where \(W_i(t)\) are independent and zero-mean Gaussian white noises with intensities \(2D_i\). The drift and diffusion terms are given by

The parameters are \(k_1=0.3\), \(k_2=0.5\), \(k_3=0.3\), \(k_4=0.12\), \(\mu _1=0.2\), \(\mu _2=0.2\), \(\omega _1=0.2\), \(\omega _2=0.4\) and \(2D_i=0.04\). The domain \(D_G=[-2, 2]^4\) is divided into \(N_G=16^4\) grids. The sampling domain is \(D_S=[-2.5, 2.5]^4\). \(N_s=21^4\) points are uniformly sampled in \(D_S\). The equation-based RBFNN method with the same settings is utilized for comparison.
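The size of this 4D problem follows directly from the stated grid settings. The short calculation below is an illustrative sketch, assuming that both grids include the end points of their domains; the dense-storage figure is only an indication of scale and says nothing about how the matrices are actually stored in the reported computations.

```python
n_dim = 4
neurons_per_dim, samples_per_dim = 16, 21

N_G = neurons_per_dim ** n_dim      # 65536 Gaussian neurons
N_s = samples_per_dim ** n_dim      # 194481 sampling points

# Grid spacings, assuming the end points of each domain are grid nodes.
h_G = (2.0 - (-2.0)) / (neurons_per_dim - 1)    # spacing of neuron means in D_G
h_S = (2.5 - (-2.5)) / (samples_per_dim - 1)    # spacing of sampling points in D_S

# Storage of one dense N_G x N_s matrix in double precision (8 bytes per entry).
dense_bytes = N_G * N_s * 8
print(N_G, N_s, round(h_G, 4), h_S, f"{dense_bytes / 1e9:.1f} GB")
```

The growth of both counts with the fourth power of the per-dimension resolution is what makes this example a demanding test.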

5.3.1 Stationary response analysis

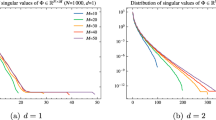

The variation of the errors with \(\Delta t\) is illustrated in Fig. 12. It can be observed that \(J_{FPK}\) converges towards the RMS error of the solution directly obtained from the FPK equation with \(\Delta t \le 10^{-3}\).

We select the time step size \(\Delta t=10^{-3}\). The color contours of the stationary joint PDFs projected to different sub-spaces are shown in Fig. 13. The agreement between the proposed method and the equation-based RBFNN results is very good. The total computational times for calculating the stationary responses with the proposed method and the equation-based RBFNN method are 2671 s and 3592 s, respectively.

We omit the transient responses of this example for the sake of brevity.

6 Comparison of computational time

Finally, we analyze the computational time for both the proposed method and the equation-based RBFNN method. We focus on the results for stationary response PDFs. It can be observed that the main time-consuming steps of both methods can be divided into three parts:

1. Compute the matrix \(\textbf{G}\).
2. Compute the matrix \(\textbf{E}\).
3. Search for the optimal coefficients.

The computational times required for the stationary responses of the three examples are listed in Tables 1 to 3.

Since both methods make use of RBFNN, the times for the first and third steps are similar. However, the proposed method significantly reduces the computational time in the second step compared to the equation-based RBFNN method. This reduction is attributed to the fact that the proposed method does not involve derivative calculations in computing the expected function shown in Eq. (18). These examples offer compelling evidence of the efficiency of the proposed method.

7 Conclusion

We have developed a numerical method based on the Feynman–Kac formula, capable of solving parabolic PDEs without directly dealing with the equation. The proposed method employs an RBFNN to approximate the PDF of the stochastic process. The STGA technique is used to derive an analytical expression for the expectation of the stochastic martingale process \(\mathcal {M}(t)\), thus eliminating the need for numerical simulations. The martingale property of the process \(\mathcal {M}(t)\) provides an implicit integral equation describing the evolution of the PDF starting from a given initial condition. The integral equation has been shown to be equivalent to the FPK equation. Hence, the solution of the FPK equation is obtained without using the FPK equation. Three challenging examples of nonlinear stochastic systems are studied with the proposed method. The results are compared with the equation-based RBFNN solution in terms of accuracy and efficiency. It is found that the proposed method is quite competitive and promising for analyzing high-dimensional nonlinear stochastic systems. However, in this preliminary study of applying the Feynman–Kac formula to obtain the solution of the FPK equation, many theoretical issues, including the stability and convergence of the proposed method, are not addressed.

Data availability

The research does not use any collected data. All the data used in the research are simulated in the state space.

Code Availability

Once the paper is accepted for publication, the computer programs for the examples reported in the paper will be made available to the public.


Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 12172267 and 11972274).

Funding

The work was supported by two grants from the National Natural Science Foundation of China as stated in the Acknowledgement.

Author information

Contributions

XW: this work is part of his doctoral thesis; he wrote the computer programs that generated the results for the paper and drafted the manuscript. JJ and LH: responsible for the concept and funding of the research, supervised the student and edited the draft of the manuscript. J-QS: responsible for the research concept, supervised the student, and edited and finalized the manuscript.

Ethics declarations

Conflict of interest

The authors have no conflict of interest with each other or any organization. There is no conflict of interest either in the joint research work.

Ethics approval and consent to participate

All the authors participated in the research, discussion, drafting and editing of the manuscript.

Consent for publication

All the authors give full consent to publish the paper as written.

Materials availability

Not applicable.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Jiang, J., Hong, L. et al. A novel method for response probability density of nonlinear stochastic dynamic systems. Nonlinear Dyn (2024). https://doi.org/10.1007/s11071-024-09686-6

DOI: https://doi.org/10.1007/s11071-024-09686-6