Abstract

Skin cancer is one of the most prevalent malignancies in the world. Deep learning-based methods have been used successfully for skin disease diagnosis and achieve strong recognition performance, but most of them rely on dermoscopic images alone. Existing multi-modal methods for skin lesion diagnosis have two shortcomings: (1) they mainly focus on learning complementary information while ignoring the correlation between clinical and dermoscopic images; (2) the feature extractor is not optimized under additional constraints, which may limit the expressiveness of the extracted features. To address these issues, this study proposes a new method, multi-modal bilinear fusion with hybrid attention mechanism (MBF-HA), for multi-modal skin lesion classification. Specifically, MBF-HA introduces a common representation learning framework that learns correlated features by exploring the characteristics shared between the two modalities. Moreover, MBF-HA uses a hybrid attention-based reconstruction module that encourages the feature extractor to detect and localize lesion regions in each modality, thereby enhancing the discriminative power of the output feature representation. We perform comprehensive experiments on a well-established multi-modal, multi-label skin disease dataset, the 7-point Checklist database. MBF-HA achieves an average accuracy of 76.3% in the multi-classification tasks and 76.0% in the diagnostic task. The experimental results show that MBF-HA outperforms known related works and is expected to help physicians make more precise clinical diagnoses.
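As a rough illustration of what bilinear fusion of two modality features can look like, the sketch below applies a generic factorized (low-rank) bilinear pooling step to one clinical and one dermoscopic feature vector. It is not the MBF-HA implementation: the function name, the feature dimensions, the random projection matrices, and the normalization steps are all assumptions chosen for the example, and in a real model the projections would be learned.

```python
import numpy as np

def bilinear_fusion(clinical_feat, dermoscopic_feat, proj_clin, proj_derm, factor_k):
    """Hypothetical factorized bilinear pooling of two feature vectors.

    clinical_feat:    shape (d_c,)
    dermoscopic_feat: shape (d_d,)
    proj_clin:        shape (d_c, out_dim * factor_k)
    proj_derm:        shape (d_d, out_dim * factor_k)
    Returns a fused vector of shape (out_dim,).
    """
    # Project both modalities into a shared space and multiply element-wise,
    # which captures pairwise (second-order) interactions between them.
    joint = (clinical_feat @ proj_clin) * (dermoscopic_feat @ proj_derm)
    # Sum-pool each group of factor_k consecutive entries into one output unit.
    fused = joint.reshape(-1, factor_k).sum(axis=1)
    # Signed square-root normalization followed by L2 normalization.
    fused = np.sign(fused) * np.sqrt(np.abs(fused))
    norm = np.linalg.norm(fused)
    return fused / norm if norm > 0 else fused

# Toy inputs: all sizes and values below are assumptions for illustration only.
rng = np.random.default_rng(0)
d_c, d_d, out_dim, k = 8, 8, 4, 3
clinical = rng.standard_normal(d_c)
dermoscopic = rng.standard_normal(d_d)
w_clin = rng.standard_normal((d_c, out_dim * k))
w_derm = rng.standard_normal((d_d, out_dim * k))

fused = bilinear_fusion(clinical, dermoscopic, w_clin, w_derm, k)
print(fused.shape)
```

The element-wise product of the two projections is what distinguishes this from simple concatenation: every output unit depends on interactions between the two modalities rather than on each one separately.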

Data availability

The datasets generated or analyzed during the current study are available from the corresponding author on reasonable request.

References

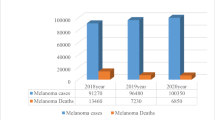

Siegel RL, Miller KD, Jemal A (2018) Cancer statistics, 2018. CA Cancer J Clin 68(1):7–30

Rigel DS, Friedman RJ, Kopf AW (1996) The incidence of malignant melanoma in the United States: issues as we approach the 21st century. J Am Acad Dermatol 34(5):839–847. https://doi.org/10.1016/S0190-9622(96)90041-9

Rogers HW, Weinstock MA, Feldman SR, Coldiron BM (2015) Incidence estimate of nonmelanoma skin cancer (keratinocyte carcinomas) in the US population, 2012. JAMA Dermatol 151(10):1081–1086

Massone C, Hofmann-Wellenhof R, Ahlgrimm-Siess V, Gabler G, Ebner C, Peter Soyer H (2007) Melanoma screening with cellular phones. PLoS ONE 2(5):e483

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Barata C, Celebi ME, Marques JS (2017) Development of a clinically oriented system for melanoma diagnosis. Pattern Recogn 69:270–285. https://doi.org/10.1007/978-3-662-45139-7_116

Duma S (2015) Dermoscopy of pigmented skin lesions. In: European Handbook of Dermatological Treatments. Springer, Berlin, Heidelberg, pp 1167–1177. https://doi.org/10.1016/j.jaad.2001.11.001

Argenziano G, Catricalà C, Ardigo M, Buccini P, De Simone P, Eibenschutz L … Zalaudek I (2011) Dermoscopy of patients with multiple nevi: Improved management recommendations using a comparative diagnostic approach. Arch Dermatol 147(1):46–49

Abbas Q, Celebi ME, Serrano C, Garcia IF, Ma G (2013) Pattern classification of dermoscopy images: a perceptually uniform model. Pattern Recogn 46(1):86–97. https://doi.org/10.1016/j.patcog.2012.07.027

Serrano C, Acha B (2009) Pattern analysis of dermoscopic images based on Markov random fields. Pattern Recognit 42(6):1052–1057

Bi L, Kim J, Ahn E, Kumar A, Fulham M, Feng D (2017) Dermoscopic image segmentation via multistage fully convolutional networks. IEEE Trans Biomed Eng 64(9):2065–2074

Bunte K, Biehl M, Jonkman MF, Petkov N (2011) Learning effective color features for content based image retrieval in dermatology. Pattern Recogn 44(9):1892–1902. https://doi.org/10.1016/j.patcog.2010.10.024

Barata C, Celebi ME, Marques JS (2014) Improving dermoscopy image classification using color constancy. IEEE J Biomed Health Inform 19(3):1146–1152. https://doi.org/10.1109/ICIP.2014.7025716

Saez A, Serrano C, Acha B (2014) Model-based classification methods of global patterns in dermoscopic images. IEEE Trans Med Imaging 33(5):1137–1147. https://doi.org/10.1109/TMI.2014.2305769

Ma L, Staunton RC (2013) Analysis of the contour structural irregularity of skin lesions using wavelet decomposition. Pattern Recogn 46(1):98–106

Zhang J, Cui L, Gouza FB (2018) SEGEN: sample-ensemble genetic evolutional network model. ar**v preprint ar**v:1803.08631

Liu D, Cui Y, Tan W, Chen Y (2021) Sg-net: spatial granularity network for one-stage video instance segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 9816–9825

Liang J, Zhou T, Liu D, Wang W (2023) CLUSTSEG: Clustering for Universal Segmentation. ar**v preprint ar**v:2305.02187

Zhang J, **e Y, **a Y, Shen C (2019) Attention residual learning for skin lesion classification. IEEE Trans Med Imaging 38(9):2092–2103

Adegun AA, Viriri S (2019) Deep learning-based system for automatic melanoma detection. IEEE Access 8:7160–7172

Wang W, Liang J, Liu D (2022) Learning equivariant segmentation with instance-unique querying. Adv Neural Inf Process Syst 35:12826–12840

Harangi B (2017) Skin lesion detection based on an ensemble of deep convolutional neural network. ar**v preprint ar**v:1705.03360. https://doi.org/10.1016/j.jbi.2018.08.006

Yap J, Yolland W, Tschandl P (2018) Multimodal skin lesion classification using deep learning. Exp Dermatol 27(11):1261–1267

Li W, Zhuang J, Wang R, Zhang J, Zheng WS (2020) Fusing metadata and dermoscopy images for skin disease diagnosis. In: 2020 IEEE 17th international symposium on biomedical imaging (ISBI), pp 1996–2000. IEEE. https://doi.org/10.1109/ISBI45749.2020.9098645

Kawahara J, Daneshvar S, Argenziano G, Hamarneh G (2018) Seven-point checklist and skin lesion classification using multitask multimodal neural nets. IEEE J Biomed Health Inform 23(2):538–546

Tang P, Yan X, Nan Y, **ang S, Krammer S, Lasser T (2022) FusionM4Net: a multi-stage multi-modal learning algorithm for multi-label skin lesion classification. Med Image Anal 76:102307

Bi L, Feng DD, Fulham M, Kim J (2020) Multi-label classification of multi-modality skin lesion via hyper-connected convolutional neural network. Pattern Recogn 107:107502. https://doi.org/10.1016/j.patcog.2020.107502

Ge Z, Demyanov S, Chakravorty R, Bowling A, Garnavi R (2017) Skin disease recognition using deep saliency features and multimodal learning of dermoscopy and clinical images. In: Medical Image Computing and Computer Assisted Intervention− MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, September 11–13, 2017, Proceedings, Part III 20. Springer International Publishing, pp 250–258

Wu Y, Lariba AC, Chen H, Zhao H (2022) Skin lesion classification based on deep convolutional neural network. In: 2022 IEEE 4th International Conference on Power, Intelligent Computing and Systems (ICPICS). IEEE, pp 376–380

Bhattacharya I, Seetharaman A, Kunder C, Shao W, Chen LC, Soerensen SJ … Rusu M (2022) Selective identification and localization of indolent and aggressive prostate cancers via CorrSigNIA: an MRI-pathology correlation and deep learning framework. Med Image Anal 75:102288

Chandar S, Khapra MM, Larochelle H, Ravindran B (2016) Correlational neural networks. Neural Comput 28(2):257–285

Guo Y, Wang Y, Yang H, Zhang J, Sun Q (2022) Dual-attention EfficientNet based on multi-view feature fusion for cervical squamous intraepithelial lesions diagnosis. Biocybern Biomed Eng 42(2):529–542

Roy AG, Navab N, Wachinger C (2018) Concurrent spatial and channel ‘squeeze & excitation’in fully convolutional networks. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, September 16–20, 2018, Proceedings, Part I. Springer International Publishing, pp 421–429

Yan L, Li S, Guo Y, Ren P, Song H, Yang J, Shen X (2021) Multi-state colposcopy image fusion for cervical precancerous lesion diagnosis using BF-CNN. Biomed Signal Process Control 68:102700

Yu Z, Yu J, Fan J, Tao D (2017) Multi-modal factorized bilinear pooling with co-attention learning for visual question answering. In: Proceedings of the IEEE international conference on computer vision, pp 1821–1830

Ruder S (2016) An overview of gradient descent optimization algorithms. ar**v preprint ar**v:1609.04747

Afroze S, Hossain MR, Hoque MM, Dewan MAA (2023) An empirical framework for detecting speaking modes using ensemble classifier. Multimed Tools Appl:1–34

Kingma DP, Adam BJ (2014) A method for stochastic optimization. ar**v e-prints. ar**v preprint ar**v:1412.6980, 1412

Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng AY (2011) Multimodal deep learning. In: Proceedings of the 28th international conference on machine learning (ICML-11), pp 689–696

Wang W, Wang Y, Liu D, Hou W, Zhou T, Ji Z (2022) GeneSegNet: a deep learning framework for cell segmentation by integrating gene expression and imaging. bioRxiv, 2022-12

Wang W, Han C, Zhou T, Liu D (2022) Visual recognition with deep nearest centroids. ar**v preprint ar**v:2209.07383

Venugopal V, Raj NI, Nath MK, Stephen N (2023) A deep neural network using modified EfficientNet for skin cancer detection in dermoscopic images. Decis Anal J:100278

Chattopadhay A, Sarkar A, Howlader P, Balasubramanian VN (2018) Grad-cam++: generalized gradient-based visual explanations for deep convolutional networks. In: 2018 IEEE winter conference on applications of computer vision (WACV). IEEE, pp 839–847

Wei Z, Li Q, Song H (2022) Dual attention based network for skin lesion classification with auxiliary learning. Biomed Signal Process Control 74:103549

Bayoudh K, Knani R, Hamdaoui F, Mtibaa A (2021) A survey on deep multimodal learning for computer vision: advances, trends, applications, and datasets. Vis Comput:1–32

Acknowledgements

This work was supported by the National Key Research and Development Program of China [No. 2018YFB1700902].

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wei, Y., Ji, L. Multi-modal bilinear fusion with hybrid attention mechanism for multi-label skin lesion classification. Multimed Tools Appl 83, 65221–65247 (2024). https://doi.org/10.1007/s11042-023-18027-5

DOI: https://doi.org/10.1007/s11042-023-18027-5