Abstract

Computer vision is one of the artificial intelligence’s most challenging fields, enabling computers to interpret, analyse and derive meaningful information from the visual world. There are various utilizations of computer vision algorithms, and most of them, from simpler to more complicated, have an object and shape recognition in common. Traditional pen and paper tests are designed in a pre-established format and consist of numerous basic shapes, which designate the important parts of the test itself. With that in mind, many computer vision applications regarding pen and paper tests arise as an opportunity. Massive courses and large schooling organizations mostly conduct their exams in paper format and assess them manually, which imposes a significant burden on the teaching staff. Any kind of automatization that will facilitate the grading process is highly desirable. Hence, an automated answer recognition system in assessment was developed to mitigate the problems above. The system uses images of scanned test pages obtained from the test scanning process and performs the necessary image manipulation steps to increase target recognition accuracy. Further, it manages to identify regions of interest containing multiple-choice questions and contours. Finally, the system verifies obtained results using the knowledge of the whereabouts of the test template regions of interest.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Computer vision is one of the most powerful fields of artificial intelligence [26, 32]. The main focus of computer vision is to attempt to mimic the sophisticated human vision system, thus enabling dedicated computer systems to designate and process objects on digital images and videos [22]. The abundance of daily generated visual data is one of the main driving forces behind the growth of computer vision.

There are various categories of computer vision, most of which are centered around object detection [33] and pattern recognition [23]. Considering the enormous improvements in computer vision algorithms, object identification accuracy rates are growing. Hence, dedicated computer vision systems are becoming more powerful than humans in performing certain tasks [1]. Moreover, considering the growing speeds of various hardware components occurring throughout time, computer vision systems are increasing the capability of executing tasks at higher rates than humans [16].

Many examination processes at schools are obliged to be realized at the same time for all eligible candidates. However, computing resources are scarce in schools, given a large number of pupils and students. Hence, up to this day, most pupil and student examinations at schools are conducted using traditional pen and paper tests [7]. This assessment process type is very common when an examination is performed with many candidates without computer access. These paper tests are later manually examined and assessed by the teaching staff.

Taking into account that these examinations are usually carried out in massive courses and groups using paper tests, a huge overhead is imposed on the teaching staff. Moreover, crucial examination processes require results in the shortest possible time [21]. Consequently, an opportunity arises to disburden the teaching staff by introducing automatization in the test assessing process using computer vision.

The tests consist of various question types: multiple-choice, matching concepts, short answer, fill-in, etc. Test questions are usually enveloped in regular polygon shapes that can be identified as objects. Multiple-choice question answers are typically symbolized as rectangles and circles, for instance. Although the used symbols designating the answers depend on the test format, they are generally regular polygons [18, 29]. In this case, the rectangle shape was chosen for the designation of questions, and the circle shape for the designation of multiple-choice questions’ answers. The main goal of the proposed automated assessment system is to detect circularly shaped multiple-choice questions’ answers besides the rectangularly shaped questions.

Considering the regularity of objects targeted for recognition, there should be no major concern regarding the success of the detection process. However, a couple of actions take place before the test is involved in an answer recognition process:

-

Tests are printed in printing centers, and the disfigurement can be caused by a bad printing machine.

-

Tests are manually filled in by students and pupils, distorting regular template objects on test pages.

-

Tests are carried to scanning centers (to obtain digital images that will be used in an answer recognition process) and are prone to impairment in the transport process.

-

Tests are scanned in scanning centers, and obtained images do not always fully resemble original test pages due to the characteristics of scanners used in a scanning process.

All these steps attach a certain level of disruption to images, which should not be neglected. Handling of the attached interference contributes to a certain extent to the amelioration of the recognition process quality. However, given the number of tests and pages that constitute them, additional image purification extends the total processing time. Therefore, morphological image operations must be carefully chosen to achieve an acceptable trade-off between recognition quality and processing time.

In this paper, the authors presented an automated answer recognition system. It uses images of scanned test pages obtained from the test scanning process, and it manages to identify regions of interest, which contain multiple-choice questions’/answers’ contours. Finally, the system verifies obtained results using the knowledge of the whereabouts of the test template regions of interest. The system was tested on 2180 instances of a mathematics template test filled by students about to finish secondary school.

The rest of this paper is organized as follows. Section 2 describes the previous related works and existing circle detection methods. Section 3 presents the proposed solution using Python programming language and OpenCV, as well as the reasons behind the chosen technologies. Section 4 illustrates obtained results by the present-day implemented system for circle answer recognition of multiple-choice questions. Finally, a brief conclusion and future work are stated in Section 5.

2 Related work and existing solutions

Most circle recognition algorithms are based on the Hough transform. This feature extraction technique aims to detect objects within a certain class of shapes by a voting procedure [4], carried out in a parameter space from which object candidates are obtained. However, the standard Hough transform algorithm demands a great amount of time and space resources for complicated calculations [9]. There are numerous optimizations of this algorithm [19], aiming to improve its execution speed. Nevertheless, all of them require prior knowledge in terms of circle radius range for calculation efficiency amelioration.

There are circle detection methods based on Hough transform named Curvature Aided Circle Detection [34] and Teaching Learning Based Optimization [17]. The Curvature Aided Circle Detection method adaptively tries to determine the center and radius of a candidate circle and adaptively estimates the curvature radius of the potential circle. The technique exhibits the capability of different radius circle detection in a complex scene. Unlike conventional circle detection methods, it is significantly less time and space-consuming. Teaching Learning Based Optimization method presents the task of circle detection as an optimization problem, where each circle represents an optimum within the feasible search space. This circle detection method is based on the Teaching Learning Based Optimization algorithm, a population-based technique inspired by the teaching and learning processes. Additionally, improvements to the evolutionary approach for circle detection are obtained by exploiting gradient information for constructing the search space and defining the objective function.

Moreover, there exists a circle detection method, which is not based on the Hough transform, named Randomized Circle Detection [27]. This method tries to fit a circle using an image’s several (mostly four) randomly chosen edge points. Afterward, a distance criterion and subsequent evidence collecting steps are applied to determine if these points belong to a possible circle edge. However, randomization on the whole image and the verification process of potential circles of this method require enormous memory and time resources. Still, there are a couple of improvements of this approach regarding the algorithm performances, which yield very good circle detection results.

Also, Zhao and all [10].

The implemented computer vision system is one of the modules of a larger system, which consists of four modules depicted in Fig. 1. These modules are the Zoning module, the Scanning module, the Processing module, and the Verification module, and they will be discussed in the following subsections of this paper.

3.1 Zoning module

Each test consists of an arbitrary number of pages. The very first page of each test, which is presented in Fig. 2 (text given on all figures is intentionally blurred), holds information about the test name and a placeholder for student’s identification code. This code is added during the exam by placing a barcode label on the appropriate placeholder. Also, the first page specifies filling instructions. A test can contain empty pages, as they are intended as a place for sketching the intermediate steps of question’s solutions.

Regions of interest of all test pages, namely questions, answers within those questions, and test QR code identification, are bounded by four borders. Borders are formed in the shape of the capital letter “L”. Each of these four borders is placed at one of the angles of the page and rotated accordingly to that angle.

The test can contain multiple types of questions: multiple-choice, fill-in, matching terms, etc. A rectangle circumscribes every question with solid edges. Questions in the test can span one or many pages. If the latter is the case, every part of the split question is identified by its solid rectangle. All information regarding the question is present within the question itself and does not span among other questions, as is depicted in Fig. 3.

Multiple-choice questions, the main objective of interest, hold their answers in a regular grid in an arbitrary number of rows and columns. Each answer is designated with a circle that should be filled, if the answer is considered the correct one, alongside the text representing that answer. Occasionally, multiple-choice answers are given as a regular grid of circles, with the names of those answers given on the header rows and columns of the surrounding table, as seen in Fig. 4.

As with any other question type, multiple-choice questions can naturally contain circle objects (graphically represented sets in mathematics, zero digits in numbers, “O” letters in words, etc.) which do not represent circle answers. They can sometimes be distributed in a regular grid and confused with circle answers. Consequently, distinguishing and separating circle answers and random noise was needed. Therefore, answers circles of each multiple-choice question are embodied inside a separate rectangular table.

It is worth noting that the layout and organization of questions and answers on test pages do not need to be fixed for each test. The layout of a particular test is determined by the people responsible for designing the test and must conform with the agreed rules above. All regions of interest are placed within four borders on the paper. There is a convention that uniquely identifies questions and answers within them. Questions are designated by the outermost rectangles within regions of interest. Answer regions of multiple-choice questions are designated by regular rectangular tables within question’s regions. Answer regions of open-type questions spawn through the entire question space.

The designed test is inputted in the Zoning module as a .pdf file. Firstly, the people administering the Zoning process are obliged to mark QR and Barcode regions on the main test page and the top left border on a page. Furthermore, they are required to designate question’s whereabouts for each question on the test. For a particular question, this designation is done by dragging the rectangle area from the top left question coordinate. Furthermore, for multiple-choice type questions, the answer region tables within these questions must be designated, as well. The coordinates of all these rectangles are relative to the top left border on a page. In both cases, this marking rectangle does not need to be precisely dragged, and it is the responsibility of a man operating the Zoning process to properly envelope each question.

The Zoning Module uses Hough transform to detect circles. The zoning is conducted on a test in a digital form (not printed, scanned, or filled in by a person) in a .pdf file format. Therefore, there is almost no noise present in the image. The text and figures were previously removed from all questions for security purposes, so the test contains only questions answers regions and circles for multiple-choice questions. Circles present on this version of the test are regular circle shapes, unlike the ones on the filled-in test, which are disfigured by printing and scanning the test and the students filling in the test.

The Zoning process results in a configuration .json file generated for a test that was being zoned. This configuration file for each test denotes its own layout, and this information is only used for validation purposes, and the whole recognition process is performed per se. The configuration of a test can vary from test to test and includes information such as page size, questions’ and answers’ roughly determined whereabouts, number of questions per page, question type, answer matrix dimension for multiple-choice questions, etc. The configuration file and the scanned tests themselves represent the input to the Processing module.

3.2 Scanning module

After the exam is finished, tests are carried to multiple scanning centers. There, every test is scanned by the operator and, after the scanning is completed, can be in one of the following states: complete, incomplete, and erroneous. Complete test is without any errors, and as a result of the scanning phase, a multipage .tiff file is generated per each test. Incomplete test is missing at least one of the pages, cannot be sent to the Processing module, and must be inspected manually. The erroneous test is caused by an inability to recognize QR or Barcode optically. This can be the case if the image is disfigured by the scanning machine, the test’s transportation, or students’ actions.

The scanning process is robust and resilient to test pages out of order arrival. Moreover, it assembles them in the proper order for each completed test. These files are uploaded to a dedicated server that listens to incoming requests and processes them, applying the steps described in Section 3.3 Processing Module. All requests also include additional information, such as the name of the scanning center, the id of the scanning machine, etc., in order to deal with any kind of unpredicted errors efficiently. In addition, the application sends statistics: number of completed tests, number of scanned pages, number of errors, etc. for further analysis.

3.3 Processing module

The main objective of the Processing module is to detect multiple choice questions and circular answers, given the scanned images of pages of the traditional pen and paper tests which the pupils and students answer. Moreover, the module is able to detect questions’ regions, answers’ regions within those questions, test borders, and student and test identification numbers. All features mentioned above of this module and its correct operation highly depend on the predefined configuration file for a particular test, which specifies the rules of questions and answers layout. The complete realization of the Processing module is depicted with pseudo code in Fig. 5.

3.3.1 Image processing

Each test is represented by a multipage .tiff file. Besides the test, a configuration .json file (created by a Zoning module) serves as an input to the Processing module. Every image of the multipage .tiff file depicts one test page. After loading the page images, morphological operations are performed on them. These operations are necessary to amplify the recognition process.

Firstly, the loaded image is converted to grayscale, as the following algorithms require this type of image as their argument. After that, the image is blurred using Gaussian blur, which effectively removes Gaussian noise from an image. The function convolves the image with a specified Gaussian kernel. It requires a positive and odd dimension rectangular kernel and chosen dimensions are 13 × 13 in the particular case.

The threshold binary inverted image is obtained by applying a certain threshold function to the image. The same threshold value is applied for every pixel of a particular image. If the value of the pixel is larger than the threshold, its value is set to 0. Otherwise it is set to the maximum value. The threshold function uses the Otsu algorithm to choose the optimal threshold value.

The binary, inverted image is further morphed using horizontal and vertical line kernels. The elements of interest, four borders, as well as the questions and answers boundaries, have their boundaries composed of horizontal and vertical lines. Hence the reasoning behind the morphology element shape choice. Applying the appropriate kernel removes noise represented by the text in the test from the inverted image.

The scanning process often disfigures contours that represent regions of interest. This disruption is mainly manifested in the perforation of the element’s contours. Having that in mind, a closing morphology operation is needed (opening on inverted binary image), which results in a closure of the holes. The obtained image after these morphological operations can be seen in Fig. 6.

3.3.2 Borders, questions and answers detection

Borders are identified as outermost contours shaped as a capital letter “L” that are placed in all four angles of each paper page of the test. The border template image is matched against all candidate borders contours to identify the likeliest candidates for each of the four borders. The pseudo code in Fig. 7 illustrates how they are obtained.

Questions are identified by the aforementioned rectangular boundaries. Potential answers’ regions within those questions are designated by regular rectangular tables. They are marked as potential since there could be another similarly shaped region that does not represent the answer region yet is considered to be a part of the question postulate itself. Multiple-choice type questions are the only type of questions containing answer regions. The following pseudo codes in Figs. 8 and 9 depict retrieval of questions’ and answers’ regions, respectively.

Contour is defined as the line joining all the points along the boundary of an image with the same intensity. In the case of the binary, inverted image, all pixels have one of the two levels of intensities – 0 or 1. There are several isolation methods, and the chosen mode for designating question’s contours is the external mode since the question boundaries are the outermost contours found on the image. Potential answer regions are identified by determining the cell contours that these regions are composed of. The chosen mode is tree mode, which yields the hierarchical relations between contours, including parent, child, and sibling. These cells are checked against the regular grid test, and, if passed, the region is added to the potential answer’s regions list.

Each test template is associated with a corresponding .json file, which holds information about the whereabouts of the regions of interest. The file keeps the information about questions’ and answers’ locations and the data about the test itself, namely the number of questions and answers and their organization within the test template. Consequently, the locations of questions and answers do not accurately represent marked entities on the test, yet are merely an approximation. Therefore, this information cannot be translated from the template to the particular image of a test orthogonally. The resulting locations of questions and their answers’ regions are obtained by merging information from the zoning process with the contours found by the computer vision process. That is the step where the potential answers’ regions are confirmed.

3.3.3 Circles detection within answer regions

For each multiple-choice question, there is at least one answer region designated by the regular rectangular table. At the moment of processing answer circles within these regions, all potential answers’ regions are already confirmed. Therefore, parts of the image representing answers’ regions can be extracted.

The tree retrieval mode searches extracted answer region images for contours. Obtained contours are sorted by their surface descending. The largest contour in the portion of the image representing the answer region is the outermost border contour bounding the answer region. This contour is important since the table cells composing the answers’ regions have that contour as their parent. Identifying the largest bounding contours aids the exploration of table cells, which hold circle answers.

Each circle representing the answer has one of the table cells as their parent and is the largest contour inside a particular cell. Hence, identifying the table cells is an important step in the circle detection process. Also, potential circle contours are the largest contours within the individual cells, which is why the obtained contours are initially sorted by their surface. If the potential circle contour passes the circle test, that table cell is no longer considered, and the contour is added to the potential circles’ list.

The circle test requires a series of mathematical operations to be applied to each potential contour. However, before the rather sophisticated circle test is used, the potential circle contour is checked to see if it is placed in the center of the surrounding table cell. That position is defined as the rectangular region, which dimensions comprise one-fifth of the surrounding cell and occupies the cell’s central portion. If the check succeeds, the closed contour’s curve length (also known as the perimeter) is determined. Secondly, the circle reliability index is calculated as a ratio between four times pi times the contour area and the surface of the perimeter squared, which is then compared to a given tolerance. For ideal circles, this ratio should yield a maximum value of 1. The lower the value of the circle reliability index, the less likely it is that the found contour is a circle. The tolerance is determined experimentally and has a value of 0.85. Thirdly, the moment of the candidate contour is calculated, which aids in the determination of the contour’s center of mass. The Center of mass of the ideal circle should be in its center. Bearing in mind that the contour is a line connecting points along the boundary that are having the same intensity, the distance from the center of mass to each of these points is calculated. The distances are sorted, after which the median value is found and at least 85% of points should have their distance to the center of mass in the epsilon environment of the median value, which is 10% of the median value. Both values are determined experimentally. The pseudo code for circle detection is depicted in Fig. 10.

The number of potential circle contours after the conducted circle test should not exceed half of the number of the cells in a table, denoted as N. If that is not the case, only the largest N contours are considered since answer circles are larger than other answer table elements. Further, the remaining contour candidates are validated against the grid test, which checks if the layout of contour candidates in each row of the table answer region is the same. The same check is also performed for the table columns. Moreover, the dimensions of a detected circles’ grid are checked against the question information in the test configuration file to prevent any kind of student’s mischief. For instance, if a student adds an additional circle column in the answer’s region, the system will detect the inappropriate answer due to the mismatch with the configuration file and declare this answer invalid. Finally, a proper minimum enclosing circle for each of the contours of the grid is calculated, which yields its center and radius.

3.3.4 Answer’s circles filled status determination

After the answer’s circle grids are detected, the level of blackness (fullness) of each detected circle should be determined. Firstly, the number of black pixels (white pixels in the binary inverted image) in a rectangular image slice containing the minimum enclosing circle is calculated. Subsequently, the ratio between the obtained value and the circle area is determined as the blackness level. However, even the non-filled circles have a border that contributes to the number of calculated black pixels. Consequently, after determining the blackness level of all circles, the min-max scaling is performed to obtain a blackness value of 0 for non-filled circles. The pseudo code in Fig. 11 determines the circle’s fullness status.

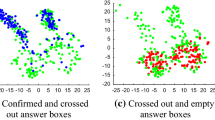

After the blackness level of each circle is calculated, every one of them should be labeled with a fullness status of black or white. Black circles are the ones with a blackness level above a certain threshold. The threshold value is determined for each test separately, as each student expresses their own method of answer filling. The blackness level and fullness status can be seen in Fig. 12. Fullness statuses are denoted with letters B and W for black and white, respectively.

3.3.5 Generating resulting .json file

After the fullness status of answer’s circle grids is determined, the results are written to a separate, resulting .json file. This file contains various information about all questions of the test. This information includes the number and type for all questions. Moreover, images representing portions of a test page regarding the regions of interest (questions’ and answers’ regions) are generated and their paths are stored in the resulting file. Additionally, for multiple-choice type questions, answer matrix is included. All aforementioned output files are next delivered to the Verification module for further interpretation.

3.4 Verification module

Multiple-choice type questions are verified by comparing information from the resulting .json file with correct answers stored in the database. Additionally, they are scored according to the correct answer sheet and points are stored in a separate database. Questions of other types are forwarded for manual review.

Manual review is performed with a separate software tool. Manual reviews are presented with images of regions of interest for questions that need manual evaluation. Reviewers’ task is to score the answers to these questions or report problems they cannot resolve, which prevents them from scoring these questions in the first place.

4 Result analysis

The system was tested on 2180 instances of a mathematics template test filled by students about to finish secondary school. The students did not have any previous training for filling the template tests. Each template test consisted of 16 pages having 24 questions in total. There were two types of questions on this test:

-

Open-type questions (for instance, fill-in, matching, etc.). There were 16 questions of this type. The task of the system was to identify their locations on the test and provide them to human graders, who are solely performing the grading process.

-

Multiple-choice questions. There were 8 questions of this type, and the system’s task was to identify their locations on the test and the locations of their answers. In addition to providing the human graders with detected questions, the system also performs the grading process.

The system could not identify all questions of only one test. The possible reason behind this is that, during the scanning process, the test was disfigured with a vertical line connecting two questions’ contours. This situation can be seen in the Fig. 13.

The system managed to correctly identify 52,317 (out of 52,320) questions on 2180 tests, out of which 17,440 were questions of multiple-choice type. The system successfully graded all identified multiple-choice type questions. However, having in mind that the human graders were part of the verification process of multiple-choice questions (exclusively this time, for testing and comparison purposes), as well, the occurrence of conflicts in grading was inevitable. There were in total 225 conflicts reported for multiple-choice questions. Out of these 225 conflicts, 174 were incorrectly marked as conflicts and their reporting was a result of errors made by human graders in the grading process. The remaining 51 had a problem with the level of the blackness of the circle answers. An example of the part of that test is depicted in Fig. 14.

Even though the first circle answer is somewhat marked, its level of blackness is below the global marked threshold. In these cases, a higher instance is needed as an arbitrator. Since the tests are completely depersonalized and distinguished solely by the student identification label, the student could use the specific marking style to signal his identity to a human grader. Still, it should be noted that the test instructions clearly state that the student needs to fill the desired answer circle completely.

The configuration used for the evaluation of the system was a server using an Intel(R) Core (TM) i5-6500 CPU running at 3.20 GHz and having 12GB of RAM. This configuration was chosen since it is used in the multiple centers of the Ministry of Education, Science, and Technological Development of the Republic of Serbia, where the Processing module is executed. The worst processing time for one test page was exactly 233 ms and 3.734 s for the particular test. Detailed processing time information in milliseconds per test is illustrated in Fig. 15. Compared to the manually grading process, which lasts 78 s per test on average (which was empirically evaluated during multiple years of experience), automated assessment is more than 18 times faster, even in the worst processing time. Each test is processed when it is completed and sent to the processing stage. In order to reduce the total processing time and use all the available resources, a worker thread pool is managed, where each completed test is processed by only one thread.

The following Table 1. illustrates a comparative analysis of discussed existing solutions mentioned in the related work section. Each algorithm performs on its own dataset, which differs in the number of instances present. The proposed solution was tested with 30% threshold of circle fullness level, as well as the variable one, which is calculated given the student’s circle filling method and is independent per each student test.

Given the results presented in Table 1, the proposed solution with a 30% threshold yields the best results in precision compared to the other solutions. Moreover, the implementation of variable thresholding did not benefit in terms of precision. Yet, this increased processing time since another pass through the test was required to determine the threshold.

5 Conclusion and future work

This paper discussed and presented the actual application of computer vision for the realization of the automated assessment system that grades paper tests. The most significant part of a system is the Processing module utilizing computer vision techniques, which is used to recognize important test elements, for instance, borders, questions, and answers. The main goal of this module is to detect circle answers distributed within a question in a regular rectangular grid and determine their fullness level. To improve the recognition process, this module checks detected elements against the information obtained by the Zoning module, which zones these elements on a template test. The Processing module operates on digital data provided by the Scanning module, which completes test pages and produces a resulting digital image file. Lastly, the Verification module scores final test results.

The evaluation of a system using real-world data shows that a processing component exhibits an astonishingly high accuracy in recognizing important test elements. In addition, the component shows high precision in recognizing circle answers and determining their fullness level. Lastly, the component can process individual tests in a short amount of time and in parallel on a custom configuration. In comparison with the related solutions, the proposed solution achieved the best results in terms of precision.

The system should be more resistant to possible test page disfigurations, which could be caused in some of the previous phases of the process (during the execution of the test in the classrooms, during the scanning phase, etc.). Also, the system could detect doubtful answer circles markings and classify those tests as suspicious, although they are already sent to manual review. Moreover, besides logging the errors, the system could classify tests by the occurred errors and their level of seriousness. These are also the main limitations of the study.

Bearing in mind that the system can only grade multiple-choice questions, in the future, the system’s capabilities can be broadened toward the grading of open-type questions [11, 13]. A first prerequisite, which is already fulfilled, is to detect these questions. The most suitable open-type question for grading is matching concepts. If lines connected the concepts, questions of this type could easily be detected using the existent solution and technology and would require minimal code modification. On the other hand, if the concepts were connected by writing characters or numbers listed in front of the given answers, a convolutional neural network could be of use [2, 3, 30]. However, a real challenge would be to detect handwritten essays in open-text type questions and interpret text meaning, which would require the usage of deep neural network models [14, 15] and natural language processing techniques [5]. Furthermore, if the software could someday verify the integrity of the text handwritten by the pen [8, 24], that would significantly contribute to preserving the examination process’s soundness. That is something that we will strive for in the future.

Data availability

The data supporting this study’s findings belong to the Ministry of education, science and technological development of the Republic of Serbia. Due to this reason, the data cannot be public. The authors receive data under special terms for research purposes only. If needed, the authors can send a request to the Ministry to make data available to the editor to verify the submitted manuscript. The source code is available at the following link: https://github.com/jocke93/AAPPT.

References

Bacanin N, Bezdan T, Venkatachalam K, Al-Turjman F (2021) Optimized convolutional neural network by firefly algorithm for magnetic resonance image classification of glioma brain tumor grade. J Real-Time Image Proc 18(1):1085–1098. https://doi.org/10.1007/s11554-021-01106-x

Bacanin N, Bezdan T, Tuba E, Strumberger I, Tuba M (2020) Monarch butterfly optimization based convolutional neural network design. Mathematics 8(6):1–32. https://doi.org/10.3390/math8060936

Bansal M, Kumar M, Sachdeva M, Mittal A (2021) Transfer learning for image classification using VGG19: Caltech-101 image data set. J Ambient Intell Hum Comput. https://doi.org/10.1007/s12652-021-03488-z

Barbosaa W, Vieira W (2019) On the improvement of multiple circles detection from images using Hough transform. Trends Comput Appl Math (TEMA) 20(2):331–342. https://doi.org/10.5540/tema.2019.020.02.0331

Borade JG, Netak LD (2020) Automated grading of essays: a review. Int Conf Intell Hum Comput Interact 238–249. https://doi.org/10.1007/978-3-030-68449-5_25

Catalan JA (2017) A framework for automated multiple-choice exam scoring with digital image and assorted processing using readily available software. DLSU Research Congress 2017, De La Salle University, Manila, pp 1–5

Chamorro MEG (2022) Cognitive validity evidence of computer- and paper-based writing tests and differences in the impact on EFL test-takers in classroom assessment. Assess Writ 51(1):1–21. https://doi.org/10.1016/j.asw.2021.100594

Dansena P, Bag S, Pal R (2022) Pen ink discrimination in handwritten documents using statistical and motif texture analysis: a classification based approach. Multimed Tools Appl 81(16):1–41. https://doi.org/10.1007/s11042-022-12843-x

Djekoune AO, Messaoudi K, Amara K (2017) Incremental circle Hough transform: an improved method for circle detection. Optik 133(1):17–31. https://doi.org/10.1016/j.ijleo.2016.12.064

Golekar D, Bula R, Hole R, Katare S, Parab S (2022) Sign language recognition using Python and OpenCV. Int Res J Modernization Eng Technol Sci 4(2):1–5

González-López S, Montes-Rosales ZG, López-Monroy AP, López-López A, García-Gorrostieta JM (2022) Short answer detection for open questions: a sequence labeling approach with deep learning models. Mathematics 10(13):2259. https://doi.org/10.3390/math10132259

GradeScanner mobile application for grading bubble sheets assessments automatically. (Last access 24.04.2023.) https://www.gradescanner.net/

Kumar M, **dal MK, Kumar M (2022) Distortion, rotation and scale invariant recognition of hollow Hindi characters. Sādhanā 47(2):92. https://doi.org/10.1007/s12046-022-01847-w

Kumar M, **dal MK, Kumar M (2022) A systematic survey on CAPTCHA recognition: types, creation and breaking techniques. Arch Computat Methods Eng 29:1107–1136. https://doi.org/10.1007/s11831-021-09608-4

Kumar M, **dal MK, Kumar M (2022) Design of innovative CAPTCHA for hindi language. Neural Comput Appl 34(6):4957–4992. https://doi.org/10.1007/s00521-021-06686-0

Lopez-Fuentes L, van de Weijer J, González-Hidalgo M, Skinnemoen H, Bagdanov AD (2018) Review on computer vision techniques in emergency situations. Multimedia Tools Appl 7713:17069–17107. https://doi.org/10.1007/s11042-017-5276-7

Lopez-Martinez A, Cuevas FJ (2019) Automatic circle detection on images using the teaching learning based optimization algorithm and gradient analysis. Appl Intell 49(5):2001–2016. https://doi.org/10.1007/s10489-018-1372-2

Loudon C, Macias-Muñoz A (2018) Item statistics derived from three-option versions of multiple-choice questions are usually as robust as four- or five-option versions: implications for exam design. Adv Physiol Educ 42(4):565–575. https://doi.org/10.1152/advan.00186.2016

Manzanera A, Nguyen T, Xu X (2016) Line and circle detection using dense one-to-one Hough transforms on greyscale images. J Image Video Proc 46(1):1773–1773. https://doi.org/10.1186/s13640-016-0149-y

Mingyang Z, **aohong J, Dong-Ming Y (2021) An occlusion-resistant circle detector using inscribed triangles. Pattern Recogn 109(1):1075–1088. https://doi.org/10.1016/j.patcog.2020.107588

Nardi A, Ranieri M (2019) Comparing paper-based and electronic multiple-choice examinations with personal devices: impact on students’ performance, self-efficacy and satisfaction. Br J Educ Technol 50(3):1495–1506. https://doi.org/10.1111/bjet.12644

Odeh N, Direkoglu C (2020) Automated shop** system using computer vision. Multimed Tools Appl 79(41):30151–30161. https://doi.org/10.1007/s11042-020-09481-6

Santosh KC, Antani SK (2020) Recent trends in image processing and pattern recognition. Multimed Tools Appl 79(47):34697–34699. https://doi.org/10.1007/978-981-16-0507-9

Shaheed K, Aihua M, Qureshi I, Abbas Q, Kumar M, **ngming Z (2022) Finger-vein presentation attack detection using depthwise separable convolution neural network. Expert Syst Appl 198(1). https://doi.org/10.1016/j.eswa.2022.116786

SmartGrade mobile application for grading multiple-choice answer sheets. (Last access 24.04.2023). https://smartgrade.net/

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. Proc IEEE Conf Comput Vis Pattern Recognit (CVPR) 2818–2826. https://doi.org/10.1109/CVPR.2016.308

Teh-Chuan C, Kuo-Liang C (2001) An efficient randomized algorithm for detecting circles. Comput Vis Image Underst 83(2):172–191. https://doi.org/10.1006/cviu.2001.0923

Tirpude P, Girhepunje P, Sahu S, Zilpe S, Ragite H (2022) Real time object detection using OpenCV-Python. Int Res J Modernization Eng Technol Sci 4(5):1–6

Tractenberg R, Gushta M, Mulroney S, Weissinger P (2013) Multiple choice questions can be designed or revised to challenge learners’ critical thinking. Adv Health Sci Educ 18(1):945–961. https://doi.org/10.1007/s10459-012-9434-4

Vaidya R, Trivedi D, Satra S, Pimpale PM (2018) Handwritten character recognition using deep-learning. Second Int Conf Inventive Communication Comput Technol (ICICCT) 772–775. https://doi.org/10.1109/ICICCT.2018.8473291

Velasco JS, Beltran AAV, Alayon JAC, Maranan PEB, Mascardo CMA, Sombrito JMB, Tolentino LKS (2020) Alphanumeric Test Paper Checker Through Intelligent Character Recognition Using OpenCV and Support Vector Machine. World Congress on Engineering and Technology; Innovation and its Sustainability 2018. WCETIS 2018. EAI/Springer Innovations in Communication and Computing. Springer, Berlin, pp 119–128. https://doi.org/10.1007/978-3-030-20904-9_9

Voulodimos A, Doulamis N, Doulamis A, Protopapadakis E (2018) Deep learning for computer vision: a brief review. Comput Intell Neurosci 2018(1):1–13. https://doi.org/10.1155/2018/7068349

**ao S, Li T, Wang J (2020) Optimization methods of video images processing for mobile object recognition. Multimed Tools Appl 79(25):17245–17255. https://doi.org/10.1007/s11042-019-7423-9

Zhenjie Y, Weidong Y (2016) Curvature aided Hough transform for circle detection. Expert Syst Appl 51(1):26–33. https://doi.org/10.1016/j.eswa.2015.12.019

Acknowledgements

This research was supported by the Science Fund of the Republic of Serbia, grant no. 6526093, AI-AVANTES (www.fondzanauku.gov.rs). The authors gratefully acknowledge the support.

Funding

This study was funded by the Science Fund of the Republic of Serbia, grant no. 6526093, AI-AVANTES (www.fondzanauku.gov.rs).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare they have no conflicts of interest to report regarding the present study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jocovic, V., Marinkovic, M., Stojanovic, S. et al. Automated assessment of pen and paper tests using computer vision. Multimed Tools Appl 83, 2031–2052 (2024). https://doi.org/10.1007/s11042-023-15767-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15767-2