Abstract

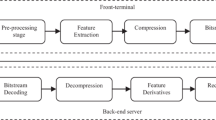

In this paper we present an experimental framework for Arabic isolated digits speech recognition named ARADIGITS-2. This framework provides a performance evaluation of Modern Standard Arabic digit recognition for a Distributed Speech Recognition (DSR) system in noisy environments at various Signal-to-Noise Ratio (SNR) levels. The data preparation and evaluation scripts follow a methodology similar to that used for the AURORA-2 database. The original speech data contain a total of 2704 clean utterances, spoken by 112 (56 male and 56 female) Algerian native speakers and down-sampled to 8 kHz. The feature vectors, which consist of a set of Mel Frequency Cepstral Coefficients (MFCCs) and log energy, are extracted from the speech samples using the ETSI Advanced Front-End (ETSI-AFE) standard, whereas the Hidden Markov Model Toolkit (HTK) is used to build the speech recognition engine. The recognition task is conducted in speaker-independent mode, considering both the word and the syllable as acoustic units. An optimal fitting of the HMM parameters, as well as of the temporal derivatives window, is carried out through a series of experiments performed on the two training modes: clean and multi-condition. Better results are obtained by exploiting the polysyllabic nature of Arabic digits. These results show the effectiveness of the syllable-like unit in building an Arabic digits recognition system: it exceeds the word-like unit in overall Word Accuracy Rate by 0.44% and 0.58% for the clean and multi-condition training modes, respectively.
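The evaluation is run at several SNR levels. As an illustration only, the sketch below shows the basic arithmetic of mixing noise into a clean utterance at a chosen SNR: the noise is scaled by a gain g so that 10·log10(P_speech / (g²·P_noise)) equals the target value. Power is taken here as the plain mean squared amplitude over the whole signal; this is an assumption, and the AURORA-2-style preparation that the framework follows may filter the signals and estimate the speech level differently. The function name `add_noise_at_snr` is hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence


def add_noise_at_snr(speech: Sequence[float], noise: Sequence[float],
                     snr_db: float,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Mix a noise segment into `speech` at the target SNR (in dB).

    Hypothetical helper: power is the mean squared amplitude over the
    whole signal, with no frequency weighting or speech-activity detection.
    """
    if not speech:
        raise ValueError("speech must not be empty")
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    if rng is None:
        rng = random.Random()
    # Cut a random noise segment with the same length as the utterance.
    start = rng.randint(0, len(noise) - len(speech))
    segment = noise[start:start + len(speech)]
    p_speech = sum(x * x for x in speech) / len(speech)
    p_noise = sum(x * x for x in segment) / len(segment)
    if p_noise == 0.0:
        raise ValueError("selected noise segment is silent")
    # Gain g such that p_speech / (g**2 * p_noise) == 10**(snr_db / 10).
    gain = math.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return [s + gain * n for s, n in zip(speech, segment)]
```

For example, `add_noise_at_snr(clean, babble, 5.0)` would return a 5 dB version of `clean`, where `clean` and `babble` are hypothetical sample sequences at the same 8 kHz rate. Drawing a random noise segment per utterance avoids pairing every utterance with the same stretch of noise.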
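The temporal derivatives window is one of the parameters tuned in the experiments. A common way to compute such derivatives, and the one documented for HTK, is linear regression over ±Θ neighbouring frames: d_t = Σ_{θ=1..Θ} θ·(c_{t+θ} − c_{t−θ}) / (2·Σ_{θ=1..Θ} θ²). The sketch below implements that formula, assuming the first and last frames are replicated at the utterance edges (HTK's documented default); the function name `regression_deltas` is hypothetical and `theta` plays the role of the window half-width.

```python
from typing import List, Sequence


def regression_deltas(frames: Sequence[Sequence[float]],
                      theta: int = 2) -> List[List[float]]:
    """Delta coefficients by linear regression over +/- theta frames.

    d_t = sum_{k=1..theta} k * (c_{t+k} - c_{t-k}) / (2 * sum_{k=1..theta} k**2)
    Frames outside the utterance are replaced by the first/last frame.
    """
    if theta < 1:
        raise ValueError("theta must be >= 1")
    n = len(frames)
    if n == 0:
        return []
    denom = 2 * sum(k * k for k in range(1, theta + 1))
    deltas = []
    for t in range(n):
        acc = [0.0] * len(frames[t])
        for k in range(1, theta + 1):
            fwd = frames[min(t + k, n - 1)]  # replicate the last frame at the end
            bwd = frames[max(t - k, 0)]      # replicate the first frame at the start
            for i in range(len(acc)):
                acc[i] += k * (fwd[i] - bwd[i])
        deltas.append([a / denom for a in acc])
    return deltas
```

A larger `theta` smooths the derivative estimate over more frames at the cost of temporal resolution, which is why the window size is worth tuning. Applying the same function to the deltas yields acceleration (delta-delta) coefficients.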
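Results are compared in terms of Word Accuracy Rate. Assuming the HTK convention (the toolkit named above), accuracy is computed from the alignment of recognized words against the N reference words as (N − D − S − I) / N × 100%, where S, D and I count substitutions, deletions and insertions; percent correct uses the same formula without the insertion term. The class and function names below are hypothetical, and any counts passed to them are illustrative rather than results from the paper.

```python
from dataclasses import dataclass


@dataclass
class AlignmentCounts:
    """Counts from aligning recognized words with the reference transcription."""
    n_ref: int          # N: number of reference words
    substitutions: int  # S
    deletions: int      # D
    insertions: int     # I


def word_accuracy(c: AlignmentCounts) -> float:
    """HTK-style accuracy in percent: (N - D - S - I) / N * 100."""
    if c.n_ref <= 0:
        raise ValueError("reference must contain at least one word")
    errors = c.deletions + c.substitutions + c.insertions
    return 100.0 * (c.n_ref - errors) / c.n_ref


def percent_correct(c: AlignmentCounts) -> float:
    """HTK-style percent correct: (N - D - S) / N * 100 (insertions ignored)."""
    if c.n_ref <= 0:
        raise ValueError("reference must contain at least one word")
    return 100.0 * (c.n_ref - c.deletions - c.substitutions) / c.n_ref
```

For example, 1000 reference digits with 20 substitutions, 5 deletions and 3 insertions (made-up numbers) give 97.2% accuracy and 97.5% correct; only accuracy penalizes the insertions.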

Acknowledgements

This work has been supported in part by the LCPTS laboratory project. We would like to thank Dr. Abderrahmane Amrouche for many suggestions that were exceptionally helpful in carrying out this research work. We would also like to thank Dr. Amr Ibrahim El-Desoky Mousa for providing support in interpreting the results.

Cite this article

Touazi, A., Debyeche, M. An experimental framework for Arabic digits speech recognition in noisy environments. Int J Speech Technol 20, 205–224 (2017). https://doi.org/10.1007/s10772-017-9400-x

DOI: https://doi.org/10.1007/s10772-017-9400-x