Abstract

Aposematism (considered here as an association between conspicuous colour patterns and the presence of a harmful secondary defence) has long been recognized as an anti-predator strategy, with salient traits serving as a warning signal to ward off would-be predators. Here we review evidence for a potentially widespread yet under-explored third component of this defensive syndrome, namely capture tolerance (the ability of the signaller to survive being captured and handled by would-be predators). We begin by collating the (largely anecdotal) available evidence that aposematic species do indeed have more robust bodies than cryptic species which lack harmful secondary defences, and that they are better able to survive being captured. We then present a series of explanations as to why aposematism and capture tolerance may be associated. One explanation is that a high degree of capture tolerance facilitates the evolution of post-detection (“secondary”) defences and associated warning signals. However, perhaps a more likely scenario is that a high capture tolerance is selected for in defended species, especially if conspicuous, because if they can survive for long enough to reveal their defences then they may be released unharmed. Alternatively, both capture tolerance and secondary defences may arise through independent or joint selection, with both traits subsequently facilitating the evolution of conspicuous warning signals. Whatever its ultimate cause, the three-way association appears widespread and has several key implications, including inhibiting the evolution of automimicry and shaping the evolution of tactile mimicry. Finally, we present a range of research questions and describe the challenges that must be overcome in developing a more critical understanding of the role of capture tolerance in the evolution of anti-predator defences.

Introduction

Many species are defended with toxins, unpleasant tastes, spines and/or other traits that make them unprofitable to consume by would-be predators (Ruxton et al. 2018). These secondary (post-contact) defences serve to protect the carrier, but they will not always be evident to the predator before capture. Consequently, many of these species have evolved conspicuous colour patterns which may serve as a salient signal to warn would-be predators to avoid them (Poulton 1890; Wallace 1889; White and Umbers 2021). Intriguingly, EB Poulton, who proposed the term “aposematism” to describe the combination of harmful defence and conspicuous colour patterns (Poulton 1890) also noted another important association that has so far received considerably less attention: “Great tenacity of life is usually possessed by animals with Warning Colours” (1908, pg. 316). In his seminal work on animal coloration, Cott (1940) similarly noted “Another characteristic shared by [aposematic] animals…is their savage disposition when attacked, their toughness, and their remarkable tenacity of life when injured.” (pg. 245). Likewise, in his influential review of animal defences Edmunds (1974) simply noted that “aposematic animals are also often tough” (pg. 63).

The relative difficulty of killing aposematic species, and in particular their physical robustness, has long been familiar to entomologists who regularly capture and pin specimens. The association is perhaps best known in Lepidoptera, which have given us many examples of warning signals and mimicry (Quicke 2017; Ruxton et al. 2018). A common technique for immobilising butterflies without damaging their wings is known as “pinching”, in which the insect’s thorax is held between the thumb and forefinger and squeezed until a soft “pop” is felt (e.g., Steppan 1996; Swynnerton 1926). Even before the recognition of aposematism as a defensive strategy, Trimen (1868) took notice when pinching the thorax of several species of African Danais and Acraea butterflies failed to have any obvious effect on the specimens, until a very high amount of force was used. Even after the butterflies’ apparent death, Trimen described, with surprise, how several of the seemingly dead specimens flew off “with perfect ease and apparent nonchalance” (Trimen 1868, pg. 499). Trimen credited the “tenacity for life” of these butterflies to their “remarkable elasticity”, and quickly made the connection to the putative unpalatability of these genera, proposing that naïve birds and other insectivores may sample the butterflies only to release them upon discovering their noxious taste, leaving the butterflies largely unharmed. Over the years many similar observations have been made by entomologists, all supporting the view that aposematic species are in general “tougher” than palatable, cryptic species (see Table S1, Supplementary Information). For example, Pinheiro and Campos (2013) noted “In contrast to palatable species (including Batesian mimics), which usually exhibit soft wings, aposematic butterflies have tough wings” (pg. 366).

Of course, the evaluation of pinching forces is clearly subjective and many of the above observations were simply made in passing. Some assertions are also not entirely independent, with authors simply echoing the beliefs of others. In this review, we have attempted to critically evaluate the available evidence for an association between the ability of species to survive being handled and whether they exhibit aposematism or crypsis. Note that warning signals can potentially evolve to indicate pre-capture defences such as high evasiveness, rather than harmful post-capture defences, such as spines or toxins (Ruxton et al. 2018) but it is the association of capture tolerance with post-capture (“secondary”) defences that we focus on here. If aposematic species are indeed able to tolerate capture better than alternative forms, then it raises two important questions. First, if warning signals function by preventing predation before physical contact, why have aposematic species evolved an ability to tolerate capture? Second, if aposematic species are so good at tolerating capture, why have warning signals evolved at all? We return to these questions at the end.

Successful predation involves the predator breaching not one but several stages of a prey’s defences in an attack sequence, which may include encounter, detection, identification, approach, subjugation and consumption (Endler 1991). Increasing attention is therefore being paid to understanding defences as inter-related suites of deterrents, with specific back-up defences being selected for when earlier defences fail (Britton et al. 2007; Kikuchi et al. 2023). Here we use this review to summarize the strength of evidence that warning signals, chemical defences and capture tolerance represent one such suite of defences (a syndrome), acting quasi-independently yet combining synergistically to enhance the survivorship of the prey (Winters et al. 2021). In so doing, we follow the lead of early researchers such as Carpenter (1929) who also viewed the three-way association between secondary defences, warning signals and capture tolerance as an integrated whole: “This resistance to injury is part and parcel of the process whereby an aposematic insect teaches an enemy that it is harmful or unpalatable. It almost invites attack, and if it is seized and handled, suffers little injury”.

We begin our review by justifying our use of the term “capture tolerance” to represent the ability of species to survive being seized and subjugated by a would-be predator. We then briefly describe the physical forces that prey may be subjected to when seized by their would-be predators, before describing the properties that would allow them to survive this treatment. Next, we describe the available evidence that aposematic species exhibit a greater degree of capture tolerance than cryptic palatable species, both from a morphological perspective and from an experimental perspective with real predators. We then consider why this association might arise before discussing its implications and highlighting areas for future work. To improve the focus of our review we have limited our examples to insects, although we note at the outset that a three-way association may arise in other taxonomic groups for similar reasons. For example, Cott (1940) highlighted the toughness of aposematic mammals, suggesting that it would be a selective advantage to a skunk, zorilla or porcupine to be constructed in such a way that it could survive any “ill-considered” blows before its defences are revealed.

Terminology

Here we use the term “capture tolerance” to reflect a species’ ability to survive being seized by a predator with little to no permanent damage, allowing it to go on to reproduce. Capture tolerance can, of course, be achieved in several ways, depending on the nature of the stresses imposed by the would-be predator. These stresses are generally some combination of crushing (compression), stretching (tensile) and tearing (shear) forces (Miller et al. 2009). While some species appear to have evolved weakened structures that break off under initial seizure, these traits appear to have evolved to avoid successful capture, rather than to tolerate capture per se, so we only consider them briefly here.

When discussing capture tolerance, it is helpful to have a specific predator in mind. Birds, spiders, other insects, fish, frogs, lizards, and mammals (amongst others) are all known to attack insects and use different strategies to do so (Sugiura 2020). Naturally, the body part that is first seized by a would-be predator will vary with the species of prey and predator. Butterflies, for example, are often seized by the wings (Kassarov 1999; Wourms and Wasserman 1985), while flies (order Diptera) have small wings and are typically seized by the abdomen or thorax. Some inter-related measurable traits of relevance to capture tolerance in insects therefore include stiffness (resistance to non-permanent deformation), hardness (resistance to permanent deformation), elasticity (ability to return to the original shape after the removal of the deforming force), resilience (ability to store strain energy via elastic deformation without breaking), strength (force per unit area required to initiate a crack) and toughness (energy per unit area required to propagate a crack), although the precise meaning of these terms varies from field to field.

A standard measure of the stress applied to a prey item by a would-be predator is its bite force, typically measured in newtons (N). Arguably of more relevance is the force the predator applies per unit area over which that force is distributed, i.e. its pressure (typically measured in pascals, i.e. N/m²). Using pressure may be particularly informative when comparing the destructive capability of predators with different jaw sizes and shapes (being stepped on by a stiletto can do more damage than being stepped on by a flat-soled shoe). However, due to limitations in the instruments used to measure forces (e.g. transducer plates), the difficulty of quantifying bite area, and the desire to have a standardized measure, it is generally the raw forces that are reported, rather than pressures (see Lederer 1975 for a notable exception).

Physical tests of the capture tolerance of aposematic and cryptic insects

Aposematic velvet ants (Hymenoptera: Mutillidae) are the models of several well-known mimicry rings. In their exploration of the defences of these species, Schmidt and Blum (1977) experimentally evaluated the hardness of several insect species by crushing the thoraxes of dry specimens using a Hanson Cook-O-Meter® weighing scale and a bar. The velvet ant, Dasymutilla occidentalis, which is protected by a powerful sting with algogenic venom, was able to withstand the highest absolute crushing force (27.8 N) before “skeletal collapse”, and more force relative to its mass (247 N/g) than any other species assayed. For context, Corbin et al. (2015) measured compressive (“bite”) forces of North American insectivorous birds and found them to range from 0.68 N (approximately 1.28 N/cm² given bill dimensions) in the yellow-rumped warbler (Dendroica coronata) to 6.31 N (approximately 2.14 N/cm² given bill dimensions) in blue jays (Cyanocitta cristata). Likewise, Aguirre et al. (2003) determined the bite forces of bats, which range from 1.27 N in the black myotis (Myotis nigricans) to 68 N in the greater spear-nosed bat (Phyllostomus hastatus), which feeds preferentially on beetles.
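As a rough worked example of the distinction between force and pressure, the figures quoted above can be combined in a few lines of Python. This is only a sketch: the contact areas are back-calculated from the quoted force and pressure values rather than measured, the function and variable names are illustrative, and a crushing force obtained from dry specimens need not correspond to what a live insect would tolerate in a real bite.

```python
# Illustrative arithmetic only; areas are inferred, not measured.
N_PER_CM2_TO_PA = 10_000.0  # 1 N/cm^2 = 10^4 N/m^2 (Pa)


def implied_area_cm2(force_n: float, pressure_n_per_cm2: float) -> float:
    """Contact area (cm^2) implied by a force and the pressure it produces."""
    return force_n / pressure_n_per_cm2


def to_pascals(pressure_n_per_cm2: float) -> float:
    """Convert a pressure from N/cm^2 to Pa."""
    return pressure_n_per_cm2 * N_PER_CM2_TO_PA


# (bite force in N, approximate pressure in N/cm^2), as quoted from Corbin et al. (2015)
BIRD_BITES = {
    "yellow-rumped warbler": (0.68, 1.28),
    "blue jay": (6.31, 2.14),
}
VELVET_ANT_CRUSH_N = 27.8          # Schmidt and Blum (1977), dry specimens
GREATER_SPEAR_NOSED_BAT_N = 68.0   # Aguirre et al. (2003)

for species, (force_n, pressure) in BIRD_BITES.items():
    area = implied_area_cm2(force_n, pressure)
    margin = VELVET_ANT_CRUSH_N / force_n
    print(
        f"{species}: {force_n} N over ~{area:.2f} cm^2 "
        f"({to_pascals(pressure):.0f} Pa); "
        f"velvet ant crush threshold is {margin:.1f}x this bite force"
    )
```

On these numbers the crushing threshold of D. occidentalis is more than four times the bite force of the blue jay, whereas the strongest bat bite reported exceeds it, which illustrates why it helps to have a specific predator in mind.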

In perhaps one of the most comprehensive datasets assembled to date, Wang et al. (2018a, b) used the dissected jaws of a lizard predator (Japalura swinhonis) embedded in resin as the crushing tool to quantify the “hardness” of a number of different insect species, measured as the maximum force at exoskeleton failure. As with Schmidt and Blum (1977), their work focused on evaluating the hardness of a conspicuously coloured (and potentially aposematic) species, the weevil Pachyrhynchus sarcitis, but in so doing they compared the physical properties of this weevil species with a diverse selection of sympatric insects, including other Coleoptera and insects in the orders Odonata, Orthoptera, Hemiptera, Blattodea, Neuroptera, and Hymenoptera. Although aposematic and non-aposematic taxa were not explicitly compared, it was noted that the mature aposematic form of P. sarcitis took more force to crush on average (20.1–40.9 N) than any of the other sympatric weevils (Curculionidae) in the same habitat, the majority of which appear to have been cryptic.

Another set of studies (DeVries 2002, 2003) was specifically interested in evaluating the tensile wing “toughness” of butterflies that varied in palatability to predators. In his 2002 paper, DeVries tested five species of sympatric nymphalid butterflies: two species considered palatable (Junonia terea and Bicyclus safitza) and three unpalatable (Amauris niavius, Acraea insignis and Acraea johnstoni), measuring the “toughness” of their wings by attaching a small metal electrical clip to the hindwing margin and adding weight until the clip ripped free. He found that the wing “toughness” varied significantly among species, with the wings of the unpalatable species taking more weight to rip compared to those of palatable species. Even the unpalatable species varied in their wing toughness, leading DeVries to suggest that this variation may be due in part to inter-specific variation in their unpalatability, with more unpalatable species having “tougher” wings. To further test this idea, DeVries (2003) evaluated the rip-resistance of the wings of three different nymphalid species in the same manner. The butterfly Amauris albimaculata (Danainae) was considered an aposematic (unpalatable and conspicuous) model for the putatively palatable Batesian mimic Pseudacraea lucretia (Nymphalinae), while the butterfly Cymothoe herminia (Nymphalinae) was chosen as a closely-related palatable non-mimetic species. Intriguingly, DeVries (2003) again found that the aposematic species had the highest tear weight, the cryptic species the lowest, and the “putative” Batesian mimic a tear weight which was intermediate between the two.

It is worth noting that the species tested in the above experiments varied significantly in wing length, with the aposematic species being the largest and the cryptic species being the smallest, making size a potentially confounding variable, especially given the fixed size of clip (Kassarov 2004). Moreover, while DeVries (2002, 2003) broke new ground in attempting to objectively compare the physical attributes of butterflies with different defences, the relationship between the weight required to rip the wing and the ability of an insect to survive capture is not straightforward. In particular, one might argue that if a wing more easily tears in the beak of a bird, then that butterfly is more liable to be able to escape. So, the differences uncovered by DeVries (2002, 2003) may have been driven more by selection on palatable species to avoid capture, rather than by selection on unpalatable species’ wings to resist tearing following capture (Kassarov 2004).

To help understand why the wings of different butterfly species vary in their ease of tearing, Hill and Vaca (2004) compared the critical hindwing tear weight of three Pierella species of butterfly (Satyrinae), all of which were considered palatable to birds but only one of which exhibits “deflective” patches. As predicted, the species with a conspicuous white hindwing patch (P. astyoche) had significantly lower tear weights than the two species lacking this patch (P. lamia and P. lena). In this way, the weakness of a butterfly wing (in terms of its ease of ripping) may be a strength. The counter-intuitive nature of inferences drawn from beak marks is reminiscent of the classic work by Abraham Wald on the “survival bias” in WWII bombers (Mangel and Samaniego 1984; Wald 1943), which concluded that reinforcing armour should go where bullet holes are not observed, rather than where they are.

Surviving sampling by predators

The properties of insects that confer resistance to physical forces are of interest here primarily because they suggest an ability to survive capture. However, some of the studies described above have been complemented by trials with real predators. As noted earlier, many aposematic velvet ants (Hymenoptera: Mutillidae) are extremely hard to crush (Schmidt and Blum 1977). Schmidt and Blum (1977) offered the species Dasymutilla occidentalis to a variety of predators. Out of 122 trials, only two specimens were killed, one by a gerbil and one by a tarantula. Gall et al. (2018) subsequently staged interactions between seven species of velvet ant and ten different species of potential predators with similar results. Likewise, Wang et al. (2018a) also reported that, in behavioural trials, all 40 of the live, hard (mature) specimens of the potentially aposematic weevil Pachyrhynchus sarcitis were immediately spat out after being bitten by tree lizards Japalura swinhonis (Agamidae), in each case surviving the attacks.

Many other studies have observed that chemically defended insect prey frequently survive being captured by predators. In particular, Järvi et al. (1981) presented wild-caught great tits, Parus major, with sequential choices of a piece of mealworm and an unpalatable swallowtail larva, Papilio machaon. The birds generally attacked the swallowtail larva at the first opportunity but soon learned to avoid attacking them after experiencing their distastefulness. Importantly, all the swallowtail larvae survived these initial attacks, whereas most of the mealworms were consumed. In an even more ambitious experiment, Wiklund and Järvi (1982) evaluated the response of hand-reared Japanese quail (Coturnix coturnix), starlings (Sturnus vulgaris), great tits (Parus major) and blue tits (Parus caeruleus) to five species of aposematic insect (swallowtail butterfly larvae Papilio machaon, large white butterfly larvae Pieris brassicae, burnet moths Zygaena filipendula, ladybirds Coccinella septempunctata and firebugs Pyrrhocoris apterus) and found that all of the predators frequently released these distasteful prey unharmed after capture. Ohara et al. (1993) presented naïve domestic chicks (Gallus gallus) with green larvae of the butterfly Pieris rapae and black larvae of the sawfly Athalia rosae (conspicuous against green). They found that the number of P. rapae attacked by the chicks increased per trial, with 60/80 being eaten in the third trial. By contrast, although A. rosae larvae were pecked, they were seldom killed or injured, with only 1/80 being consumed in the third trial. They put the high survival of A. rosae down to the fact that their bodies were elastic, and the fact that their integument has such a low mechanical resistance that even a slight peck causes the release of repellent haemolymph (“easy bleeding”, Boevé and Schaffner 2003).

While the above studies were laboratory based, field experiments have reported similar results. In a comprehensive series of studies in the neotropics, Chai (1996) found that naïve young jacamars would readily attack butterflies of any colour pattern. However, certain butterfly species were released after capture, and most survived this sampling. Chai (1996) put this high survivorship down to the fact that many of the unacceptable butterflies had a “tough” and flexible thorax. Thereafter, these unacceptable species tended to be sight rejected. By contrast, other species, notably erratically flying cryptic species, were frequently consumed when caught, creating a bimodal acceptability curve.

Rather than observe the reactions of hand-reared predators, Pinheiro and Campos (2019) observed the responses of wild adult jacamars to butterflies in the field. While the majority of aposematic butterflies (e.g. species of Heliconius and Parides) were sight rejected, all of the aposematic butterflies that were caught by the jacamars were released alive and without apparent injury. By contrast, cryptic butterflies (Hamadryas and Satyrini species) did not elicit sight rejections, and large numbers were successfully attacked and consumed.

Arthropod predators have also been known to release chemically defended butterfly species unharmed after capture. For example, Vasconcellos-Neto and Lewinsohn (1984) released live specimens of 27 butterfly species into the web of the neotropical forest spider Nephila clavipes. Almost all prey items were initially touched by the spiders with pedipalps and foreleg tarsi, allowing the spiders to feel and gently taste them. The vast majority of chemically defended ithomiine butterflies remained motionless in the web and were subsequently disentangled and released by the spiders. Curiously, Heliconius butterflies tended to struggle in the web and most specimens thrown in the webs were eaten. Despite the fact that heliconiids are unpalatable to birds (Chouteau et al. 2019), one reason for this difference may be that the cyanogenic glycosides found in heliconiids are not as repellent to other arthropods as the pyrrolizidine alkaloids found in ithomiines.

If the association between aposematism and capture tolerance exists, why might it arise?

So far, we have highlighted the widespread impression among entomologists that aposematic insect species tend to have relatively high capture tolerance compared to cryptic insect species. While a variety of observations support this view, much of the evidence remains anecdotal in that the studies were not specifically designed to compare the capture tolerance of aposematic and non-aposematic species. Clearly, much more remains to be done, including evaluating the capture tolerance of a broader range of insect species under standard conditions (see section “Future directions”). However, given the long-held belief that there is an association between aposematism and capture tolerance, it is natural to wonder why it might have evolved.

The evolution of a harmful secondary defence such as a toxin and associated warning signals (aposematism) has itself long been considered a puzzle (Caro 2023; Mappes et al. 2005; Ruxton et al. 2018). This is because the first conspicuous mutant of a chemically defended but cryptic species would face a “double whammy” of standing out, and yet not being recognized as defended. Faced with this problem, Fisher (1930) argued that aposematism might more readily evolve in species that are spatially or temporally aggregated, so that the traits may spread if they protect other individuals that have also inherited the chemical defence and associated signal. However, an ability to survive capture suggests that this form of selection (later known as “kin selection”, Maynard Smith 1964) is not the only solution, or even the most likely. Fisher (1930) was also aware of this alternate possibility: “Professor Poulton has informed me that distasteful and warningly coloured insects, even butterflies, have such tough and flexible bodies that they can survive experimental tasting without serious injury. Without the weight of his authority I should not have dared to suppose that distasteful flavours in the body fluids could not have been evolved by the differential survival of individuals in such an ordeal”.

Unfortunately, Poulton’s observations referred to by Fisher still leave us with a conundrum, because a complete explanation of aposematism evolving by individual selection should also explain the high capture tolerance of aposematic insects. Indeed, as Endler (1991) noted, observing aposematic species that can survive attack “begs the question of how toughness evolves”. Here we consider various possibilities, with capture tolerance either at the start or end of a sequence of selection, or the product of independent selection (see Fig. 1a–d).

Fig. 1 A range of plausible evolutionary pathways by which an association between secondary defences, warning signals and capture tolerance might evolve. Naturally, outside mimicry, warning signals will tend to evolve only after the possession of a suitable secondary defence. While it seems reasonable to assume that the possession of a secondary defence will tend to select for a body that is sufficiently robust to survive for long enough to reveal the defence, there are several routes by which the association might arise.

Capture tolerance generates selection for aposematism

One solution as to how the association arises is that high capture tolerance is an initially incidental trait that simply allows aposematism to evolve (Fig. 1a). As we have seen, many insect species are able to tolerate capture, and if this resilience arises for other reasons independent of anti-predator defence, then it may nevertheless set the stage for the evolution of defences and associated warning signals. The abiotic environment of an insect species inevitably imposes important selection pressures on the species’ physical properties, and hence (incidentally) its ability to tolerate capture by would-be predators. For example, large beetles living in arid environments have been found to be generally more resistant to crushing than those living in temperate areas, which may be a consequence, at least in part, of selection to avoid desiccation (Fisher and Dickman 1993a, b). Likewise, insects that frequently come into contact with abrasive substrates, such as those which burrow underground, must be able to resist excessive friction damage (Sun et al. 2008). The biotic environment, ranging from selection imposed by potential ectoparasites to intra-specific conflict over resources, may also select for body forms that happen to allow the species to survive capture by predators (Gullan and Cranston 2014). Once capture tolerance has arisen then, as Evans (1987) argued, it may allow “tough, harmless cryptics” to “evolve into tough, nasty aposematics” through individual selection, since it facilitates the evolution of secondary defences and associated warning signals.

Of course, capture tolerance may not be incidental at all, but represent an integral part of the insect’s secondary defence, making the prey unprofitable to consume from an energetic perspective (Cyriac and Kodandaramaiah 2019). For example, while it is tempting to consider conspicuous bumblebees as signalling their propensity to sting a would-be predator, the tough chitin and hairy bodies of these insects may be an even more important factor rendering them unprofitable (Gilbert 2005). In an ambitious series of aviary experiments, Mostler (1935) observed that bumblebees stung their would-be predators relatively infrequently and instead proposed that birds learned to avoid them due to their difficulty of handling. As Mostler (1935) reports, one bird (a whitethroat, Sylvia communis) took 18 min to beat, dismember and consume a bumblebee, after which it was exhausted. Similarly, Swynnerton (1926) detailed numerous accounts of hungry birds repeatedly failing to capture or consume live Charaxes butterflies and attributed this to the butterflies’ “hard” bodies and the violence with which they struggled upon being seized. Charaxes are generally considered palatable (Batesian) mimics of chemically defended models. However, if their “tough” integument and violent escape behaviours make them sufficiently unprofitable, then they could potentially be considered Müllerian mimics (Sherratt 2008), having evolved the same warning signal as other unprofitable species.

In short, there are multiple ways in which warning signals could evolve after capture tolerance, including the trivial solution that capture tolerance itself is the signalled defence. In this instance, rather than toughness being a “third component” of aposematism, it could just be one of several secondary defences contributing to unprofitability.

A secondary defence selects for capture tolerance and warning signals

If a species has evolved a form of post-capture defence (such as unpleasant chemicals derived from its host plant, or a painful sting) then it is likely that there will be strong selection on its capture tolerance to allow the prey to survive long enough for their defence to be revealed (Fig. 1b). Many defended species secrete chemical compounds and odours on handling (Whitman et al. 1985); ladybirds, for example, release pyrazine odours when disturbed (Marples et al. 1994). Armed with a secondary defence, any preview of what is in store if subjugation continues should therefore elicit a quick release. Indeed, it appears that the harmful deterrents within the body of aposematic prey are strategically allocated in a manner that rapidly teaches predators to avoid them and/or enhances prey survivorship (Brower and Glazier 1975). Evans et al. (1986) presented individuals of an aposematic (red and black) bug Caenocoris nerii (Hemiptera: Lygaeidae) that had been fed on oleander seeds (which contain cardiac glycosides) or sunflower seeds (cardenolide-free) to naïve common quail (Coturnix coturnix). Like many aposematic bugs, C. nerii has a rigid exoskeleton (Evans et al. 1986) and about 85% of all adults consequently survived the quails' attacks overall. However, the oleander seed-fed bugs were even less likely to be killed on attack, suggesting that the defence plays some role in facilitating survivorship.

Aposematism generates selection for capture tolerance

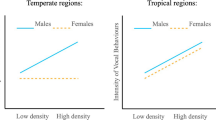

Aposematic species are not only highly conspicuous, but also tend to exhibit slow and predictable movement, rendering them relatively easy to catch (Dowdy and Conner 2016; Hatle and Faragher 1998; Marden and Chai 1991; Sherratt et al. 2004; Srygley 1994). Since many such species are initially attacked by naïve predators before they are consistently sight-rejected (Boyden 1976; Chai 1986), it stands to reason that the higher vulnerability of aposematic prey (at least initially) may further select for improved post-capture tolerance (Fig. 1c).

One might wonder why palatable species lacking a significant secondary defence have not likewise co-evolved a form of capture tolerance when their primary defence, notably crypsis or high evasiveness, fails. One reason may be that such species are so rarely caught (either because they are not detected, or because they are hard to catch) that there is weaker selection for a post-capture defence. However, another complementary reason is that palatable prey lacking a secondary defence have no means to repel the predator following capture, so may be less able to prevent their consumption.

To formalize the above set of arguments, we have modified the well-known model of Engen et al. (1986) (see Supplementary Information). Engen et al. (1986) assumed for convenience that the probability of a prey being eaten when seized (\({p}_{e}\)) was a constant, reflecting the distastefulness of the prey (a small value of \({p}_{e}\) corresponding to a high distastefulness). Here we instead assume that prey can invest r in their ability to survive capture, which can be measured in terms of its reduction in fecundity at the end of the season. This investment is non-linear with a maximum possible (high investment) survival probability after being seized of \({m}_{U}\) and \({m}_{P}\) for unprofitable and profitable (i.e. with and without a secondary defence) prey respectively (\({m}_{P}\le {m}_{U}\)).

Figures 2a, b show the fitness of the prey with and without a secondary defence for different mean numbers of encounters (\(\lambda\)) over its lifetime (reflective of its degree of crypsis) and different levels of investment in ability to survive capture (r). The optimal investment in robustness that maximizes each prey type’s fitness under these conditions is highlighted in red. The key insights of this model are: (1) when prey are rarely encountered (\(\lambda\) low), the optimal level of investment in robustness is 0 for both prey types, since the prey will not be selected to pay a cost for a trait that is rarely needed; (2) when unprofitable prey can honestly indicate their defences (giving higher maximal survivorship following capture, \({m}_{U}>{m}_{P}\)), unprofitable prey get more “bang for their buck” and consequently experience stronger selection to become robust; and (3) even profitable prey will ultimately face selection for a degree of robustness, but when \({m}_{P}\) is low this would only be worthwhile at high predator encounter rates.

Fig. 2 How conspicuousness (measured by the mean number of encounters of a prey type with a predator before reproduction, \(\lambda\)) and the investment in robustness after capture (r) combine to influence the fitness of (a) a profitable (without secondary defence) and (b) an unprofitable (with secondary defence) prey type. Fitness is measured by the number of surviving offspring at the end of the season. The optimal investment in robustness that maximizes the prey type’s fitness is shown in red. The model generating these figures closely follows Engen et al. (1986) but treats robustness as a trait that can be invested in, with parameters k = 0.1, b = 10, \(m_P\) = 0.2, \(m_U\) = 0.8 (see Supplementary Information for more details). When prey are rarely encountered (\(\lambda\) low), the optimal level of investment in robustness is negligible, because it is rarely needed. As conspicuousness of the prey type rises, unprofitable prey face selection to become more robust, especially if they are able to gain release by displaying their secondary defence (\(m_U > m_P\)). Even profitable prey will ultimately face selection for a degree of robustness, but only when they are highly conspicuous.
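The qualitative pattern described above can be explored numerically with a minimal sketch. The code below is not the model from the Supplementary Information: it assumes that every encounter leads to seizure, that the number of encounters is Poisson distributed with mean `lam`, that survival per seizure saturates as `m_max * (1 - exp(-b * r))`, and that fecundity declines linearly as `1 - r`. The function names, the role given to `b`, and the grid search are all illustrative assumptions; only the values 0.2 and 0.8 for the maximal survival probabilities are taken from the figure caption.

```python
import math


def survival_if_seized(r, m_max, b=10.0):
    """Assumed saturating return: survival per seizure rises from 0
    towards m_max as the investment r increases."""
    return m_max * (1.0 - math.exp(-b * r))


def fitness(lam, r, m_max, b=10.0):
    """Relative fitness under the stated assumptions.

    The fecundity remaining after investing r (assumed linear cost) is
    multiplied by the probability of surviving a Poisson(lam) number of
    seizures, which equals exp(-lam * (1 - s)) for per-seizure survival s.
    """
    s = survival_if_seized(r, m_max, b)
    fecundity = 1.0 - r
    return fecundity * math.exp(-lam * (1.0 - s))


def optimal_investment(lam, m_max, b=10.0, steps=1000):
    """Grid search over r in [0, 1] for the investment maximizing fitness."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda r: fitness(lam, r, m_max, b))


M_P, M_U = 0.2, 0.8  # maximal survival: profitable vs unprofitable prey

# Compare the optimal investment of the two prey types across encounter rates.
for lam in (0.05, 0.3, 1.0, 5.0):
    r_p = optimal_investment(lam, M_P)
    r_u = optimal_investment(lam, M_U)
    print(f"lambda={lam:>4}: profitable r*={r_p:.3f}, unprofitable r*={r_u:.3f}")
```

For the encounter rates tried here, the sketch returns zero optimal investment for both prey types at the lowest rate, a positive optimum for unprofitable prey at intermediate rates where profitable prey still invest nothing, and positive investment by both types at higher rates. These outcomes depend on the assumed functional forms and should not be read as reproducing the published figure.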

One intriguing possibility not covered in the above model is that the warning signals forewarn a would-be predator that the prey item is defended, so that it motivates the predator to handle it more carefully. In this way, a prey-type’s capture tolerance is not entirely independent of its signal. For example, Sillén-Tullberg (1985) found that great tits tended to taste-reject both red and grey forms of an unpalatable bug (Lygaeus equestris) after attacking them, but that the red form was more likely to survive this sampling, possibly as a consequence of more cautious sampling. Likewise, Yamazaki et al. (2020) recently deployed artificial prey in the field with two levels of crypsis (cryptic/conspicuous) and two levels of defence (palatable/unpalatable). They found that while the aposematic prey and cryptic unpalatable prey were attacked at similar rates, the aposematic prey exhibited a higher level of relative partial predation, leading them to suggest that predators attack aposematic prey with less aggression than other prey types (see also Carroll and Sherratt 2013).

The above studies support the contention that instead of instructing would-be predators to avoid them entirely, warning signals might serve as “go-slow” signals (Guilford 1994), causing would-be predators to be more attentive to the possibility that a prey item is harmful to attack. If warning signals cause predators to “handle with care,” then it seems reasonable to suggest that this forewarning not only reduces the handling cost for the predator should the prey turn out to be unprofitable (so that the predator is not stung, or smeared with a noxious compound, for example), but also increases the survivorship of the signalling prey when it is handled, allowing further selection to act on capture tolerance.

Co-evolutionary pathways

Of course, we do not necessarily need a linear sequence of events for the three-way association between secondary defence, warning signals and capture tolerance to arise. In particular, selection for both secondary defences and capture tolerance may be mediated by another aspect of the species’ life-history (Fig. 1d). For example, chemically defended insects tend to be relatively large (Pasteels et al. 1983), possibly because it is harder for these species to evolve effective crypsis (Prudic et al. 2007; Remmel and Tammaru 2009). However, larger insects also tend to be more resistant to compressive forces than smaller insects (Fisher and Dickman 1993a; Freeman and Lemen 2007; Herrel et al. 2001) at least in part due to the structural needs of a larger body (Williams et al. 2012). So, at least some associations between capture tolerance and aposematism may be simply driven by a “confounder”.

Summary

There are many plausible reasons why aposematic species might tend to survive capture more often than non-aposematic species. For example, physical “toughness” could facilitate aposematism. Alternatively, aposematism may facilitate selection for toughness. However, as we have stressed, physical toughness is not equivalent to capture tolerance. Indeed, the simplest explanation for the association is that predators simply let go of aposematic prey once they discover their secondary defences. Alternatively, or in addition, a warning signal could motivate predators to capture and handle aposematic insects more cautiously, which again does not require them to be “tougher”.

Implications of the association

Being able to survive for long enough to indicate the possession of secondary defences during capture has important implications for the evolution of anti-predator traits. Most notably, if conspicuous mutants with secondary defences can survive capture, then aposematism can readily evolve via individual selection (Fisher 1930; Wiklund and Järvi 1982). However, there are also other implications: following physical evaluation, if those variants displaying a harmful defence are more likely to be subsequently released unharmed, then automimics (variants that do not carry the defence) and Batesian (interspecific) mimics will tend to be selected against, or at least not gain quite as much. The whole process, analogous to checking for forgeries, would not be possible if all prey were simply killed on capture as is widely assumed in models of mimicry evolution (e.g. Aubier and Sherratt 2015; Speed 1993).

Naturally, if unpalatable or otherwise protected species tend to have high capture tolerance because of their mechanical properties (coupled with a preview of the defences themselves) then it is possible that a predator might use tactile stimuli to recognize these prey and release them. Birds are known to have various types of sensorimotor neurons in their beaks, jaws, and oral cavities which allow them to feel and manipulate the objects and food items they grasp (Kuenzel 1989; Schneider et al. 2014; Soliman and Madkour 2017). If prey with harmful secondary defences are sometimes rejected by predators simply on the basis of their “feel” then these tactile cues may be open to exploitation or reinforcement by other species (Batesian or Müllerian mimicry respectively). Tactile cues are perhaps the least-explored sensory component of prey evaluation and are likely to be of particular importance for nocturnal predators and those living in visually restricted environments. While it has long been recognised that predators can make exploratory contact with a prey item without consuming it, this has been largely evaluated in the context of gustatory taste-rejection and is rarely considered to be part of tactile exploration per se, although this possibility has been raised in several papers (DeVries 2003; Hogan-Warburg and Hogan 1981; Kassarov 1999; Swynnerton 1926).

In a comprehensive review, Gilbert (2005) drew attention to the wide variation in the extent to which palatable hoverfly species (Diptera: Syrphidae) resemble their stinging hymenopteran models. Some hoverfly species (the “high fidelity” mimics) appear to match their hymenopteran models very closely, not just in appearance but also in behaviour, whereas other mimetic species only bear a crude resemblance. Gilbert (2005) proposed that the high-fidelity mimics were on average “harder” and “more durable” than non-mimetic dipterans and low-fidelity hoverfly mimics, citing rounded, emarginate abdomens, a punctate cuticle, and strong joints between overlapping tergites as structural traits that are especially common in high-fidelity mimicry. Naturally, being harder and more durable would not only allow the flies to withstand the compressive and shear forces of their would-be predators, but also bring them closer to resembling Hymenoptera in texture (Matthews and Matthews 2010). Gilbert’s (2005) conjecture appears entirely plausible given that some of the higher fidelity hoverfly mimics also engage in mock-stinging (Penney et al. 2014), a behaviour that is only likely to be exhibited post capture. Unfortunately, however, the above proposals remain largely untested.

In his review of mimicry in insects, Rettenmeyer (1970) included mimics in his list of species that have resilient bodies: “…aposematic insects, models, and mimics often have harder, more durable bodies than other insects”. Rothschild (1971) argued the opposite, however: “…unlike the model, with its tough cuticle and other disagreeable qualities, the mimic cannot afford to be examined at close quarters”, and we have already quoted Pinheiro and Campos (2013), who suggested that palatable mimetic butterflies tend to have soft wings. Indeed, the robustness of the insect has occasionally been used by entomologists to distinguish models from their mimics, suggesting that, if mimics do ever evolve to resemble their models in structural texture, then it is not always close. In an 1883 letter, referenced by both Trimen and Bowker (1887) and Haase (1896), Bowker commented on two species of South African butterflies which he could only distinguish once he pinched their thorax. Reportedly, the Batesian mimics of the genus Pseudacraea were “brittle” and would die at once, while the aposematic Acraea were “leathery” and could be squeezed “as long as hard as you like without effect”. Thus, while there are some suggestions in the literature that certain Batesian mimics may have higher capture tolerance than non-mimetic species, and plausible explanations as to why it might arise, the best that can be said is that it remains uncertain whether predators use tactile cues to discriminate prey and hence whether there is selection on mimics to evolve tactile properties that are similar to their models.

Future directions

Blest (1963) noted the “familiar fact” that aposematic insects are generally tougher and more heavily sclerotized than their non-aposematic forms. He also referred to the widespread belief that this would allow them to survive sampling by inexperienced predators. However, he also cautioned that “These effects do not seem to have been searched for critically, and their confirmation would be of some interest”. It is fair to say that little has changed in the time since Blest’s cautionary note, in that there remains a dearth of systematic experiments to quantitatively compare the capture tolerance of insects.

As noted in section “If the association between aposematism and capture tolerance exists, why might it arise?”, the majority of studies to date have simply highlighted the remarkably high tolerance of certain aposematic species, but the flimsiness of species that lack post-contact defences may have been considered insufficiently newsworthy to merit attention. One might wonder whether non-aposematic prey, when subjected to the same duration of handling as a similar aposematic prey, would have a lower survival rate. Clearly the first avenue of future research should be aimed at broadening our pool of data, building on comparative studies such as Wang et al. (2018b) and Herrel et al. (2001), in which the capture tolerance of a wide variety of species is quantified. As we have seen, objectively quantifying an insect species’ capture tolerance, whether by physical assay or with real predators, is challenging, because it can be achieved through a variety of physical traits including hardness and elasticity and because different predators may exert different compressive, tensile and shear forces. Standardizing the test procedures would be desirable, but the most important criterion for these tests is that they should be replicable and conducted in a manner that is relevant to the organism’s natural predators (Kassarov 2004). This is not a small undertaking; there are hundreds of examples of aposematic species in the literature, of which only a scant few have been examined in the context of their capture tolerance.

When comparing capture tolerance among insects, phylogenetic relationships are important to consider, as certain groups of organisms, such as beetles, tend to be generally more resistant to deformation relative to others, such as grasshoppers, with members of each group having shared physical properties in part because of their phylogenetic relatedness. As more comparative data are assembled, they can be used to address a number of questions, which have received some provisional early answers (e.g. DeVries 2002, 2003; Gilbert 2005) but clearly require more work. For example, controlling for phylogeny and important confounders such as body size, do species with significant secondary defences tend to have higher capture tolerance than closely related species which lack these defences, as has been so widely conjectured? Likewise, are aposematic species that signal their evasiveness (for example, butterflies of the genus Morpho, Young 1971) less able to survive capture than related aposematic species that signal a secondary defence such as unpalatability? Are Batesian mimics generally more resistant to handling than comparable non-mimics? A phylogenetic analysis of the potential evolutionary transitions between species with different combinations of capture tolerance, secondary defences and warning signals may help reveal the order in which the traits have evolved (e.g. Loeffler-Henry et al. 2023), but until we have the baseline data, the temporal sequence will remain conjecture.

We began our review by asking: if warning signals work so well, why have aposematic insects evolved an ability to tolerate capture? To answer this question, we need a change in mindset: when researchers investigate animal defences, it is natural to highlight conditions under which they work, rather than fail. However, we have argued that when warning signals fail to prevent pursuit, the possession of the signalled defence will facilitate selection on the prey to survive for long enough for its defence to be shown. We also asked: if aposematic species are so good at tolerating capture, why have warning signals evolved at all? Once again, it is all too easy to fall into the trap of believing an anti-predator strategy works perfectly: despite a degree of capture tolerance, if some individuals die or get injured during the handling process, then there will be continued selection on signalling to reduce the rate at which they are pursued following detection. In this way, a secondary defence may facilitate the evolution of both a warning signal (“plan A”) to deter pursuit, and an ability to survive capture (“plan B”) when plan A fails.

Is capture tolerance a neglected third component of aposematism? We hope we have convinced readers that capture tolerance has largely been neglected in the field of anti-predator defence. However, despite numerous anecdotal remarks, papers highlighting the exceptional robustness of certain aposematic species, and many plausible reasons why capture tolerance should be associated with aposematism, we cannot yet be confident that it is a third component of aposematism. Of course, a high degree of capture tolerance in aposematic species will come “for free” if the signalled defence is one of toughness, or if the warning signals motivate the predator to attack cautiously. More generally, however, we need more experimental work evaluating the robustness of non-aposematic species to fully characterize the nature of the association, while being careful to distinguish physical traits that enhance capture tolerance from those that simply prevent capture.

Data availability

Not applicable.

References

Aguirre LF, Herrel A, Van Damme R, Matthysen E (2003) The implications of food hardness for diet in bats. Funct Ecol 17:201–212

Aubier TG, Sherratt TN (2015) Diversity in Müllerian mimicry: the optimal predator sampling strategy explains both local and regional polymorphism in prey. Evolution 69:2831–2845. https://doi.org/10.1111/evo.12790

Blest AD (1963) Relations between moths and predators. Nature 197:1046–1047. https://doi.org/10.1038/1971046a0

Boevé JL, Schaffner U (2003) Why does the larval integument of some sawfly species disrupt so easily? The harmful hemolymph hypothesis. Oecologia 134:104–111. https://doi.org/10.1007/s00442-002-1092-4

Boyden TC (1976) Butterfly palatability and mimicry - experiments with Ameiva lizards. Evolution 30:73–81

Britton N, Planqué R, Franks N (2007) Evolution of defence portfolios in exploiter–victim systems. Bull Math Biol 69:957–988

Brower LP, Glazier SC (1975) Localization of heart poisons in the Monarch butterfly. Science 188:19–25

Caro T (2023) An evolutionary route to warning coloration. Nature 618:34–35. https://doi.org/10.1038/d41586-023-01356-8

Carpenter H (1929) Mimicry. Nature 123:661–663

Carroll J, Sherratt TN (2013) A direct comparison of the effectiveness of two anti-predator strategies under field conditions. J Zool 291:279–285. https://doi.org/10.1111/jzo.12074

Chai P (1986) Field observations and feeding experiments on the responses of rufous-tailed jacamars (Galbula ruficauda) to free-flying butterflies in a tropical rainforest. Biol J Linn Soc 29:161–189. https://doi.org/10.1111/j.1095-8312.1986.tb01772.x

Chai P (1996) Butterfly visual characteristics and ontogeny of responses to butterflies by a specialized tropical bird. Biol J Linn Soc 59:37–67

Chouteau M, Dezeure J, Sherratt TN, Llaurens V, Joron M (2019) Similar predator aversion for natural prey with diverse toxicity levels. Anim Behav 153:49–59. https://doi.org/10.1016/j.anbehav.2019.04.017

Corbin CE, Lowenberger LK, Gray BL (2015) Linkage and trade-off in trophic morphology and behavioural performance of birds. Funct Ecol 29:808–815. https://doi.org/10.1111/1365-2435.12385

Cott HB (1940) Adaptive coloration in animals. Methuen & Co., London

Cyriac VP, Kodandaramaiah U (2019) Don’t waste your time: predators avoid prey with conspicuous colors that signal long handling time. Evol Ecol 33:625–636. https://doi.org/10.1007/s10682-019-09998-9

DeVries PJ (2002) Differential wing toughness in distasteful and palatable butterflies: direct evidence supports unpalatable theory. Biotropica 34:176–181. https://doi.org/10.1646/0006-3606(2002)034[0176:dwtida]2.0.co;2

DeVries PJ (2003) Tough African models and weak mimics: new horizons in the evolution of bad taste. J Lepidopterists’ Soc 57:235–238

Dowdy NJ, Conner WE (2016) Acoustic aposematism and evasive action in select chemically defended Arctiine (Lepidoptera: Erebidae) species: Nonchalant or not? PLoS ONE. https://doi.org/10.1371/journal.pone.0152981

Edmunds ME (1974) Defence in animals: a survey of anti-predator defences. Longman, Harlow

Endler JA (1991) Interactions between predators and prey. In: Krebs J, Davies N (eds) Behavioural ecology: an evolutionary approach, 2nd edn. Blackwell Scientific Publications, Oxford, pp 169–202

Engen S, Järvi T, Wiklund C (1986) The evolution of aposematic coloration by individual selection: a life-span survival model. Oikos 46:397–403. https://doi.org/10.2307/3565840

Evans DL (1987) Tough, harmless cryptics could evolve into tough, nasty aposematics: an individual selectionist model. Oikos 48:114–115

Evans DL, Castoriades N, Badruddine H (1986) Cardenolides in the defense of Caenocoris nerii (Hemiptera). Oikos 46:325–329. https://doi.org/10.2307/3565830

Fisher RA (1930) The genetical theory of natural selection. Clarendon Press, Oxford

Fisher DO, Dickman CR (1993a) Body size-prey relationships in insectivorous marsupials: tests of three hypotheses. Ecology 74:1871–1883

Fisher DO, Dickman CR (1993b) Diets of insectivorous marsupials in arid Australia: selection for prey type, size or hardness? J Arid Environ 25:397–410. https://doi.org/10.1006/jare.1993.1072

Freeman PW, Lemen CA (2007) Using scissors to quantify hardness of insects: Do bats select for size or hardness? J Zool 271:469–476. https://doi.org/10.1111/j.1469-7998.2006.00231.x

Gall BG, Spivey KL, Chapman TL, Delph RJ, Brodie ED, Wilson JS (2018) The indestructible insect: Velvet ants from across the United States avoid predation by representatives from all major tetrapod clades. Ecol Evol 8:5852–5862. https://doi.org/10.1002/ece3.4123

Gilbert F (2005) The evolution of imperfect mimicry. In: Fellowes M, Holloway G, Rolff J (eds) Insect evolutionary ecology. CABI, Wallingford, pp 231–288

Guilford T (1994) ‘Go-slow’ signalling and the problem of automimicry. J Theor Biol 170:311–316. https://doi.org/10.1006/jtbi.1994.1192

Gullan PJ, Cranston PS (2014) The insects: an outline of entomology. Wiley, New Jersey

Haase E (1896) Researches on mimicry on the basis of a natural classification of the Papilionidae: part 2 researches on mimicry. Erwin Nagele, Stuttgart

Hatle JD, Faragher SG (1998) Slow movement increases the survivorship of a chemically defended grasshopper in predatory encounters. Oecologia 115:260–267. https://doi.org/10.1007/s004420050515

Herrel A, Damme RV, Vanhooydonck B, Vree FD (2001) The implications of bite performance for diet in two species of lacertid lizards. Can J Zool 79:662–670. https://doi.org/10.1139/cjz-79-4-662

Hill RI, Vaca JF (2004) Differential wing strength in Pierella butterflies (Nymphalidae: Satyrinae) supports the deflection hypothesis. Biotropica 36:362–370. https://doi.org/10.1646/03191

Hogan-Warburg AJ, Hogan JA (1981) Feeding strategies in the development of food recognition in young chicks. Anim Behav 29:143–154. https://doi.org/10.1016/S0003-3472(81)80161-3

Järvi T, Sillén-Tullberg B, Wiklund C (1981) The cost of being aposematic - an experimental study of predation on larvae of Papilio machaon by the great tit, Parus major. Oikos 36:267–272

Kassarov L (1999) Are birds able to taste and reject butterflies based on ‘beak mark tasting’? A different point of view. Behaviour 136:965–981

Kassarov L (2004) A critical response to the paper “Tough African models and weak mimics: new horizons in the evolution of bad taste” by P. DeVries published in this journal, Vol 57(3) 2003. J Lepidopterists’ Soc 58:169–172

Kikuchi DW, Allen WL, Arbuckle K, Aubier TG, Briolat ES, Burdfield-Steel ER, Cheney KL, Daňková K, Elias M, Hämäläinen L, Herberstein ME, Hossie TJ, Joron M, Kunte K, Leavell BC, Lindstedt C, Lorioux-Chevalier U, McClure M, McLellan CF, Medina I, Nawge V, Páez E, Pal A, Pekár S, Penacchio O, Raška J, Reader T, Rojas B, Rönkä KH, Rößler DC, Rowe C, Rowland HM, Roy A, Schaal KA, Sherratt TN, Skelhorn J, Smart HR, Stankowich T, Stefan AM, Summers K, Taylor CH, Thorogood R, Umbers K, Winters AE, Yeager J, Exnerová A (2023) The evolution and ecology of multiple antipredator defences. J Evol Biol 36:975–991. https://doi.org/10.1111/jeb.14192

Kuenzel WJ (1989) Neuroanatomical substrates involved in the control of food intake. Poult Sci 68:926–937. https://doi.org/10.3382/ps.0680926

Lederer RJ (1975) Bill size, food size, and jaw forces of insectivorous birds. Auk 92:385–387

Loeffler-Henry K, Kang C, Sherratt TN (2023) Evolutionary transitions from camouflage to aposematism: Hidden signals play a pivotal role. Science 379:1136–1140

Mangel M, Samaniego FJ (1984) Abraham Wald’s work on aircraft survivability. J Am Stat Assoc 79:259–267. https://doi.org/10.1080/01621459.1984.10478038

Mappes J, Marples N, Endler JA (2005) The complex business of survival by aposematism. Trends Ecol Evol 20:598–603. https://doi.org/10.1016/j.tree.2005.07.011

Marden JH, Chai P (1991) Aerial predation and butterfly design: how palatability, mimicry, and the need for evasive flight constrain mass allocation. Am Nat 138:15–36

Marples NM, van Veelen W, Brakefield PM (1994) The relative importance of color, taste and smell in the protection of an aposematic insect Coccinella septempunctata. Anim Behav 48:967–974. https://doi.org/10.1006/anbe.1994.1322

Matthews RW, Matthews JR (2010) Insect behavior. Springer, New York

Maynard Smith J (1964) Group selection and kin selection. Nature 201:1145–1147. https://doi.org/10.1038/2011145a0

Miller TA, Andersen SO, Chandler HD, Gilby AR, Hackman RH, Hepburn HR, Huie P, Lewis CT, Locke M, Loveridge JP, Oberlander H, Neville AC, Scheie PO (2009) Cuticle techniques in arthropods. Springer-Verlag, New York

Mostler G (1935) Beobachtungen zur Frage der Wespenmimikry. Z Morphol Oekol Tiere 29:381–454

Ohara Y, Nagasaka K, Ohsaki N (1993) Warning coloration in sawfly Athalia rosae larva and concealing coloration in butterfly Pieris rapae larva feeding on similar plants evolved through individual selection. Popul Ecol 35:223. https://doi.org/10.1007/BF02513594

Pasteels JM, Gregoire JC, Rowell-Rahier M (1983) The chemical ecology of defense in arthropods. Annu Rev Entomol 28:263–289. https://doi.org/10.1146/annurev.en.28.010183.001403

Penney HD, Hassall C, Skevington JH, Lamborn B, Sherratt TN (2014) The relationship between morphological and behavioral mimicry in hover flies (Diptera: Syrphidae). Am Nat 183:281–289. https://doi.org/10.1086/674612

Pinheiro C, Campos V (2019) The responses of wild jacamars (Galbula ruficauda, Galbulidae) to aposematic, aposematic and cryptic, and cryptic butterflies in central Brazil. Ecol Entomol. https://doi.org/10.1111/een.12723

Pinheiro CEG, Campos VC (2013) Do rufous-tailed jacamars (Galbula ruficauda) play with aposematic butterflies? Ornitol Neotropical 24:365–367

Poulton EB (1908) The place of mimicry in a scheme of defensive coloration. Clarendon Press, Oxford, pp 293–382

Poulton EB (1890) The colours of animals: their meaning and use, especially considered in the case of insects. Kegan Paul, Trench Trübner, & Co. Ltd., London

Prudic KL, Oliver JC, Sperling FAH (2007) The signal environment is more important than diet or chemical specialization in the evolution of warning coloration. Proc Natl Acad Sci USA 104:19381–19386. https://doi.org/10.1073/pnas.0705478104

Quicke DLJ (2017) Mimicry, crypsis, masquerade and other adaptive resemblances. Wiley Blackwell, Hoboken, New Jersey

Remmel T, Tammaru T (2009) Size-dependent predation risk in tree-feeding insects with different colouration strategies: a field experiment. J Anim Ecol 78:973–980. https://doi.org/10.1111/j.1365-2656.2009.01566.x

Rettenmeyer CW (1970) Insect mimicry. Annu Rev Entomol 15:43–74

Rothschild M (1971) Speculations about mimicry with Henry Ford. In: Creed ER (ed) Ecological genetics and evolution. Blackwell, Oxford

Ruxton GD, Allen WL, Sherratt TN, Speed MP (2018) Avoiding attack: the evolutionary ecology of crypsis, aposematism, and mimicry, 2nd edn. Oxford University Press, Oxford

Schmidt JO, Blum MS (1977) Adaptations and responses of Dasymutilla occidentalis (Hymenoptera: Mutillidae) to predators. Entomol Exp Appl 21:99–111. https://doi.org/10.1111/j.1570-7458.1977.tb02663.x

Schneider ER, Mastrotto M, Laursen WJ, Schulz VP, Goodman JB, Funk OH, Gallagher PG, Gracheva EO, Bagriantsev SN (2014) Neuronal mechanism for acute mechanosensitivity in tactile-foraging waterfowl. Proc Natl Acad Sci USA 111:14941–14946. https://doi.org/10.1073/pnas.1413656111

Sherratt TN (2008) The evolution of Müllerian mimicry. Naturwissenschaften 95:681–695. https://doi.org/10.1007/s00114-008-0403-y

Sherratt TN, Rashed A, Beatty CD (2004) The evolution of locomotory behavior in profitable and unprofitable simulated prey. Oecologia 138:143–150. https://doi.org/10.1007/s00442-003-1411-4

Sillén-Tullberg B (1985) Higher survival of an aposematic than of a cryptic form of a distasteful bug. Oecologia 67:411–415. https://doi.org/10.1007/BF00384948

Soliman SA, Madkour FA (2017) A comparative analysis of the organization of the sensory units in the beak of duck and quail. Cytol Embryol 1:1–16. https://doi.org/10.15761/HCE.1000122

Speed MP (1993) Muellerian mimicry and the psychology of predation. Anim Behav 45:571–580

Srygley RB (1994) Locomotor mimicry in butterflies - the associations of positions of centers of mass among groups of mimetic, unprofitable prey. Philos Trans R Soc Lond Ser B-Biol Sci 343:145–155. https://doi.org/10.1098/rstb.1994.0017

Steppan SJ (1996) Flexural stiffness patterns of butterfly wings (Papilionoidea). J Res Lepidoptera 35:61–77

Sugiura S (2020) Predators as drivers of insect defenses. Entomol Sci 23:316–337. https://doi.org/10.1111/ens.12423

Sun JY, Tong J, Ma YH (2008) Nanomechanical behaviours of cuticle of three kinds of beetle. J Bionic Eng 5:152–157. https://doi.org/10.1016/S1672-6529(08)60087-6

Swynnerton CFM (1926) An investigation into the defences of butterflies of the genus Charaxes. Int Entomologen-Kongreß 2:478–504

Trimen R (1868) On some remarkable mimetic analogies among African butterflies. Trans Linnean Soc Lond 26:497–522

Trimen R, Bowker JH (1887) South-African butterflies. Trübner & Co., Ludgate Hill

Vasconcellos-Neto J, Lewinsohn TM (1984) Discrimination and release of unpalatable butterflies by Nephila clavipes, a neotropical orb-weaving spider. Ecol Entomol 9:337–344. https://doi.org/10.1111/j.1365-2311.1984.tb00857.x

Wald A (1943) A method of estimating plane vulnerability based on damage of survivors. Statistical Research Group, Columbia University reprint from July 1980 Center for Naval Analyses CRC 432

Wallace AR (1889) Darwinism - An exposition of the theory of natural selection with some of its applications. MacMillan & Co., London

Wang L-Y, Huang W-S, Tang H-C, Huang L-C, Lin C-P (2018a) Too hard to swallow: a secret secondary defence of an aposematic insect. J Exp Biol 221:1–10. https://doi.org/10.1242/jeb.172486

Wang LY, Rajabi H, Ghoroubi N, Lin CP, Gorb SN (2018b) Biomechanical strategies underlying the robust body armour of an aposematic weevil. Front Physiol 9:1–10. https://doi.org/10.3389/fphys.2018.01410

White TE, Umbers KDL (2021) Meta-analytic evidence for quantitative honesty in aposematic signals. Proc R Soc B Biol Sci 288:20210679. https://doi.org/10.1098/rspb.2021.0679

Whitman DW, Blum MS, Jones CG (1985) Chemical defense in Taeniopoda eques (Orthoptera: Acrididae): role of the metathoracic secretion. Ann Entomol Soc Am 78:451–455. https://doi.org/10.1093/aesa/78.4.451

Wiklund C, Järvi T (1982) Survival of distasteful insects after being attacked by naive birds - a reappraisal of the theory of aposematic coloration evolving through individual selection. Evolution 36:998–1002

Williams ML, Sullivan DE, Renninger GH, McFarland EI, Hunt JL (2012) Physics for the biological sciences. Nelson Education Ltd, Toronto

Winters AE, Lommi J, Kirvesoja J, Nokelainen O, Mappes J (2021) Multimodal aposematic defenses through the predation sequence. Front Ecol Evol 9:1–18. https://doi.org/10.3389/fevo.2021.657740

Wourms MK, Wasserman FE (1985) Butterfly wing markings are more advantageous during handling than during the initial strike of an avian predator. Evolution 39:845–851. https://doi.org/10.2307/2408684

Yamazaki Y, Pagani-Núñez E, Sota T, Barnett CRA (2020) The truth is in the detail: predators attack aposematic prey with less aggression than other prey types. Biol J Linn Soc 131:332–343. https://doi.org/10.1093/biolinnean/blaa119

Young AM (1971) Wing coloration and reflectance in Morpho butterflies as related to reproductive behavior and escape from avian predators. Oecologia 7:209–222

Acknowledgements

We thank Jeff Dawson, Graeme Ruxton, Jayne Yack, our AE (Tom Reader) and two anonymous referees for their helpful comments on our paper.

Funding

T.N.S. is supported by a Discovery Grant from the Natural Sciences and Engineering Research Council (NSERC).

Ethics declarations

Conflict of interest

The authors are not aware of any financial or competing interests that may have influenced this review.

Ethical approval

Not applicable.

About this article

Cite this article

Sherratt, T.N., Stefan, A. Capture tolerance: A neglected third component of aposematism?. Evol Ecol 38, 257–275 (2024). https://doi.org/10.1007/s10682-024-10289-1

DOI: https://doi.org/10.1007/s10682-024-10289-1