Abstract

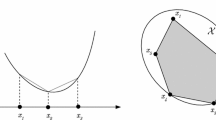

Solving large scale nonlinear optimization problems requires either significant computing resources or the development of specialized algorithms. For Linear Programming (LP) problems, decomposition methods can take advantage of problem structure, gradually constructing the full problem by generating variables or constraints. We first present a direct adaptation of the Column Generation (CG) methodology for nonlinear optimization problems, such that when optimizing over a structured set \({\mathcal {X}}\) plus a moderate number of complicating constraints, we solve a succession of (1) restricted master problems on a smaller set \({\mathcal {S}}\subset {\mathcal {X}}\) and (2) pricing problems that are Lagrangean relaxations wrt the complicating constraints. The former provides feasible solutions and feeds dual information to the latter. In turn, the pricing problem identifies a variable of interest that is then taken into account into an updated subset \({\mathcal {S}}'\subset {\mathcal {X}}\). Our approach is valid whenever the master problem has zero Lagrangean duality gap wrt to the complicating constraints, and not only when \({\mathcal {S}}\) is the convex hull of the generated variables as in CG for LPs, but also with a variety of subsets such as the conic hull, the linear span, and a special variable aggregation set. We discuss how the structure of \({\mathcal {S}}\) and its update mechanism influence the number of iterations required to reach near-optimality and the difficulty of solving the restricted master problems, and present linearized schemes that alleviate the computational burden of solving the pricing problem. We test our methods on synthetic portfolio optimization instances with up to 5 million variables including nonlinear objective functions and second order cone constraints. We show that some CGs with linearized pricing are 2–3 times faster than solving the complete problem directly and are able to provide solutions within 1% of optimality in 6 h for the larger instances, whereas solving the complete problem runs out of memory.

Similar content being viewed by others

Data availability statement

The source codes used to generate the computational results of this work are included in the supplementary material of the paper.

Notes

f and g satisfy some convexity properties in Sect. 3.

Strict feasibility is defined in Subsection 1.8.

More precisely, projections onto the variables x of a polyhedron in an extended space.

Originally used in a Generalized Benders Decomposition context.

The entropic risk measure is shown to be convex in z in [52].

References

Acerbi, C., Simonetti, P.: Portfolio optimization with spectral measures of risk. ar**v preprint cond-mat/0203607 (2002)

Ahmadi, A.A., Dash, S., Hall, G.: Optimization over structured subsets of positive semidefinite matrices via column generation. Discret. Optim. 24, 129–151 (2017)

Álvarez, C., Mancilla-David, F., Escalona, P., Angulo, A.: A Bienstock–Zuckerberg-based algorithm for solving a network-flow formulation of the convex hull pricing problem. IEEE Trans. Power Syst. 35(3), 2108–2119 (2019)

Barnhart, C., Johnson, E.L., Nemhauser, G.L., Savelsbergh, M.W.P., Vance, P.H.: Branch-and-price: column generation for solving huge integer programs. Oper. Res. 46(3), 316–329 (1998)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Bergner, M., Caprara, A., Ceselli, A., Furini, F., Lübbecke, M.E., Malaguti, E., Traversi, E.: Automatic Dantzig–Wolfe reformulation of mixed integer programs. Math. Program. 149(1–2), 391–424 (2015)

Bertsimas, D., Tsitsiklis, J.N.: Introduction to Linear Optimization, vol. 6. Athena Scientific Belmont, MA (1997)

Bienstock, D., Zuckerberg, M.: A new LP algorithm for precedence constrained production scheduling. Optimization Online pp. 1–33 (2009)

Bonnans, J.F., Gilbert, J.C., Lemaréchal, C., Sagastizábal, C.A.: Numerical Optimization: Theoretical and Practical Aspects. Springer Science & Business Media, Berlin, Germany (2006)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Briant, O., Lemaréchal, C., Meurdesoif, P., Michel, S., Perrot, N., Vanderbeck, F.: Comparison of bundle and classical column generation. Math. Program. 113(2), 299–344 (2008)

Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120(2), 479–495 (2009)

Chicoisne, R., Ordoñez, F., Espinoza, D.: Risk averse shortest paths: a computational study. INFORMS J. Comput. 30(3), 539–553 (2018)

Choi, E., Tcha, D.W.: A column generation approach to the heterogeneous fleet vehicle routing problem. Comput. Oper. Res. 34(7), 2080–2095 (2007)

Chou, C.A., Liang, Z., Chaovalitwongse, W.A., Berger-Wolf, T.Y., DasGupta, B., Sheikh, S., Ashley, M.V., Caballero, I.C.: Column-generation framework of nonlinear similarity model for reconstructing sibling groups. INFORMS J. Comput. 27(1), 35–47 (2015)

Dantzig, G.B., Wolfe, P.: The decomposition algorithm for linear programs. Econom. J. Econom. Soc. 767–778 (1961)

Dentcheva, D., Ruszczyński, A.: Portfolio optimization with stochastic dominance constraints. J. Bank. Financ. 30(2), 433–451 (2006)

Desaulniers, G., Desrosiers, J., Dumas, Y., Marc, S., Rioux, B., Solomon, M.M., Soumis, F.: Crew pairing at air France. Eur. J. Oper. Res. 97(2), 245–259 (1997)

Dong, H., Anstreicher, K.: Separating doubly nonnegative and completely positive matrices. Math. Program. 137(1–2), 131–153 (2013)

Du Merle, O., Villeneuve, D., Desrosiers, J., Hansen, P.: Stabilized column generation. Discret. Math. 194(1–3), 229–237 (1999)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55(1–3), 293–318 (1992)

Espinoza, D., Moreno, E.: A primal-dual aggregation algorithm for minimizing conditional value-at-risk in linear programs. Comput. Optim. Appl. 59(3), 617–638 (2014)

García, R., Marín, A., Patriksson, M.: Column generation algorithms for nonlinear optimization, I: convergence analysis. Optimization 52(2), 171–200 (2003)

García, R., Marín, A., Patriksson, M.: Column generation algorithms for nonlinear optimization, II: numerical investigations. Comput. Oper. Res. 38(3), 591–604 (2011)

Geoffrion, A.: Generalized benders decomposition. J. Optim. Theory Appl. 10(4), 237–260 (1972)

Giles, F.R., Pulleyblank, W.R.: Total dual integrality and integer polyhedra. Linear Algebra Appl. 25, 191–196 (1979)

Glover, F.: Surrogate constraint duality in mathematical programming. Oper. Res. 23(3), 434–451 (1975)

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM (JACM) 42(6), 1115–1145 (1995)

Gorge, A., Lisser, A., Zorgati, R.: Generating cutting planes for the semidefinite relaxation of quadratic programs. Comput. Oper. Res. 55, 65–75 (2015)

Greenberg, H., Pierskalla, W.: Surrogate mathematical programming. Oper. Res. 18(5), 924–939 (1970)

Khaniyev, T., Elhedhli, S., Erenay, F.S.: Structure detection in mixed-integer programs. INFORMS J. Comput. 30(3), 570–587 (2018)

Kleywegt, A.J., Shapiro, A., Homem-de Mello, T.: The sample average approximation method for stochastic discrete optimization. SIAM J. Optim. 12(2), 479–502 (2002)

Krokhmal, P., Palmquist, J., Uryasev, S.: Portfolio optimization with conditional value-at-risk objective and constraints. J. Risk 4, 43–68 (2002)

Levy, H., Markowitz, H.M.: Approximating expected utility by a function of mean and variance. Am. Econ. Rev. 69(3), 308–317 (1979)

Lovász, L.: On the Shannon capacity of a graph. IEEE Trans. Inf. Theory 25(1), 1–7 (1979)

Lübbecke, M., Desrosiers, J.: Selected topics in column generation. Oper. Res. 53(6), 1007–1023 (2005)

Müller, B., Muñoz, G., Gasse, M., Gleixner, A., Lodi, A., Serrano, F.: On generalized surrogate duality in mixed-integer nonlinear programming. In: International Conference on Integer Programming and Combinatorial Optimization, pp. 322–337. Springer (2020)

Muñoz, G., Espinoza, D., Goycoolea, M., Moreno, E., Queyranne, M., Rivera, O.: A study of the Bienstock–Zuckerberg algorithm: applications in mining and resource constrained project scheduling. Comput. Optim. Appl. 69(2), 501–534 (2018)

Murphy, F.H.: A column generation algorithm for nonlinear programming. Math. Program. 5(1), 286–298 (1973)

Muts, P., Nowak, I., Hendrix, E.M.: On decomposition and multiobjective-based column and disjunctive cut generation for MINLP. Optim. Eng. 22(3), 1389–1418 (2021)

Nesterov, Y., Nemirovskii, A.: Interior-point polynomial algorithms in convex programming. SIAM (1994)

Ni, W., Shu, J., Song, M., Xu, D., Zhang, K.: A branch-and-price algorithm for facility location with general facility cost functions. INFORMS J. Comput. 33(1), 86–104 (2021)

Nocedal, J., Wright, S.: Numerical Optimization. Springer Science & Business Media, Berlin, Germany (2006)

Nowak, I., Breitfeld, N., Hendrix, E.M., Njacheun-Njanzoua, G.: Decomposition-based inner-and outer-refinement algorithms for global optimization. J. Glob. Optim. 72(2), 305–321 (2018)

Park, Y.W.: Optimization for l 1-norm error fitting via data aggregation. INFORMS J. Comput. 33(1), 120–142 (2021)

Pessoa, A., Sadykov, R., Uchoa, E., Vanderbeck, F.: Automation and combination of linear-programming based stabilization techniques in column generation. INFORMS J. Comput. 30(2), 339–360 (2018)

Petra, C.G., Schenk, O., Anitescu, M.: Real-time stochastic optimization of complex energy systems on high-performance computers. Comput. Sci. Eng. 16(5), 32–42 (2014)

Petra, C.G., Schenk, O., Lubin, M., Gärtner, K.: An augmented incomplete factorization approach for computing the Schur complement in stochastic optimization. SIAM J. Sci. Comput. 36(2), C139–C162 (2014)

Pirnay, H., Lopez-Negrete, R., Biegler, L.: Optimal sensitivity based on IPOPT. Math. Program. Comput. 4(4), 307–331 (2012)

Pisinger, W.D., Rasmussen, A.B., Sandvik, R.: Solution of large quadratic knapsack problems through aggressive reduction. INFORMS J. Comput. 19(2), 280–290 (2007)

Porumbel, D., Clautiaux, F.: Constraint aggregation in column generation models for resource-constrained covering problems. INFORMS J. Comput. 29(1), 170–184 (2017)

Pratt, J.W.: Risk aversion in the small and in the large. Econom. J. Econom. Soc. 32(1/2), 122–136 (1964)

Ruszczyński, A.: On convergence of an augmented Lagrangian decomposition method for sparse convex optimization. Math. Oper. Res. 20(3), 634–656 (1995)

Sadykov, R., Lazarev, A., Shiryaev, V., Stratonnikov, A.: Solving a freight railcar flow problem arising in Russia. In: ATMOS-13th Workshop on Algorithmic Approaches for Transportation Modelling, Optimization, and Systems-2013. Dagstuhl Open Access Series in Informatics (2013)

Sadykov, R., Vanderbeck, F.: Column generation for extended formulations. EURO J. Comput. Optim. 1(1–2), 81–115 (2013)

Song, Y., Luedtke, J.: An adaptive partition-based approach for solving two-stage stochastic programs with fixed recourse. SIAM J. Optim. 25(3), 1344–1367 (2015)

Sponsel, J., Dür, M.: Factorization and cutting planes for completely positive matrices by copositive projection. Math. Program. 143(1–2), 211–229 (2014)

Sun, Y., Andersen, M.S., Vandenberghe, L.: Decomposition in conic optimization with partially separable structure. SIAM J. Optim. 24(2), 873–897 (2014)

Vandenberghe, L., Andersen, M.S.: Chordal graphs and semidefinite optimization. Found. Trends Optim. 1(4), 241–433 (2015)

Vielma, J.P., Ahmed, S., Nemhauser, G.L.: A lifted linear programming branch-and-bound algorithm for mixed-integer conic quadratic programs. INFORMS J. Comput. 20(3), 438–450 (2008)

Von Hohenbalken, B.: Simplicial decomposition in nonlinear programming algorithms. Math. Program. 13(1), 49–68 (1977)

Wachter, A., Biegler, L.: On the implementation of a primal-dual interior point filter line search algorithm for large-scale nonlinear programming. Math. Program. 106(1), 25–57 (2006)

Wang, J., Ralphs, T.: Computational experience with hypergraph-based methods for automatic decomposition in discrete optimization. In: International Conference on AI and OR Techniques in Constriant Programming for Combinatorial Optimization Problems, pp. 394–402. Springer (2013)

Wang, Y., Yin, W., Zeng, J.: Global convergence of ADMM in nonconvex nonsmooth optimization. J. Sci. Comput. 78(1), 29–63 (2019)

Zangwill, W.I.: The convex simplex method. Manag. Sci. 14(3), 221–238 (1967)

Zheng, Y., Fantuzzi, G., Papachristodoulou, A., Goulart, P., Wynn, A.: Fast admm for semidefinite programs with chordal sparsity. In: 2017 American Control Conference (ACC), pp. 3335–3340. IEEE (2017)

Zheng, Y., Fantuzzi, G., Papachristodoulou, A., Goulart, P., Wynn, A.: Chordal decomposition in operator-splitting methods for sparse semidefinite programs. Math. Program. 180(1), 489–532 (2020)

Zheng, Y., Sootla, A., Papachristodoulou, A.: Block factor-width-two matrices and their applications to semidefinite and sum-of-squares optimization. IEEE Transactions on Automatic Control (2022)

Acknowledgements

The author thanks Victor Bucarey, Bernard Fortz, Bernard Gendron (This paper is dedicated to Bernard Gendron who passed away during July 2022), Gonzalo Muñoz, Fernando Ordóñez, Dana Pizarro, Jupiler™ and two anonymous reviewers for their valuable comments on an early version of this work. Powered@NLHPC: This research was partially supported by the supercomputing infrastructure of the NLHPC (ECM-02).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Proof of Example 4

We necessarily have \(y_2=x_3=x_4=x_5=x_6=0\), \(y_1=1-x_1\) and \(x_2=1\), which turns the SDP constraint into \(x_1\geqslant 0\) and we have \(\omega ({\mathcal {X}})=0\). The conic dual of the latter problem is

We necessarily have \(\lambda _1=\lambda _4=\lambda _5=0\), \(\lambda _2=1\), which turns the SDP constraint into \(\lambda _3\geqslant \lambda _6^2\) and we have \(\omega '=-1<0=\omega ({\mathcal {X}})\), proving that \(P({\mathcal {X}})\) does not satisfy Assumption 1.

Now, by construction \({\mathcal {S}}\subset {\mathcal {X}}\) and \(P({\mathcal {S}})\) is a feasible LP that always satisfies Assumption 1. \(\square\)

Appendix B: Proof of Proposition 1

We prove that some \(({{\tilde{x}}}, {{\tilde{y}}})\) is strictly feasible for \(P({\mathcal {S}})\). Because \(-g({{\bar{x}}},{{\bar{y}}})\in \text {relint }{\mathcal {C}}\) and \({\mathcal {C}}\) is proper, there is \(\rho >0\) such that \({\mathcal {B}}(-g({{\bar{x}}},\bar{y}),\rho )\subset \text {relint }{\mathcal {C}}\). Consider \(({{\hat{x}}},\hat{\theta })\) strictly feasible for \({\mathcal {S}}\) and \(\epsilon >0\) and \({\tilde{x}}\) as follows:

Because \({{\bar{x}}}\in {\mathcal {S}}\) and \(({{\hat{x}}},{{\hat{\theta }}})\) is strictly feasible for \({\mathcal {S}}\), there exists \({{\bar{\theta }}}\) such that \(\phi ({{\bar{x}}}, {{\bar{\theta }}})=0\), \(-\gamma ({{\bar{x}}}, {{\bar{\theta }}})\in {\mathcal {K}}\), \(\phi ({{\hat{x}}}, {{\hat{\theta }}})=0\) and \(-\gamma ({{\hat{x}}}, {{\hat{\theta }}})\in \text {relint }{\mathcal {K}}\). \(\phi\) being linear, defining \({{\tilde{\theta }}} = {{\bar{\theta }}}+\epsilon ({{\hat{\theta }}} - \bar{\theta })\), we immediately have \(\phi ({{\tilde{x}}},{{\tilde{\theta }}})=0\). Further, \(\epsilon >0\), \({\mathcal {K}}\) is proper, \(-\gamma ({{\bar{x}}},\bar{\theta }) \in {\mathcal {K}}\) and \(-\gamma ({{\hat{x}}},{{\hat{\theta }}}) \in \text {relint }{\mathcal {K}}\) so we obtain \(-\epsilon \gamma ({{\hat{x}}},{{\hat{\theta }}})-(1-\epsilon ) \gamma ({{\bar{x}}},\bar{\theta })\in \text {relint }{\mathcal {K}}\). Because \(\gamma\) is \({\mathcal {K}}\)-convex and \(\epsilon \in ]0,1]\) we obtain \(-\gamma ({{\tilde{x}}},{{\tilde{\theta }}})\in \text {relint }{\mathcal {K}}\). We just proved that \(({{\tilde{x}}},{{\tilde{\theta }}})\) is strictly feasible for \({\mathcal {S}}\). We finish by proving that \(({{\tilde{x}}}, {\bar{y}})\) is strictly feasible for \(P({\mathcal {S}})\): By definition we have \(\rho \geqslant L\epsilon || {{\hat{x}}} - {{\bar{x}}} ||=L||{{\tilde{x}}}- {{\bar{x}}}|| =L||({{\tilde{x}}}- {{\bar{x}}};{{\bar{y}}}- {{\bar{y}}})||\). Because g is L-Lipschitz, we obtain that \(\rho \geqslant ||g({{\tilde{x}}},{{\bar{y}}}) - g({{\bar{x}}},{{\bar{y}}})||\), meaning that \(-g({{\tilde{x}}},{{\bar{y}}}) \in {\mathcal {B}}(-g({\bar{x}}, {{\bar{y}}}),\rho )\subset \text {relint }{\mathcal {C}}\). Because \(P({\mathcal {S}})\) is convex, Slater’s condition holds, thus finishing the proof. \(\square\)

Appendix C: Proof of Proposition 2

If \(L({\mathcal {X}},\lambda ^k)\) satisfies the P-property wrt y, the problem in y given some \(x\in {\mathcal {X}}\) is equivalent to the problem in y given \(x=x^k\), i.e. for any \(x\in {\mathcal {X}}\) we have

Because \(P({\mathcal {S}}^k)\) satisfies Assumption 1, \((x^k,y^k)\) is optimal for \(L({\mathcal {S}}^k,\lambda ^k)\), i.e.

In consequence, \(y^k\in \arg \min _{y}\{f(x^k,y)+\langle \lambda ^k , g(x^k,y)\rangle \}\). Using equality (13) finishes the proof. \(\square\)

Appendix D: Proof of Lemma 1

First, for any \(t\in [0,1]\) and any pair \((u^1,u^2)\in {\mathcal {U}}\times {\mathcal {U}}\) we have: \(t\varphi (u^1) +(1-t)\varphi (u^2) -\varphi (t u^1+(1-t)u^2) \in {\mathcal {K}}\). Given that \(\lambda \in {\mathcal {K}}^*\) we have \(\langle \lambda , t\varphi (u^1) +(1-t)\varphi (u^2) -\varphi (t u^1+(1-t)u^2) \rangle \geqslant 0\), meaning that

Appendix E: Proof of Lemma 2

The linear approximation of \(\psi\) at \({{\bar{u}}}\) is \({{\bar{\psi }}}[\bar{u}](u):= \psi ({{\bar{u}}})+ \langle \nabla \psi [{{\bar{u}}} ],u-{{\bar{u}}}\rangle\). By definition, for every \(u\in {\mathcal {U}}\):

implying that \({{\bar{\psi }}}[{{\bar{u}}}](u)= \langle \lambda , \varphi (\bar{u})\rangle + \langle \lambda , D\varphi ({{\bar{u}}} ) (u-{{\bar{u}}}) \rangle =\langle \lambda , {{\bar{\varphi }}}[{{\bar{u}}}](u)\rangle\). \(\square\)

Appendix F: Proof of Lemma 3

By convexity of \({\mathcal {U}}\) and optimality of \(u^*\), for any \(\epsilon \in ]0,1]\) and any \(u\in {\mathcal {U}}\) we have \(\varphi (u^ *)\leqslant \varphi (u^ *+\epsilon (u-u^ *))\), i.e.

which implies that when \(\epsilon \rightarrow 0\), for any \(u \in {\mathcal {U}}\) we have \(\left\langle \nabla \varphi \left( u^ *\right) ,u-u^ *\right\rangle \geqslant 0\), i.e.

meaning that \(u^*\) is optimal for \(\min _{u\in {\mathcal {U}}} \bar{\varphi }[u^*](u)\) and implying \(\omega ^ *= {{\bar{\omega }}}[u^ *]\). \(\square\)

Appendix G: Proof of Proposition 3

First, notice that the following holds:

In consequence, we have that:

which is separable in x and y. Wlog we can assume that (14) attains its minimum, otherwise the master problem is unbounded and we can stop. Under this assumption, the first order optimality conditions in y for the master problem \(P({\mathcal {S}}^k)\) are \(\nabla _y f(x^k,y^k)+D_y g ( x^k,y^k)^*\lambda ^k =0\), in turn implying that for any y: \(\langle \nabla _y f(x^k,y^k),y\rangle +\langle \lambda ^k,D_y g ( x^k,y^k) y\rangle =0\), meaning that the objective function of (14) is identically zero. \(\square\)

Appendix H: Proof of Proposition 5

Let us define the following subsets of [p]:

First notice that we can fix beforehand the following variables:

Next, for every \(j\in {\mathcal {J}}_-\), we use the change of variable \(z_j\leftarrow \mu _j-z_j\), obtaining the following problem:

where \({{\hat{\beta }}}:= \beta -\sum _{j\in {\mathcal {J}}_-\cup {\mathcal {J}}_\mu } \alpha _j \mu _j\) and:

The latter is a knapsack problem with positive capacity \({{\hat{\beta }}}\) and weights \({{\hat{\alpha }}}_j\) that can be solved by sorting the remaining indices \(j\in {\mathcal {J}}_+\cup {\mathcal {J}}_-\) in increasing disutility \({{\hat{\gamma }}}_j/{{\hat{\alpha }}}_j\) and filling the capacity constraint until no variable is available or the capacity constraint is tight. \(\square\)

Appendix I: Proof of Proposition 6

It is enough to show that \((u^*,( \pi ^*,\hat{\lambda },{\hat{\lambda }}_0))\) satisfies the KKT conditions for (9):

(15a) and (15b) are trivially satisfied. Given that \(-\gamma _0,\lambda ^*\geqslant 0\), the remaining conditions are equivalent to:

which are all implied by the KKT conditions for (10) at \((u^*,(\pi ^*,\lambda ^*))\). \(\square\)

Appendix J: Sparsity patterns for the enhanced models

Primal-dual interior point and Newton step Given a barrier parameter \(\mu >0\) and an optimization problem \(\min _{u}\{\varphi (u):\phi (u)\leqslant 0,\,Ru=s\}\), the barrier problem is defined as \(\min _{u}\{\varphi (u)-\mu \sum _{i=1}^p\ln (-\phi _i(u)):Ru=s\}\). The first order optimality conditions for the barrier problem are the following perturbed conditions:

The heavy works at each iteration of a primal-dual interior point algorithm occur during the Newton step, which consists in approximately solve the latter system of equations by solving in \((\Delta u,\Delta \beta , \Delta \pi )\) its following first-order approximation:

Monolithic formulation After the sparsity patterns in Figs. 8, 9, Figure 10 summarizes the number of nonzero coefficients present in the Newton step’s system.

The non-enhanced version has \(n^2+10n+T+2\) nonzeroes, with \(3n+T+2\) unknowns, while the enhanced version sums \(n(4S+8)+S(S(T+1)+2T+7)+2+T\) nonzeroes, with \(3n+2ST+4S+T+2\) unknowns. We summarize these results in Table 10 to illustrate the benefits of using the enhanced version instead of the original version (recall that we used \(S=20\)):

We can see that we can divide at least by a factor 100 the number of nonzero coefficients in our instances at the cost of having less than a percent of additional unknowns.

Pricing problems We only cover here the pricing problem when the variance constraint is considered as conic because the pricing when considering it as a single nonlinear constraint becomes very similar to the monolithic formulation. Given that the problem is splittable in each \(x^t\) (and \(x^0=y\)), we successively solve the pricing for each \(t\in \{0,...,T\}\), which is the problem for which we study the sparsity pattern. As per Fig. 11, Fig. 12 summarizes the number of nonzero coefficients present in the Newton step’s system.

The non-enhanced version has \(n_t^2+8n_t+1\) nonzeroes, with \(3n_t+1\) unknowns, while the enhanced version sums \(n_t(8+2S)+S(S+2)+1\) nonzeroes, with \(3n_t+2S+1\) unknowns. We summarize these results in Table 11 to illustrate the benefits of using the enhanced version instead of the original version, where we can see again that we can divide at least by a factor 100 the number of nonzero coefficients in our instances at the cost of having less than a percent of additional unknowns.

Appendix K: Proof of Proposition 7

The KKT conditions for (12) are

Conditions (16a)–(16b)–(16c) represent respectively primal and dual feasibility and complementary slackness for (11) at \(({{\tilde{u}}},(\tilde{\pi },{{\tilde{\lambda }}}))\). We now prove that (16d)–(16e) imply the last remaining KKT condition for (11): stationarity. Replacing (16d) in (16e) we obtain:

For any (u, v, w) such that \(Vu=v\) and \(Wu = w\), we have \(\varphi (u) = {{\tilde{\varphi }}}(Vu)\) and \(\gamma (u) = {{\tilde{\gamma }}}(Wu)\), implying that

Using (18) at \((u,v,w)=(\tilde{u},{{\tilde{v}}},{{\tilde{w}}})\) and replacing in (17) we get \(D\phi ({{\tilde{u}}})^\top {{\tilde{\pi }}} +\nabla \varphi ({{\tilde{u}}}) +D\gamma ({{\tilde{u}}})^*{{\tilde{\lambda }}} = 0\). \(\square\)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chicoisne, R. Computational aspects of column generation for nonlinear and conic optimization: classical and linearized schemes. Comput Optim Appl 84, 789–831 (2023). https://doi.org/10.1007/s10589-022-00445-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-022-00445-0