Abstract

This paper proposes a novel optimization algorithm, the hybrid salp swarm algorithm with teaching-learning based optimization (HSSATLBO), to solve reliability redundancy allocation problems (RRAP) with nonlinear resource constraints. The salp swarm algorithm (SSA) is one of the newest meta-heuristic algorithms and mimics the swarming behaviour of salps. It is an efficient swarm optimization technique that has been used to solve many kinds of complex optimization problems. However, SSA converges slowly because its exploitation ability is poor. To address this shortcoming and achieve a better balance between exploration and exploitation, HSSATLBO renovates the search procedures of SSA using the TLBO algorithm. The good global search ability of SSA and the fast convergence of TLBO together help to maximize system reliability through the choice of redundancy and component reliability. The performance of HSSATLBO is demonstrated on seven well-known reliability optimization benchmark problems: a series system, a complex (bridge) system, a series-parallel system, an overspeed protection system, a convex system, a mixed series-parallel system, and a large-scale system with dimensions 36, 38, 40, 42 and 50. The results of HSSATLBO are compared with several recently developed competitive meta-heuristic algorithms and with three improved variants of SSA. In addition, the HSSATLBO results are investigated statistically with the Wilcoxon signed-rank test and a multiple comparison test to establish their significance. The experimental results suggest that HSSATLBO significantly outperforms the other algorithms and is a remarkable and promising tool for solving RRAP.


1 Introduction

Since 1950, reliability optimization has played an increasingly decisive role because of its critical requirements in many engineering and industrial applications, and it has become an active research topic in engineering. To stay competitive, a reliability engineer's basic goal is to improve the reliability of product components or manufacturing systems, since an excellent reliability design allows a system to run more safely and reliably. In general, reliability optimization problems fall into two classes: integer reliability problems (IRP) and mixed-integer reliability problems (MIRP). In IRP, the component reliabilities of the system are known and the only task is to allocate the number of redundant components. In MIRP, both the component reliabilities and the redundancy allocation of the system are designed simultaneously. This kind of problem, in which system reliability is maximized through the choice of redundancy and component reliability, is also known as the reliability-redundancy allocation problem (RRAP). In a RRAP, the redundancy levels are integer variables and the component reliabilities are continuous variables lying between zero and one. Several researchers have worked on RRAP with the objective of maximizing system reliability under constraints such as system cost, volume, and weight [44,45,46,47,48, 66, 76]. Because of its complexity, RRAP is considered an NP-hard combinatorial optimization problem, and it has been the subject of much prior research using various optimization approaches. Recently, meta-heuristic algorithms (MHAs) have been applied successfully to overcome this computational difficulty in a wide range of practical optimization problems.
These methods apply probabilistic rules and approximate the optimal solution with a random population in the search space, which makes them more flexible than deterministic methods in finding better solutions.

Inspired by biological phenomena and human characteristics, researchers have developed a variety of population-based optimization techniques to address complex optimization problems. By source of inspiration, these techniques can be broadly classified into three categories:

(i) Swarm intelligence algorithms: These algorithms mimic the behaviour or intelligence of animals or plants in nature, such as artificial bee colony (ABC) [7], ant colony optimization (ACO) [18], grey wolf optimization (GWO) [60], particle swarm optimization (PSO) [42], whale optimization algorithm (WOA) [59], bat algorithm (BA) [85], cuckoo search (CS) [86], jellyfish search (JS) [16], mayfly algorithm (MA) [92] and salp swarm algorithm (SSA) [58].

(ii) Evolutionary algorithms: These algorithms mimic the mechanism of biological evolution, such as genetic algorithm (GA) [29], differential evolution (DE) [73], biogeography-based optimization (BBO) [71], and evolutionary programming (EP) [88].

(iii) Human-related algorithms: These algorithms are inspired by human nature or activities, such as passing vehicle search (PVS) [69], sine cosine algorithm (SCA) [57], teaching-learning based optimization (TLBO) [65], and coronavirus herd immunity optimizer (CHIO) [4].

According to the "No Free Lunch" (NFL) theorem [83], no MHA is best suited to solving all optimization problems. A particular algorithm may give efficient solutions for some optimization problems yet perform poorly on another set of problems. Thus, no MHA is perfect, and its limitations affect its performance. The NFL theorem therefore motivates researchers to develop new MHAs or to upgrade existing methods so that a wider range of complex optimization problems (COPs) can be solved. Among the strategies for upgrading an existing algorithm and overcoming its shortcomings, hybridizing two algorithms is a particularly effective choice: the operators of two different algorithms are merged to obtain better solutions. For example, Bao et al. [11] proposed HHO-DE, an improved Harris hawks optimization (HHO) method hybridized with the DE algorithm, for multilevel image thresholding. Ibrahim et al. [38] presented a hybrid optimization method that combines the salp swarm algorithm (SSA) with particle swarm optimization for the feature selection problem. In 2020, Zhang [94] proposed GNNA, a hybrid method combining grey wolf optimization (GWO) and the neural network algorithm (NNA). All of these hybrid algorithms have been shown to be more competitive than the corresponding original methods. Considering the efficiency of hybrid methods, this paper introduces a new hybrid algorithm based on SSA and TLBO to solve different kinds of reliability optimization problems.

Mirjalili et al. (2017) [58] first proposed an innovative population-based optimization method, the salp swarm algorithm (SSA), which mimics the swarming behaviour of salps. Characteristics such as a simple structure, robustness, and scalability make SSA an efficient method for many kinds of real-world problems (e.g., engineering design and optimization [80], feature selection [38], job shop scheduling [75], the optimal power flow problem [22], parameter optimization of power system stabilizers [21], power generation [68], and image segmentation [39, 8]). These advantages have led to a rapid growth of SSA studies in recent years. Despite its efficiency, the basic SSA has some major drawbacks on certain optimization problems. First, SSA suffers from stagnation in local optima. Second, SSA explores adequately but exploits poorly, which leads to an improper balance between exploration and exploitation. Finally, SSA has a poor convergence tendency and, on some optimization problems, needs more time to evaluate a new solution. To address these issues, researchers have applied different search mechanisms and modified operators to upgrade the original SSA. To mention a few, Gupta et al. [30] introduced a new SSA variant called m-SSA, which uses two search strategies, Lévy flight search and opposition-based learning, to increase the convergence speed and establish an appropriate balance between exploration and exploitation. Abasi et al. [1] proposed a new hybrid algorithm, H-SSA, that combines SSA with the β-hill climbing algorithm (βHC) to enhance the convergence speed and the local search ability of the conventional SSA.
Hegazy et al. [33] introduced an improved SSA based on an inertia weight search mechanism to improve reliability, accuracy, and convergence speed. Kassaymeh et al. [41] embedded a backpropagation neural network (BPNN) in SSA to solve the software fault prediction (SFP) problem, which enhances prediction accuracy. Sayed et al. [70] proposed a new hybrid algorithm, CSSA, based on chaos theory and the basic SSA, in which ten different chaotic maps are used to increase the convergence speed and obtain more accurate optimization results. Wang et al. (2018) [80] introduced a simplex-method-based SSA that improves the local search ability of the algorithm. To maintain a proper balance between exploration and exploitation, Wu et al. [82] introduced an improved SSA based on a dynamic weight factor and an adaptive mutation strategy. Further, Tubishat et al. [77] proposed an improved SSA based on opposition-based learning and a new local search strategy; these two improvements enhance the exploitation capability of SSA. Singh et al. (2020) [72] developed a hybrid algorithm, HSSASCA, that combines the sine cosine algorithm (SCA) and SSA to improve convergence efficiency in both local and global search. An extensive review of recent SSA variants and their applications is given by Abualigah et al. [3].

Apart from SSA, Rao et al. (2011) [65] proposed another significant optimization algorithm, TLBO, inspired by conventional classroom teaching. Teaching and learning are common human social behaviours and an important motivating process in which an individual tries to learn from others; a regular classroom teaching-learning environment allows students to improve their cognitive levels. Since its introduction, several researchers [2, 12, 13, 15, 36, 62, 80, 87] have used TLBO to solve real-world optimization problems. However, on large, complex global optimization problems it often becomes trapped in a local optimum. The main advantages of TLBO are that it requires no effort to calibrate initial parameters, it converges fast, and its computational complexity compares favourably with several existing algorithms such as GA, ABC, CS, SCA and PSO.

Motivated by the advantages of SSA and TLBO, this paper develops a hybrid algorithm called HSSATLBO. In general, search processes of a similar nature may lose diversity in the search space and risk getting trapped in a local optimum, whereas the different search techniques of two algorithms can increase the capacity to escape from local optima. In HSSATLBO, the basic structure of SSA is renovated by embedding the features of TLBO, and a probabilistic selection strategy determines whether the basic SSA or TLBO is applied to construct a new solution. During the search, TLBO helps to accelerate the convergence of HSSATLBO, whereas the excellent global exploration ability of SSA helps to find a better global optimum. Thus TLBO focuses on local search and SSA on global search, which may help to maintain a suitable balance between exploration and exploitation and produce efficient and effective results for RRAP. In this study, a population diversity measure for the proposed method is also introduced, and an exploration-exploitation evaluation is performed to investigate the search behaviour of both HSSATLBO and the conventional SSA. To reduce the computational complexity and improve its search abilities, HSSATLBO can reduce its population by keeping the diversity too low. Measuring exploration and exploitation also helps to identify how HSSATLBO performs better on an optimization problem. With these points in mind, the basic objective of this study is to present an efficient and effective algorithm for solving various types of reliability optimization problems. The main contributions of the paper are as follows:

- A hybrid algorithm, HSSATLBO, is developed by combining the features of the SSA and TLBO algorithms. The proposed algorithm retains the structure of the basic SSA and reconstructs it by embedding the search strategy of TLBO.

- The proposed method maintains a proper balance between exploration and exploitation: the basic SSA handles exploration, while the TLBO search strategy increases the exploitation capability of the algorithm. To generate a new solution, a new probabilistic selection strategy determines whether the original SSA or the TLBO algorithm is applied.

- To validate the effectiveness and efficiency of HSSATLBO, it is tested on seven well-known reliability redundancy optimization problems [9, 14, 23, 24, 28, 36, 43, 51, 56, 63, 78, 81, 89]. For a fair comparison, the test problems are also solved by the conventional SSA and three of its variants (LSSA, CSSA and GSSA), and a comparative study between HSSATLBO and these three SSA variants is performed.

- To determine whether the results are statistically significant, several tests (rank-tie, the Wilcoxon rank test, the Kruskal-Wallis test, and multiple comparison tests) are carried out on the results of the proposed and existing algorithms. These results verify that the proposed algorithm is effective and outperforms the other existing algorithms in terms of the best optimal solutions.

The rest of the paper is organized as follows. Section 2 briefly describes the traditional SSA, three variants of SSA (LSSA, CSSA and GSSA), and the TLBO algorithm. Section 3 presents the proposed HSSATLBO algorithm, the probabilistic selection procedure, and the exploration-exploitation measurement. The reliability redundancy allocation problems are described in Section 4. Section 5 presents the computational results of HSSATLBO, compares them with several existing algorithms, and validates them through statistical tests. Finally, Section 6 concludes the paper.

2 Basic algorithms

In this section, basic SSA, three improved variants of SSA, and the conventional TLBO algorithms are briefly described.

2.1 Salp swarm algorithm (SSA)

The salp swarm algorithm (SSA) is a population-based algorithm recently developed by Mirjalili et al. (2017) [58] to solve many kinds of optimization problems. The salp is a marine creature of the family Salpidae with a thin, barrel-shaped body open at the ends; it moves forward by pumping water through its body as propulsion. The behaviour of interest in this paper is the salps' swarming behaviour. In the oceans, salps form a swarm called a salp chain, which may help them to explore and to achieve better locomotion through rapid coordinated changes.

Based on this behaviour, the authors designed a mathematical model of salp chains and tested it on optimization problems. The salp population is divided into two groups, leaders and followers: the first salp of the chain is the leader, and the rest are followers. The positions of all Np salps are stored in a two-dimensional matrix X given in equation (1). The salps search for a food source, which represents the target of the swarm.

The fitness value of each salp is then calculated, and the best solution found so far is taken as the food source F. Since the leader's position must be updated regularly, the following equation (2) is proposed:

where \({x_{j}^{1}}\) is the position of the first salp (the leader) in the jth dimension, Fj is the food position in the jth dimension, and lbj and ubj are the lower and upper bounds of the jth dimension, respectively. D2 and D3 are random numbers between 0 and 1. Equation (2) shows that the leader updates its position only with respect to the food source. The coefficient D1 is a very important parameter of the algorithm because it balances the exploration and exploitation phases; it is determined by equation (3):

where T and it denote the maximum number of iterations and the current iteration, respectively. The parameters D2 and D3 control the direction and the step size of the next position in the jth dimension. After the leader's position has been updated, the followers' positions are updated using equation (4).
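Because equations (2) and (3) appear here only by number, the following minimal Python sketch of the leader update assumes the standard forms from Mirjalili et al. [58]: \(D_1 = 2e^{-(4\,it/T)^2}\), and each leader coordinate moves to \(F_j \pm D_1((ub_j-lb_j)D_2+lb_j)\), with the plus sign taken when \(D_3 \ge 0.5\). The 0.5 threshold and the function names are illustrative assumptions, not the authors' code.

```python
import math
import random


def d1_coefficient(it, max_iter):
    """Assumed form of equation (3): D1 = 2 * exp(-(4 * it / T)^2)."""
    return 2.0 * math.exp(-((4.0 * it / max_iter) ** 2))


def update_leader(food, lb, ub, it, max_iter, rng=random):
    """Assumed form of equation (2): move the leader around the food source F.

    For each dimension j, with fresh random numbers D2, D3 in [0, 1):
      x_j = F_j + D1 * ((ub_j - lb_j) * D2 + lb_j)   if D3 >= 0.5
      x_j = F_j - D1 * ((ub_j - lb_j) * D2 + lb_j)   otherwise
    """
    d1 = d1_coefficient(it, max_iter)
    leader = []
    for f_j, lo, hi in zip(food, lb, ub):
        d2, d3 = rng.random(), rng.random()
        step = d1 * ((hi - lo) * d2 + lo)
        leader.append(f_j + step if d3 >= 0.5 else f_j - step)
    return leader


# Example: a 3-dimensional leader at the midpoint of a run of 100 iterations.
new_leader = update_leader([0.5, 0.5, 0.5], [0.0] * 3, [1.0] * 3, 50, 100, random.Random(1))
```

Because D1 decays from 2 at it = 0 to roughly \(2e^{-16}\) at it = T, the leader's steps around the food source shrink over the run, which shifts the search from exploration towards exploitation.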

where i ≥ 2, \({x_{j}^{i}}\) is the position of the ith follower salp in the jth dimension, μ0 is the initial speed, t is the time, and \(\lambda =\frac {\mu _{final}}{\mu _{0}}\) with \(\mu = \frac {(x-x_{0})}{t}\).

In optimization, time corresponds to iterations, so the difference between consecutive iterations is 1. Taking μ0 = 0, the follower update becomes equation (5).

Some salps may move outside the search space; equation (6) brings them back within the bounds.
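A minimal sketch of the follower update and boundary handling. It assumes that equation (5) is the usual midpoint rule \(x_j^i = \tfrac{1}{2}(x_j^i + x_j^{i-1})\) for i ≥ 2 and that equation (6) resets an out-of-range coordinate to the bound it violated, a common choice in SSA implementations. The function names are hypothetical.

```python
def update_followers(positions):
    """Assumed form of equation (5): each follower (index >= 1) moves to the
    midpoint between itself and the salp in front of it; index 0 is the leader.

    Updates are applied in chain order, so follower i uses the position of
    salp i - 1 that was already updated in this pass.
    """
    for i in range(1, len(positions)):
        positions[i] = [(a + b) / 2.0 for a, b in zip(positions[i], positions[i - 1])]
    return positions


def clip_to_bounds(x, lb, ub):
    """Assumed form of equation (6): reset each coordinate outside [lb_j, ub_j]
    to the bound it violated."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]


chain = update_followers([[0.0, 0.0], [2.0, 4.0], [4.0, 8.0]])
inside = clip_to_bounds([-1.0, 0.5, 3.0], [0.0] * 3, [1.0] * 3)
```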

The detailed steps of the basic SSA are explained in Algorithm 1.

2.2 Improved variants of SSA with mutation strategies

Although the conventional SSA is highly competitive and effective on many kinds of complex optimization problems, it may become trapped in local optima and suffers from an improper balance between exploration and exploitation, which causes slow convergence. To overcome this difficulty and explore the solution space more adequately, Nautiyal et al. (2021) [61] introduced improved SSA variants named LSSA, CSSA, and GSSA, which use three mutation operators (Lévy flight based mutation, Cauchy mutation, and Gaussian mutation, respectively) to enhance the overall performance of SSA. These mutation operators make the algorithm more competent in exploring and exploiting the search space. In these improved variants, the mutation scheme is performed after a greedy search between the two consecutive positions of each salp, \({x_{j}^{i}} (t)\) at the tth iteration and \({x_{j}^{i}}(t+1)\) at the (t + 1)th iteration, as given by equation (7).

After the greedy search has been completed for each salp, the mutation strategy is applied with mutation rate mr, which is set to 0.7 in this study. The fitness of each newly generated mutated salp is compared with that of the original salp; if it is better, it replaces the original salp, otherwise it is discarded. The detailed pseudo-code of the mutation-based SSA is presented in Algorithm 2.
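Since equation (7) and Algorithm 2 are not shown here, the sketch below illustrates the described sequence of greedy selection followed by mutation with rate mr = 0.7. It assumes a minimisation objective and accepts any mutation operator as a callable (for example, one of the three operators described next); the helper names are hypothetical.

```python
import random


def greedy_select(old, new, f):
    """Greedy search between x(t) and x(t + 1): keep the new position only if
    it has a better (lower) fitness, otherwise keep the old one."""
    return new if f(new) < f(old) else old


def mutate_population(pop, f, mutate, mr=0.7, rng=random):
    """With probability mr, mutate each salp; keep the mutant only if it is better."""
    result = []
    for x in pop:
        if rng.random() < mr:
            mutant = mutate(x, rng)
            if f(mutant) < f(x):
                x = mutant
        result.append(x)
    return result


def sphere(x):
    """Simple test objective to minimise."""
    return sum(v * v for v in x)


old_pop = [[1.0, 2.0], [0.5, -0.5]]
new_pop = [[0.5, 1.0], [2.0, 2.0]]
# Greedy search between the positions at iterations t and t + 1.
pop = [greedy_select(o, n, sphere) for o, n in zip(old_pop, new_pop)]
# Hypothetical mutation operator that halves every coordinate; mr = 1.0 forces it.
pop = mutate_population(pop, sphere, lambda x, rng: [0.5 * v for v in x], mr=1.0)
```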

I. Lévy flight based SSA (LSSA):

Lévy flight based mutation is used to increase salp diversity in SSA. When the mutation rate allows, Lévy mutation can improve the global search ability by mutating the salps. Each mutated salp in LSSA is generated using equation (8):

where LF(δ) is a Lévy-distributed random number with variable size δ, which can be obtained using equation (9):

where u and v are drawn from standard normal distributions and β is a default constant set to 1.5.
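A common way to draw LF(δ) is Mantegna's algorithm: \(LF = u/|v|^{1/\beta}\), where v is standard normal and u is normal with standard deviation \(\sigma_u = \left[\frac{\Gamma(1+\beta)\sin(\pi\beta/2)}{\Gamma((1+\beta)/2)\,\beta\,2^{(\beta-1)/2}}\right]^{1/\beta}\). The sketch below assumes that equation (9) uses this scaling and that equation (8) adds a scaled Lévy step to each coordinate. Both assumptions, the step scale of 0.01, and the function names are hypothetical.

```python
import math
import random


def levy_sigma(beta=1.5):
    """Mantegna's standard deviation for the numerator u."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """One Lévy-distributed step u / |v|^(1/beta), u ~ N(0, sigma_u^2), v ~ N(0, 1)."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_mutate(x, scale=0.01, beta=1.5, rng=random):
    """Hypothetical form of equation (8): add a scaled Lévy step to each coordinate."""
    return [xj + scale * levy_step(beta, rng) for xj in x]


mutant = levy_mutate([1.0, 2.0], rng=random.Random(0))
```

For β = 1.5, \(\sigma_u \approx 0.697\). The heavy tail of the Lévy distribution occasionally produces long jumps, which is why it is used to improve global search.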

II. Cauchy-SSA (CSSA):

In Cauchy-SSA, a random number is generated. If, according to the mutation rate mr, it allows a new salp to be generated by the mutation scheme, each mutated salp of the swarm in CSSA is generated using equation (10):

where Cauchy(δ) is a random number generated using the Cauchy distribution function given by equation (11):

and the Cauchy density function is given by

where y is a uniformly distributed random number in (0,1) and η = 1 is a scale parameter.
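A Cauchy variate can be drawn by inverting the Cauchy cumulative distribution, \(\text{Cauchy}(\delta) = \eta\tan(\pi(y - 0.5))\) with y uniform on (0,1), and its density is \(f(\delta) = \frac{1}{\pi}\frac{\eta}{\eta^2+\delta^2}\). The sketch assumes this inverse-CDF form for equation (11) and an additive per-coordinate mutation for equation (10); the function names are hypothetical.

```python
import math
import random


def cauchy_from_uniform(y, eta=1.0):
    """Inverse-CDF sample of a Cauchy(0, eta) variate from y in (0, 1)."""
    return eta * math.tan(math.pi * (y - 0.5))


def cauchy_density(delta, eta=1.0):
    """Cauchy density f(delta) = (1 / pi) * eta / (eta^2 + delta^2)."""
    return eta / (math.pi * (eta ** 2 + delta ** 2))


def cauchy_mutate(x, rng=random, eta=1.0):
    """Hypothetical form of equation (10): add an independent Cauchy step per coordinate."""
    return [xj + cauchy_from_uniform(rng.random(), eta) for xj in x]


mutant = cauchy_mutate([0.0, 0.0], rng=random.Random(0))
```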

III. Gaussian-SSA (GSSA):

In GSSA, the mutation follows equation (13):

where Gaussian(δ) is a random number generated from the Gaussian distribution, whose density function is given by equation (14):

where \(\sigma^{2}\) is the variance for each salp. To generate random numbers, the standard deviation σ is set to 1.
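A matching sketch for GSSA, assuming a zero-mean Gaussian with σ = 1 and an additive per-coordinate mutation for equation (13). The variate comes from the standard library's `random.gauss`; the function names are hypothetical.

```python
import math
import random


def gaussian_density(delta, sigma=1.0):
    """Zero-mean Gaussian density with standard deviation sigma."""
    return math.exp(-delta ** 2 / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def gaussian_mutate(x, rng=random, sigma=1.0):
    """Hypothetical form of equation (13): add an N(0, sigma^2) step per coordinate."""
    return [xj + rng.gauss(0.0, sigma) for xj in x]


mutant = gaussian_mutate([0.0, 0.0, 0.0], rng=random.Random(0))
```

Compared with the Cauchy and Lévy operators, Gaussian steps have light tails, so GSSA mutations stay mostly local.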

2.3 Teaching-Learning Based Optimization (TLBO)

Rao et al. (2011) [65] developed TLBO, a population-based algorithm that reproduces the conventional teaching-learning process of a classroom. In TLBO, a group of learners forms the population, and the subjects taught to the learners represent the design variables. The fitness value indicates a student's grade after learning, and the student with the best fitness value is regarded as the teacher. The algorithm has two basic modes of learning: (1) through the teacher (the teacher phase) and (2) through interaction with other learners (the learner phase). The working procedure of TLBO is explained below.

In the teacher phase, assume that at iteration t the number of subjects (courses) offered to the learners is d and Np is the population size (the number of learners). The teacher's basic aim in this phase is to transfer knowledge to the students and to improve the average result of the class. The parameter Meanj(t) is the mean result of the learners in the jth subject (j = 1,2,…,d) at generation t and is given by equation (15).

XTeacher(t) denotes the learner with the best objective function value at iteration t, who is recognised as the teacher. The teacher tries to increase the knowledge of every student in the class, but learners gain knowledge according to their talent and the quality of teaching. The difference vector between the teacher and the average result of the students is calculated by equation (16).

where rand is a random number between 0 and 1 and TF is the teaching factor, whose value is decided randomly as given in equation (17):

The existing solution is then updated in the teacher phase according to equation (18):

If the new learner \(X_{j}^{m,new}(t)\) in generation t is better than \({X_{j}^{m}}(t)\), it replaces \({X_{j}^{m}}(t)\); otherwise the previous solution is kept.
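Equations (15) to (18) follow the standard TLBO teacher phase of Rao et al. [65]. The following is a minimal sketch for a minimisation objective. It assumes TF = round(1 + rand), which is 1 or 2 with equal probability, a TF drawn per learner, and a fresh random number per subject; implementations differ on these sampling details, and the function names are hypothetical.

```python
import random


def teacher_phase(pop, f, rng=random):
    """One TLBO teacher phase (minimisation) with greedy acceptance.

    pop: list of learners, each a list of d subject scores (design variables).
    """
    d = len(pop[0])
    mean = [sum(x[j] for x in pop) / len(pop) for j in range(d)]  # eq. (15)
    teacher = min(pop, key=f)                                     # best learner
    new_pop = []
    for x in pop:
        tf = rng.randint(1, 2)  # eq. (17): TF = round(1 + rand), i.e. 1 or 2
        # eqs. (16) and (18): x_new = x + rand * (X_teacher - TF * Mean)
        cand = [x[j] + rng.random() * (teacher[j] - tf * mean[j]) for j in range(d)]
        new_pop.append(cand if f(cand) < f(x) else x)  # keep only improvements
    return new_pop


def sphere(x):
    """Simple test objective to minimise."""
    return sum(v * v for v in x)


rng = random.Random(42)
class_before = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
class_after = teacher_phase(class_before, sphere, rng)
```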

Interaction with other students is an effective way to enhance knowledge: a learner can gain new information from other learners who know more. The learner phase proceeds as follows.

In the learner phase, a student Xm randomly selects a classmate Xn (≠Xm) to gain more knowledge. If Xn performs better, Xm moves towards Xn; if Xn performs worse, Xm moves away from it. This process is described by equations (19) and (20):

where \(f({X_{j}^{n}}(t))\) and \(f({X_{j}^{m}}(t))\) are the fitness values of \({X_{j}^{n}}(t)\) and \({X_{j}^{m}}(t)\), respectively. The pseudocode of the basic TLBO is given in Algorithm 3.
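A minimal sketch of the learner phase, equations (19) and (20), written for minimisation. For a maximisation problem such as RRAP, the comparison would be reversed. The sketch assumes a fresh random number per subject and updates learners in place, one after another; the function names are hypothetical.

```python
import random


def learner_phase(pop, f, rng=random):
    """One TLBO learner phase (minimisation) with greedy acceptance.

    Learners are updated one after another, so a later learner may interact
    with a classmate that was already improved in this pass.
    """
    new_pop = [list(x) for x in pop]
    n = len(new_pop)  # requires at least two learners
    for m in range(n):
        k = rng.randrange(n - 1)
        if k >= m:
            k += 1  # guarantees classmate k differs from learner m
        xm, xk = new_pop[m], new_pop[k]
        if f(xk) < f(xm):  # classmate is better: move towards it
            cand = [a + rng.random() * (b - a) for a, b in zip(xm, xk)]
        else:  # classmate is not better: move away from it
            cand = [a + rng.random() * (a - b) for a, b in zip(xm, xk)]
        if f(cand) < f(xm):
            new_pop[m] = cand
    return new_pop


def sphere(x):
    """Simple test objective to minimise."""
    return sum(v * v for v in x)


rng = random.Random(7)
before = [[rng.uniform(-5, 5) for _ in range(4)] for _ in range(8)]
after = learner_phase(before, sphere, rng)
```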

3 Proposed method

3.1 Probabilistic selection procedure

A proper balance between exploration and exploitation is essential for a well-designed meta-heuristic optimization algorithm. In this study, the hybrid algorithm HSSATLBO is therefore introduced by modifying the basic structure of SSA. A probabilistic selection parameter (PSP) decides whether the search equation of SSA (Algorithm 1) or of TLBO (Algorithm 3) is used to generate a new solution. PSP is given by equation (21):

Here, PSPmin and PSPmax denote the minimum and maximum values of PSP, respectively, MaxIter is the maximum number of generations, and iter is the current generation. Equation (21) shows that PSP is large in the early iterations, which favours the search equation of SSA; as the number of iterations increases, the probability of selecting the search equation of TLBO also increases. In this study, PSPmin and PSPmax are set to 0.3 and 0.9, respectively, so the value of PSP lies in [0.3, 0.9].
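Equation (21) is not reproduced in this text. The description fixes only its range [0.3, 0.9] and its trend (large early, smaller later), so the sketch below assumes a linear decrease from PSPmax to PSPmin. The actual formula may differ, and the function names are hypothetical.

```python
import random


def psp(it, max_iter, psp_min=0.3, psp_max=0.9):
    """Hypothetical linear schedule: PSP falls from psp_max at it = 0
    to psp_min at it = max_iter."""
    return psp_max - (psp_max - psp_min) * it / max_iter


def choose_operator(it, max_iter, rng=random):
    """Use the SSA update when rand < PSP, otherwise the TLBO update."""
    return "SSA" if rng.random() < psp(it, max_iter) else "TLBO"
```

Under this schedule, SSA is chosen about 90% of the time at the start and about 30% at the end, which matches the described shift from exploration towards TLBO-driven exploitation.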

3.2 The proposed HSSATLBO

This section presents the framework of the proposed HSSATLBO, which combines SSA and TLBO. The conventional SSA is very efficient in exploration but poor in exploitation; as a result, it fails to balance exploitation and exploration and often cannot reach the global optimum. To avoid this, the salp position update is enhanced by reconstructing the basic structure of SSA and embedding the search mechanism of TLBO in it. Its fast convergence and favourable computational complexity compared with several existing algorithms make TLBO an exceptional search algorithm, so including TLBO adds flexibility to SSA and improves its exploration and exploitation abilities. The detailed framework of HSSATLBO is presented in Fig. 1. The first step is to initialize the parameters of both SSA and TLBO and to generate a random population representing a set of salp positions. The fitness of each solution is then computed and the best one is determined. Next, the positions of the leader and the followers are updated with either the SSA or the TLBO search technique, depending on the probabilistic selection parameter PSP (Section 3.1), which controls the probability of selecting each strategy: if a random number in [0,1] is less than PSP, SSA is used; otherwise, TLBO is used to update the current salp position.
The fitness of the current population is then evaluated, the current best solution is compared with the previous best, and the best solution is updated accordingly. This procedure continues until the stopping criterion, a maximum number of iterations, is satisfied.
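The procedure above can be sketched as a single loop. This is an illustration under several assumptions, not the authors' implementation: minimisation, a linear PSP schedule, the standard SSA leader and follower equations with a 0.5 sign threshold, a TLBO step made of one teacher move and one learner move with greedy acceptance, and clipping to the bounds. The function `hssatlbo` and its parameters are hypothetical; the usage lines run it on the sphere function.

```python
import math
import random


def hssatlbo(f, lb, ub, n_pop=20, max_iter=200, psp_min=0.3, psp_max=0.9, seed=0):
    """Illustrative HSSATLBO-style loop for minimisation; returns (best_x, best_f)."""
    rng = random.Random(seed)
    d = len(lb)

    def clip(x):  # assumed boundary handling: reset to the violated bound
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]

    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lb, ub)] for _ in range(n_pop)]
    fit = [f(x) for x in pop]

    def try_replace(i, cand):  # greedy acceptance used by the TLBO moves
        cand = clip(cand)
        fc = f(cand)
        if fc < fit[i]:
            pop[i], fit[i] = cand, fc

    best = min(range(n_pop), key=fit.__getitem__)
    food, food_fit = list(pop[best]), fit[best]  # best-so-far solution (food source)

    for it in range(1, max_iter + 1):
        psp = psp_max - (psp_max - psp_min) * it / max_iter  # assumed linear PSP
        d1 = 2.0 * math.exp(-((4.0 * it / max_iter) ** 2))  # SSA coefficient D1
        mean = [sum(x[j] for x in pop) / n_pop for j in range(d)]
        teacher = pop[min(range(n_pop), key=fit.__getitem__)]
        for i in range(n_pop):
            if rng.random() < psp:  # SSA move, applied unconditionally
                if i == 0:  # leader explores around the food source
                    cand = []
                    for j in range(d):
                        step = d1 * ((ub[j] - lb[j]) * rng.random() + lb[j])
                        cand.append(food[j] + step if rng.random() >= 0.5 else food[j] - step)
                else:  # follower moves to the midpoint with the salp ahead
                    cand = [(a + b) / 2.0 for a, b in zip(pop[i], pop[i - 1])]
                pop[i] = clip(cand)
                fit[i] = f(pop[i])
            else:  # TLBO move: teacher step, then learner step
                tf = rng.randint(1, 2)
                try_replace(i, [x + rng.random() * (t - tf * m)
                                for x, t, m in zip(pop[i], teacher, mean)])
                k = rng.choice([q for q in range(n_pop) if q != i])
                sign = 1.0 if fit[k] < fit[i] else -1.0  # towards better, away from worse
                try_replace(i, [a + sign * rng.random() * (b - a)
                                for a, b in zip(pop[i], pop[k])])
            if fit[i] < food_fit:
                food, food_fit = list(pop[i]), fit[i]
    return food, food_fit


def sphere(x):
    return sum(v * v for v in x)


best_x, best_f = hssatlbo(sphere, [-5.0] * 5, [5.0] * 5)
```

For RRAP, which is a constrained maximisation problem, f would typically be the negative system reliability plus a constraint-handling term. The source does not specify which constraint-handling scheme is used.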

3.3 Exploration and exploitation measurement

In this study, an in-depth empirical analysis examines the search behaviour of HSSATLBO in terms of diversity, which makes it possible to measure the algorithm's explorative and exploitative capabilities. In the exploration phase, the differences between the values of each dimension within the population grow, so swarm individuals are scattered across the search space. In the exploitation phase, these differences shrink and swarm individuals cluster in a dense area. Both phases are present in every MHA. Exploration improves the chance of finding the globally optimal location by visiting unseen neighbourhoods of the search space, whereas exploitation allows an algorithm to converge to a neighbourhood with a high probability of containing the global optimum. Balancing these two abilities is a trade-off problem. The exploration and exploitation concept is illustrated in Fig. 2. Following Hussain [37], population diversity is measured using equations (22) and (23):

where \(x_{j}^{i,t}\) denotes the jth dimension of the ith swarm individual in a population of size Np at iteration t, and \(med({x_{j}^{t}})\) is the median of dimension j. \(Di{v_{j}^{t}}\) and Divt denote the diversity in the jth dimension and the average diversity over all dimensions, respectively. Once the population diversity Divt has been determined for all iterations, the exploration and exploitation percentages during the search can be calculated using equations (24) and (25), respectively:

where Expl(%) and Expt(%) denote the exploration and exploitation percentages for iteration t, and \(Div_{max}^{t}\) is the maximum population diversity over all iterations (T). The MATLAB code for measuring population diversity and exploration-exploitation in MHAs is publicly available at https://github.com/usitsoft/Exploration-Exploitation-Measurement.
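A Python sketch of equations (22) to (25), assuming the forms used by Hussain et al. [37]: \(Div_j^t = \frac{1}{N_p}\sum_i |med(x_j^t) - x_j^{i,t}|\), \(Div^t = \frac{1}{D}\sum_j Div_j^t\), \(Expl = 100\,Div^t/Div_{max}\) and \(Expt = 100\,|Div^t - Div_{max}|/Div_{max}\). This is not the linked MATLAB code, and the function names are hypothetical.

```python
import statistics


def diversity(pop):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n, d = len(pop), len(pop[0])
    div_j = []
    for j in range(d):
        column = [x[j] for x in pop]
        med = statistics.median(column)
        div_j.append(sum(abs(med - v) for v in column) / n)
    return sum(div_j) / d


def exploration_exploitation(div_history):
    """Exploration and exploitation percentages relative to the maximum diversity."""
    div_max = max(div_history)
    expl = [100.0 * dv / div_max for dv in div_history]
    expt = [100.0 * abs(dv - div_max) / div_max for dv in div_history]
    return expl, expt


expl, expt = exploration_exploitation([2.0, 1.0, 0.5])
```

Because every \(Div^t \le Div_{max}\), the two percentages sum to 100 at each iteration.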

4 Problem formulation

4.1 Notations

| Symbol | Description |
|---|---|
| n | \(=(n_{1},n_{2},\dots,n_{m})\), the redundancy allocation vector for the system |
| m | number of subsystems |
| \(n_{i}\) | number of components in subsystem i |
| \({n_{i}}^{max}\) | maximum number of components in subsystem i |
| \(r_{i}\) | component reliability in subsystem i |
| b | vector of resource limitations |
| \(c_{i}\) | component cost in subsystem i |
| \(v_{i}\) | component volume in subsystem i |
| \(w_{i}\) | component weight in subsystem i |
| \(R_{i}\) | \(= 1-(1-r_{i})^{n_{i}}\), the reliability of the ith subsystem |
| C | upper limit of the system's cost |
| V | upper limit of the system's volume |
| W | upper limit of the system's weight |
| \(R_{S}\) | system reliability |
| \(g_{j}\) | the jth constraint function |

4.2 Reliability-redundancy allocation problem

The need for reliability analysis to evaluate the performance of products, equipment, and engineering systems is growing steadily. Reliability optimization can address this need and helps to obtain high-quality products and equipment that perform efficiently and safely over a given period. In this section, seven reliability optimization problems are described to examine the performance of HSSATLBO. The general form of the reliability redundancy allocation problem is

\(\max R_{S}(r,n)\ \text{subject to}\ g_{j}(r,n) \le b_{j},\ 0 \le r_{i} \le 1,\ 1 \le n_{i} \le {n_{i}}^{max},\ n_{i} \in \mathbb{Z}^{+}\)

The goal of the problem is to maximize system reliability by computing the number of redundant components ni and the components’ reliability ri in each subsystem.
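The subsystem reliability \(R_i = 1-(1-r_i)^{n_i}\) from the notation table, together with the feasibility conditions of a RRAP, can be checked with two short helpers. This sketch assumes that every resource constraint has the form \(g_j(r,n) \le b_j\) and is supplied as a callable; the helper names are hypothetical.

```python
def subsystem_reliability(r_i, n_i):
    """R_i = 1 - (1 - r_i)^n_i: n_i identical components of reliability r_i in parallel."""
    return 1.0 - (1.0 - r_i) ** n_i


def is_feasible(r, n, constraints, n_max):
    """Check 0 <= r_i <= 1, integer 1 <= n_i <= n_max_i, and g_j(r, n) <= b_j.

    constraints: list of (g_j, b_j) pairs, where g_j is a callable g_j(r, n).
    """
    if not all(0.0 <= ri <= 1.0 for ri in r):
        return False
    if not all(isinstance(ni, int) and 1 <= ni <= nm for ni, nm in zip(n, n_max)):
        return False
    return all(g(r, n) <= b for g, b in constraints)


# Hypothetical resource constraint: the total number of components is at most 5.
total_components = [(lambda r, n: sum(n), 5)]
ok = is_feasible([0.9, 0.8], [2, 3], total_components, [5, 5])
```

Adding a second component to a subsystem with r_i = 0.9 raises its reliability from 0.9 to 0.99, which is the benefit that redundancy trades against the resource constraints.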

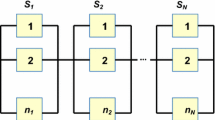

4.2.1 Series system [Fig. 3(a)] [6, 9, 23, 27, 31, 35, 36, 43, 81, 89, 91, 93]

The series system is a non-linear mixed-integer programming problem and the formulation is given as follows

where \(c_{i} = \alpha _{i} \left (\frac {-1000}{\ln (r_{i})}\right )^{\beta _{i}},\ i = 1,2,\dots,5\). The parameters αi and βi are physical features of the system components. Constraints g1(r,n), g2(r,n), and g3(r,n) are the volume, cost and weight constraints, respectively. The coefficients of the series system are given in the literature (Garg, 2015a) [23] and in Table 1.
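The series-system constraints are referred to here only by number. The sketch below evaluates the objective \(R_S = \prod_i R_i\) and the three constraint left-hand sides, assuming the forms commonly used for this benchmark: volume \(\sum_i w_i v_i^2 n_i^2\), cost \(\sum_i c_i\,(n_i + e^{n_i/4})\), and weight \(\sum_i w_i n_i e^{n_i/4}\). The Table 1 coefficients are not reproduced here, so the example values are hypothetical, and so are the function names.

```python
import math


def component_cost(r_i, alpha_i, beta_i):
    """c_i = alpha_i * (-1000 / ln r_i)^beta_i; grows steeply as r_i approaches 1."""
    return alpha_i * (-1000.0 / math.log(r_i)) ** beta_i


def series_system(r, n, alpha, beta, wv2, w):
    """Return (R_S, volume g1, cost g2, weight g3) under the assumed constraint forms.

    wv2[i] holds the product w_i * v_i^2; w[i] holds the component weight w_i.
    """
    rs = math.prod(1.0 - (1.0 - ri) ** ni for ri, ni in zip(r, n))
    g1 = sum(c * ni ** 2 for c, ni in zip(wv2, n))
    g2 = sum(component_cost(ri, al, be) * (ni + math.exp(ni / 4.0))
             for ri, ni, al, be in zip(r, n, alpha, beta))
    g3 = sum(wi * ni * math.exp(ni / 4.0) for wi, ni in zip(w, n))
    return rs, g1, g2, g3


# Hypothetical two-subsystem example (not the Table 1 coefficients).
rs, g1, g2, g3 = series_system([0.9, 0.9], [1, 2], [1e-5, 1e-5], [1.5, 1.5], [1, 2], [1, 1])
```

A candidate (r, n) is feasible when g1 ≤ V, g2 ≤ C and g3 ≤ W.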

4.2.2 Complex (bridge) system [Fig. 3(b)] [6, 9, 23, 25, 27, 31, 35, 36, 43, 63, 81, 89, 91, 93, 96, 97]

The complex (bridge) system consists of five subsystems, and its formulation is as follows:

\({\max \limits } R_{S}(r,n) = R_{1} R_{2}+R_{3} R_{4}+R_{1} R_{4} R_{5}+R_{2} R_{3} R_{5} -R_{1} R_{2} R_{3} R_{4}-R_{1} R_{2} R_{3} R_{5}-R_{1} R_{2} R_{4} R_{5}-R_{1} R_{3} R_{4} R_{5}-R_{2} R_{3} R_{4} R_{5}+2R_{1} R_{2} R_{3} R_{4} R_{5} \) subject to the same constraints given by Eqs. (27), (28), and (29), with \(0.5 \le r_{i} \le 1\), \(1 \le n_{i} \le 5\), \(n_{i} \in \mathbb{Z}^{+}\), \(i = 1,2,\dots,5\). The coefficients of the complex system are given in the literature (Garg, 2015a) [23] and in Table 1.
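Each positive second- and third-order term of the bridge expression corresponds to one minimal path set ({1,2}, {3,4}, {1,4,5}, {2,3,5}), so the closed form can be checked against brute-force enumeration of all 2^5 up/down states of the subsystems. The expansion needs all five fourth-order terms, including \(-R_{1}R_{3}R_{4}R_{5}\); if any one of them is dropped, \(R_{S}\) evaluates to 2 when every \(R_{i} = 1\). A Python sketch of the check follows; the path sets are read off the formula rather than from Fig. 3(b), and the function names are illustrative.

```python
from itertools import product
from typing import Sequence

# Minimal path sets, read off the four lowest-order positive terms of R_S.
MIN_PATHS = ({1, 2}, {3, 4}, {1, 4, 5}, {2, 3, 5})


def bridge_closed_form(R: Sequence[float]) -> float:
    """Closed-form bridge reliability for subsystem reliabilities R = (R1, ..., R5)."""
    R1, R2, R3, R4, R5 = R
    return (R1 * R2 + R3 * R4 + R1 * R4 * R5 + R2 * R3 * R5
            - R1 * R2 * R3 * R4 - R1 * R2 * R3 * R5 - R1 * R2 * R4 * R5
            - R1 * R3 * R4 * R5 - R2 * R3 * R4 * R5
            + 2 * R1 * R2 * R3 * R4 * R5)


def bridge_enumerated(R: Sequence[float]) -> float:
    """Sum the probabilities of all up/down states in which some minimal path works."""
    total = 0.0
    for state in product((False, True), repeat=5):
        working = {i + 1 for i, up in enumerate(state) if up}
        if any(path <= working for path in MIN_PATHS):
            prob = 1.0
            for R_i, up in zip(R, state):
                prob *= R_i if up else 1.0 - R_i
            total += prob
    return total


for R in [(0.9, 0.8, 0.7, 0.6, 0.5), (0.5,) * 5, (1.0,) * 5]:
    print(R, bridge_closed_form(R), bridge_enumerated(R))
```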

4.2.3 Series-Parallel System [Fig. 3(c)] [23, 25, 27, 31, 32, 35, 36, 40, 43, 51, 53, 63, 78, 79, 81, 89, 91]

The mathematical formulation is as follows:

\({\max \limits } R_{S}(r,n) =1-(1-R_{1} R_{2})[1-(1-(1-R_{3})(1-R_{4})) R_{5}]\) subject to the same constraints given by Eqs. (27), (28), and (29), with \(0.5 \le r_{i} \le 1\), \(1 \le n_{i} \le 5\), \(n_{i} \in \mathbb{Z}^{+}\), \(i = 1,2,\dots,5\). The coefficients of the series-parallel system are given in the literature (Garg, 2015a) [23] and in Table 1.
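Read structurally, this expression describes two branches in parallel: subsystems 1 and 2 in series, and the parallel pair {3, 4} in series with subsystem 5; the system fails only if both branches fail. The Python sketch below computes \(R_{S}\) branch by branch. The structural reading is inferred from the formula rather than from Fig. 3(c), and the function name is illustrative.

```python
from typing import Sequence


def series_parallel_reliability(R: Sequence[float]) -> float:
    """R_S = 1 - (1 - R1 R2) * [1 - (1 - (1 - R3)(1 - R4)) R5]."""
    R1, R2, R3, R4, R5 = R
    upper_branch = R1 * R2                               # subsystems 1 and 2 in series
    lower_branch = (1.0 - (1.0 - R3) * (1.0 - R4)) * R5  # (3 parallel 4) in series with 5
    return 1.0 - (1.0 - upper_branch) * (1.0 - lower_branch)  # branches in parallel


print(series_parallel_reliability((0.5,) * 5))
```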

4.2.4 Overspeed protection system for a gas turbine [Fig. 3(d)] [6, 19, 23, 24, 35, 36, 43, 51, 53, 63, 81, 93, 98]

This reliability problem is formulated as follows:

where \(c_{i} = \alpha _{i} \left (\frac {-1000}{\ln(r_{i})}\right )^{\beta _{i}}, \ \ i = 1,2,...,4. \) The coefficients of the overspeed protection system are given in the literature (Garg, 2015a) [23] and in Table 1.

4.2.5 Convex quadratic reliability problem [28, 50, 63, 91]

The mathematical formulation of this problem is as follows:

Here \(n_{i} \in [1,6]\), \(i = 1,2,\dots,10\), and \(j = 1,2,3,4\). The parameters \(r_{i}\), \(a_{ji}\), and \(C_{ji}\) are drawn from uniform distributions on [0.80, 0.99], [0, 10], and [0, 10], respectively. A randomly generated set of values of these coefficients is as follows:

\(a = \left (\begin {array}{cccccccccc} 2 & 7 & 3 & 0 & 5 & 6 & 9 & 4 & 8 & 1 \\ 4 & 9 & 2 & 7 & 1 & 0 & 8 & 3 & 5 & 6 \\ 5 & 1 & 7 & 4 & 3 & 6 & 0 & 9 & 8 & 2 \\ 8 & 3 & 5 & 6 & 9 & 7 & 2 & 4 & 0 & 1 \end {array} \right )\)

\(C = \left (\begin {array}{cccccccccc} 7 & 1 & 4 & 6 & 8 & 2 & 5 & 9 & 3 & 3 \\ 4 & 6 & 5 & 7 & 2 & 6 & 9 & 1 & 0 & 8 \\ 1 & 10 & 3 & 5 & 4 & 7 & 8 & 9 & 4 & 6 \\ 2 & 3 & 2 & 5 & 7 & 8 & 6 & 10 & 9 & 1 \end {array} \right )\)
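The coefficient-generation step described above can be reproduced with Python's standard `random` module. This sketch is hypothetical: the function name, the seed, and the `integer` option are illustrative. It will not regenerate the particular matrices listed above, which should be used as given when comparing results. Because those matrices contain only integers, the `integer=True` option draws from {0, 1, ..., 10}; that is a guess about how they were produced, not something the text states.

```python
import random
from typing import List, Optional, Tuple


def random_convex_instance(m: int = 10, k: int = 4, seed: Optional[int] = None,
                           integer: bool = False
                           ) -> Tuple[List[float], List[List[float]], List[List[float]]]:
    """Draw r_i ~ U[0.80, 0.99] and a_ji, C_ji ~ U[0, 10] for i = 1..m, j = 1..k.

    With integer=True the coefficient matrices are drawn from {0, 1, ..., 10}
    instead (an assumption; the published matrices contain only integers).
    """
    rng = random.Random(seed)

    def coefficient() -> float:
        return float(rng.randint(0, 10)) if integer else rng.uniform(0.0, 10.0)

    r = [rng.uniform(0.80, 0.99) for _ in range(m)]
    a = [[coefficient() for _ in range(m)] for _ in range(k)]
    C = [[coefficient() for _ in range(m)] for _ in range(k)]
    return r, a, C


r, a, C = random_convex_instance(seed=2022)
print(len(r), len(a), len(a[0]))  # 10 subsystems, 4 constraint rows of 10 entries
```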

4.2.6 Mixed series-parallel system [28, 50, 63, 91]

The mathematical formulation of this problem is as follows:

The coefficients of the mixed series-parallel system are taken from the literature (Gen et al., 1999) [26] and are listed in Table 2.

4.2.7 Large-scale system reliability problem [27, 28, 63, 78, 79, 81, 97]

The mathematical formulation of this problem is as follows:

Here, \(l_{i}\) denotes the lower bound of \(n_{i}\), and the parameter \(\theta\) is the tolerance, set to 33%, by which each available resource exceeds its minimum requirement. The minimum requirement of resource j for a reliability system with m subsystems is \({\sum }_{i=1}^{m} g_{ji}(l_{i})\), \(j=1,\dots,4\), and the corresponding available resource is \(b_{j}=\left (1+\frac {\theta }{100}\right ){\sum }_{i=1}^{m} g_{ji}(l_{i})\). In this way, the available system resources (Zou et al., 2010) [97] are set for reliability systems with 36, 38, 40, 42, and 50 subsystems, as shown in Tables 3 and 4.
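Computing \(b_{j}\) is a one-line aggregation: sum the minimum requirement of resource j over the m subsystems at their lower bounds, then inflate the total by θ percent. The Python sketch below assumes the values \(g_{ji}(l_{i})\) have already been evaluated; the function name and input numbers are hypothetical and are not those behind Tables 3 and 4.

```python
from typing import List, Sequence


def available_resources(min_requirements: Sequence[Sequence[float]],
                        theta: float = 33.0) -> List[float]:
    """b_j = (1 + theta / 100) * sum_i g_ji(l_i) for every resource j.

    min_requirements[j][i] holds g_ji(l_i): the amount of resource j used by
    subsystem i at its lower bound l_i. theta is the tolerance in percent.
    """
    return [(1.0 + theta / 100.0) * sum(row) for row in min_requirements]


# Hypothetical minimum requirements for 2 resources and 3 subsystems.
print(available_resources([[1.0, 2.0, 3.0], [10.0, 0.0, 5.0]]))
```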

5 Results & discussions

In this section, we present the results obtained by the proposed HSSATLBO algorithm for all of the above-mentioned reliability optimization problems. The section is divided into six parts. Section 5.1 introduces the experimental settings, including the parameter settings and the maximum possible improvement (MPI). Section 5.2 describes the results obtained by the proposed algorithm and compares its performance with the existing approaches listed in Table 5. The performance comparisons between HSSATLBO, SSA, and variants of SSA are presented in Section 5.3. A sensitivity analysis of the parameter PSP is performed in Section 5.4. The population diversity and the exploration-exploitation behaviour of HSSATLBO, SSA, and the SSA variants are described in Section 5.5. Finally, the statistical analysis of the proposed algorithm and all compared algorithms is given in Section 5.6.

5.1 Experiment settings

5.1.1 Parameter settings

The proposed algorithm is implemented in MATLAB R2015a on a laptop with an AMD Ryzen 3 2200U processor with Radeon Vega Mobile Gfx (2.50 GHz) and 4.00 GB of RAM, running Windows 10. The population size of ABC, NNA, TLBO, SSA, HHO, SMA, SCA, and HSSATLBO was set to 100, and the algorithm-specific parameters of the compared algorithms were set as follows: ABC (maximum number of trials, limit = 100), NNA (modification factor, β = 1), TLBO (teaching factor, TF = 1 or 2), HHO (β = 1.5), SMA (control parameter, z = 0.03), and SCA (parameter a = 2). Because of the stochastic nature of metaheuristic algorithms, the results of a single run may be unreliable. Therefore, 30 independent runs of ABC, NNA, TLBO, SSA, HHO, SMA, SCA, and HSSATLBO were performed on every reliability optimization problem, with a maximum of 300 iterations per run for each algorithm.

5.1.2 Maximum possible improvement (MPI)

For each reliability optimization problem, the system reliability is maximized by computing both the component reliability \(r_{i}\) and the number of redundant components \(n_{i}\) for each subsystem. During the computation, the numbers of redundant components \(n_{i}\) are first treated as real variables; after the optimization process is complete, these real values are rounded to their nearest integers. In this study, the maximum possible improvement (MPI) index is used to evaluate the performance of HSSATLBO; it is expressed as

\( \text{MPI}(\%) = \frac{R_{S}(\text{HSSATLBO}) - R_{S}(\text{Others})}{1 - R_{S}(\text{Others})} \times 100 \qquad (45) \)

where \(R_{S}(\text{HSSATLBO})\) denotes the best solution obtained by the proposed algorithm and \(R_{S}(\text{Others})\) the best result obtained by another compared approach; a greater MPI indicates a greater improvement.
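The rounding step and the MPI index can both be sketched in a few lines of Python. The MPI function computes 100·(R_S(HSSATLBO) − R_S(Others))/(1 − R_S(Others)), i.e. the share of the compared solution's remaining unreliability that the proposed solution removes. The rounding helper is an assumption about how "nearest integer" is applied: halves are rounded up and the result is clipped to the problem's bounds on \(n_{i}\) (Python's built-in `round()` would instead round halves to even). Both function names are illustrative.

```python
import math


def decode_redundancy(x: float, lower: int, upper: int) -> int:
    """Round a real-valued n_i to the nearest integer (halves up), then clip to [lower, upper]."""
    return min(max(math.floor(x + 0.5), lower), upper)


def mpi_percent(rs_proposed: float, rs_other: float) -> float:
    """MPI(%) = 100 * (R_S(proposed) - R_S(other)) / (1 - R_S(other))."""
    if rs_other >= 1.0:
        raise ValueError("MPI is undefined when the compared reliability equals 1")
    return 100.0 * (rs_proposed - rs_other) / (1.0 - rs_other)


print([decode_redundancy(x, 1, 5) for x in (0.3, 2.5, 3.49, 7.2)])  # [1, 3, 3, 5]
print(mpi_percent(0.93168238710, 0.931682))
```

With the series-system value 0.93168238710 and a hypothetical competitor value of 0.931682, the index comes out at about 5.666E-04%.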

5.2 HSSATLBO comparison with existing optimizers

This section evaluates the performance of the proposed HSSATLBO in terms of the best solution and the maximum possible improvement. The results obtained by the proposed algorithm are compared with those of other existing optimizers, whose results are taken from their respective papers. The comparative results for the reliability problems are presented in Tables 6–11. For the series system (4.2.1), Table 6 shows that the best solution obtained by the proposed method is 0.93168238710, which is better than the results of all compared algorithms SCA (Gen & Yun, 2006), SAA (Kim et al., 2006), GA (Yokota et al., 1996), IA (Hsieh & You, 2011), ABC1 (Yeh & Hsieh, 2011), IPSO (Wu et al., 2011), CS2 (Garg, 2015a), PSO (Huang, 2015), NAFSA (He et al., 2015), SSO (Huang, 2015), PSSO (Huang, 2015), MICA (Afonso et al., 2013), GA-SRS (Ardakan & Hamadani, 2014), and LXPM-IPSO-GA (Zhang et al., 2013), with improvements of 3.4940E-03%, 4.6533E-01%, 3.2446E-01%, 6.8943E-05%, 5.6662E-04%, 3.4940E-03%, 4.1061E-04%, 3.8727E+01%, 1.7353E-04%, 2.6336E-01%, 1.3157E-04%, 4.3868E-03%, 3.6664E+00%, and 3.9668E-05%, respectively.

Table 7 shows that the best solution for the complex system (4.2.2) produced by HSSATLBO is 0.9998896373815054, which is better than the best results of the other compared algorithms, with notable improvements of 4.7596E+01%, 1.7777E+00%, 8.6705E+00%, 2.5927E-01%, 6.6414E+01%, 9.1343E-01%, and 2.5695E+00% over the results of SCA (Gen & Yun, 2006), SAA (Kim et al., 2006), GA (Yokota et al., 1996), IA (Hsieh & You, 2011), PSO/SSO (Huang, 2015), and GA-SRS (Ardakan & Hamadani, 2014), respectively.

Table 8 shows that the best result for the series-parallel system (4.2.3) obtained by the proposed method is 0.9999863373757, which is better than the results of SCA (Gen & Yun, 2006), SAA (Kim et al., 2006), GA (Yokota et al., 1996), IA (Hsieh & You, 2011), ABC1 (Yeh & Hsieh, 2011), IPSO (Wu et al., 2011), CPSO (He & Wang, 2007a), CS1 (Valian & Valian, 2013), ICS (Valian et al., 2013), CS-GA (Kanagaraj et al., 2013), ABC2 (Garg et al., 2013), TS-DE (Liu & Qin, 2014), INGHS (Ouyang et al., 2015), CS2 (Garg, 2015a), MPSO (Liu & Qin, 2015), EBBO (Garg, 2015b), PSO (Huang, 2015), PSFSA (Mellal & Zio, 2016), NAFSA (He et al., 2015), PSSO (Huang, 2015), SSO (Huang, 2015), DE (Liu & Qin, 2015), and IABC (Ghambari & Rahati, 2018), with improvements of 4.7085E+01%, 4.1488E+01%, 5.6280E+01%, 4.2327E+01%, 4.1490E+01%, 3.9786E+01%, 4.1513E+01%, 4.1490E+01%, 4.1490E+01%, 4.1488E+01%, 4.1490E+01%, 4.1490E+01%, 4.1490E+01%, 4.1491E+01%, 4.1490E+01%, 4.1490E+01%, 9.0348E+01%, 4.1490E+01%, 4.1493E+01%, 4.1491E+01%, 4.1687E+01%, 4.1490E+01%, and 3.2264E+01%, respectively. Moreover, the optimal redundancy allocation found by HSSATLBO for this series-parallel system is (3,2,2,2,4), which is completely different from those of the other approaches.

As Table 9 shows, the best solution achieved by HSSATLBO for the overspeed protection system (4.2.4) is 0.99995467466432, and the proposed algorithm dominates 15 competitive algorithms in terms of the best solution found so far. Table 9 also shows improvement indices of 1.759E+03%, 2.421E-02%, 1.029E+00%, 1.029E+00%, 1.240E-02%, 8.038E-02%, 1.750E-02%, and 1.419E-02% over the results of SAA (Kim et al., 2006), IPSO (Wu et al., 2011), NMDE (Zou et al., 2011), PSO (Huang, 2015), PSSO (Huang, 2015), SSO (Huang, 2015), DE (Liu & Qin, 2015), and GA-PSO (Duan et al., 2010), respectively. Table 10 indicates that HSSATLBO performs the same as or better than the other existing algorithms in the literature on the convex quadratic reliability problem (4.2.5) and the mixed series-parallel system (4.2.6) in terms of best results. Table 11 reports the results for problem (4.2.7). HSSATLBO gives equal or better best objective function values than the other algorithms for the large-scale problems of dimensions 36, 38, 42, and 50; for dimension 40, however, its best objective value is weaker than those of two existing algorithms, INGHS and IABC.

To show the convergence performance of the proposed algorithm relative to existing algorithms such as ABC, NNA, TLBO, and SSA, the best solution found so far is plotted against the iteration number for each problem in Fig. 4. These curves show that HSSATLBO converges faster than the other algorithms.

5.3 HSSATLBO comparison with other variants of SSA

This section compares the results of the conventional SSA, the proposed HSSATLBO, and three SSA variants, namely Levy flight based SSA (LSSA), Cauchy salp swarm algorithm (CSSA), and Gaussian salp swarm algorithm (GSSA). The results are presented in Tables 12–16 in terms of the best obtained value and the MPI. Table 12 shows that the best solution obtained by HSSATLBO for the series system (4.2.1) is better than those of the original SSA and the three variants LSSA, CSSA, and GSSA, with improvements of 1.2529E-07%, 2.7509E-06%, 1.2566E-06%, and 6.8942E-07%, respectively. Table 13 shows that the best solution achieved by HSSATLBO for the complex system (4.2.2) is better than those of SSA, LSSA, CSSA, and GSSA, with improvements of 2.6556E-03%, 2.3872E-03%, 3.2317E-04%, and 1.1439E-05%, respectively. For the series-parallel system (4.2.3) and the overspeed protection system (4.2.4), Tables 14 and 15 show that HSSATLBO outperforms all compared algorithms in both the best value and the MPI. Table 16 shows that HSSATLBO obtains the same or better best values than the compared algorithms on both the convex system (4.2.5) and the mixed series-parallel system (4.2.6).

The convergence graphs of HSSATLBO are also compared with those of LSSA, CSSA, and GSSA for problems 4.2.1 to 4.2.6 in Fig. 5. These graphs show that, as the number of iterations increases, HSSATLBO also performs better than these existing algorithms.

5.4 Parameter sensitivity analysis

In this section, a parameter sensitivity analysis is performed to evaluate the impact of the probabilistic parameter PSP on the proposed algorithm. With all other settings unchanged, different values of PSP are tested on the reliability problems (4.2.1–4.2.6), and the results are presented in Table 17. PSP1 denotes the setting PSPmin = 0.05 and PSPmax = 0.95, i.e., PSP lies in [0.05, 0.95]. Similarly, PSP2, PSP3, PSP4, and PSP5 correspond to [0.1, 0.9], [0.2, 0.9], [0.3, 0.9], and [0.4, 0.9], respectively. Table 17 gives the mean values obtained by HSSATLBO and the corresponding ranking for each case. Ranked by mean value, the settings are ordered PSP4, PSP5, PSP3, PSP2, and PSP1; that is, the proposed algorithm performs best when PSP lies in [0.3, 0.9] (PSP4). Researchers may choose different PSP values for other sets of problems. Figure 6 visualizes the ranking of each case of HSSATLBO on the reliability optimization problems.

5.5 Diversity and exploration-exploitation analysis

For a more in-depth performance analysis, Table 18 reports the population diversity and the exploration-exploitation measurements of HSSATLBO, SSA, and the SSA variants (LSSA, CSSA, and GSSA) on the reliability optimization problems. Fig. 7 compares the diversity of HSSATLBO with that of the SSA variants, and Fig. 8 shows the exploration and exploitation phases of the proposed algorithm. According to Table 18, the proposed hybrid method maintains lower population diversity than SSA, LSSA, CSSA, and GSSA on all the reliability problems. For example, on the series system (4.2.1), the population diversity of HSSATLBO is 0.12618, lower than the values 1.17742, 0.64185, 0.65301, and 0.65110 of SSA, LSSA, CSSA, and GSSA, respectively. Similarly, on all other problems (4.2.2–4.2.6), the diversity of HSSATLBO remains lower than that of the original SSA and its variants. Table 18 also shows that HSSATLBO generally keeps its exploration percentage below its exploitation percentage on the reliability problems. For instance, its exploitation percentages are 81%, 88%, 72%, 85%, 67%, and 65% for the series, complex, series-parallel, overspeed, convex, and mixed series-parallel systems, respectively, which are higher than those recorded for the compared algorithms.
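This section does not restate how diversity and the exploration/exploitation percentages are computed. A commonly used definition, and the one assumed in the Python sketch below, is the dimension-wise measure of Hussain et al. (2019) from the reference list: the mean absolute deviation of each dimension from its median, averaged over all dimensions, with XPL% = 100·Div/Div_max and XPT% = 100·|Div − Div_max|/Div_max, where Div_max is the largest diversity observed during the run. The function names are illustrative.

```python
from statistics import median
from typing import List, Sequence, Tuple


def population_diversity(population: Sequence[Sequence[float]]) -> float:
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n = len(population)
    dims = len(population[0])
    total = 0.0
    for j in range(dims):
        column = [agent[j] for agent in population]
        med = median(column)
        total += sum(abs(med - x) for x in column) / n
    return total / dims


def exploration_exploitation(diversity_history: Sequence[float]) -> List[Tuple[float, float]]:
    """Per-iteration (XPL%, XPT%) relative to the largest diversity seen in the run."""
    div_max = max(diversity_history)
    if div_max == 0:
        raise ValueError("diversity is zero throughout; percentages are undefined")
    return [(100.0 * d / div_max, 100.0 * abs(d - div_max) / div_max)
            for d in diversity_history]


print(population_diversity([[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]))
print(exploration_exploitation([2.0, 1.0, 0.5]))
```

Averaging the per-iteration percentages over a run gives single summary figures of the kind reported in Table 18, although how the paper aggregated its values is not stated here.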

5.6 Statistical analysis

To analyze whether the results obtained by the proposed HSSATLBO algorithm are statistically significant, we consider the following quality indices.

5.6.1 The statistical results by Value-based method and tied ranking

Here, the solution quality is described in terms of the mean value and the standard deviation (SD). A better mean value indicates stronger global optimization capability, and a lower SD indicates greater stability. In addition, the tied rank (TR) (Rakhshani & Rahati, 2017) [64] is used to compare the performance of the considered methods intuitively. The algorithm with the best mean value is assigned rank 1, the second-best rank 2, and so on; algorithms with the same result share the average of their ranks, and a smaller rank indicates a better algorithm. Based on these two quality measures, the statistical results of HSSATLBO, the other existing algorithms (ABC, NNA, TLBO, SSA, HHO, SMA, and SCA), and the three SSA variants (LSSA, CSSA, and GSSA) are computed and summarized in Tables 19 and 20. These tables report the mean, SD, and median of the best fitness value over the 30 independent runs of each algorithm. They show that the proposed algorithm ranks first on all of the benchmark problems, which indicates its stability and convergence. Based on their achievement, the algorithms are ranked in the following order:

i. HSSATLBO, TLBO, SMA, SSA, NNA, ABC, HHO, and SCA.

ii. HSSATLBO, GSSA, LSSA, CSSA, and SSA.

The ranking order in Table 19 indicates that TLBO is strongly competitive and is second-best on all test problems except the overspeed protection system. In Table 20, the Gaussian variant of SSA (GSSA) occupies the second-best position in most cases, except for the series-parallel system and the mixed series-parallel system. It can therefore be argued that HSSATLBO is an efficient and effective method for solving the reliability optimization problems considered here.
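The tied-ranking rule described above (rank 1 for the best mean, averaged ranks for ties) can be sketched in Python as follows. Because the objective is system reliability, the sketch assumes by default that a higher mean is better; the function name and example values are illustrative.

```python
from typing import List, Sequence


def tied_ranks(scores: Sequence[float], higher_is_better: bool = True) -> List[float]:
    """Rank algorithms by score (rank 1 = best); tied scores share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=higher_is_better)
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next algorithm has exactly the same score.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        shared = (pos + end) / 2.0 + 1.0  # mean of the 1-based positions pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


# Hypothetical mean reliabilities of three algorithms on one problem.
print(tied_ranks([0.90, 0.95, 0.90]))  # [2.5, 1.0, 2.5]
```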

Beyond this analysis, the Wilcoxon signed-rank test is performed to check the statistical significance of the results obtained by the proposed algorithm.

5.6.2 The results analysis by Wilcoxon signed-rank test

This statistical test [17] is used to compare the performance of the proposed HSSATLBO with that of the other algorithms. Compared with the t-test, it has several advantages: (1) it does not assume that the tested samples are normally distributed, and (2) it is less affected by outliers than the t-test. These advantages make it a safer test for comparing two algorithms (Mafarja et al., 2018 [55]; Sun et al., 2018 [74]; Yi et al., 2019 [90]). The Wilcoxon signed-rank test is performed with a significance level α = 0.05, and the results are shown in Tables 21 and 22. In these tables, “H” is set to “1” if there is a significant difference between HSSATLBO and the compared algorithm and to “0” if there is no significant difference. The sign “S” is “+” if the proposed algorithm is superior to the compared algorithm and “−” if HSSATLBO is inferior to it. The proposed HSSATLBO dominates all compared algorithms on all reliability problems. From this analysis, we conclude that HSSATLBO obtains better solutions than the compared algorithms, which indicates that it has better global optimization capability on these problems.
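The H/S decision described above can be sketched in pure Python as a two-sided Wilcoxon signed-rank test on paired runs, using the normal approximation with a tie correction and dropping zero differences (Wilcoxon's original convention). This is an illustrative implementation, not the paper's code; tools such as MATLAB's `signrank` or SciPy's `scipy.stats.wilcoxon` can use exact p-values for small samples, so p-values may differ slightly. Function names and the sample data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _average_ranks(values: Sequence[float]) -> Tuple[List[float], List[int]]:
    """Ascending 1-based ranks with ties averaged, plus the size of each tie group."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes: List[int] = []
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for k in range(pos, end + 1):
            ranks[order[k]] = (pos + end) / 2.0 + 1.0
        tie_sizes.append(end - pos + 1)
        pos = end + 1
    return ranks, tie_sizes


def wilcoxon_compare(proposed: Sequence[float], other: Sequence[float],
                     alpha: float = 0.05) -> Tuple[int, str, float]:
    """Two-sided Wilcoxon signed-rank test on paired runs (normal approximation).

    Returns (H, S, p): H = 1 if p < alpha, else 0; S = '+' if `proposed` tends to
    give larger (better, for maximisation) values, '-' if smaller, and '=' if
    there are no non-zero differences.
    """
    diffs = [a - b for a, b in zip(proposed, other) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 0, "=", 1.0
    ranks, tie_sizes = _average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4.0
    var = n * (n + 1) * (2 * n + 1) / 24.0 - sum(t ** 3 - t for t in tie_sizes) / 48.0
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value from N(0, 1)
    h = 1 if p < alpha else 0
    s = "+" if w_plus > w_minus else ("-" if w_plus < w_minus else "=")
    return h, s, p


proposed = [0.95 + 0.0001 * i for i in range(30)]  # hypothetical run results
other = [0.90 + 0.00005 * i for i in range(30)]
print(wilcoxon_compare(proposed, other))  # H = 1 and S = '+' for these samples
```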

5.6.3 Kruskal-Wallis and multiple comparison test

The multiple comparison test (MCT) is performed here to determine whether the proposed HSSATLBO algorithm is better than the other optimizers (SSA, NNA, TLBO, ABC, HHO, SMA, and SCA) and the SSA variants (LSSA, CSSA, and GSSA). For this purpose, a non-parametric Kruskal-Wallis test (KWT) is first applied to the best values obtained for each problem. This test examines the hypothesis that the independent samples come from the same distribution. The MCT is then used to identify which pairs of algorithms differ significantly, by performing pairwise multiple comparisons using one-way ANOVA. In this way, the results of HSSATLBO are compared with those of each compared algorithm. The results for the pairs of algorithms are summarized in Tables 23 and 24. In these tables, the first column gives the problem considered, and the second column gives the indices of the pair of samples compared. The third and fifth columns give the lower and upper bounds of the true mean difference between the two samples at the 5% significance level. The last column gives the p-value obtained by the KWT for the null hypothesis that all samples come from the same distribution.
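The Kruskal-Wallis statistic itself is straightforward to compute: pool all runs of all algorithms, rank them jointly with ties averaged, and compare the rank sums of the groups. The Python sketch below (illustrative name) returns the tie-corrected H. Under the null hypothesis, H is approximately chi-square distributed with (number of algorithms − 1) degrees of freedom, which is how MATLAB's `kruskalwallis` or SciPy's `scipy.stats.kruskal` obtain the p-value; the pairwise multiple-comparison step is not reproduced here.

```python
from typing import Sequence


def kruskal_wallis_h(*samples: Sequence[float]) -> float:
    """Kruskal-Wallis H statistic with the standard tie correction.

    H = 12 / (N (N + 1)) * sum_k R_k**2 / n_k - 3 (N + 1), divided by
    1 - sum(t**3 - t) / (N**3 - N) over tie groups of size t.
    """
    pooled = sorted((value, k) for k, sample in enumerate(samples) for value in sample)
    n_total = len(pooled)
    rank_sums = [0.0] * len(samples)
    tie_term = 0
    pos = 0
    while pos < n_total:
        end = pos
        while end + 1 < n_total and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        avg_rank = (pos + end) / 2.0 + 1.0  # average of 1-based ranks pos+1 .. end+1
        for idx in range(pos, end + 1):
            rank_sums[pooled[idx][1]] += avg_rank
        t = end - pos + 1
        tie_term += t ** 3 - t
        pos = end + 1
    if tie_term == n_total ** 3 - n_total:
        raise ValueError("all observations are identical; H is undefined")
    h = 12.0 / (n_total * (n_total + 1)) * sum(
        rs ** 2 / len(s) for rs, s in zip(rank_sums, samples)) - 3.0 * (n_total + 1)
    return h / (1.0 - tie_term / (n_total ** 3 - n_total))


print(kruskal_wallis_h([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))
```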

The box plots and MCT graphs for problems (4.2.1–4.2.6) are shown in Figs. 9 and 10. In each figure, the left graph shows boxes whose edges and central line mark the 1st, 2nd (median), and 3rd quartiles, while the vertical whisker lines extending from the boxes show the spread of the remaining values. The right graph shows the MCT, which compares the algorithms pairwise and indicates which differences are significant. The blue line represents the results of the proposed HSSATLBO, and red lines indicate the algorithms (HHO, SMA, SCA, ABC, NNA, TLBO, and SSA, or LSSA, CSSA, GSSA, and SSA) whose results differ significantly from those of HSSATLBO. For example, for the series system (4.2.1), Fig. 9 shows that all existing algorithms (HHO, SMA, SCA, ABC, NNA, TLBO, and SSA) differ significantly from HSSATLBO. The vertical black lines on either side of the HSSATLBO interval (shown in blue) mark the comparison interval used to judge whether another method differs significantly from it. From this analysis and the results in Figs. 9–10 and Tables 23–24, we conclude that the performance of the proposed algorithm differs significantly from that of the other algorithms, and HSSATLBO provides the best results.

6 Conclusions & Future work

To solve reliability-redundancy allocation problems (RRAP) with nonlinear resource constraints, this paper introduces a hybrid algorithm, HSSATLBO, that combines the SSA and TLBO algorithms. SSA has been successfully applied to various complex optimization problems because of its simple structure and good performance. However, although SSA explores well, it lacks exploitation, which slows convergence and reduces optimization accuracy. To address these issues, the basic structure of SSA has been modified by embedding features of TLBO. In this context, a probabilistic selection strategy is defined that determines whether the basic SSA or TLBO is applied to construct a new solution. To demonstrate the application of HSSATLBO, several benchmark problems in reliability optimization have been considered; all of them involve mixed discrete (integer) and continuous variables. The core idea of HSSATLBO is to make full use of the good global search ability of SSA and the fast convergence of TLBO to maximize system reliability through the choices of redundancy and component reliability. The results of the proposed algorithm have been compared with those of a number of existing algorithms, and the comparison shows that it performs well. In addition, the best, mean, median, and SD values reported for the problems show that the proposed algorithm obtains better results with lower SD and is therefore reliable. To account for the stochastic nature of the algorithm, several statistical tests, namely a ranking test and the Wilcoxon signed-rank test, have also been performed for each problem.
All of the above discussions and evaluations indicate that the proposed algorithm is a competitive approach: it not only performs well but also has better global optimization capability than the compared algorithms on the reliability problems.

In future work, the proposed algorithm could be applied to other problems, such as airline recovery problems, integrated aircraft and passenger recovery problems, and flight perturbation problems. Flight irregularity is a well-known and widespread problem all over the world that seriously affects the performance of airlines. Over recent decades, various models for the aircraft recovery problem have been proposed that aim to optimize recovery under different conditions. Airlines try to find the best schedules consistent with their various objectives, namely minimizing the number of disrupted passengers and the total number of aircraft that must recover from the disruption, reducing the number of irrecoverable flights, and minimizing the cost of disrupted passengers, with the ultimate goal of maximizing overall profit (Andersson, 2006 [5]; Arıkan et al., 2016 [10]). Analysts suggest that it is better to consider these recovery problems jointly, with all essential constraints, instead of considering only one type of recovery. Real situations with erratic disruptions require more sensible solutions that can absorb changes better. Based on our study of disruption management, the proposed algorithm may be considered in further research on this problem. As this is an active research area, researchers may extend the models to handle more complex variants of the combined recovery problem.

References

Abasi AK, Khader AT, Al-Betar MA, Alyasseri ZAA, Makhadmeh SN, Al-laham M, Naim S (2021) A Hybrid Salp Swarm Algorithm with β-Hill Climbing Algorithm for Text Documents Clustering. Evolutionary Data Clustering: Algorithms and Applications, 129. https://doi.org/10.1016/j.jksuci.2021.06.015

Abirami M, Ganesan S, Subramanian S, Anandhakumar R (2014) Source and transmission line maintenance outage scheduling in a power system using teaching-learning based optimization algorithm. Applied Soft Computing Journal 21:72–83. https://doi.org/10.1016/j.asoc.2014.03.015

Abualigah L, Shehab M, Alshinwan M, Alabool H (2020) Salp swarm algorithm: a comprehensive survey. Neural Computing and Applications 32(15):11195–11215. https://doi.org/10.1007/s00521-019-04629-4

Al-Betar MA, Alyasseri ZAA, Awadallah MA, Doush IA (2021) Coronavirus herd immunity optimizer (CHIO). Neural Computing and Applications 33(10):5011–5042. https://doi.org/10.1007/s00521-020-05296-6

Andersson T (2006) Solving the flight perturbation problem with meta heuristics. Journal of Heuristics 12(1–2):37–53. https://doi.org/10.1007/s10732-006-4833-4

Afonso LD, Mariani VC, Dos Santos Coelho L (2013) Modified imperialist competitive algorithm based on attraction and repulsion concepts for reliability-redundancy optimization. Expert Systems with Applications 40(9):3794–3802. https://doi.org/10.1016/j.eswa.2012.12.093

Akay B, Karaboga D (2012) Artificial bee colony algorithm for large-scale problems and engineering design optimization. Journal of Intelligent Manufacturing 23(4):1001–1014. https://doi.org/10.1007/s10845-010-0393-4

Alkoffash MS, Awadallah MA, Alweshah M, Zitar RA, Assaleh K, Al-Betar MA (2021) A Non-convex Economic Load Dispatch Using Hybrid Salp Swarm Algorithm. Arabian Journal for Science and Engineering, 1-20. https://doi.org/10.1007/s13369-021-05646-z

Ardakan MA, Hamadani AZ (2014) Reliability-redundancy allocation problem with cold-standby redundancy strategy. Simulation Modelling Practice and Theory 42:107–118. https://doi.org/10.1016/j.simpat.2013.12.013

Arikan U, Gürel S, Aktürk MS (2016) Integrated aircraft and passenger recovery with cruise time controllability. Annals of Operations Research 236(2):295–317. https://doi.org/10.1007/s10479-013-1424-2

Bao X, Jia H, Lang C (2019) A novel hybrid harris hawks optimization for color image multilevel thresholding segmentation. IEEE Access 7:76529–76546. https://doi.org/10.1109/ACCESS.2019.2921545

Birashk A, Kazemi Kordestani J, Meybodi MR (2018) Cellular teaching-learning-based optimization approach for dynamic multi-objective problems. Knowledge-Based Systems 141:148–177. https://doi.org/10.1016/j.knosys.2017.11.016

Chen D, Zou F, Li Z, Wang J, Li S (2015) An improved teaching-learning-based optimization algorithm for solving global optimization problem. Information Sciences 297:171–190. https://doi.org/10.1016/j.ins.2014.11.001

Chen TC (2006) IAs based approach for reliability redundancy allocation problems. Applied Mathematics and Computation 182(2):1556–1567. https://doi.org/10.1016/j.amc.2006.05.044

Chen X, Mei C, Xu B, Yu K, Huang X (2018) Quadratic interpolation based teaching-learning-based optimization for chemical dynamic system optimization. Knowledge-Based Systems 145:250–263. https://doi.org/10.1016/j.knosys.2018.01.021

Chou JS, Truong DN (2021) A novel metaheuristic optimizer inspired by behavior of jellyfish in ocean. Applied Mathematics and Computation 389:125535. https://doi.org/10.1016/j.amc.2020.125535

Derrac J, García S, Molina D, Herrera F (2011) A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm and Evolutionary Computation 1(1):3–18. https://doi.org/10.1016/j.swevo.2011.02.002

Dorigo M, Birattari M, Stutzle T (2006) Ant colony optimization. IEEE Computational Intelligence Magazine 1(4):28–39. https://doi.org/10.1109/MCI.2006.329691

Duan HB, Xu CF, Xing ZH (2010) A hybrid artificial bee colony optimization and quantum evolutionary algorithm for continuous optimization problems. International Journal of Neural Systems 20(1):39–50. https://doi.org/10.1142/S012906571000222X

Ekinci S, Hekimoǧlu B, Kaya S (2018) Tuning of PID controller for AVR system using salp swarm algorithm. In: 2018 International Conference on Artificial Intelligence and Data Processing (IDAP) (pp. 1–6). IEEE. https://doi.org/10.1109/IDAP.2018.8620809

Ekinci S, Hekimoglu B (2018) Parameter optimization of power system stabilizer via salp swarm algorithm. In: 2018 5th international conference on electrical and electronic engineering (ICEEE) (pp. 143–147). IEEE. https://doi.org/10.1109/ICEEE2.2018.8391318

El-Fergany AA, Hasanien HM (2020) Salp swarm optimizer to solve optimal power flow comprising voltage stability analysis. Neural Computing and Applications 32(9):5267–5283. https://doi.org/10.1007/s00521-019-04029-8

Garg H (2015a) An approach for solving constrained reliability-redundancy allocation problems using cuckoo search algorithm. Beni-Suef University Journal of Basic and Applied Sciences 4. https://doi.org/10.1016/j.bjbas.2015.02.003

Garg H (2015b) An efficient biogeography-based optimization algorithm for solving reliability optimization problems. Swarm and Evolutionary Computation 24:1–10. https://doi.org/10.1016/j.swevo.2015.05.001

Garg H, Rani M, Sharma SP (2013) An efficient two-phase approach for solving reliability-redundancy allocation problem using artificial bee colony technique. Computers and Operations Research 40(12):2961–2969. https://doi.org/10.1016/j.cor.2013.07.014

Gen M, Ida K, Lee CY (1999) Hybridized neural network and genetic algorithms for solving nonlinear integer programming problem. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 1585:421–429. https://doi.org/10.1007/3-540-48873-1_54

Gen M, Yun YS (2006) Soft computing approach for reliability optimization: State-of-the-art survey. Reliability Engineering and System Safety 91(9):1008–1026. https://doi.org/10.1016/j.ress.2005.11.053

Ghambari S, Rahati A (2018) An improved artificial bee colony algorithm and its application to reliability optimization problems. Applied Soft Computing Journal 62:736–767. https://doi.org/10.1016/j.asoc.2017.10.040

Goldberg DE, Holland JH (1988) Genetic Algorithms and Machine Learning. Machine Learning 3(2):95–99. https://doi.org/10.1023/A:1022602019183

Gupta S, Deep K, Heidari AA, Moayedi H, Chen H (2021) Harmonized salp chain-built optimization. Engineering with Computers, 1-31. https://doi.org/10.1007/s00366-019-00871-5

He Q, Hu X, Ren H, Zhang H (2015) A novel artificial fish swarm algorithm for solving large-scale reliability-redundancy application problem. ISA Transactions 59:105–113. https://doi.org/10.1016/j.isatra.2015.09.015

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Engineering Applications of Artificial Intelligence 20(1):89–99. https://doi.org/10.1016/j.engappai.2006.03.003

Hegazy AE, Makhlouf MA, El-Tawel GS (2020) Improved salp swarm algorithm for feature selection. Journal of King Saud University - Computer and Information Sciences 32(3):335–344. https://doi.org/10.1016/j.jksuci.2018.06.003

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: Algorithm and applications. Future Generation Computer Systems 97:849–872. https://doi.org/10.1016/j.future.2019.02.028

Hsieh YC, You PS (2011) An effective immune based two-phase approach for the optimal reliability-redundancy allocation problem. Applied Mathematics and Computation 218(4):1297–1307. https://doi.org/10.1016/j.amc.2011.06.012

Huang CL (2015) A particle-based simplified swarm optimization algorithm for reliability redundancy allocation problems. Reliability Engineering and System Safety 142:221–230. https://doi.org/10.1016/j.ress.2015.06.002

Hussain K, Salleh MNM, Cheng S, Shi Y (2019) On the exploration and exploitation in popular swarm-based metaheuristic algorithms. Neural Computing and Applications 31(11):7665–7683. https://doi.org/10.1007/s00521-018-3592-0

Ibrahim RA, Ewees AA, Oliva D, Abd Elaziz M, Lu S (2019) Improved salp swarm algorithm based on particle swarm optimization for feature selection. Journal of Ambient Intelligence and Humanized Computing 10(8):3155–3169. https://doi.org/10.1007/s12652-018-1031-9

Ibrahim A, Ahmed A, Hussein S, Hassanien AE (2018) Fish image segmentation using salp swarm algorithm. In: International Conference on advanced machine learning technologies and applications (pp. 42–51). Springer, Cham. https://doi.org/10.1007/978-3-319-74690-6_5

Kanagaraj G, Ponnambalam SG, Jawahar N (2013) A hybrid cuckoo search and genetic algorithm for reliability-redundancy allocation problems. Computers and Industrial Engineering 66(4):1115–1124. https://doi.org/10.1016/j.cie.2013.08.003

Kassaymeh S, Abdullah S, Al-Betar MA, Alweshah M (2021) Salp swarm optimizer for modeling the software fault prediction problem. Journal of King Saud University - Computer and Information Sciences. https://doi.org/10.1016/j.jksuci.2021.01.015

Kennedy J, Eberhart R (1995) Particle swarm optimization. Proceedings of ICNN’95 - International Conference on Neural Networks 4:1942–1948. https://doi.org/10.1109/ICNN.1995.488968

Kim HG, Bae CO, Park DJ (2006) Reliability-redundancy optimization using simulated annealing algorithms. Journal of Quality in Maintenance Engineering 12(4):354–363. https://doi.org/10.1108/13552510610705928

Kundu T, Islam S (2018) Neutrosophic Goal Geometric Programming Problem and Its Application to Multi-objective Reliability Optimization Model. International Journal of Fuzzy Systems 20(6):1986–1994. https://doi.org/10.1007/s40815-018-0479-2

Kundu T, Islam S (2019) A new interactive approach to solve entropy based fuzzy reliability optimization model. International Journal on Interactive Design and Manufacturing 13(1):137–146. https://doi.org/10.1007/s12008-018-0484-6

Kundu T, Islam S (2019) An interactive weighted fuzzy goal programming technique to solve multi-objective reliability optimization problem. Journal of Industrial Engineering International 15:95–104. https://doi.org/10.1007/s40092-019-0321-y

Kuo W, Rajendra Prasad V (2000) An annotated overview of system-reliability optimization. IEEE Transactions on Reliability 49(2):176–187. https://doi.org/10.1109/24.877336

Kuo W, Wan R (2007) Recent advances in optimal reliability allocation. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans 37(2):143–156. https://doi.org/10.1109/TSMCA.2006.889476

Li S, Chen H, Wang M, Heidari AA, Mirjalili S (2020) Slime mould algorithm: A new method for stochastic optimization. Future Generation Computer Systems 111:300–323. https://doi.org/10.1016/j.future.2020.03.055

Liao TW (2010) Two hybrid differential evolution algorithms for engineering design optimization. Applied Soft Computing Journal 10(4):1188–1199. https://doi.org/10.1016/j.asoc.2010.05.007

Liu Y, Qin G (2014) A Modified Particle Swarm Optimization Algorithm for Reliability Redundancy Optimization Problem. Journal of Computers 9(9):2124–2131. https://doi.org/10.4304/jcp.9.9.2124-2131

Liu Y, Qin G (2014) A Hybrid TS-DE Algorithm for Reliability Redundancy Optimization Problem. Journal of Computers 9(9):2050–2057. https://doi.org/10.4304/jcp.9.9.2050-2057

Liu Y, Qin G (2015) A DE Algorithm Combined with Levy Flight for Reliability Redundancy Allocation Problems. International Journal of Hybrid Information Technology 8(5):113–118. https://doi.org/10.14257/ijhit.2015.8.5.12

Liu X, Xu H (2018) Application on target localization based on salp swarm algorithm. In: 37th Chinese Control Conference (CCC) (pp. 4542–4545). IEEE. https://doi.org/10.23919/ChiCC.2018.8482543

Mafarja M, Aljarah I, Heidari AA, Faris H, Fournier-Viger P, Li X, Mirjalili S (2018) Binary dragonfly optimization for feature selection using time-varying transfer functions. Knowledge-Based Systems 161:185–204. https://doi.org/10.1016/j.knosys.2018.08.003

Mellal MA, Zio E (2016) A penalty guided stochastic fractal search approach for system reliability optimization. Reliability Engineering and System Safety 152:213–227. https://doi.org/10.1016/j.ress.2016.03.019

Mirjalili S (2016) SCA: A Sine Cosine Algorithm for solving optimization problems. Knowledge-Based Systems 96:120–133. https://doi.org/10.1016/j.knosys.2015.12.022

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Advances in Engineering Software 114:163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

Mirjalili S, Lewis A (2016) The Whale Optimization Algorithm. Advances in Engineering Software 95:51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey Wolf Optimizer. Advances in Engineering Software 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Nautiyal B, Prakash R, Vimal V, Liang G, Chen H (2021) Improved Salp Swarm Algorithm with mutation schemes for solving global optimization and engineering problems. Engineering with Computers 1–23. https://doi.org/10.1007/s00366-020-01252-z

Ouyang HB, Gao LQ, Kong XY, Zou DX, Li S (2015) Teaching-learning based optimization with global crossover for global optimization problems. Applied Mathematics and Computation 265:533–556. https://doi.org/10.1016/j.amc.2015.05.012

Ouyang HB, Gao LQ, Li S, Kong XY (2015) Improved novel global harmony search with a new relaxation method for reliability optimization problems. Information Sciences 305:14–55. https://doi.org/10.1016/j.ins.2015.01.020

Rakhshani H, Rahati A (2017) Snap-drift cuckoo search: A novel cuckoo search optimization algorithm. Applied Soft Computing Journal 52:771–794. https://doi.org/10.1016/j.asoc.2016.09.048

Rao RV, Savsani VJ, Vakharia DP (2011) Teaching-learning-based optimization: A novel method for constrained mechanical design optimization problems. Computer-Aided Design 43(3):303–315. https://doi.org/10.1016/j.cad.2010.12.015

Ravi V, Reddy PJ, Zimmermann HJ (2000) Fuzzy global optimization of complex system reliability. IEEE Transactions on Fuzzy Systems 8(3):241–248. https://doi.org/10.1109/91.855914

Sadollah A, Sayyaadi H, Yadav A (2018) A dynamic metaheuristic optimization model inspired by biological nervous systems: Neural network algorithm. Applied Soft Computing Journal 71:747–782. https://doi.org/10.1016/j.asoc.2018.07.039

Sahu PC, Mishra S, Prusty RC, Panda S (2018) Improved-salp swarm optimized type-II fuzzy controller in load frequency control of multi area islanded AC microgrid. Sustainable Energy, Grids and Networks 16:380–392. https://doi.org/10.1016/j.segan.2018.10.003

Savsani P, Savsani V (2016) Passing vehicle search (PVS): A novel metaheuristic algorithm. Applied Mathematical Modelling 40(5–6):3951–3978. https://doi.org/10.1016/j.apm.2015.10.040

Sayed GI, Khoriba G, Haggag MH (2018) A novel chaotic salp swarm algorithm for global optimization and feature selection. Applied Intelligence 48(10):3462–3481. https://doi.org/10.1007/s10489-018-1158-6

Simon D (2008) Biogeography-based optimization. IEEE Transactions on Evolutionary Computation 12(6):702–713. https://doi.org/10.1109/TEVC.2008.919004

Singh N, Son LH, Chiclana F, Magnot JP (2020) A new fusion of salp swarm with sine cosine for optimization of non-linear functions. Engineering with Computers 36(1):185–212. https://doi.org/10.1007/s00366-018-00696-8

Storn R, Price K (1997) Differential Evolution - A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. Journal of Global Optimization 11(4):341–359. https://doi.org/10.1023/A:1008202821328

Sun G, Ma P, Ren J, Zhang A, Jia X (2018) A stability constrained adaptive alpha for gravitational search algorithm. Knowledge-Based Systems 139:200–213. https://doi.org/10.1016/j.knosys.2017.10.018

Sun ZX, Hu R, Qian B, Liu B, Che GL (2018) Salp swarm algorithm based on blocks on critical path for reentrant job shop scheduling problems. In: International conference on intelligent computing (pp. 638–648). Springer, Cham. https://doi.org/10.1007/978-3-319-95930-6_64

Tillman FA, Hwang CL, Kuo W (1977) Optimization Techniques for System Reliability with Redundancy—A Review. IEEE Transactions on Reliability R-26(3):148–155. https://doi.org/10.1109/TR.1977.5220100

Tubishat M, Idris N, Shuib L, Abushariah MA, Mirjalili S (2020) Improved Salp Swarm Algorithm based on opposition based learning and novel local search algorithm for feature selection. Expert Systems with Applications 145:113122. https://doi.org/10.1016/j.eswa.2019.113122

Valian E, Tavakoli S, Mohanna S, Haghi A (2013) Improved cuckoo search for reliability optimization problems. Computers and Industrial Engineering 64(1):459–468. https://doi.org/10.1016/j.cie.2012.07.011

Valian E, Valian E (2013) A cuckoo search algorithm by Lévy flights for solving reliability redundancy allocation problems. Engineering Optimization 45(11):1273–1286. https://doi.org/10.1080/0305215X.2012.729055

Wang D, Zhou Y, Jiang S, Liu X (2018) A simplex method-based salp swarm algorithm for numerical and engineering optimization. IFIP Advances in Information and Communication Technology 538:150–159. https://doi.org/10.1007/978-3-030-00828-4_16

Wu P, Gao L, Zou D, Li S (2011) An improved particle swarm optimization algorithm for reliability problems. ISA Transactions 50(1):71–81. https://doi.org/10.1016/j.isatra.2010.08.005

Wu J, Nan R, Chen L (2019) Improved salp swarm algorithm based on weight factor and adaptive mutation. Journal of Experimental and Theoretical Artificial Intelligence 31(3):493–515. https://doi.org/10.1080/0952813X.2019.1572659

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation 1(1):67–82. https://doi.org/10.1109/4235.585893

Xing Z, Jia H (2019) Multilevel color image segmentation based on GLCM and improved salp swarm algorithm. IEEE Access 7:37672–37690. https://doi.org/10.1109/ACCESS.2019.2904511

Yang XS (2010) A new metaheuristic Bat-inspired Algorithm. Studies in Computational Intelligence 284:65–74. https://doi.org/10.1007/978-3-642-12538-6_6

Yang XS, Deb S (2009) Cuckoo search via Lévy flights. In: 2009 World Congress on Nature and Biologically Inspired Computing, NABIC 2009 - Proceedings, pp 210–214. https://doi.org/10.1109/NABIC.2009.5393690

Yang Z, Li K, Guo Y, Ma H, Zheng M (2018) Compact real-valued teaching-learning based optimization with the applications to neural network training. Knowledge-Based Systems 159:51–62. https://doi.org/10.1016/j.knosys.2018.06.004

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Transactions on Evolutionary Computation 3(2):82–102. https://doi.org/10.1109/4235.771163

Yeh WC, Hsieh TJ (2011) Solving reliability redundancy allocation problems using an artificial bee colony algorithm. Computers and Operations Research 38(11):1465–1473. https://doi.org/10.1016/j.cor.2010.10.028

Yi J, Gao L, Li X, Shoemaker CA, Lu C (2019) An on-line variable-fidelity surrogate-assisted harmony search algorithm with multi-level screening strategy for expensive engineering design optimization. Knowledge-Based Systems 170:1–19. https://doi.org/10.1016/j.knosys.2019.01.004

Yokota T, Gen M, Li YX (1996) Genetic algorithm for non-linear mixed integer programming problems and its applications. Computers and Industrial Engineering 30(4):905–917. https://doi.org/10.1016/0360-8352(96)00041-1

Zervoudakis K, Tsafarakis S (2020) A mayfly optimization algorithm. Computers and Industrial Engineering 145:106559. https://doi.org/10.1016/j.cie.2020.106559

Zhang H, Hu X, Shao X, Li Z, Wang Y (2013) IPSO-based hybrid approaches for reliability-redundancy allocation problems. Science China Technological Sciences 56(11):2854–2864. https://doi.org/10.1007/s11431-013-5372-5

Zhang Y, Jin Z, Chen Y (2020) Hybridizing grey wolf optimization with neural network algorithm for global numerical optimization problems. Neural Computing and Applications 32(14):10451–10470. https://doi.org/10.1007/s00521-019-04580-4

Zhang J, Wang Z, Luo X (2018) Parameter estimation for soil water retention curve using the salp swarm algorithm. Water 10(6):815. https://doi.org/10.3390/w10060815

Zou D, Gao L, Li S, Wu J (2011) An effective global harmony search algorithm for reliability problems. Expert Systems with Applications 38(4):4642–4648. https://doi.org/10.1016/j.eswa.2010.09.120

Zou D, Gao L, Wu J, Li S, Li Y (2010) A novel global harmony search algorithm for reliability problems. Computers and Industrial Engineering 58(2):307–316. https://doi.org/10.1016/j.cie.2009.11.003

Zou D, Liu H, Gao L, Li S (2011) A novel modified differential evolution algorithm for constrained optimization problems. Computers and Mathematics with Applications 61(6):1608–1623. https://doi.org/10.1016/j.camwa.2011.01.029

Acknowledgements

The authors wish to acknowledge the Council of Scientific and Industrial Research (CSIR), India, for financial support through the CSIR Grants-in-aid No: 25(0287)/18/EMR-II.