Abstract

The analytics function is growing in importance as the digitisation of business operations and markets leads to the generation of ever-increasing amounts of data. Analysing this data in a manner aligned with company priorities and structures can generate value through supporting effective decision-making, rapid product innovation, supply chain visibility and other aspects of intra- and inter-company operations. To guide the growth we derive a novel maturity framework focused on driving the Analytics-Business alignment, covering a number of diverse organisational facets such as data, leadership support, processes, data management, governance, technology and people. It differentiates itself by using a firm theoretical foundation and providing guidance for analytics capability development instead of simply diagnosing the existing maturity level. To guide development, it distinguishes between two aspects of maturity – a “state” aspect, which is used to assess the present situation in an organisation, and a “management” aspect, which evaluates management attitude in order to establish the next stage of analytics growth. The framework has been implemented in a web-based tool and its utility has been demonstrated by obtaining feedback from 64 managers from a variety of sectors, who have praised its ability to integrate diagnosis of the current situation with guidance on the next steps necessary to develop analytics maturity.

1 Introduction

The ongoing digitisation of all aspects of business operations and trade leads to the generation of ever-increasing amounts of data, and this has led to growing focus on analytics as a core tool for extracting value from this data. The concept of analytics is broadly understood as using data to build computer models that can be applied to analyse products, services and processes for the purpose of achieving a required outcome (Grossman, 2018).

The areas where analytics is expected to add value in the field of operations research range from providing better visibility and lowering the cost of operations in supply chain context (Raman et al., 2018) to supporting industrial decision-making and business growth (Zhang et al., 2021). Often the value of analytics is seen as stimulating growth and development, for example a firm’s data-driven culture is seen to impact its product and process innovation, which in turn improves its performance and may bring competitive advantage (Chatterjee et al., 2021; Chaudhuri et al., 2021). This also holds true across the supply chain, where big data analytics capability is seen to positively impact the relationship between supplier development and firms’ performance (Gu et al., 2021; Gunasekaran et al., 2017), and in creating a customer-involving environment for new product development (Zhan et al., 2018). Other expected performance effects of analytics include reduced financial risks and improved financial performance, better understanding of customer preferences leading to increased sales and new business opportunities, operational efficiency and decision making (Ferraris et al., 2019; Holmlund et al., 2020; Siegel, 2016; Wamba et al., 2017).

Overall, data analytics is viewed as impacting many facets of operations research, and this diversity of impact possibilities calls for alignment between analytics and business strategy. The development of a strategic plan is thus deemed an important pre-requisite for successful transition towards a business analytics enabled firm (Hazen et al., 2018). This supports the concept of business analytics maturity (Vidgen et al., 2017), which is defined as the stages of development of a firm in its pursuit “to integrate, manage, and leverage all relevant internal and external data sources into key decision points” (Halper & Stodder, 2014).

The concept of maturity remains widely researched in many disciplines, including in operations research, sales and planning (Danese et al., 2018; Machado et al., 2017; Mishra et al., 2015; Tavares Thome et al., 2012). Numerous maturity models have been developed to address various aspects of information systems and other application fields (Becker et al., 2009; Mettler & Rohner, 2009; Wendler, 2012). Some were developed to evaluate maturity of information technology (Cates et al., 2005; Watson et al., 2001), data warehousing (Sen et al., 2012), business intelligence (Chuah, 2010; Howson & Duncan, 2015; Russel et al., 2010), big data and analytics (Burciaga, 2013; Grossman, 2018; Halper & Stodder, 2014; Nott, 2014; Vesset et al., 2015), and other domains. Our review of existing analytics maturity models in Sect. 2 demonstrates their ad-hoc nature, with a nearly universal lack of underlying theory beyond a basis in the Capability Maturity Model (Paulk et al., 1993). The existing research explores the contribution of analytics to the firm’s growth, performance (Rialti et al., 2019; Côrte-Real et al., 2017; Erevelles et al., 2016; Ferraris et al., 2019; Merendino et al., 2017; Shamim et al., 2019; Wixom et al., 2013), logistics and supply chain management (Wang et al., 2016), and innovation (Chaudhuri et al., 2021; Mikalef et al., 2019a). As we have not come across analytics maturity models with theory-derived maturity dimensions, i.e. specific areas of interest that are measured by practices, objects or activities at each maturity level (Lahrmann et al., 2011), we suggest that the resource-based view (Barney, 1991) and dynamic capabilities (Teece et al., 1997) theories can be applied in the context of analytics maturity to provide an underlying theoretical basis for a new Analytics Maturity Framework (AMF).
Although some studies have applied dynamic capabilities to the concept of maturity (Magnusson & Nilsson, 2020), so far, the resource-based view (RBV) has not been used to provide a foundation for a holistic model of analytics capability maturity, despite its obvious suitability. Therefore, we pose our first research question as follows:

RQ1. What resources of a firm compose a holistic, theory-supported maturity model to guide analytics capability development in organisations?

Our review demonstrates that the available analytics maturity models do not specifically offer a mechanism for transitioning between stages of maturity. Those maturity models originating in consulting often recommend setting goals and creating a plan for change (see Table 1). A similar approach has been suggested by academic research proposing that firms need to design an evolutionary path to gradually build their digital capability (Issa et al., 2018), and that evolutionary changes of maturity states occur within an evolutionary cycle (Russel et al., 2010). However, most often, the analytics maturity models do not describe how the transition should occur (see Table 1); they merely imply that the firm’s analytics capability should match the characteristics of the model in the next stage of maturity. Although analytics is becoming a key function for evidence-driven decision-making in support of bringing down costs and increasing profitability, businesses still have difficulties understanding their analytics capability and progressing in analytics adoption and digital transformation (Viaene & Van den Bunder, 2011); they are under pressure to improve the existing capabilities and to find unique and relevant insights (Kiron et al., 2014). Research has identified thirty-one challenges companies face when trying to create value from business analytics, of which data quality, using analytics for improved decision-making and creating a big data and analytics strategy remain the top three (Vidgen et al., 2017). We aim to address this gap in the literature and pose our second research question:

RQ2. How can transition to the desired level of maturity be achieved?

This paper focuses on developing the AMF, a theory-derived framework for assessing the analytics maturity of firms and facilitating transition to the desired level of maturity. The development and validation of the AMF is the core contribution of this paper, and the ways in which it is derived from the two underlying theories are described in Sect. 3. Our framework is differentiated from existing analytics maturity models by using a solid theoretical foundation and providing guidance for future growth instead of simply diagnosing an existing maturity level. This guidance is based on the AMF distinguishing between two dynamic aspects of maturity: a “state” aspect, which is used to assess the present situation, and a “management” aspect, which evaluates the management attitude in order to establish the next stage of growth for the company’s analytics.

We describe the use of the Action Design Research method in our research methodology in Sect. 4, and focus on the systematic derivation of the framework elements from empirical material while building upon the existing knowledge base in Sect. 5.

The AMF’s utility has been tested by implementing it as a web-based assessment and advisory tool, and by inviting management practitioners from a variety of sectors to comment on its value and drawbacks. The findings described in Sect. 6 indicate that practitioners value the ability of the framework to integrate diagnosis of the current situation with guidance on the next steps necessary to improve analytics maturity.

The theoretical and management implications described in Sect. 7 demonstrate the potential of this work to strengthen the impact of the RBV and dynamic capability research by instantiating their constructs in the area of analytics maturity models, and to change management practice by providing direct and customised guidance to companies at different stages of analytics capability development through aligning such capabilities with the business needs.

2 Literature review

The concept of modelling maturity originates from the works of Crosby (1979) and Nolan (1979). It received widespread use from the software process maturity framework known as Capability Maturity Model (CMM) developed in 1987 (Paulk et al., 1993). The CMM offered a set of recommendations for improving software development and maintenance capability of the IT function. Maturity models are known as a tool for assessing the state of completeness of certain capabilities (Wendler, 2012) or a set of characteristics. Such characteristics found in the existing analytics maturity models appear to be arbitrarily defined. Although they cover different aspects, such as data quality, leadership support, data management, governance, technology, people and skills, these models focus on pinpointing problems rather than on guiding the development of analytics capabilities. Although a small number of models consider alignment of analytics with business strategy (Comuzzi & Patel, 2016; Davenport, 2018), the majority of the models overlook the importance of Analytics-Business alignment for achieving holistic analytics capabilities at a maturity level required by an organisation for operational or strategic decision-making. This paper reviews only maturity models available in the Business Intelligence (BI), big data and analytics space. Earlier models developed by academia focused on Data Warehousing (Watson et al., 2001; Sen et al., 2012) and BI maturity (Cates et al., 2005; Eckerson, 2007; Chuah, 2010). Only a handful of such management tools were provided by consulting practice before 2010. The first assessment tool for measuring analytics was the DELTA model developed in 2010 by Davenport (2018). Other BI and analytics maturity models from business and IT consultancy firms emerged in subsequent years, e.g. Gartner (2010), Capgemini (2012), INFORMS (2013), IBM (2014), TDWI (2014) and IDC (2015). An overview of the identified analytics maturity models is shown in Table 1. 
The details of a few selected models were analysed during the design of dimensions for our framework.

The existing models provide recommendations for setting maturity development goals on either continuous or staged progress. However, they lack a theoretical foundation for how the analytics capability development and transition between stages should be achieved. Several publications pointed out that the available maturity models had no theoretical framing, except for being broadly based on the CMM (Becker et al., 2009; Magnusson & Nilsson, 2020; Muller & Hart, 2016; Russel et al., 2010; Wendler, 2012). Another identified issue with the existing models concerns the maturity limit-setting nature of the CMM-based models and their questionable relevance to business intelligence, analytics and digital technologies, which cannot have a final state of maturity but rather continue to evolve (Magnusson & Nilsson, 2020; Russel et al., 2010). To address the issue, alternatives to the conventional matrix structure have been proposed (Magnusson & Nilsson, 2020; Russel et al., 2010). Also, our review shows that the available models appear to use a mixed maturity concept focusing on the three elements described by Lahrmann et al. (2011): people, process and technology; some also incorporate organisational aspects such as vision, strategy and culture. On top of the organisation’s ability to build and use analytics models with available data, analytics maturity involves several organisational capabilities, resources and processes (Grossman, 2018). Resources such as available data, IT infrastructure and technical skills do not alone guarantee successful data-informed decision-making; creating a data science and analytics culture also needs to be supported by management (Provost & Fawcett, 2013). Typically, building and nurturing the analytics culture can take years (Halper & Stodder, 2014).
In addition, the effective use of data can be influenced by several factors including data quality, data privacy and governance, perceived organisational benefits, process management, people, systems, tools and technologies, as well as various organisational aspects (Surbakti et al., 2020). Quality of data has been emphasised as an important prerequisite of analytics maturity (Kiron et al., 2014; Ji-fan Ren et al., 2017); however, it remains a major challenge for businesses at all maturity levels (Lismont et al., 2017). This means that the availability and convenience of relevant mechanisms for ensuring data health, reliability and governance remain key factors in analytics maturity assessments. For developing analytics capabilities, not only do organisations need to have a thorough understanding of how analytics are deployed in the organisational context (Mikalef et al., 2019b), they also need a structured approach to the understanding of their existing analytics capabilities and resources.

Having reviewed available analytics maturity models and after consulting the literature, we established that although there is a small number of models that have the underlying theoretical foundations or consider maturity-influencing factors and alignment with business strategy, there is still a lack of well-rounded analytics maturity frameworks with theory-supported maturity dimensions. In addition, although the literature discusses analytics processes, data, governance and other relevant characteristics that contribute to better organisational performance, we have not come across academic frameworks that suggested a theory-based transition method to enable the development of analytics capability and change. In the following section, we argue the suitability of the RBV and dynamic capabilities concepts to underpin a new framework to address this gap.

3 Theoretical approach

As companies diversify to compete in the market by offering unique products (Penrose, 1959, pp.21–22), they create unique capabilities and resources (Mintzberg et al., 2009, p.292). The RBV (Barney, 1991; Wernerfelt, 1984) and dynamic capabilities concept (Eisenhardt & Martin, 2000; Shamim et al., 2019; Teece et al., 1997), concern the development of internal capabilities in firms. The former emphasises the rooting of capabilities in the evolution of a firm itself and its organisational culture, whilst the latter stresses their development through a process of strategic learning (Mintzberg et al., 2009, p.293).

To underpin a new Analytics Maturity Framework with theoretical foundations we link the RBV and dynamic capabilities to the concept of analytics maturity. In this paper, we explore the ways the RBV and its VRIN/VRIO framework can guide the development and organisation of internal resources and capabilities. We argue that analytics maturity can be conceptualised as the state of the organisation of internal resources gradually evolving to align with business needs and situations requiring insight for relevant decision-making. The proposed analytics maturity framework (1) provides firms with a method to assess and understand the state across their analytics resources and management attitudes towards the development of those resources; and (2) offers guiding principles on how firms can achieve analytics maturity growth.

One study that attempted to explain the structure of maturity models is the work by Lahrmann et al. (2011). It gives theoretical definitions of five characteristics of maturity models: maturity concepts (“people maturity”, “process maturity” and “object or technology maturity”); dimensions (specific areas of interest measured at each level); levels (representative states of maturity of each dimension); maturity principle (continuous or staged) and maturity assessment (qualitative or quantitative). We were guided by the outlined principles and characteristics while developing our framework; however, Lahrmann’s theoretical model does not define a transition mechanism between the levels, i.e. representative states of maturity. This mechanism was deemed important by our end users, and its presence is one of the main innovations of our framework.
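The five characteristics listed above can be read as a simple data structure. The following is a minimal sketch in Python, offered only as an illustration: the class and field names are our own assumptions rather than part of Lahrmann et al.'s model, and the example instance uses placeholder level labels, not the AMF's actual levels.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class MaturityConcept(Enum):
    PEOPLE = "people maturity"
    PROCESS = "process maturity"
    OBJECT = "object or technology maturity"


class MaturityPrinciple(Enum):
    CONTINUOUS = "continuous"
    STAGED = "staged"


class AssessmentType(Enum):
    QUALITATIVE = "qualitative"
    QUANTITATIVE = "quantitative"


@dataclass(frozen=True)
class MaturityModel:
    """Hypothetical container for the five characteristics of a maturity model."""
    concepts: Tuple[MaturityConcept, ...]   # what kind of maturity is modelled
    dimensions: Tuple[str, ...]             # areas of interest measured at each level
    levels: Tuple[str, ...]                 # representative states of maturity
    principle: MaturityPrinciple            # continuous or staged
    assessment: AssessmentType              # qualitative or quantitative


# Illustrative instance only; the level names and the chosen principle and
# assessment type are placeholders, not values taken from the paper.
example_model = MaturityModel(
    concepts=tuple(MaturityConcept),
    dimensions=("process", "people", "technology"),
    levels=("Level 1", "Level 2", "Level 3"),
    principle=MaturityPrinciple.STAGED,
    assessment=AssessmentType.QUALITATIVE,
)
```

Note that this structure has no field describing how an organisation moves from one level to the next, which mirrors the missing transition mechanism discussed above.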

3.1 The resource-based view

The RBV takes a firm-specific perspective on why organisations succeed or fail in the marketplace (Hunt & Morgan, 2005). This view suggests that having resources that are valuable, rare, inimitable, non-substitutable and organised to capture value (VRIN/O) makes it possible for firms to develop a competitive advantage and achieve superior performance (Barney, 1991; Grant, 1991; Wernerfelt, 1984). According to Daft (1983), the firm’s resources include assets, capabilities, organisational processes, information, and knowledge. Controlled by the firm, these resources enable strategy creation that improves its operational efficiency and effectiveness. Furthermore, the firm’s resources are divided into three types: (i) physical capital resources consisting of resources such as equipment and technology; (ii) human capital resources comprising experience, training, intelligence, and knowledge; and (iii) organisational capital resources concerning formal structures, controlling systems and reporting and planning systems (Barney, 1991). We argue that the firm’s analytics capability is a bundle of resources comprising organisational and managerial culture, skills, governance, analytics processes, IT/Analytics infrastructure, and data technology and techniques that can be re-organised. The transition mechanism enabling maturity growth in our proposed Analytics Maturity Framework is based on dynamic re-organisation of the internal analytics resources and capabilities.

The RBV perspective is used in the proposed framework holistically. Viewed as a resource in itself, knowledge resulting from using the maturity assessment aids managers in understanding and re-organising the firm’s internal analytics resources. The AMF can help assess the organisation of resources as advocated by the VRIN/O framework. However, the realisation of its utility relies on management attitude and action, since unused knowledge in the form of assessment results cannot, by itself, initiate improvements that will advance analytics maturity and contribute to growth, company performance, and competitive advantage through analytics.

According to Gupta et al. (2018), the ubiquity and rapid increase in the use of ground-breaking IT technologies driven by technological evolution may erode the sustainability of the firm’s existing analytics resources. Technological resources represent physical capital. However, with fast-paced changes in technology, a once efficient and appropriate technology may lose its relevance a few years later. Because it is regarded as relatively static, the RBV has some limitations. It neither takes into account the rapidly changing nature of business environments (Teece et al., 1997) and institutional pressures (Dubey et al., 2019), nor accommodates the changes in the evolution of technological and digital resources to support efficient analytics and demanding business goals. Therefore, invoking the concept of dynamic capabilities may help to overcome this fundamental limitation (Barreto, 2010).

3.2 Dynamic capabilities

As indicated in the literature review, some research has attempted to apply the dynamic capabilities concept to maturity models before. Dynamic capabilities emphasise organisational routines and managerial skills as drivers of the firm’s ability to integrate, build and reconfigure internal competencies to address or create changes in the business environment (Teece et al., 1997). Recent research confirms that big data processes and capabilities need to change dynamically to adjust to external and internal influences (Braganza et al., 2017). The AMF provides an assessment of maturity levels given a combination of resources in a specific time period, thus the guidance to develop further will be based on the firm’s organised resources. Any resources within the firm can change over time either due to internal factors or external forces, resulting in a need to be adjusted to business pressures. Firms can produce knowledge by reviewing their analytics resources to ensure enduring operational, financial and market performance. Therefore, the dynamic capabilities principles emphasise resource development and renewal through reassessment and re-organisation.

Firms perceive the role of analytics as critical in closing the maturity and capability gap between digital natives and traditional companies (Alles & Burshek, 2016). However, the successful adoption of analytics remains a challenge for many (Viaene & Van den Bunder, 2011). Indeed, just the possession of analytics capabilities or the application of analytical methods without a clear business need to address brings no real value to the companies unless data is meaningfully transformed into actionable insights (Holsapple et al., 2014; Gandomi & Haider, 2015; Larson & Chang, 2016; Seddon et al., 2017) for decision-making. This requires such insights to be accessible and integrated into business processes to enable evidence-based decisions and insight-informed actions (Bose, 2009; Lavalle et al., 2011; Seddon et al., 2017). If previously hidden knowledge provides value after transforming data into actionable insights by using analytics, then, from the resource-based and dynamic capability perspectives, establishing and organising processes that enable better utilisation of the appropriate internal resources is supported by the VRIN/O framework. The dynamic capabilities concept suggests that companies build up knowledge that allows improving the process of bundling and re-bundling of resources (Eisenhardt & Martin, 2000); and that companies should have an ability to renew and reinvent their strategic, analytics, digital and IT capabilities to meet the requirements of changing environments (Mikalef & Pateli, 2017; Pavlou & El Sawy, 2006; Shamim et al., 2019; Wang et al., 2011).

4 Research methodology

We used a qualitative approach (Creswell, 2009) for problem exploration and definition, and a framework validation survey. We were guided by theoretical definitions of maturity model elements (Lahrmann et al., 2011), definitions of maturity level in the Strategic Alignment Maturity model (Luftman, 2000), and the RBV (Barney, 1991) and dynamic capabilities (Teece et al., 1997) theories.
As the review of the previous relevant studies was an integral part of the iterative artefact-development process, the literature was consulted throughout different ADR stages.

4.1 Action design research

We used the ADR method because it supports the simultaneous development of a theory-informed artefact and deployment of this artefact to help address existing organisational challenges in the first case study organisation as explained below. The adopted method is shown in Fig. 1, and this section provides further details for the implementation of each of the four ADR stages (Problem definition; Building, Intervention and Evaluation (BIE); Reflection and Learning; and Formalisation of Learning).

Fig. 1 ADR method stages, adapted from Sein et al. (2011)

The initial focus on the first case study allowed an in-depth analysis of needs and context that were sufficiently specific yet typical for a business setting. This was triangulated with the existing knowledge about the role of analytics as an organisational asset as outlined in the literature review to form an integrated and generalised understanding of both the organisational context and user needs with regards to analytics maturity. Each version of the maturity assessment model was evaluated by drawing on both practical feedback and theory. Four ADR stages are described below.

4.1.1 Problem definition

The business problem outlined to the research team by practitioners from the first case study company resulted from the company’s internal complexities and disparities between the departmental and regional approaches to sales forecasting used by different business units. These were further investigated during interviews. This research proposed to assess the analytics maturity of the business unit in question. Since it was a multinational company, its UK-based and overseas departments provided a sufficient scale for the research.

Key information for this theory-driven and practice-inspired research was gathered on: (a) the existing process of forecasting sales and financial performance, and (b) understanding the current practices and challenges related to the use of analytics and forecasting techniques. During this phase, more precise business requirements were defined by the company management; these were incorporated in the design of an interview guide that was used to gather input during the next stage.

4.1.2 Building, intervention and evaluation (BIE)

The second stage included the following activities: (1) reviewing available maturity models; (2) carrying out interviews with practitioners and users to gather information relevant to the existing process and the associated practices and challenges; (3) assessing the company’s maturity level; (4) integrating maturity dimensions into a target artefact - a theory-supported analytics maturity framework (cf. Sect. 5.1); and (5) evaluating the framework and implementing iterative modifications based on practitioners’ feedback.

An initial version of the analytics maturity framework was presented and tested with stakeholders in the first case study company. Subsequent interventions throughout this stage focused on developing an online version of the analytics maturity framework to allow other practitioners to independently assess their companies. The revised version incorporated a validation survey and testing the framework with three additional companies. This work is discussed further in Sects. 5 and 6 below.

4.1.3 Reflection and learning

This stage represents a conceptual move from constructing the framework to applying it to different organisations thus testing its generalisability. The research team focused on reflecting on the problem framing, identified research gaps, the theories chosen and the emerging output. Validating the framework with several companies confirmed that the problem framing was generalisable and relevant for any business setting.

4.1.4 Formalisation of learning

The final stage of the process focused on the generalisation of the outcome. It included first sharing the emerging versions of the framework with users and practitioners within the original case study company. This was followed by making the final version freely available as a web-based tool for use by any company. This allowed us to extend the research and to validate and generalise the results. Thus, the refinement and reassessment of the research aim and objectives throughout the research process reflected the iterative nature of the ADR process and principles.

4.2 Data collection approaches

Data was collected from a range of sources as part of Stage 2 of the ADR process.

4.2.1 Interviews

To better understand the business context within our first case study company and explore specific areas of interest to be assessed, the research team conducted unstructured interviews. The interviews provided first-hand insights from knowledge holders at two levels of seniority within the company, which gave the researchers different perspectives on the current business process. Participants for phone interviews were selected from relevant functional units in three identified geographic locations of this multinational company. The main principle in selecting respondents was their experience, knowledge of and involvement in the business process in question. The selected groups included (1) regional decision makers (regional sales managers and business partners from Sales Operations, who typically read forecast reports and used Tableau dashboards) and (2) global decision makers (global programme managers and directors who did not use information systems and digital tools but used the forecasting results for making organisation-wide decisions, and who had a strategic view of the business). Additionally, experts from Finance, Marketing and IT were included in order to determine the level of their involvement with Sales Operations.

In order to gather in-depth information on the business process, responsibilities and ways in which analytics could improve the business process results, interview questions were divided into three research sections: process, maturity and improvements. The main reason for selecting unstructured interviews as the primary research approach was their flexibility in allowing adjustments to be made within the list of the defined areas of investigation during the interviews (Saunders et al., 2015, p.391).

In addition to achieving the understanding of the process and gathering related information, the interviews incorporated questions on the quality of the existing data, its adequacy to deliver anticipated results and what improvements can be made to make efficient data-informed decisions. Our questions were designed to examine the analytics maturity of the Sales Operations with a particular focus on forecasting sales and predicting performance. We also sought to understand data sources, frequency, process, output and target audience.

Our literature review showed that the concept of maturity has been applied to multiple areas in Information Systems, and the existing maturity models tend to focus on the following aspects: (a) analytics technology issues; (b) business/organisational perspective; (c) analytics capabilities; and (d) the impact of analytics on the decision enablement and business performance (Chen & Nath, 2018). To explore the company’s maturity, our questions were organised to cover four dimensions: process, people, technology (including data, IT systems) and management support. Table 2 provides a sample of interview questions. During the interview process it became apparent that one additional important dimension, governance, was overlooked when designing the questionnaire. It was investigated during subsequent discussions.

To achieve a sufficient cross-section of sales managers, business partners and other support staff who provided their opinions and experiences, between 10 and 15 people in total from the key target functional units were interviewed in EMEA, Americas, Asia Pacific and Australia. Commentary and analysis of the interview findings were used in developing the framework.

4.2.2 Iterative meetings

To gather initial feedback, we presented our framework design and maturity assessment to three senior managers within the first case study company. The initial prototype of the AMF was also tested with three managers within two external start-up companies. In contrast to the first case study company, these start-ups had a small-scale global footprint. The framework was presented to the managers over a series of conference calls and face-to-face discussions. Our presentations focused on explaining the AMF, the reasons behind its creation, and how its use could help address management challenges. The research purpose of these interactive meetings was to understand the practical value of the AMF to the participating businesses. Feedback obtained from a survey questionnaire, which is shown in Table 3, was incorporated into further improvements of the AMF and led to the creation of a web-based tool that consisted of two parts: a self-diagnostic assessment and a feedback-gathering validation survey.

4.2.3 Framework as a web-based tool and a validation survey

After identifying maturity levels and dimensions for the framework, we constructed questions for assessing each dimension. The questions related to the maturity dimensions were reviewed through several iterations by the research team before the first version of a web-based tool was designed in Qualtrics. The online assessment provided a brief description of the framework and its potential benefits. Users were invited to provide consent for data to be collected when they used the tool. The assessment was organised in six sections, each dedicated to an AMF dimension, with questions exploring the ‘Present State’ of analytics and the ‘Management’ aspects for each of the maturity dimensions (‘Organisation’ – 6 questions, ‘IT and Analytics Infrastructure’ – 7, ‘Analytics Processes’ – 6, ‘Skills’ – 6, ‘Governance’ – 7, ‘Data and Analytics Technology’ – 6). The analytics maturity assessment included a combination of multiple-choice and matrix table questions. Participants were asked to reflect their understanding of data analytics within their organisation or individual business units when answering the questions. The web-based tool we built to operationalise and test the AMF is available at bit.ly/Analytics_Growth.
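The layout of the assessment can be summarised in a small data structure. The following is only an illustrative sketch: the question counts are those reported above, while the names `QUESTIONS_PER_DIMENSION`, `ASPECTS` and `total_questions` are hypothetical and do not come from the Qualtrics implementation.

```python
# Question counts per AMF dimension, as reported for the web-based assessment.
# The dictionary and function names are illustrative assumptions.
QUESTIONS_PER_DIMENSION = {
    "Organisation": 6,
    "IT and Analytics Infrastructure": 7,
    "Analytics Processes": 6,
    "Skills": 6,
    "Governance": 7,
    "Data and Analytics Technology": 6,
}

# Every dimension is explored through the same two aspects.
ASPECTS = ("Present State", "Management")


def total_questions(counts):
    """Return the total number of questions across all dimensions."""
    return sum(counts.values())


if __name__ == "__main__":
    for dimension, n in QUESTIONS_PER_DIMENSION.items():
        print(f"{dimension}: {n} questions")
    print("Total:", total_questions(QUESTIONS_PER_DIMENSION))
```

Summing the reported counts gives 38 questions across the six sections.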

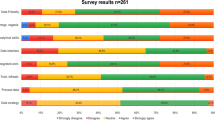

To determine the profiles of participants, they were asked to choose options that would indicate their involvement with analytics in their roles. Upon completion of the analytics maturity assessment, participants were invited to answer a validation survey consisting of eight questions, shown in Table 4, with five focusing on the validation of the AMF usefulness and three asking about company industry, company size and the participant’s role in their organisation.

5 The analytics maturity framework

5.1 Design

A summary of the activities performed during the design and creation of the AMF is shown in Table 5.

The Analytics Maturity Framework uses a common maturity structure. We adapted the definitions of five maturity levels from the Strategic Alignment Maturity (SAM) model (Luftman & Kempaiah, 2008). These are ‘Initial’, ‘Committed’, ‘Focused’, ‘Managed’ and ‘Optimised’. Based on the analysis of the existing models that is shown in Table 6, and while also keeping in mind the IT-Business alignment criteria from the SAM model, we constructed new maturity dimensions by grouping commonly occurring themes.

Based on our interview findings and the above analysis, we selected six dimensions that could provide support for the Analytics-Business alignment based on the firm’s identified resources. Since the motivation for our study was to ground an analytics maturity framework in an established theoretical base, we explored management literature on the RBV and big data analytics to support our proposed framework’s dimensions. The AMF dimensions were developed using the environment and knowledge base perspectives (Hevner et al., 2004), as shown in Fig. 2. The environment perspective represents our empirical research findings accumulated through interviews within the case study companies, analysis of available maturity models and practitioners’ feedback. The knowledge base perspective represents literature and academic resources that we accessed in order to develop our maturity dimensions.

Integrating maturity dimensions using the Environment and Knowledge perspectives, adapted from Hevner et al. (2004)

Each of the AMF’s dimensions (organisation, skills, governance, analytics processes, IT/Analytics infrastructure, and data technology, tools and techniques) is viewed through the lens of the RBV. They differ by their nature: organisational, physical or human capital. Three of the six dimensions, i.e. analytics processes, organisation and governance, are characteristic of the organisation element of the RBV’s VRIN/O framework, meaning that the physical and human resources represented by IT/analytics infrastructure, data/analytics technology and skills respectively are supported by the firm’s analytics governance structure, processes and culture, all of which are intangible resources and therefore difficult to imitate. The AMF can be used as a tool to support the internal organisation of the analytics capabilities. A brief description of each dimension is provided below.

5.1.1 Organisation (organisational/intangible)

Defines the extent to which the organisational strategy, culture and leadership back business analytics (Barton & Court, 2012; Chaudhuri et al., 2021; Davenport et al., 2001; Erevelles et al., 2016; Gupta & George, 2016; Kiron et al., 2014; Lavalle et al., 2011; Mikalef et al., 2018; Olszak, 2016; Ross et al., 2013). Support for analytics can be fragmented, business unit-level or widespread, and the level of such support demonstrates the management attitude towards encouraging analytics-informed decisions and an analytics-informed business environment. This dimension also shows the importance of integrating and driving the right behaviours for analytics-supported and insight-driven decision-making. Lack of a culture that supports the use of analytics is suggested to be one of the reasons many firms have failed to realise the potential of data (Lavalle et al., 2011). Given that the effective use of analytics for decision-making and analytics capability development both depend on the organisational culture, assessing the firm’s attitude towards creating and cultivating a culture and practice that support the alignment of analytics with decision-making at management levels needs to be part of the analytics maturity assessment.

5.1.2 IT & analytics infrastructure (physical/tangible)

Defines the level of suitability of IT systems and platform development in facilitating analytics adoption and use; demonstrates the ability of the infrastructure to support and accommodate growing volumes of data, attitude to development and impact on the business (Gupta & George, 2016; Wamba et al., 2017).

5.1.3 Analytics processes (organisational/intangible)

Assesses the quality of data (Brinkhues et al., 2014; Ji-fan Ren et al., 2017; Kiron et al., 2014) used in analytics; defines the existence of analytics processes in the organisation and how well these are managed and integrated with business needs. Creating efficient analytics processes to support the transformation of data and knowledge leads to better resource utilisation.

5.1.4 Skills (human)

Demonstrates the existence, organisation and level of data analytics skills needed to work with current and future technologies (Mikalef et al., 2018); assesses necessary managerial practices (Gupta & George, 2016) and attitude to skills development, such as acquisition, retention, and training. Achieving value from actionable insights relies on people’s know-how in using analytical technologies and interpreting data.

5.1.5 Governance (organisational/intangible)

Defines the firm’s approach to data governance and the ownership of data (Espinosa & Armour, 2016; Mikalef et al., 2018; Tallon et al., 2013; Vidgen et al., 2017); demonstrates how coherent and supportive the company’s data governance strategy is. The data governance status can influence the organisational strategy in ensuring the appropriate culture is maintained.

5.1.6 Data & analytics technologies (physical/tangible)

Demonstrates how advanced the technologies, tools and techniques available in the organisation are (Erevelles et al., 2016; Gupta & George, 2016; Wixom et al., 2011); what and how analytics technologies and tools are used for efficient data transformation into actionable insights; and what the organisation’s attitude towards their strategic development is.

5.2 Transition

To further support the AMF with the VRIN/O framework and dynamic capabilities, this research proposes a mechanism for transitioning to advanced maturity levels. It proposes that transformation of analytics maturity starts with the existing makeup of the internal analytics resources and then continues with a course of action ensuring the relevance of these resources to the current and future business needs and environment. Each dimension is evaluated by two aspects. The first is ‘Management’. This aspect defines the existing processes, internal organisation and considerations the business makes about the use of analytics within the context of each maturity level, and management attitudes to establishing the next stage of the organisation’s analytics growth. The second aspect is ‘Present State’, which is a qualitative assessment of what the business currently uses and what capabilities it owns, e.g. infrastructure, technology, skills, processes, etc. These two aspects are critical for transitioning to the next maturity level. The presence of these two aspects makes our proposed framework unique among the available options and allows us to use it for both analysing the existing situation and for guiding the transition towards the higher levels of maturity. The two aspects of the proposed framework are detailed for each AMF dimension in Fig. 4.

5.2.1 Moving through stages of analytics maturity using the framework

The AMF is based on the continuous maturity principle and the transition model can be applied to all dimensions at any maturity level to guide the organisation’s progress from any current level of maturity to a more advanced one. As seen in Fig. 5, the transition begins with an assessment of the existing capabilities that are defined by the “Present State” aspect (Bt) of a specific maturity dimension. If a higher maturity state Bt+1 is desired, the organisation should reconsider its current “Management” attitude (At) that characterises this dimension, and change it to the one associated with the desired higher level of maturity (At+1). This transition is illustrated by the arched arrow inside the diagram in Fig. 5. The underlying assumption for the change of management attitude is: a present analytics maturity level is a result of the practices and attitudes measured by the “Management” aspect. The influence of the management attitude on the analytics maturity level is illustrated by the vertical downward arrow in Fig. 5. A desired change, for example, could involve formulating a data strategy, establishing governance policies or developing a framework for introducing new technologies, etc. As the infrastructure, technology or any other dimension being measured becomes more sophisticated, and the analytics implementation supports decision-making and business requirements, the desired situation characterised by the “Management” (At+1) and “Future State” (Bt+1) aspects becomes a reality. This is now considered an existing maturity level and such status is illustrated by the backward arrow on the outside of the diagram. The previously defined aspirations are achieved and so to progress towards creating a new future state of analytics maturity, the current capabilities should be reassessed and the “Management” attitude should change again. This is a continuous spiral-like cycle.
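The cycle described above can be illustrated with a short sketch. It rests on simplifying assumptions that are ours and not part of the framework itself: each aspect is encoded as an integer index into the five maturity levels, each turn of the cycle advances one level, and the names `DimensionStatus`, `raise_management`, `realise_state` and `transition_cycle` are hypothetical. In practice both aspects are assessed qualitatively.

```python
from dataclasses import dataclass

# The five AMF maturity levels, lowest to highest.
LEVELS = ("Initial", "Committed", "Focused", "Managed", "Optimised")
TOP = len(LEVELS) - 1


@dataclass(frozen=True)
class DimensionStatus:
    """Status of one AMF dimension; both aspects index into LEVELS (assumed encoding)."""
    name: str
    management: int      # "Management" aspect (At)
    present_state: int   # "Present State" aspect (Bt)


def raise_management(status: DimensionStatus) -> DimensionStatus:
    """Move the management attitude to the level above the present state (At -> At+1)."""
    target = min(status.present_state + 1, TOP)
    return DimensionStatus(status.name, target, status.present_state)


def realise_state(status: DimensionStatus) -> DimensionStatus:
    """Let capabilities catch up with the management attitude (Bt -> Bt+1), never lowering them."""
    new_state = max(status.present_state, status.management)
    return DimensionStatus(status.name, status.management, new_state)


def transition_cycle(status: DimensionStatus) -> DimensionStatus:
    """One full turn of the cycle: change the attitude, then realise the new state."""
    return realise_state(raise_management(status))


if __name__ == "__main__":
    status = DimensionStatus("Governance", management=0, present_state=0)
    while status.present_state < TOP:
        status = transition_cycle(status)
        print(status.name, "->", LEVELS[status.present_state])
```

Separating the two steps mirrors the assumption stated in the text: the management attitude changes first, and the present state follows as a result.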

5.3 Validating the AMF and its advisory component

The evaluation and validation of the AMF were carried out in two phases: a questionnaire completed by managers from the three case study companies, and a web-based survey that participants were asked to complete after using the online AMF assessment.

During the first evaluation phase with three case study companies (a large multinational technology company and two technology start-ups), when asked whether the framework was useful, five interviewees across three companies agreed it was beneficial for several reasons. Firstly, it helped them to understand the current state of their business. More importantly, they were able to understand what the desired state should be; they could use this understanding to start building roadmaps, designing initiatives and thinking about the type of skills needed. The respondents found the AMF clear, as they were able to identify what analytics maturity level their business was at. However, it was indicated that there was a need to incorporate other elements, such as the nature of the business and the existing data. Also, when asked whether the framework provided guidance on how to move to a certain maturity level, some respondents said it only provided them with identifiable maturity characteristics at each level, but not with ‘this is what you need to do’ advice to move to the next level. Overall, all respondents found it practical and would use the AMF in their business. The obtained feedback on the framework’s usefulness and relevance was incorporated into further improvements of the AMF and led to the creation of the web-based self-diagnostic and feedback-gathering tool.

This updated AMF version and the web-based tool were tested in the second evaluation and validation phase. Firstly, it was tested with a large publicly listed company from the utilities sector. Five managers and a decision support analyst assessed the analytics maturity of their organisation using the online tool and completed the validation survey. Following this independently performed exercise, the research team presented the AMF to the company and conducted a group discussion with seven participants. The gathered feedback suggested several further modifications in the design of the AMF dimensions. These were mainly related to clarifications of the analytics terminology used, so that the analytics maturity assessment could be completed by managers with different functional roles and business perspectives. All managers participating in validating the AMF during consecutive interventions had senior management responsibilities in Operations, Process, IT, Decision Support, Strategy and other functions; these participants had either a sufficient or a good understanding of the role of analytics in their organisations.

After the modifications were implemented, the web-based self-assessment tool was updated to reflect the new AMF version, and the link to the tool was distributed amongst MBA cohorts within two UK universities. Both MBA cohorts comprised professionals and managers from industry and the public sector with a minimum number of years of professional experience. Participants from a variety of industry sectors were invited through the professional social media platform LinkedIn to use the tool and complete the analytics maturity assessment of their organisations online. After using the tool, participants were invited but not obliged to answer a set of questions evaluating the AMF in terms of usefulness, benchmarking their organisation against the framework, and value to their organisation. 61 people in total completed this evaluation part.

Participants came from different industries, but the Information Technology (21%), Healthcare (16%), Banking & Financial Services (14%) and Utilities (10%) sectors were the largest; the rest included Manufacturing (8%), Public Administration (8%), Education, Retail and Other.
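As a quick arithmetic check on the breakdown above, the share left for the sectors reported without a figure (Education, Retail and Other) can be derived from the six reported percentages. This is only a sketch: the names `REPORTED_SHARES` and `remaining_share` are illustrative, and because the published percentages are rounded the result is approximate.

```python
# Sector shares (in %) as reported for the validation survey participants.
# Names are illustrative; the figures are rounded in the source.
REPORTED_SHARES = {
    "Information Technology": 21,
    "Healthcare": 16,
    "Banking & Financial Services": 14,
    "Utilities": 10,
    "Manufacturing": 8,
    "Public Administration": 8,
}


def remaining_share(shares):
    """Return the percentage not covered by the reported sector shares."""
    return 100 - sum(shares.values())


if __name__ == "__main__":
    print("Reported sectors:", sum(REPORTED_SHARES.values()), "%")
    print("Education, Retail and Other combined:", remaining_share(REPORTED_SHARES), "%")
```

The six reported sectors account for 77% of participants, leaving roughly 23% for Education, Retail and Other combined.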

6 Results

The results of the AMF validation survey provide answers to our first research question focusing on what dimensions should compose a holistic, theory-supported maturity model that will guide analytics capability development in organisations. This question and our motivation for the research were also complemented by the need for a holistic maturity assessment that became apparent from our interview findings.

The results of the validation show that the AMF is practical and provides value by structuring maturity assessment along the following six dimensions: organisation; skills; governance; analytics processes; IT/Analytics infrastructure; and data technology, tools and techniques. 86% of our respondents found the AMF helpful when reflecting on the state of analytics maturity in their organisations, and 76% of them said they were able to relate the state of analytics use in their organisations with particular AMF levels. Furthermore, 75% of the participants were able to identify the areas for improvement to drive the analytics maturity growth in their organisations. In addition, 67% of the participants saw the value offered by the AMF if used in their organisations.

Participants were asked to expand on the perceived benefits and value of using the AMF for their organisations. Two particular benefits dominated the responses. Firstly, the AMF provided a structure for understanding the company’s analytics capabilities and resources. Secondly, it was a tool for the management to understand the company’s current state of analytics capabilities. One participant stated: “Defining different levels of analytics maturity with clear descriptions will help our manager to think more in a framework and perceive the current status of the company in this regard and next possible steps” (IT). This was echoed by others: “…have found the framework useful in better understanding of analytics in my organisation” (Healthcare), and “More structured framework to show the management how data processes should be organised” (IT).

In addition, some participants commented on improved decision making as a benefit if the framework was introduced in their organisations: “Using data to make strategic decisions” (Healthcare), “The organisation to use data in making decisions” (Healthcare), and “Strategic decision making and predictive analysis” (IT).

The demographics of our validation survey were skewed towards larger businesses with 250+ employees (75%) that already have established organisational structures, though micro- (4%), small- (16%) and medium- (4%) size businesses also participated. One participant from a manufacturing company said that their small and medium-sized enterprise (SME) lagged behind in the adoption of analytics, but they “recently started to look into digitisation and Industry 4.0 solutions and have little clue ‘how to’ be future ready. Converting paper-based processes along manual manufacturing for an SME is a big challenge.” Our results show that regardless of the company size, the challenges of developing analytics capability are common to all businesses. The SMEs have the same needs as large businesses; they require a structured approach to organising data and analytics and an understanding of how to progress with developing their digital and analytics capabilities.

Our second research question focused on how companies can achieve the desired level of maturity, and to address this our paper proposes a transition mechanism. Although the validation survey focused mainly on the utility of the AMF, the participants provided positive feedback on this additional contribution of our framework, which can be considered the third perceived benefit from using the AMF in organisations. Of the participants who agreed to explain their opinions on the AMF benefits, 40% recognised that the proposed framework offered guidance for analytics capability development. These participants said that having a comprehensive view of the company’s analytics capabilities was beneficial for building “…a global picture of where the company is” (Financial Services) and developing analytics maturity capability because it “provides us a framework to work against, provides us a starting point and a goal to aim for” (IT); it helped to “understand how to move up the maturity curve” (IT), and “it will support our current proposals to introduce a robust data strategy to support the organisations growth” (Other sector). Similarly, “…this framework will help the organisation pay particular focus to certain areas of development required” (Public Administration), “It would be able to organise and show to the board where we are, and where we have to be to go further” (Financial Services), “To highlight the dimensions of analytics maturity and to inform transition planning” (Public Administration), and “To show the areas for development” (Public Administration).

7 Discussion and conclusions

We have developed an analytics maturity framework that is unique amongst the existing frameworks by its grounding in the RBV theory and the principles of dynamic capabilities theory. Following RBV, the framework uses different types of resources in the organisation to shape its six dimensions of analytics maturity. The dynamic capability perspective then informs the transition of an organisation along the states of maturity, indicating the firm’s ability to reconfigure the state of embeddedness, use and deployment of analytics in support of organisational decision-making. This is dependent on the state of management attitude to the adoption and strategic development of such capabilities, and this stipulation is one of the significant novel elements of our framework.

The motivation for creating an analytics maturity model is to ensure the focus of analytics activities on addressing business challenges, supporting decision-making, and keeping analytics aligned with business goals through the development of analytics capabilities. This paper aims to achieve this by applying the RBV and dynamic capabilities concepts to systematically derive a framework for organising and developing analytics capabilities in a business context. We argue that doing this will help companies not only understand the current state of their analytics but also to create a roadmap for developing those capabilities.

The result of our research is the proposed Analytics Maturity Framework (AMF) and its implementation in a web-based diagnostic tool. We have shown that through its six dimensions, the AMF provides a structural, multi-perspective approach to understanding and building analytics capabilities. Viewed as a resource, each dimension can be described from the RBV perspective, while an in-depth understanding of the business needs and the analytics capabilities that can support them represents the knowledge that can be gradually cultivated.

The key novelty embedded in the structure of the AMF is the ability to distinguish between creating an inventory of analytics capabilities and guiding the analytics maturity growth. The remainder of this section describes the theoretical and managerial implications arising from the results of this research.

7.1 Theoretical implications

In the theoretical domain, this research addresses the absence in the literature of comprehensive theory-driven analytics maturity models. The paper integrates concepts from the SAM model, the RBV and dynamic capabilities theories to derive the AMF, which can guide the identification and development of analytics capabilities and their maturity. The AMF maturity levels are based on the SAM model (Luftman, 2000, 2008), the analytics maturity dimensions are supported by the RBV and its VRIN/VRIO framework (Barney, 1991), and the transition through the stages of maturity relies on the dynamic capabilities theory (Teece et al., 1997).

Whilst the RBV and dynamic capabilities have been linked with Big Data Analytics capabilities in the previous research, these theories have been mostly analysed through the lens of their impact on different output variables such as organisational performance (Rialti et al., 2019; Côrte-Real et al., 2017; Ferraris et al., 2019; Shamim et al., 2019); or, to a lesser extent, innovation (Mikalef et al., 2019a). Instead, here we are using these theories to derive a benchmark-oriented and holistic framework for analytics capabilities growth and maturity.

The need for systematic derivation of the analytics maturity model has been recognised by the academic community. Indeed, Korsten et al. (2022) conducted a Delphi study with 9 experts attempting exactly that. However, their work lacks both the transition guidance element of the AMF and the comprehensive evaluation in different areas of business offered here.

In addition, our observations of analytics needs, practices and processes during the course of developing the framework using the ADR method have revealed a number of interesting findings which can be used to underpin further developments in the area:

- Companies of different sizes and in different sectors experience similar challenges in the realm of data and analytics, often united by the stifling effect of legacy IT systems and corresponding poor data quality.

- Companies can find their different divisions on different levels of analytics maturity. Indeed, the middle management attitude towards the present state of the analytics capability directly affects the potential for developing the analytics maturity of that specific unit.

- The lack of well-established analytics strategy and governance processes is frequently observed across industries and business functions, and it acts as a barrier to a company-wide use of analytics.

These findings indicate the need for a holistic analytics maturity framework, in line with the recommendations of Hazen et al. (2018), who call for alignment between analytics and business strategy through the development of a strategic plan towards a business analytics enabled firm. We deliver a framework that can guide the development of such a plan aligned with the RBV, and underpin further research in the area.

7.2 Managerial implications

In the managerial domain, the proposed analytics maturity framework is a resource that firms can deploy internally to better understand analytics and the way it helps them derive value from data in all aspects of their operations, as envisioned in the academic literature (Zhang et al., 2021; Chatterjee et al., 2021; Holmlund et al., 2020; Gu et al., 2021). Companies can also use the AMF to plan their analytics maturity growth using the two core aspects: the analytics resources found at a specific point in time, and the managerial attitudes acting as vehicles for further development of those resources. Consequently, the use of the AMF is expected to lead to significant improvement in the way analytics adds value to operations and helps identify business opportunities, as well as in the organisation’s performance.

As a managerial assessment tool, the framework provides a starting point for re-organisation and improvement of analytics capabilities and allows business leaders to think of the next development steps in a structured way, set goals and plan actions. This can reduce the uncertainty inherent in highly complex business transformations. For example, short-term goals could focus on forming an understanding of the current environment and maturity level for building the right analytics processes, planning governance and selecting suitable data technologies. Long-term goals could include plans for creating an organisational culture that embraces analytics support for decision-making, or data unification, etc.

We believe this framework provides a comprehensive approach to assessing the analytics maturity level. Indeed, the AMF’s six dimensions represent bundles of resources aligned with business operations. Using the ADR method to develop the AMF has ensured its relevance to ongoing business needs in diverse business contexts, because ADR created the space for us to explore the aspects and dimensions of analytics maturity in several real contexts in consecutive iterations of the framework, validated and stimulated by practitioners’ feedback.

Overall, our framework provides a way of positioning the business on a maturity level and assessing the Analytics-Business alignment. It further proposes the actions needed for the transition to the next desired analytics maturity level. The framework was implemented as a web-based tool, and has been validated through a series of interactions with four case study companies, discussions of its usefulness, challenging areas, and the practicality of correct positioning of a business within the analytics maturity levels.

7.3 Limitations and further work

Formulating our research problem with the first case company at the initial stages was instrumental in allowing us to achieve the depth necessary for conceptual clarity of the framework. However, the nature of the focal company (UK branch of a blue-chip IT multi-national) may have influenced the overall direction of the work. Fortunately, our subsequent findings from other business contexts indicate this has not been the case. Although we recruited a variety of respondents to ensure, for example, that our results apply to small and medium-sized companies, the majority of responses were obtained from large organisations with 250+ employees.

We also recognise that exploring the transition method and maturity growth over a period of time may deliver new insights, for example in terms of the fit of IT infrastructure and architecture evolution to the current maturity levels. Many companies run on legacy IT infrastructure and systems that might not be suitable for the modern business environment. In this respect, the proposed transition mechanism should be validated in the real context, ideally, over a period of time.

In our future research, we are planning to address these limitations, including an in-depth investigation of currently under-explored challenges such as standardisation. Other research opportunities could focus on investigating the influence of the firm size and the available resources on the maturity level descriptions. For example, certain companies can maximise value creation or achieve sustainable performance at lower levels of the analytics maturity scale when compared to high-tech multinationals.

References

Alles, D., & Burshek, J. (2016). Ranking Analytics maturity by industry. International Institute for Analytics.

Barney, J. (1991). Firm resources and sustained competitive advantage. Journal of Management, 17(1), 99–120.

Barreto, I. (2010). Dynamic capabilities: A review of Past Research and an agenda for the future. Journal of Management, 36(1), 256–280. https://doi.org/10.1177/0149206309350776.

Barton, D., & Court, D. (2012). Making advanced Analytics work for you. Harvard Business Review. Retrieved Oct 2020 from https://hbr.org/2012/10/making-advanced-analytics-work-for-you.

Becker, J., Knackstedt, R., & Pöppelbuß, J. (2009). Developing Maturity Models for IT Management - A Procedure Model and its application. Business and Information Systems Engineering, 1(3), 213–222. https://doi.org/10.1007/s12599-009-0044-5.

Bose, R. (2009). Advanced Analytics: Opportunities and challenges. Industrial Management & Data Systems, 109(2), 155–172. https://doi.org/10.1108/02635570910930073.

Braganza, A., Brooks, L., Nepelski, D., Ali, M., & Moro, R. (2017). Resource Management in Big Data Initiatives: Processes and dynamic capabilities. Journal of Business Research, 70, 328–337. https://doi.org/10.1016/j.jbusres.2016.08.006.

Brinkhues, R., Macada, A. C., & Casalinho, G. (2014). Information management capabilities: antecedents and consequences. In: Proceedings of Americas conference on information systems (AMCIS).

Burciaga, A. (2013). INFORMS Analytics Maturity Model. INFORMS Annual Meeting, October 2013. Retrieved Sep 2018 from https://www.informs.org/Resource-Center/Video-Library/INFORMS-Meetings-Videos/INFORMS-Annual-Meeting-Presentations/2013-INFORMS-Annual-Meeting-Minneapolis/Analytics-Maturity-Model.

Capgemini (2012). Measuring Organizational Maturity in Predictive Analytics: The First Step to Enabling the Vision. Retrieved Jul 2018 from https://www.capgemini.com/wp-content/uploads/2017/07/Measuring_Organizational_Maturity_in_Predictive_Analytics__the_First_Step_to_Enabling_the_Vision.pdf.

Carvalho, J. V., Rocha, A., van de Wetering, R., & Abreu, A. (2019). A Maturity model for hospital information systems. Journal of Business Research, 94, 388–399. https://doi.org/10.1016/j.jbusres.2017.12.012.

Cates, J., Gill, S., & Zeituny, N. (2005). The Ladder of Business Intelligence (LOBI): A framework for enterprise IT planning and architecture. International Journal of Business Information Systems, 1(2), 220–238. https://doi.org/10.1504/IJBIS.2005.007408.

Chatterjee, S., Chaudhuri, R., & Vrontis, D. (2021). Does data-driven culture impact innovation and performance of a firm? An empirical examination. Annals of Operations Research. https://doi.org/10.1007/s10479-020-03887-z.

Chaudhuri, R., Chatterjee, S., Vrontis, D., & Thrassou, A. (2021). Adoption of robust business analytics for product innovation and organizational performance: The mediating role of organizational data-driven culture. Annals of Operations Research. https://doi.org/10.1007/s10479-021-04407-3.

Chen, L., & Nath, R. (2018). Business analytics maturity of firms: An examination of the relationships between managerial perception of IT, business analytics maturity and success. Information Systems Management, 35(1), 62–77.

Chuah, M. H. (2010). An enterprise business intelligence maturity model (EBIMM): Conceptual framework. In Proceedings of the Fifth International Conference on Digital Information Management (ICDIM). Thunder Bay, ON, Canada.

Comuzzi, M., & Patel, A. (2016). How organisations leverage Big Data: A maturity model. Industrial Management & Data Systems, 116(8), 1468–1492.

Côrte-Real, N., Oliveira, T., & Ruivo, P. (2017). Assessing business value of Big Data Analytics in European firms. Journal of Business Research, 70, 379–390. https://doi.org/10.1016/j.jbusres.2016.08.011.

Creswell, J. W. (2009). Research Design: Qualitative, quantitative and mixed methods approaches (3rd ed.). Sage Publications.

Crosby, P. (1979). Quality is free: The art of making quality certain. McGraw-Hill.

Daft, R. (1983). Organizational theory and design. West.

Danese, P., Molinaro, M., & Romano, P. (2018). Managing evolutionary paths in sales and Operations Planning: Key dimensions and sequences of implementation. International Journal of Production Research, 56(5), 2036–2053.

Davenport, T. (2018). DELTA Plus Model & Five Stages of Analytics Maturity: A Primer. Retrieved Nov 2018 from https://www.iianalytics.com/delta-plus-primer.

Davenport, T., Harris, J., De Long, D. W., & Jacobson, A. (2001). Data to knowledge to results: Building an analytic capability. California Management Review, 43(2), 117–138.

Dubey, R., Gunasekaran, A., Childe, S., Blome, C., & Papadopoulos, T. (2019). Big Data and predictive analytics and manufacturing performance: Integrating institutional theory, resource-based view and big data culture. British Journal of Management, 30, 341–361. https://doi.org/10.1111/1467-8551.12355.

Eckerson, W. (2007). Beyond the Basics: Accelerating BI Maturity. Retrieved Jul 2018 from http://download.101com.com/pub/tdwi/Files/SAP_monograph_0407.pdf.

Eisenhardt, K., & Martin, J. (2000). Dynamic capabilities: What are they? Strategic Management Journal, 21(10/11), 1105–1121.

Erevelles, S., Fukawa, N., & Swayne, L. (2016). Big Data consumer analytics and the transformation of marketing. Journal of Business Research, 69(2), 897–904.

Espinosa, J. A., & Armour, F. (2016). The big data analytics gold rush: a research framework for coordination and governance. In: Proceedings of 2016 49th Hawaii international conference on system sciences (HICSS), 1112–1121.

Ferraris, A., Mazzoleni, A., Devalle, A., & Couturier, J. (2019). Big data analytics capabilities and knowledge management: Impact on firm performance. Management Decision, 57(8), 1923–1936. https://doi.org/10.1108/MD-07-2018-0825.

Gandomi, A., & Haider, M. (2015). Beyond the hype: Big data concepts, methods, and Analytics. International Journal of Information Management, 35(2), 137–144. https://doi.org/10.1016/j.ijinfomgt.2014.10.007.

Grant, R. (1991). A resource-based perspective of competitive advantage. California Management Review, 33(3), 114–135. https://doi.org/10.2307/41166664.

Grossman, R. (2018). A framework for evaluating the analytic maturity of an organization. International Journal of Information Management, 38(1), 45–51. https://doi.org/10.1016/j.ijinfomgt.2017.08.005.

Gu, V. C., Zhou, B., Cao, Q., & Adams, J. (2021). Exploring the relationship between supplier development, big data analytics capability, and firm performance. Annals of Operations Research, 302, 151–172. https://doi.org/10.1007/s10479-021-03976-7.

Gunasekaran, A., Papadopoulos, T., Dubey, R., Wamba, S. F., Childe, S., Hazen, B., & Akter, S. (2017). Big data and predictive analytics for supply chain and organizational performance. Journal of Business Research, 70, 308–317.

Gupta, M., & George, J. F. (2016). Toward the development of a big data analytics capability. Information and Management, 53(8), 1049–1064.

Gupta, G., Tan, K. T. L., Ee, Y. S., & Phang, C. S. C. (2018). Resource-based view of Information Systems: Sustainable and transient competitive advantage perspectives. Australasian Journal of Information Systems, 22. https://doi.org/10.3127/ajis.v22i0.1657.

Halper, F., & Stodder, D. (2014). TDWI Analytics Maturity Model Guide. Retrieved Jul 2018 from https://tdwi.org/whitepapers/2014/10/tdwi-analytics-maturity-model-guide.aspx.

Hazen, B. T., Skipper, J. B., Boone, C. A., & Hill, R. (2018). Back in business: Operations research in support of big data analytics for operations and supply chain management. Annals of Operations Research, 270, 201–211. https://doi.org/10.1007/s10479-016-2226-0.

Hevner, A., March, S., Park, J., & Ram, S. (2004). Design Science in Information Systems Research. MIS Quarterly, 28(1), 75–105.

Holmlund, M., Van Vaerenbergh, Y., Ciuchita, R., Ravald, A., Sarantopoulos, P., Ordenes, F. V., & Zaki, M. (2020). Customer experience management in the age of big data analytics: A strategic framework. Journal of Business Research, 116, 356–365.

Holsapple, C., Lee-Post, A., & Pakath, R. (2014). A unified foundation for business analytics. Decision Support Systems, 64, 130–141. https://doi.org/10.1016/j.dss.2014.05.013.

Howson, C., & Duncan, D. (2015). ITScore Overview for BI and Analytics September 24 2015. Retrieved Jul 2018 from https://www.gartner.com/doc/3136418/itscore-overview-bi-analytics.

Hunt, S. D., & Morgan, R. M. (2005). The Resource-Advantage Theory of Competition. In N. K. Malhotra (Ed.), Review of Marketing Research (Vol. 1, pp. 153–206). https://doi.org/10.1108/S1548-6435(2004)0000001008.

Issa, A., Hatiboglu, B., Bildstein, A., & Bauernhansl, T. (2018). Industrie 4.0 roadmap: Framework for digital transformation based on the concepts of capability maturity and alignment. 51st CIRP Conference on Manufacturing Systems, 973–978.

Ji-fan Ren, S., Wamba, S., Akter, S., Dubey, R., & Childe, S. (2017). Modelling quality dynamics, business value and firm performance in a big data analytics environment. International Journal of Production Research, 55(17), 5011–5026.

Kiron, D., Prentice, P. K., & Ferguson, R. B. (2014). The Analytics Mandate. MIT Sloan Management Review, 55(4), 1–25.

Korsten, G., Aysolmaz, B., Turetken, O., Edel, D., & Ozkan, B. (2022, January). ADA-CMM: A Capability Maturity Model for Advanced Data Analytics. In Proceedings of the 55th Hawaii International Conference on System Sciences.

Lahrmann, G., Marx, F., Winter, R., & Wortmann, F. (2011). Business Intelligence Maturity: Development and Evaluation of a Theoretical Model. In Proceedings of the 44th Hawaii International Conference on System Sciences, Kauai, HI, USA.

Larson, D., & Chang, V. (2016). A review and future direction of agile, business intelligence, analytics and data science. International Journal of Information Management, 36(5), 700–710. https://doi.org/10.1016/j.ijinfomgt.2016.04.013.

Lavalle, S., Lesser, E., Shockley, R., Hopkins, M., & Kruschwitz, N. (2011). Big data, Analytics and the path from insights to value. MIT Sloan Management Review, 52, 21–32. Retrieved Oct 2020 from https://sloanreview.mit.edu/article/big-data-analytics-and-the-path-from-insights-to-value/.

Lismont, J., Vanthienen, J., Baesens, B., & Lemahieu, W. (2017). Defining analytics maturity indicators: A survey approach. International Journal of Information Management, 37(3), 114–124.

Luftman, J. (2000). Assessing business-IT alignment maturity. Communications of the Association of Information Systems, 4(14), 1–50. https://doi.org/10.17705/1CAIS.00414.

Luftman, J., & Kempaiah, R. (2008). An update on Business-IT alignment: ‘A line’ has been Drawn. MIS Quarterly Executive, 6(3), 165–177.

Machado, C., de Lima, E., da Costa, S., Angelis, J., & Mattioda, R. (2017). Framing maturity based on sustainable operations management principles. International Journal of Production Economics, 190, 3–21.

Magnusson, J., & Nilsson, A. (2020). Digital Maturity in the Public Sector: Design and Evaluation of a new model, Scandinavian Workshop on E-Government (SWEG2020). Available at: https://gup.ub.gu.se/file/208009.

Menukhin, O., Mandungu, C., Shahgholian, A., & Mehandjiev, N. (2019). ‘Now and Next: A Maturity Model to Guide Analytics Growth’. In Proceedings of UKAIS 2019 Conference, 24th UK Academy for Information Systems International Conference, 09-10th April 2019, Oxford, UK, https://aisel.aisnet.org/ukais2019/39/.

Merendino, A., Dibb, S., Meadows, M., Quinn, L., Wilson, D., Simkin, L., & Canhoto, A. (2017). Big data, big decisions: The impact of big data on board level decision making. Journal of Business Research, 93, 67–78. https://doi.org/10.1016/j.jbusres.2018.08.029.

Mettler, T., & Rohner, P. (2009). Situational Maturity Models as Instrumental Artifacts for Organizational Design. In DESRIST ‘09 Proceedings of the 4th International Conference on Design Science Research in Information Systems and Technology. Philadelphia, Pennsylvania.

Mikalef, P., & Pateli, A. (2017). Information technology-enabled dynamic capabilities and their indirect effect on competitive performance: Findings from PLS-SEM and fsQCA. Journal of Business Research, 70, 1–16.

Mikalef, P., Pappas, I. O., Krogstie, J., & Giannakos, M. (2018). Big data analytics capabilities: A systematic literature review and research agenda. Information Systems and e-Business Management, 16(3), 547–578.

Mikalef, P., Boura, M., Lekakos, G., & Krogstie, J. (2019a). Big data analytics capabilities and innovation: The mediating role of dynamic capabilities and moderating effect of the environment. British Journal of Management, 30(2), 272–298.

Mikalef, P., Boura, M., Lekakos, G., & Krogstie, J. (2019b). Big data analytics and firm performance: Findings from a mixed-method approach. Journal of Business Research, 98, 261–276.

Mintzberg, H., Ahlstrand, B., & Lampel, J. (2009). Strategy safari: The complete guide through the wilds of strategic management (2nd ed.). Great Britain: Pearson.

Mishra, A., Das, S. R., & Murray, J. (2015). Risk, process maturity, and Project Performance: An empirical analysis of US Federal Government Technology Projects. Production and Operations Management, 25(2), 210–232.

Muller, L., & Hart, M. (2016). Updating Business Intelligence and Analytics Maturity Models for New Developments. Lecture Notes in Business Information Processing, Vol. 250, 137–151. https://doi.org/10.1007/978-3-319-32877-5_11.

Nolan, R. (1979). Managing the crisis in data processing. Harvard Business Review, 57, 115–126.

Nott, C. (2014). Big Data & Analytics Maturity Model. 15 August 2014. Retrieved Sep 2018 from https://www.ibmbigdatahub.com/blog/big-data-analytics-maturity-model.

Olszak, C. (2016). Toward better understanding and use of Business Intelligence in Organizations. Information Systems Management, 33(2), 105–123.

Paulk, M., Curtis, B., Chrissis, M., & Weber, C. (1993). Capability Maturity Model, Version 1.1. IEEE Software, 10(4), 18–27. https://doi.org/10.1109/52.219617.

Pavlou, P., & El Sawy, O. (2006). From IT leveraging competence to competitive advantage in turbulent environments: The case of New Product Development. Information Systems Research, 17(3), 198–227.

Penrose, E. (1959). The theory of the growth of the firm (4th ed.). Ebook Central Academic Complete [Online].

Provost, F., & Fawcett, T. (2013). Data Science for Business. Kindle version, accessed 18 November 2018 from Amazon.com.

Raman, S., Patwa, N., Niranjan, I., Ranjan, U., Moorthy, K., & Mehta, A. (2018). Impact of big data on supply chain management. International Journal of Logistics Research and Applications, 21(6), 579–596.

Rialti, R., Zollo, L., Ferraris, A., & Alon, I. (2019). Big data analytics capabilities and performance: Evidence from a moderated multi-mediation model. Technological Forecasting and Social Change, 149, 119781.

Ross, J. W., Beath, C., & Quaadgras, A. (2013). You may not need big data after all. Harvard Business Review. Retrieved Oct 2020 from https://hbr.org/2013/12/you-may-not-need-big-data-after-all.

Russell, S., Bruni, M., Haddad, M., & Granger, M. (2010). Organic Evolution and the Capability Maturity of Business Intelligence. In AMCIS 2010 Proceedings, 501. https://aisel.aisnet.org/amcis2010/501.

Saunders, M., Lewis, P., & Thornhill, A. (2015). Research Methods for Business Students. 7th edn. Pearson [Online]. Available at: http://lib.myilibrary.com.manchester.idm.oclc.org/Open.aspx?id=819487 (Accessed: 20 July 2018).

Seddon, P., Constantinidis, D., Tamm, T., & Dod, H. (2017). How does business analytics contribute to business value? Information Systems Journal, 27(3), 237–269. https://doi.org/10.1111/isj.12101.

Sein, M., Henfridsson, O., Purao, S., Rossi, M., & Lindgren, R. (2011). Action Design Research. MIS Quarterly, 35(1), 37–56.

Sen, A., Ramamurthy, K., & Sinha, A. (2012). A model of data warehousing process maturity. IEEE Transactions on Software Engineering, 38(2), 336–353.

Shamim, S., Zeng, J., Shariq, S., & Khan, Z. (2019). Role of big data management in enhancing big data decision-making capability and quality among Chinese firms: A dynamic capabilities view. Information & Management, 56(6), 103–135.