Abstract

Vision-language models (VLMs), such as Contrastive Language-Image Pretraining (CLIP), have demonstrated powerful zero-shot image classification capabilities. However, current zero-shot learning (ZSL) relies on supervised learning with manually labeled samples of known classes, which incurs labor costs and restricts real-world applications to classes that can be foreseen. To address these challenges, we propose the mixup long-tail unsupervised (MLTU) approach for open-world ZSL problems. The proposed approach employs a novel long-tail mixup loss that integrates class-based re-weighting assignments with a given mixup factor for each mixed visual embedding. To keep incorrectly generated labels from degrading training over time, we adopt a noisy learning strategy that filters out the affected samples. We reproduce existing state-of-the-art long-tail and noisy learning approaches in the unsupervised setting. Experimental results on public datasets demonstrate that MLTU achieves significant improvements in classification over these approaches. Moreover, it serves as a plug-and-play solution for amending previous assignments and enhancing unsupervised performance. MLTU enables the automatic classification and correction of incorrect predictions caused by the projection bias of CLIP.
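As a rough illustration of how class-based re-weighting can be combined with a mixup factor on visual embeddings, the following sketch computes a re-weighted mixup cross-entropy over pseudo-labeled samples. It is not the MLTU loss itself, whose exact form is not given in the abstract. The inverse-frequency weighting, the cosine-similarity logits against text embeddings, the temperature of 0.01, and all function names are assumptions made for illustration only.

```python
import math
import random
from collections import Counter


def class_weights(pseudo_labels, num_classes):
    """Inverse-frequency class weights (an assumed scheme), scaled so that
    the weights of the observed classes average to 1."""
    counts = Counter(pseudo_labels)
    raw = [1.0 / counts[c] if counts[c] else 0.0 for c in range(num_classes)]
    seen = sum(1 for r in raw if r > 0)
    scale = seen / sum(raw)
    return [r * scale for r in raw]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def normalize(vec):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def reweighted_mixup_loss(image_emb, pseudo_labels, text_emb, lam, rng,
                          temperature=0.01):
    """Hypothetical re-weighted mixup loss.

    Each visual embedding is mixed with a randomly chosen partner using the
    mixup factor `lam`. The cross-entropy terms for the two pseudo-labels are
    weighted by their class weights and combined with the same factor.
    """
    weights = class_weights(pseudo_labels, len(text_emb))
    partners = list(range(len(image_emb)))
    rng.shuffle(partners)
    total = 0.0
    for i, j in enumerate(partners):
        mixed = normalize([lam * a + (1 - lam) * b
                           for a, b in zip(image_emb[i], image_emb[j])])
        # Cosine-similarity logits against unit-norm text embeddings.
        logits = [sum(m * t for m, t in zip(mixed, proto)) / temperature
                  for proto in text_emb]
        logp = log_softmax(logits)
        y_i, y_j = pseudo_labels[i], pseudo_labels[j]
        total += -(lam * weights[y_i] * logp[y_i]
                   + (1 - lam) * weights[y_j] * logp[y_j])
    return total / len(image_emb)
```

With pseudo-labels `[0, 0, 0, 1]`, the rare class 1 receives three times the weight of class 0 under this scheme, so its term contributes more to the loss whenever it appears in a mixed pair.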

Acknowledgements

This paper was supported by the National Natural Science Foundation of China (Grant No. 42276187).

Author information

Contributions

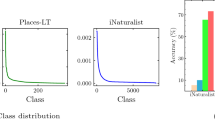

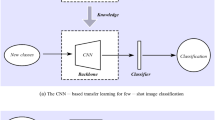

Yunpeng Jia wrote the main manuscript text; Xiufen Ye provided technical support and reviewed the manuscript text; Xinkui Mei prepared Figures 1-2; Yusong Liu reviewed and revised the manuscript abstract; Shuxiang Guo provided technical support.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by Fei Wu.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jia, Y., Ye, X., Mei, X. et al. MLTU: mixup long-tail unsupervised zero-shot image classification on vision-language models. Multimedia Systems 30, 169 (2024). https://doi.org/10.1007/s00530-024-01373-1

DOI: https://doi.org/10.1007/s00530-024-01373-1