Abstract

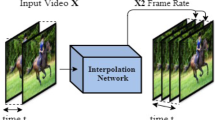

Many modern frame interpolation approaches rely on explicit bidirectional optical flows between adjacent frames, thus are sensitive to the accuracy of underlying flow estimation in handling occlusions while additionally introducing computational bottlenecks unsuitable for efficient deployment. In this paper, we propose a flow-free approach that is completely end-to-end trainable for multi-frame video interpolation. Our method, FLAVR, leverages 3D spatio-temporal kernels to directly learn motion properties from unlabeled videos and greatly simplifies the process of training, testing and deploying frame interpolation models. As a result, FLAVR delivers up to \(6\times \) speed up compared to the current state-of-the-art methods for multi-frame interpolation while consistently demonstrating superior qualitative and quantitative results compared with prior methods on popular benchmarks including Vimeo-90K, SNU-Film, and GoPro. Additionally, we show that frame interpolation is a competitive self-supervised pre-training task for videos by demonstrating various novel applications of FLAVR including action recognition, optical flow estimation, and video object tracking. Code and trained models are available in the project page: https://tarun005.github.io/FLAVR/. We have provided additional qualitative results for frame interpolation as well as downstream applications in our video available using this web link https://paperid1300.s3.us-west-2.amazonaws.com/FLAVRVideo.mp4 (link).

Similar content being viewed by others

References

Meyer, S., Djelouah, A., McWilliams, B., Sorkine-Hornung, A., Gross, M., Schroers, C.: Phasenet for video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 498–507 (2018)

Bao, W., Lai, W.-S., Ma, C., Zhang, X., Gao, Z., Yang, M.-H.: Depth-aware video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3703–3712 (2019)

Xue, T., Chen, B., Wu, J., Wei, D., Freeman, W.T.: Video enhancement with task-oriented flow. Int. J. Comput. Vision 127(8), 1106–1125 (2019)

Lee, H., Kim, T., Chung, T.-y., Pak, D., Ban, Y., Lee, S.: Adacof: Adaptive collaboration of flows for video frame interpolation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5316–5325 (2020)

Jiang, H., Sun, D., Jampani, V., Yang, M.-H., Learned-Miller, E., Kautz, J.: Super slomo: High quality estimation of multiple intermediate frames for video interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 9000–9008 (2018)

Niklaus, S., Mai, L., Liu, F.: Video frame interpolation via adaptive separable convolution. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 261–270 (2017)

Niklaus, S., Liu, F.: Context-aware synthesis for video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1701–1710 (2018)

Liu, Z., Yeh, R.A., Tang, X., Liu, Y., Agarwala, A.: Video frame synthesis using deep voxel flow. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4463–4471 (2017)

Choi, M., Kim, H., Han, B., Xu, N., Lee, K.M.: Channel attention is all you need for video frame interpolation. In: AAAI, pp. 10663–10671 (2020)

Niklaus, S., Liu, F.: Softmax splatting for video frame interpolation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5437–5446 (2020)

Hu, P., Niklaus, S., Sclaroff, S., Saenko, K.: Many-to-many splatting for efficient video frame interpolation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3553–3562 (2022)

Bao, W., Lai, W.-S., Zhang, X., Gao, Z., Yang, M.-H.: Memc-net: Motion estimation and motion compensation driven neural network for video interpolation and enhancement. IEEE transactions on pattern analysis and machine intelligence (2019)

Niklaus, S., Mai, L., Liu, F.: Video frame interpolation via adaptive convolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 670–679 (2017)

Peleg, T., Szekely, P., Sabo, D., Sendik, O.: Im-net for high resolution video frame interpolation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2398–2407 (2019)

Cheng, X., Chen, Z.: Video frame interpolation via deformable separable convolution. In: AAAI, pp. 10607–10614 (2020)

Xu, X., Siyao, L., Sun, W., Yin, Q., Yang, M.-H.: Quadratic video interpolation. In: Advances in Neural Information Processing Systems, pp. 1647–1656 (2019)

Park, J., Ko, K., Lee, C., Kim, C.-S.: Bmbc: Bilateral motion estimation with bilateral cost volume for video interpolation. ar**v preprint ar**v:2007.12622 (2020)

Chi, Z., Nasiri, R.M., , Z., Lu, J., Tang, J., Plataniotis, K.N.: All at once: Temporally adaptive multi-frame interpolation with advanced motion modeling. ar**v preprint ar**v:2007.11762 (2020)

Doersch, C., Gupta, A., Efros, A.A.: Unsupervised visual representation learning by context prediction. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1422–1430 (2015)

Pathak, D., Girshick, R., Dollár, P., Darrell, T., Hariharan, B.: Learning features by watching objects move. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2701–2710 (2017)

Wang, X., Gupta, A.: Unsupervised learning of visual representations using videos. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2794–2802 (2015)

He, K., Fan, H., Wu, Y., **e, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9729–9738 (2020)

Xu, D., **ao, J., Zhao, Z., Shao, J., **e, D., Zhuang, Y.: Self-supervised spatiotemporal learning via video clip order prediction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 10334–10343 (2019)

Lee, H.-Y., Huang, J.-B., Singh, M., Yang, M.-H.: Unsupervised representation learning by sorting sequences. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 667–676 (2017)

Fernando, B., Bilen, H., Gavves, E., Gould, S.: Self-supervised video representation learning with odd-one-out networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3636–3645 (2017)

Misra, I., Zitnick, C.L., Hebert, M.: Shuffle and learn: unsupervised learning using temporal order verification. In: European Conference on Computer Vision, pp. 527–544 (2016). Springer

Wei, D., Lim, J.J., Zisserman, A., Freeman, W.T.: Learning and using the arrow of time. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8052–8060 (2018)

Vondrick, C., Shrivastava, A., Fathi, A., Guadarrama, S., Murphy, K.: Tracking emerges by colorizing videos. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 391–408 (2018)

Wang, X., Jabri, A., Efros, A.A.: Learning correspondence from the cycle-consistency of time. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2566–2576 (2019)

Han, T., **e, W., Zisserman, A.: Video representation learning by dense predictive coding. In: Proceedings of the IEEE International Conference on Computer Vision Workshops (2019)

Han, T., **e, W., Zisserman, A.: Memory-augmented dense predictive coding for video representation learning. ar**v preprint ar**v:2008.01065 (2020)

Gordon, D., Ehsani, K., Fox, D., Farhadi, A.: Watching the world go by: Representation learning from unlabeled videos. ar**v preprint ar**v:2003.07990 (2020)

Mahajan, D., Huang, F.-C., Matusik, W., Ramamoorthi, R., Belhumeur, P.: Moving gradients: a path-based method for plausible image interpolation. ACM Trans. Gr. (TOG) 28(3), 1–11 (2009)

Meyer, S., Wang, O., Zimmer, H., Grosse, M., Sorkine-Hornung, A.: Phase-based frame interpolation for video. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1410–1418 (2015)

Shi, Z., Liu, X., Shi, K., Dai, L., Chen, J.: Video interpolation via generalized deformable convolution. ar**v preprint ar**v:2008.10680 (2020)

Liu, Y.-L., Liao, Y.-T., Lin, Y.-Y., Chuang, Y.-Y.: Deep video frame interpolation using cyclic frame generation. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 8794–8802 (2019)

Yuan, L., Chen, Y., Liu, H., Kong, T., Shi, J.: Zoom-in-to-check: Boosting video interpolation via instance-level discrimination. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 12183–12191 (2019)

Yu, Z., Li, H., Wang, Z., Hu, Z., Chen, C.W.: Multi-level video frame interpolation: exploiting the interaction among different levels. IEEE Trans. Circuits Syst. Video Technol. 23(7), 1235–1248 (2013)

Zhang, H., Zhao, Y., Wang, R.: A flexible recurrent residual pyramid network for video frame interpolation. (2019). ICCV

Huang, Z., Zhang, T., Heng, W., Shi, B., Zhou, S.: Rife: Real-time intermediate flow estimation for video frame interpolation. ar**, and cost volume. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8934–8943 (2018)

Liu, Y., **e, L., Siyao, L., Sun, W., Qiao, Y., Dong, C.: Enhanced quadratic video interpolation. In: European Conference on Computer Vision, pp. 41–56 (2020). Springer

Tulyakov, S., Gehrig, D., Georgoulis, S., Erbach, J., Gehrig, M., Li, Y., Scaramuzza, D.: Time lens: Event-based video frame interpolation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 16155–16164 (2021)

Kalluri, T., Pathak, D., Chandraker, M., Tran, D.: Flavr: Flow-agnostic video representations for fast frame interpolation. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 2071–2082 (2023)

Dutta, S., Subramaniam, A., Mittal, A.: Non-linear motion estimation for video frame interpolation using space-time convolutions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1726–1731 (2022)

Shi, Z., Xu, X., Liu, X., Chen, J., Yang, M.-H.: Video frame interpolation transformer. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 17482–17491 (2022)

Kim, H.H., Yu, S., Yuan, S., Tomasi, C.: Cross-attention transformer for video interpolation. In: Proceedings of the Asian Conference on Computer Vision, pp. 320–337 (2022)

Li, C., Wu, G., Sun, Y., Tao, X., Tang, C.-K., Tai, Y.-W.: H-vfi: Hierarchical frame interpolation for videos with large motions. ar**v preprint ar**v:2211.11309 (2022)

Reda, F., Kontkanen, J., Tabellion, E., Sun, D., Pantofaru, C., Curless, B.: Film: Frame interpolation for large motion. In: Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part VII, pp. 250–266 (2022). Springer

Singer, U., Polyak, A., Hayes, T., Yin, X., An, J., Zhang, S., Hu, Q., Yang, H., Ashual, O., Gafni, O., et al.: Make-a-video: Text-to-video generation without text-video data. ar**v preprint ar**v:2209.14792 (2022)

Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning spatiotemporal features with 3d convolutional networks. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4489–4497 (2015)

al., T.: A closer look at spatiotemporal convolutions for action recognition. In: CVPR (2018)

**e, S., Sun, C., Huang, J., Tu, Z., Murphy, K.: Rethinking spatiotemporal feature learning: Speed-accuracy trade-offs in video classification. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 305–321 (2018)

Carreira, J., Zisserman, A.: Quo vadis, action recognition? a new model and the kinetics dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6299–6308 (2017)

Feichtenhofer, C., Fan, H., Malik, J., He, K.: Slowfast networks for video recognition. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 6202–6211 (2019)

Shou, Z., Chan, J., Zareian, A., Miyazawa, K., Chang, S.-F.: CDC: Convolutional-de-convolutional networks for precise temporal action localization in untrimmed videos. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Xu, H., Das, A., Saenko, K.: R-c3d: Region convolutional 3d network for temporal activity detection. In: Proceedings of the International Conference on Computer Vision (ICCV) (2017)

Xu, J., Mei, T., Yao, T., Rui, Y.: Msr-vtt: A large video description dataset for bridging video and language. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-assisted Intervention, pp. 234–241 (2015). Springer

Tran, D., Wang, H., Feiszli, M., Torresani, L.: Video classification with channel-separated convolutional networks. In: ICCV (2019)

Odena, A., Dumoulin, V., Olah, C.: Deconvolution and checkerboard artifacts. Distill (2016). https://doi.org/10.23915/distill.00003

Miech, A., Laptev, I., Sivic, J.: Learnable pooling with context gating for video classification. ar**v preprint ar**v:1706.06905 (2017)

Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018)

Khurram, S., Amir, Z., Mubarak, S.: UCF101: A dataset of 101 human action classes from videos in the wild. CRCV-TR-12-01 (2012)

Perazzi, F., Pont-Tuset, J., McWilliams, B., Van Gool, L., Gross, M., Sorkine-Hornung, A.: A benchmark dataset and evaluation methodology for video object segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 724–732 (2016)

Nah, S., Hyun Kim, T., Mu Lee, K.: Deep multi-scale convolutional neural network for dynamic scene deblurring. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3883–3891 (2017)

Su, S., Delbracio, M., Wang, J., Sapiro, G., Heidrich, W., Wang, O.: Deep video deblurring for hand-held cameras. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1279–1288 (2017)

Nilsson, J., Akenine-Möller, T.: Understanding ssim. ar**v preprint ar**v:2006.13846 (2020)

Zhang, H., Zhao, Y., Wang, R.: A flexible recurrent residual pyramid network for video frame interpolation. In: European Conference on Computer Vision, pp. 474–491 (2020). Springer

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al.: An image is worth 16x16 words: Transformers for image recognition at scale. ar**v preprint ar**v:2010.11929 (2020)

Bertasius, G., Wang, H., Torresani, L.: Is space-time attention all you need for video understanding? In: ICML, vol. 2, p. 4 (2021)

Cheng, X., Chen, Z.: Multiple video frame interpolation via enhanced deformable separable convolution. ar**v preprint ar**v:2006.08070 (2020)

Park, J., Lee, C., Kim, C.-S.: Asymmetric bilateral motion estimation for video frame interpolation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 14539–14548 (2021)

Hore, A., Ziou, D.: Image quality metrics: Psnr vs. ssim. In: 2010 20th International Conference on Pattern Recognition, pp. 2366–2369 (2010). IEEE

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision, pp. 694–711 (2016). Springer

Baker, S., Scharstein, D., Lewis, J., Roth, S., Black, M.J., Szeliski, R.: A database and evaluation methodology for optical flow. Int. J. Comput. Vision 92(1), 1–31 (2011)

Scharstein, D., Hirschmüller, H., Kitajima, Y., Krathwohl, G., Nešić, N., Wang, X., Westling, P.: High-resolution stereo datasets with subpixel-accurate ground truth. In: German Conference on Pattern Recognition, pp. 31–42 (2014). Springer

Kuehne, H., Jhuang, H., Garrote, E., Poggio, T., Serre, T.: Hmdb: a large video database for human motion recognition. In: 2011 International Conference on Computer Vision, pp. 2556–2563 (2011). IEEE

Vondrick, C., Pirsiavash, H., Torralba, A.: Generating videos with scene dynamics. In: Advances in Neural Information Processing Systems, pp. 613–621 (2016)

Luo, Z., Peng, B., Huang, D.-A., Alahi, A., Fei-Fei, L.: Unsupervised learning of long-term motion dynamics for videos. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2203–2212 (2017)

Wulff, J., Black, M.J.: Temporal interpolation as an unsupervised pretraining task for optical flow estimation. In: German Conference on Pattern Recognition, pp. 567–582 (2018). Springer

Butler, D.J., Wulff, J., Stanley, G.B., Black, M.J.: A naturalistic open source movie for optical flow evaluation. In: A. Fitzgibbon et al. (Eds.) (ed.) European Conf. on Computer Vision (ECCV). Part IV, LNCS 7577, pp. 611–625. Springer, (2012)

Geiger, A., Lenz, P., Urtasun, R.: Are we ready for autonomous driving? the kitti vision benchmark suite. In: Conference on Computer Vision and Pattern Recognition (CVPR) (2012)

Menze, M., Geiger, A.: Object scene flow for autonomous vehicles. In: Conference on Computer Vision and Pattern Recognition (CVPR) (2015)

Ilg, E., Mayer, N., Saikia, T., Keuper, M., Dosovitskiy, A., Brox, T.: Flownet 2.0: Evolution of optical flow estimation with deep networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2462–2470 (2017)

Liu, P., Lyu, M., King, I., Xu, J.: Selflow: Self-supervised learning of optical flow. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4571–4580 (2019)

Pan, T., Song, Y., Yang, T., Jiang, W., Liu, W.: Videomoco: Contrastive video representation learning with temporally adversarial examples. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 11205–11214 (2021)

Tong, Z., Song, Y., Wang, J., Wang, L.: Videomae: Masked autoencoders are data-efficient learners for self-supervised video pre-training. ar**v preprint ar**v:2203.12602 (2022)

Jabri, A., Owens, A., Efros, A.A.: Space-time correspondence as a contrastive random walk. ar**v preprint ar**v:2006.14613 (2020)

Xu, J., Wang, X.: Rethinking self-supervised correspondence learning: A video frame-level similarity perspective. ar**v preprint ar**v:2103.17263 (2021)

Li, X., Liu, S., De Mello, S., Wang, X., Kautz, J., Yang, M.-H.: Joint-task self-supervised learning for temporal correspondence. ar**v preprint ar**v:1909.11895 (2019)

Acknowledgements

We would like to thank Adrian Smith for providing us with the insect videos. TK and MC are partially supported by NSF CAREER Award 1751365. TK is also supported by IPE PhD fellowship.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kalluri, T., Pathak, D., Chandraker, M. et al. FLAVR: flow-free architecture for fast video frame interpolation. Machine Vision and Applications 34, 83 (2023). https://doi.org/10.1007/s00138-023-01433-y

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00138-023-01433-y