Abstract

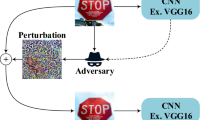

Adversarial attacks are deliberate manipulations of input data that may appear harmless to a human viewer yet cause a machine learning or deep learning system to misclassify the input. Such attacks frequently take the form of carefully constructed “noise” that may, for example, lead to a misdiagnosis. Deep Neural Networks (DNNs) are increasingly deployed in the physical world for applications that require high safety standards, including autonomous vehicles, cloud systems, and electronic health records. Autonomous vehicles rely mainly on their capacity to gather environmental data through embedded sensors, interpret it using DNN-based classification, and reach operational decisions. The security and dependability of DNNs therefore present numerous challenges for researchers, and the threat of adversarial attacks on DNNs is one of them. An adversarial attack typically involves a minor alteration of an original image, with the modifications being nearly imperceptible to human vision. In this survey, we describe the most recent studies on adversarial attack mechanisms against DNN-based frameworks and the countermeasures proposed to mitigate them. Our main focus is image-based adversarial attacks. We discuss each study's benefits, drawbacks, methodology, and experimental results.
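To make the idea of a minor, nearly imperceptible alteration concrete, the following is a minimal sketch and not a method taken from this chapter. It applies one signed-gradient step (in the style of the fast gradient sign method) to a toy logistic-regression classifier. The weights `w`, bias `b`, input `x`, and budget `eps` are all hypothetical values chosen for illustration; a real attack would target a trained DNN.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def signed_gradient_step(x, y, w, b, eps):
    """One signed-gradient step against a logistic-regression classifier.

    x: flattened image with pixel values in [0, 1]
    y: true label (0 or 1)
    eps: maximum change allowed per pixel (L-infinity budget)
    """
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w                  # gradient of the cross-entropy loss w.r.t. x
    x_adv = x + eps * np.sign(grad_x)     # move every pixel by at most eps
    return np.clip(x_adv, 0.0, 1.0)       # stay inside the valid pixel range

# Hypothetical toy setup: random weights stand in for a trained model.
rng = np.random.default_rng(0)
d = 28 * 28
w = rng.normal(size=d)
x = rng.uniform(0.2, 0.8, size=d)
b = 1.0 - w @ x                           # bias chosen so the clean score is about +1
y = 1                                     # the clean input is classified as class 1
eps = 0.01

x_adv = signed_gradient_step(x, y, w, b, eps)
clean_score = w @ x + b
adv_score = w @ x_adv + b
max_change = np.max(np.abs(x_adv - x))

print("clean score:", clean_score)
print("adversarial score:", adv_score)
print("largest per-pixel change:", max_change)
```

In this toy setting no pixel moves by more than `eps`, yet the sign of the classifier score flips, because many tiny per-pixel changes that all push in the same direction add up across a high-dimensional input.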




Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Vineetha, B., Suryaprasad, J., Shylaja, S.S., Honnavalli, P.B. (2024). A Deep Dive into Deep Learning-Based Adversarial Attacks and Defenses in Computer Vision: From a Perspective of Cybersecurity. In: Nagar, A.K., Jat, D.S., Mishra, D., Joshi, A. (eds) Intelligent Sustainable Systems. WorldS4 2023. Lecture Notes in Networks and Systems, vol 803. Springer, Singapore. https://doi.org/10.1007/978-981-99-7569-3_28


DOI: https://doi.org/10.1007/978-981-99-7569-3_28


Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-7568-6

Online ISBN: 978-981-99-7569-3

eBook Packages: Intelligent Technologies and Robotics (R0)