Abstract

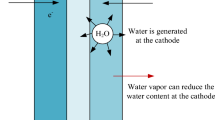

Water heating is the third-largest electricity consumer in U.S. households, after space heating and cooling. Water heaters therefore offer significant potential for reducing the electricity consumption and associated CO2 emissions of residential buildings. To this end, this study proposes a model-free deep reinforcement learning (RL) approach that aims to minimize the electricity consumption and CO2 emissions of a heat pump water heater without affecting user comfort. In this approach, a set of RL agents, each focusing on either electricity saving or emission reduction and each using a different look-ahead period, was trained with the deep Q-networks (DQN) algorithm, and the agents' performance was tested on different hot water usage and Marginal Operating Emissions Rate (MOER) profiles. The testing results showed that the RL agents focusing on electricity saving reduced electricity consumption by 12–22% by operating the water heater with maximum heat pump efficiency and minimum electric element utilization. The RL agents focusing on emission reduction, in turn, reduced emissions by 18–37% by exploiting the variable MOER values: they ran the heat pump and/or an electric element when MOER values were low due to the availability of renewable energy sources (e.g., solar and wind) and mostly avoided carbon-intensive periods. Overall, these results show that the proposed RL approach can help minimize the electricity consumption and CO2 emissions of a heat pump water heater without any prior knowledge of the device.
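
The difference between the two agent objectives can be illustrated with a minimal sketch. This is not the reward function used in the study, which the abstract does not specify; the function names, the comfort threshold, the penalty weight, and the units (kWh per interval, MOER in kg CO2 per kWh) are all assumptions made for illustration. The sketch only shows why an emission-focused agent has an incentive to shift heating to low-MOER intervals, while an electricity-focused agent is indifferent to timing when the energy used is the same.

```python
from typing import Sequence


def step_reward(
    energy_kwh: float,
    moer: float,
    tank_temp_c: float,
    objective: str,
    comfort_min_c: float = 45.0,    # hypothetical comfort threshold
    comfort_penalty: float = 10.0,  # hypothetical penalty weight
) -> float:
    """Negative cost for one control interval (illustrative only)."""
    if objective == "electricity":
        cost = energy_kwh            # penalize energy use directly
    elif objective == "emissions":
        cost = energy_kwh * moer     # weight energy use by the marginal emissions rate
    else:
        raise ValueError(f"unknown objective: {objective}")
    # Discourage letting the tank fall below the assumed comfort threshold.
    penalty = comfort_penalty if tank_temp_c < comfort_min_c else 0.0
    return -(cost + penalty)


def episode_return(
    energy: Sequence[float],
    moer: Sequence[float],
    temps: Sequence[float],
    objective: str,
) -> float:
    """Sum of per-interval rewards over one episode."""
    return sum(
        step_reward(e, m, t, objective) for e, m, t in zip(energy, moer, temps)
    )


# Two hypothetical schedules that use the same energy at different times.
moer_profile = [0.5, 0.25]   # second interval is cleaner (e.g., more solar)
temps = [50.0, 50.0]         # comfort is satisfied in both intervals
heat_early = [1.0, 0.0]
heat_late = [0.0, 1.0]

for name, schedule in (("early", heat_early), ("late", heat_late)):
    print(
        name,
        episode_return(schedule, moer_profile, temps, "electricity"),
        episode_return(schedule, moer_profile, temps, "emissions"),
    )
```

Under these assumed numbers, both schedules earn the same return for the electricity objective, whereas the emissions objective favors heating in the second, lower-MOER interval.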

Acknowledgements

This work was funded by the U.S. Department of Energy, Energy Efficiency and Renewable Energy, Building Technology Office under contract number DE-AC05-00OR22725. This manuscript has been authored by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this manuscript, or allow others to do so, for United States Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Amasyali, K., Munk, J., Kurte, K., Zandi, H. (2023). Deep Reinforcement Learning Based Smart Water Heater Control for Reducing Electricity Consumption and Carbon Emission. In: Wang, L.L., et al. Proceedings of the 5th International Conference on Building Energy and Environment. COBEE 2022. Environmental Science and Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-19-9822-5_105

DOI: https://doi.org/10.1007/978-981-19-9822-5_105

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-9821-8

Online ISBN: 978-981-19-9822-5

eBook Packages: Engineering, Engineering (R0)