Abstract

Spectral-Domain Optical Coherence Tomography (SD-OCT) is a non-invasive imaging modality that provides unprecedented 3D detail of retinal structures. In this paper, we propose an automated method to segment intra-retinal layers in OCT images acquired with a high-resolution SD-OCT Spectralis HRA+OCT (Heidelberg Engineering, Germany). The algorithm first removes OCT imaging artifacts, including speckle noise, and enhances the contrast between layers using 3D nonlinear anisotropic diffusion and ellipsoidal averaging filters. Eight retinal boundaries are then detected with a hybrid method that combines hysteresis thresholding, the level set method and multi-region continuous max-flow approaches. The segmentation results show that our method can effectively locate 8 surfaces in 3D macular images of varying quality.

1 Introduction

Optical Coherence Tomography (OCT) is a powerful biomedical tissue-imaging modality that can provide rich information, such as structure, blood flow, elastic parameters, changes of polarization state and molecular content [9]. It has therefore become increasingly useful in diagnosing eye diseases such as glaucoma, diabetic retinopathy and age-related macular degeneration, which are the most common causes of blindness in developed countries according to a World Health Organization (WHO) survey [14]. To help ophthalmologists diagnose eye diseases more accurately and efficiently, medical image processing techniques are applied to extract useful information from OCT data, such as retinal layers, retinal vessels, retinal lesions, the optic nerve head, the optic cup and the neuro-retinal rim. In this work, we focus on intra-retinal layer segmentation of 3D macular images.

There are two main reasons for intra-retinal layer segmentation [7]. First, the morphology and thickness of each intra-retinal layer are important indicators of the presence of ocular disease; for example, the thickness of the nerve fiber layer is an important indicator of glaucoma. Second, intra-retinal layer segmentation improves the understanding of the pathophysiology of systemic diseases; for instance, damage to the nerve fiber layer can indicate brain damage.

However, manually labelling each layer is time-consuming or even impractical for ophthalmologists, especially for macular images with complicated 3D layer structures. A reliable automated layer segmentation method is therefore attractive for computer-aided diagnosis. 3D OCT layer segmentation is a challenging problem, and there has been significant effort in this area over the last decade. Many approaches have been developed; however, no single segmentation method works equally well on macular images acquired with different imaging systems.

Most existing 3D macular segmentation approaches adopt a two-step process. The first step is de-noising, which removes speckle noise and enhances the contrast between layers (usually with a 3D anisotropic diffusion method, a 3D median filter, a 3D Gaussian filter or a 3D wavelet transform). The second step segments the layers according to image characteristics such as shapes, textures or intensities. Existing 3D OCT layer segmentation approaches can generally be classified into three groups: snake-based, pattern-recognition-based and graph-based methods.

Snake-based methods [11] minimize an energy defined as the sum of the internal and external energies of the current contour. These methods work well on images with high contrast, strong gradients and smooth boundaries between layers; however, their performance degrades in the presence of blood vessel shadows, other morphological features of the retina, or irregular layer shapes. Zhu et al. [18] proposed the FloatingCanvas method to segment 3D intra-retinal layers. This method produces relatively smooth layer surfaces but is sensitive to low gradients between layers. Yazdanpanah et al. [16] proposed an active contour method that incorporates circular shape prior information to segment intra-retinal layers from 3D OCT images. This method effectively overcomes the effects of blood vessel shadows and other morphological features of the retina, but it does not work well on images with irregular layer shapes.

Pattern-recognition-based techniques segment layers with a boundary classifier that labels each voxel as boundary or non-boundary. The classifier is learned under the supervision of reference layer boundaries. Fuller et al. [5] designed a multi-resolution hierarchical support vector machine (SVM) to segment OCT retinal layers. However, its accuracy is limited, with a line difference of 6 pixels and a thickness difference of 8 %. Lang et al. [12] trained a random forest classifier to segment retinal layers from macular images. However, the performance of pattern-recognition-based techniques depends heavily on the training set.

Graph-based methods aim to find the global minimum cut of a segmentation graph constructed from a regional term and a boundary term. Garvin et al. [6] proposed a 3D graph search method that constructs a geometric graph from edge and regional information, and successfully segmented five intra-retinal layers. This method was extended in [4], which combined graph theory and dynamic programming to segment the intra-retinal layers and located eight retinal layer boundaries. Although these methods provide good segmentation accuracy, they cannot segment all layer boundaries simultaneously and their processing is slow. Lee et al. [13] proposed a parallel graph search method to overcome these limitations. Kafieh et al. [10] proposed coarse-grained diffusion maps that rely on regional image texture without requiring edge-based image information, and accurately segmented ten layers. However, this method has high computational complexity and does not work well on abnormal images.

In this paper, we propose an automatic approach for segmenting macular layers using graph cut and level set methods. A de-noising step combining nonlinear anisotropic diffusion and an ellipsoidal averaging filter removes speckle noise and enhances the contrast between layers. The layer boundaries are then segmented with a combination of the classical region-based level set method, hysteresis thresholding and the multi-region continuous max-flow approach; all of these techniques exploit layer characteristics such as voxel intensities and layer positions.

This paper is organised as follows. A detailed description of the proposed method is presented in Sect. 2. This is followed by the experimental results in Sect. 3. Finally, conclusions are drawn in Sect. 4.

2 Methods

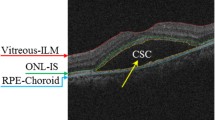

Intra-retinal layers are segmented in two major steps: a preprocessing step and a layer segmentation step. Figure 1 shows the segmentation pipeline. In the preprocessing step, the nonlinear anisotropic diffusion approach [8] and an ellipsoidal averaging filter are applied to the 3D macular images to remove speckle noise, enhance the contrast between object and background, and remove staircase noise. In the second step, seven intra-retinal boundaries are segmented with different methods, namely the level set method, the hysteresis method and the multi-region continuous max-flow algorithm, chosen according to the characteristics of each layer.

2.1 Preprocessing

Speckle noise is inherently introduced during OCT imaging of the retina. Figure 2(a) shows an original 3D macular image, which contains a significant level of speckle noise. The conventional anisotropic diffusion approach (Perona-Malik) [8] is used to remove the speckle noise and sharpen object boundaries. The nonlinear anisotropic diffusion filter is defined as:
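Written in the standard Perona-Malik form, with the symbols defined in the next paragraph, the diffusion evolves the intensity as:

```latex
\frac{\partial I(\bar{x},t)}{\partial t} = \operatorname{div}\bigl( c(\bar{x},t)\, \nabla I(\bar{x},t) \bigr)
```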

where the vector \(\bar{x}\) represents (x, y, z) and t is the process ordering parameter, \(I(\bar{x},t)\) is the macular voxel intensity, and \(c(\bar{x},t)\) is the diffusion strength control function, which depends on the magnitude of the gradient of the voxel intensity. The function \(c(\bar{x},t)\) is:
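Two conductance functions are standard in the Perona-Malik formulation; which of them is used here is an assumption, so both are given:

```latex
c(\bar{x},t) = \exp\left( -\left( \frac{\|\nabla I(\bar{x},t)\|}{\kappa} \right)^{2} \right)
\qquad \text{or} \qquad
c(\bar{x},t) = \frac{1}{1 + \left( \|\nabla I(\bar{x},t)\| / \kappa \right)^{2}}
```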

where \(\kappa \) is a constant chosen according to the noise level and edge strength. Finally, the voxel intensities are updated by the following formula:
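The following is a minimal NumPy sketch of an explicit discretization, \(I^{t+1} = I^{t} + \Delta t \sum_{k} c_{k}\,\nabla_{k} I^{t}\), where k runs over the six face neighbours of a voxel. The exponential conductance, the edge-replicated (zero-flux) borders, the default \(\kappa\) and the time step \(\Delta t = 1/7\) (below the 1/6 stability bound of this six-neighbour scheme) are assumptions, not the authors' settings; the function name is hypothetical.

```python
import numpy as np


def perona_malik_3d(volume, n_iter=10, kappa=30.0, dt=1.0 / 7.0):
    """Explicit 3D Perona-Malik diffusion (illustrative sketch).

    Assumes 6-neighbour finite differences, the exponential conductance
    c = exp(-(d / kappa)^2) evaluated per neighbour direction, zero-flux
    (edge-replicated) borders, and dt <= 1/6 for a stable explicit update.
    """
    I = np.asarray(volume, dtype=np.float64).copy()
    for _ in range(n_iter):
        P = np.pad(I, 1, mode="edge")  # replicate borders: no flux across them
        center = P[1:-1, 1:-1, 1:-1]
        flux = np.zeros_like(I)
        for axis in range(3):
            for shift in (-1, 1):
                sl = [slice(1, -1)] * 3
                sl[axis] = slice(1 + shift, P.shape[axis] - 1 + shift)
                d = P[tuple(sl)] - center  # difference to one face neighbour
                flux += np.exp(-(d / kappa) ** 2) * d  # conductance-weighted flux
        I += dt * flux  # I^{t+1} = I^t + dt * sum_k c_k * d_k
    return I
```

A larger \(\kappa\) lets diffusion cross stronger edges, so in this sketch \(\kappa\) has to be scaled to the intensity range of the volume being filtered.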

The filtered image is shown in Fig. 2(b). Because anisotropic diffusion produces stair-casing as a by-product, an ellipsoidal averaging filter is then applied to remove it. First, the filter function is defined as

where X, Y and Z are the mask sizes in the x, y and z directions, respectively. This mask is then convolved with a \(2\times 2\times 2\) array of ones to give f, and the final filter mask is normalized as \(f=f/\mathrm{sum}(f)\). The result of this filtering is shown in Fig. 2(c).
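A minimal NumPy sketch of the kernel construction described above. The binary ellipsoid \((x/X)^2+(y/Y)^2+(z/Z)^2 \le 1\) on an integer grid stands in for the filter function, whose exact form is an assumption here; the \(2\times 2\times 2\) ones convolution and the normalization follow the text. The helper names are hypothetical, and Z must be positive.

```python
import numpy as np


def _conv_ones2_full(a, axis):
    """Full convolution with [1, 1] along one axis (length grows by one)."""
    a = np.moveaxis(a, axis, 0)
    p = np.pad(a, [(1, 1)] + [(0, 0)] * (a.ndim - 1))  # zero padding
    return np.moveaxis(p[1:] + p[:-1], 0, axis)


def ellipsoid_kernel(X=3, Y=3, Z=1):
    """Binary ellipsoid with semi-axes X, Y, Z (assumed form), convolved with
    a 2x2x2 array of ones and normalised to unit sum: f = f / sum(f)."""
    x, y, z = np.meshgrid(np.arange(-X, X + 1), np.arange(-Y, Y + 1),
                          np.arange(-Z, Z + 1), indexing="ij")
    f = ((x / X) ** 2 + (y / Y) ** 2 + (z / Z) ** 2 <= 1.0).astype(np.float64)
    for axis in range(3):  # ones(2, 2, 2) separates into three [1, 1] passes
        f = _conv_ones2_full(f, axis)
    return f / f.sum()


def apply_kernel(volume, k):
    """Same-size filtering with edge padding. The kernel is symmetric, so
    correlation and convolution give the same result."""
    pads = [(s // 2, s - 1 - s // 2) for s in k.shape]
    p = np.pad(np.asarray(volume, dtype=np.float64), pads, mode="edge")
    out = np.zeros(np.shape(volume))
    for idx in np.ndindex(*k.shape):
        if k[idx]:
            window = tuple(slice(i, i + n) for i, n in zip(idx, out.shape))
            out += k[idx] * p[window]  # accumulate weighted shifted copies
    return out
```

The extra ones convolution enlarges the kernel by one voxel per axis and softens the ellipsoid's binary edge, which keeps the averaging from reintroducing blocky artifacts.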

2.2 Vitreous and Choroid Boundaries Segmentation

The level set method has been extensively applied to image segmentation. There are two major classes of level set models: region-based and edge-based. Edge-based models use local edge information to drive the active contour to the object boundaries, whereas region-based models use a region descriptor to identify each region of interest and guide the active contour to the desired boundary. In this study, the classical region-based Chan-Vese model [3] is used to locate the vitreous and choroid boundaries in 3D macular images.

Because each layer has different characteristics, different methods are applied to segment different layers. After de-noising, most of the speckle noise has been removed and the contrast between background and object is enhanced. The level set method is used to segment the vitreous and choroid boundaries because it works well when there is a large gradient between the retinal tissue and the background.

The energy function of the Chan-Vese method is defined as:
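In level set form, and with \(c_{1}\) taken as the mean outside C and \(c_{2}\) as the mean inside C as in the definitions that follow, the Chan-Vese energy [3] can be written as below; the length weight \(\mu\) is not named in the text and is an assumption here:

```latex
E(c_{1},c_{2},\phi) = \mu \int_{\Omega} \delta(\phi)\,|\nabla \phi|\,d\bar{x}
  + \nu \int_{\Omega} H(\phi)\,d\bar{x}
  + \lambda_{1} \int_{\Omega} |I(\bar{x}) - c_{1}|^{2}\,\bigl(1 - H(\phi)\bigr)\,d\bar{x}
  + \lambda_{2} \int_{\Omega} |I(\bar{x}) - c_{2}|^{2}\,H(\phi)\,d\bar{x}
```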

where \(\lambda _{1}\) and \(\lambda _{2}\) are fixed parameters set by the user and \(\nu \) is set to zero. In addition, outside(\(\textit{C}\)) and inside(\(\textit{C}\)) denote the regions outside and inside the contour \(\mathbf {\textit{C}}\), respectively, and \(c_{1}\) and \(c_{2}\) are the average image intensities of outside(\(\textit{C}\)) and inside(\(\textit{C}\)). \(\phi \) is a signed distance function (SDF) that is positive inside C, negative outside C and zero on C. The regularized Heaviside function H and the average intensities \(c_{1}\) and \(c_{2}\) are formulated as:

and

Using the calculus of variations [1], the energy functional \(E(\phi )\) is minimized with respect to \(\phi \) by gradient descent:

where \(\frac{\partial {E(\phi )}}{\partial {\phi }}\) is the Gâteaux derivative [1] of the energy functional \(E(\phi )\). Equation (4) is derived using the Euler-Lagrange equation [15], which gives the gradient flow:
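A minimal NumPy sketch of this gradient flow for the energy written with \(c_{1}\) as the mean outside C and \(c_{2}\) as the mean inside C (\(\phi>0\) inside): \(\partial\phi/\partial t = \delta_{\varepsilon}(\phi)\,[\mu\,\mathrm{div}(\nabla\phi/|\nabla\phi|) - \nu + \lambda_{1}(I-c_{1})^{2} - \lambda_{2}(I-c_{2})^{2}]\), with the regularized \(H_{\varepsilon}(z)=\frac{1}{2}(1+\frac{2}{\pi}\arctan(z/\varepsilon))\) of Chan and Vese [3]. Explicit time stepping, the length weight \(\mu\), the parameter defaults and the absence of SDF re-initialization are assumptions; the sketch works on 2D or 3D arrays.

```python
import numpy as np


def heaviside(phi, eps=1.0):
    # Regularised Heaviside H_eps(z) = 0.5 * (1 + (2/pi) * arctan(z / eps)).
    return 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(phi / eps))


def dirac(phi, eps=1.0):
    # Derivative of H_eps: delta_eps(z) = eps / (pi * (eps^2 + z^2)).
    return eps / (np.pi * (eps ** 2 + phi ** 2))


def curvature(phi, tiny=1e-8):
    # div(grad(phi) / |grad(phi)|) with central differences (2D or 3D arrays).
    grads = np.gradient(phi)
    norm = np.sqrt(sum(g ** 2 for g in grads)) + tiny
    return sum(np.gradient(g / norm, axis=i) for i, g in enumerate(grads))


def chan_vese(image, phi, mu=0.1, nu=0.0, lam1=1.0, lam2=1.0,
              dt=1.0, n_iter=500, eps=1.0):
    I = np.asarray(image, dtype=np.float64)
    phi = np.asarray(phi, dtype=np.float64).copy()
    for _ in range(n_iter):
        H = heaviside(phi, eps)
        c1 = (I * (1 - H)).sum() / ((1 - H).sum() + 1e-12)  # mean outside C
        c2 = (I * H).sum() / (H.sum() + 1e-12)              # mean inside C
        force = (mu * curvature(phi) - nu
                 + lam1 * (I - c1) ** 2 - lam2 * (I - c2) ** 2)
        phi += dt * dirac(phi, eps) * force  # explicit gradient-descent step
    return phi
```

In this sketch \(\phi\) is initialized with a rough guess of the region (for example a sphere or disk), and the sign of the converged \(\phi\) gives the segmented region.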

2.3 NFL, GCL-IPL, INL, OPL, ONL-IS, OS, RPE Boundaries Segmentation

After locating the vitreous and choroid boundaries, we define a region that contains all the layers (see Fig. 3(b)). Because of the low intensities of the OS-RPE layers, a 3D hysteresis method is used to locate the IS boundary; it uses two threshold values and a loop to produce a better-connected segmentation. Furthermore, the method exploits 3D connectivity by filling image regions and holes to produce a smooth boundary. The hysteresis method locates the IS boundary efficiently and accurately and divides the 3D cube into two parts.
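The two-threshold connectivity rule can be sketched as follows: voxels above the high threshold seed a breadth-first search that keeps 6-connected voxels above the low threshold. This covers only the core hysteresis rule; the loop and the region and hole filling mentioned above are not reproduced, and the function name and thresholds are illustrative.

```python
from collections import deque

import numpy as np


def hysteresis_3d(volume, low, high):
    """Keep voxels >= low that are 6-connected to at least one voxel >= high."""
    strong = volume >= high
    weak = volume >= low
    keep = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))  # start from every strong voxel
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= c < s for c, s in zip(n, volume.shape))
            if inside and weak[n] and not keep[n]:
                keep[n] = True  # weak voxel reached from a strong seed
                queue.append(n)
    return keep
```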

To reduce the computational load and increase the segmentation speed, we further split the region into two parts: an upper part (Fig. 3(d)) and a lower part (Fig. 3(c)). As Fig. 3(c) and (d) show, the intensity variation between layers makes them easy to distinguish. The multi-region continuous max-flow method (Potts model), described in detail below, is applied to segment both parts. In the upper part, the NFL, GCL-IPL, INL, OPL and ONL-IS boundaries are segmented; in the lower part, the OS and RPE boundaries are located.

Graph cut is an interactive image segmentation method introduced by Boykov and Jolly [2]. It finds the globally optimal cut of an image by minimizing a segmentation energy that consists of a regional term and a boundary term. The regional term is defined by the likelihoods of foreground (object) and background, while the boundary term smooths the boundary based on voxel intensities, textures, colors, etc. Here, the multi-region continuous max-flow (Potts model) method is used to segment the upper and lower parts to obtain the NFL, GCL-IPL, INL, OPL and ONL-IS boundaries and the OS and RPE boundaries, respectively.

Graph Construction and Min-Cut. Each 3D macular image is represented as a graph G\((\nu ,\xi )\) consisting of a set of vertices \(\nu \) and a set of edges \(\xi \subset \nu \times \nu \). The graph contains two terminal vertices: the source s (foreground) and the sink t (background). There are two types of edges: spatial edges and terminal edges. The spatial edges (n-links) connect neighbouring non-terminal vertices, and the terminal edges connect each voxel in the image to the terminals s and t. In other words, each voxel \(p \in \nu \backslash \{s,t\}\) is connected to the terminal s by an s-link and to the terminal t by a t-link. Each edge \(e\in \xi \) is assigned a weight \(w_{e}\ge 0\).

A cut is a subset of edges \(C \subset \xi \) that separates the macular image into two or more disjoint regions. Assigning each vertex to either the source s or the sink t cuts the graph into two disjoint regions; this is called an s-t cut. The mathematical expressions are:

The optimal cut is the one that minimizes the sum of the weights of the cut edges. The corresponding cut energy is defined as:

Let \(A=(A_{1},\ldots ,A_{p},\ldots ,A_{P})\) be a binary vector whose component \(A_{p}\) labels voxel p as object or background. The energy function can be rewritten as:
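Following Boykov and Jolly [2], the two terms are combined as:

```latex
E(A) = \lambda \, R(A) + B(A)
```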

where R(A) is the regional term, B(A) is the boundary term and \(\lambda \) is a nonnegative coefficient that sets the relative importance of R(A) with respect to B(A). The object and background intensity distributions, Pr(I|O) and Pr(I|B), are estimated from the voxel intensities of the selected seeds. The regional penalty \(R_{p}(\cdot )\) assigns the likelihood of voxel p to object and background as:
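In the formulation of Boykov and Jolly [2], the penalties are negative log-likelihoods:

```latex
R_{p}(\text{``obj''}) = -\ln \Pr(I_{p} \mid O), \qquad R_{p}(\text{``bkg''}) = -\ln \Pr(I_{p} \mid B)
```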

The regional term can be expressed as:

The boundary term B(A) is formulated as:

where \(\delta (A_{p}, A_{q})\) = 1 if \(A_{p}\ne A_{q}\) and 0 otherwise, so that only neighbouring voxels with different labels are penalized. The boundary penalty \(B_{\{p,q\}}\) is defined as:
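A common choice, used by Boykov and Jolly [2], is given below; \(\sigma\) (an assumed noise-scale parameter) and \(\mathrm{dist}(p,q)\) (the distance between voxels p and q) are not defined elsewhere in this text:

```latex
B_{\{p,q\}} \propto \exp\left( -\frac{(I_{p} - I_{q})^{2}}{2\sigma^{2}} \right) \cdot \frac{1}{\operatorname{dist}(p,q)}
```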

\(B_{\{p,q\}}\) is large when the intensities of voxels p and q are similar and close to 0 when they are different.
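To make the construction concrete, here is a small pure-Python sketch on a 1D signal: t-link weights \(\lambda R_{p}\), n-link weights \(B_{\{p,q\}}\), and a minimum s-t cut found with the Edmonds-Karp max-flow algorithm. The Gaussian intensity models with known means (instead of distributions estimated from seeds), the \(\sigma\) values, the absence of hard seed constraints and the choice of Edmonds-Karp are assumptions for illustration; the constant part of \(-\ln\Pr\) is dropped because every labelling pays it once per voxel.

```python
import math
from collections import deque


def min_cut_source_side(cap, s, t):
    """Edmonds-Karp max flow; returns which nodes lie on the source side."""
    n = len(cap)
    res = [row[:] for row in cap]  # residual capacities
    while True:
        parent = [-1] * n
        parent[s] = s
        queue = deque([s])
        while queue and parent[t] == -1:  # BFS for a shortest augmenting path
            u = queue.popleft()
            for v in range(n):
                if parent[v] == -1 and res[u][v] > 1e-12:
                    parent[v] = u
                    queue.append(v)
        if parent[t] == -1:  # no augmenting path left: flow is maximal
            return [parent[v] != -1 for v in range(n)]
        f, v = math.inf, t
        while v != s:  # bottleneck capacity along the path
            f, v = min(f, res[parent[v]][v]), parent[v]
        v = t
        while v != s:  # push flow and update the residual graph
            u = parent[v]
            res[u][v] -= f
            res[v][u] += f
            v = u


def graph_cut_1d(signal, mu_obj, mu_bkg, sigma=0.1, sigma_b=0.1, lam=1.0):
    """Binary object/background labelling of a 1D signal by an s-t min cut."""
    n = len(signal)
    S, T = n, n + 1
    cap = [[0.0] * (n + 2) for _ in range(n + 2)]
    for p, x in enumerate(signal):
        r_obj = 0.5 * ((x - mu_obj) / sigma) ** 2  # -ln Pr(x | O) + const
        r_bkg = 0.5 * ((x - mu_bkg) / sigma) ** 2  # -ln Pr(x | B) + const
        cap[S][p] = lam * r_bkg  # cut when p ends up as background
        cap[p][T] = lam * r_obj  # cut when p ends up as object
    for p in range(n - 1):  # n-links between neighbours at unit distance
        b = math.exp(-((signal[p] - signal[p + 1]) ** 2) / (2 * sigma_b ** 2))
        cap[p][p + 1] = cap[p + 1][p] = b
    return min_cut_source_side(cap, S, T)[:n]  # True = object (source side)
```

The dense capacity matrix keeps the sketch short but grows quadratically with the number of voxels; volumes of the size used here call for sparse graphs and dedicated max-flow solvers.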

Multi-region Potts Model. The continuous max-flow approach to the Potts model was proposed by Yuan et al. [17] to segment an image into n disjoint regions \(\{\varOmega _{i}\}_{i=1}^{n}\). It replaces the boundary term of the original model with the perimeter of each region, so that the segmentation functional becomes:
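In the notation of Yuan et al. [17], the Potts model reads:

```latex
\min_{\{\varOmega_{i}\}_{i=1}^{n}} \ \sum_{i=1}^{n} \int_{\varOmega_{i}} C_{i}(x)\,dx + \alpha \sum_{i=1}^{n} |\partial \varOmega_{i}|
\quad \text{s.t.} \quad \bigcup_{i=1}^{n} \varOmega_{i} = \varOmega, \quad \varOmega_{k} \cap \varOmega_{l} = \emptyset \ (k \neq l)
```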

where \(|\partial \varOmega _{i}|\) is the perimeter of the disjoint region \(\varOmega _{i}\), i = 1, …, n, \(\alpha \) is a positive weight on \(|\partial \varOmega _{i}|\) that trades off the two terms, and the function \(C_{i}(x)\) is the cost of assigning x to region \(\varOmega _{i}\). Using the piecewise constant Mumford-Shah functional, the functional can be rewritten as:

where \(u_{i}(x)\), i = 1, …, n, is the indicator function of the region \(\varOmega _{i}\), i.e. \(u_{i}(x)=1\) if \(x\in \varOmega _{i}\) and \(u_{i}(x)=0\) otherwise.

To solve the Potts-model-based image segmentation, a convex relaxation is introduced:
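Following Yuan et al. [17], the binary indicator constraint \(u_{i}(x)\in\{0,1\}\) is relaxed to the simplex, which gives the convex problem:

```latex
\min_{u \in S} \ \sum_{i=1}^{n} \int_{\varOmega} u_{i}(x)\,C_{i}(x)\,dx + \alpha \sum_{i=1}^{n} \int_{\varOmega} |\nabla u_{i}(x)|\,dx,
\qquad \triangle_{+} = \Bigl\{ (u_{1},\dots,u_{n}) : \sum_{i=1}^{n} u_{i} = 1,\ u_{i} \ge 0 \Bigr\}
```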

where S is the convex constraint set \(\{u(x)=(u_{1}(x),\dots ,u_{n}(x))\in \triangle _{+},\ \forall x\in \varOmega \}\) and \(\triangle _{+}\) is the simplex set. This multi-terminal ‘cut’ problem is solved with the continuous multi-label max-flow algorithm [17].
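The continuous max-flow solver itself is beyond a short example, but the discrete counterpart of the energy it minimizes can be evaluated directly. The sketch below scores a 2D labelling under a discrete Potts energy, counting 4-neighbour pairs that straddle the boundary of each region as a stand-in for \(|\partial\varOmega_{i}|\); the perimeter approximation and the function name are assumptions.

```python
import numpy as np


def potts_energy(labels, costs, alpha):
    """Discrete Potts energy: sum_i [ sum_{x in Omega_i} C_i(x) + alpha * |dOmega_i| ].

    labels: (H, W) integer array with values in 0..n-1.
    costs:  (n, H, W) array with costs[i] = C_i(x).
    |dOmega_i| is approximated by the number of 4-neighbour pairs with exactly
    one voxel in Omega_i (the image border is not counted).
    """
    data = np.take_along_axis(costs, labels[None], axis=0).sum()  # data term
    perimeter = 0
    for i in range(costs.shape[0]):
        u = labels == i  # indicator function u_i
        perimeter += (u[1:, :] != u[:-1, :]).sum() + (u[:, 1:] != u[:, :-1]).sum()
    return data + alpha * perimeter
```

Each interface is counted once for each of the two regions it separates, matching the sum over i of the region perimeters.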

3 Experiments

Images used in this study were acquired with a Heidelberg SD-OCT Spectralis HRA imaging system (Heidelberg Engineering, Heidelberg, Germany) at Tongren Hospital. Non-invasive OCT imaging was performed on 13 subjects aged from 20 to 85 years. This imaging protocol, which is widely used to diagnose both retinal diseases and glaucoma, provides 3D images with 256 B-scans, 512 A-scans, 992 pixels in depth and 16 bits per pixel. Because of the large number of B-scans, manually grading all B-scans of the 13 subjects would be very time-consuming. Therefore, to evaluate the proposed method, the ground truth was produced by human experts who manually labelled a number of positions on a fixed grid; the remaining positions were interpolated.

3.1 Results

To provide a quantitative evaluation of our method, we compare the segmentation with the ground truth using four performance measures: (1) signed mean error (\(\mu _{sign}\)), (2) signed standard deviation (\(\sigma _{sign}\)), (3) unsigned mean error (\(\mu _{unsign}\)) and (4) unsigned standard deviation (\(\sigma _{unsign}\)). These metrics are defined as:

where \(G_{i,j}\) and \(S_{i,j}\) are the ground-truth and proposed segmentation positions of a surface at grid location (i, j), and M = 25 and N = 512.
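A minimal NumPy sketch of the four measures, assuming the signed error is \(S_{i,j}-G_{i,j}\) (the sign convention is not stated here) and population standard deviations, e.g. \(\mu_{sign}=\frac{1}{MN}\sum_{i,j}(S_{i,j}-G_{i,j})\) and \(\mu_{unsign}=\frac{1}{MN}\sum_{i,j}|S_{i,j}-G_{i,j}|\). The function name is hypothetical.

```python
import numpy as np


def surface_errors(G, S):
    """Signed and unsigned mean/SD of S - G over an M x N grid (e.g. 25 x 512)."""
    d = np.asarray(S, dtype=float) - np.asarray(G, dtype=float)  # assumed sign
    a = np.abs(d)
    return {"mu_sign": d.mean(), "sigma_sign": d.std(),
            "mu_unsign": a.mean(), "sigma_unsign": a.std()}
```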

Our method successfully located the eight intra-retinal surfaces in all 30 3D macular images without any segmentation failure. The segmentation results are consistent with visual observation and were confirmed as accurate by experts from the hospital. A direct comparison with previously published results would not be meaningful because different datasets were used in those experiments. The signed and unsigned mean and standard deviation (SD) of the difference between the ground truth and the proposed segmentation for the eight surfaces are given in Table 1. In terms of the signed difference, surface 4 performs best (\(-\)0.67\(\pm \)1.53), while in terms of the unsigned difference, surface 3 performs best (around 1.22\(\pm \)1.93).

Table 2 lists the average thickness of each of the seven layers and the overall thickness over the 30 volume images, together with the absolute and relative thickness differences between the ground truth and the proposed segmentation. The average overall thickness is around 119.07; the GCL+IPL and ONL+IS layers are relatively thick (25.88 and 26.19, respectively) because each combines two layers, and the thinnest layer is the OPL (8.48). The absolute and relative thickness differences of the overall retina are 1.98\(\pm \)1.69 and \(-\)0.93\(\pm \)1.79, respectively.
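The thickness statistics can be sketched in the same way. Here surfaces are stored as a (K, M, N) array of depths ordered from top to bottom, layer thickness is the difference between consecutive surfaces, the overall thickness spans the first to the last surface, and the relative difference is expressed in percent of the ground-truth thickness; the array layout, the percent convention and the function name are assumptions.

```python
import numpy as np


def thickness_differences(G_surf, S_surf):
    """Absolute and relative thickness differences per layer plus overall.

    G_surf, S_surf: (K, M, N) surface depths ordered from top to bottom.
    Returns (abs mean, abs SD, rel mean, rel SD), each of length K, where the
    first K-1 entries are layers and the last entry is the overall retina.
    """
    G = np.asarray(G_surf, dtype=float)
    S = np.asarray(S_surf, dtype=float)
    tg = np.concatenate([np.diff(G, axis=0), G[-1:] - G[:1]])  # layers + overall
    ts = np.concatenate([np.diff(S, axis=0), S[-1:] - S[:1]])
    abs_diff = np.abs(ts - tg)
    rel_diff = 100.0 * (ts - tg) / tg  # assumes non-zero ground-truth thickness
    axes = (1, 2)
    return abs_diff.mean(axes), abs_diff.std(axes), rel_diff.mean(axes), rel_diff.std(axes)
```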

Figure 4 shows the eight segmented intra-retinal boundaries on an example B-scan. Three examples of 3D segmentation results are shown in Fig. 5. Figure 6 illustrates the segmentation results on 12 example B-scans from one segmented 3D macular image, where Fig. 6(a)–(m) range from the 30th to the 230th B-scan.

The retinal thickness maps of all the layers are important indicators for diagnosis and understanding of retinal pathologies. Therefore, after an accurate segmentation of the eight retinal boundaries, we generate the thickness maps of seven retinal layers. Figure 7 shows the thickness maps of all the retinal layers, which includes thickness maps of layer 1 to layer 7, layers above OS and total retinal layers.

The proposed approach was implemented in MATLAB R2011b and run on an Intel(R) Core(TM) i5-2500 CPU (3.3 GHz) with 8 GB of RAM.

4 Conclusions and Discussions

In this paper, we have presented a novel hybrid intra-retinal layer segmentation method that combines hysteresis thresholding, the CV-model-based level set method and the Potts-model-based multi-region continuous max-flow method. Different methods are applied to different layers according to their characteristics, so that each layer is segmented accurately and efficiently. The method follows a typical two-stage process: a de-noising step and a segmentation step. As preprocessing, nonlinear anisotropic diffusion and an ellipsoidal averaging filter are used to filter speckle noise and enhance the contrast between layers. The segmentation results show that our approach detects seven layers accurately in 3D macular images without failure.

The overall segmentation process may look overcomplicated, as it involves three different methods at different stages, namely the level set method, the hysteresis thresholding method and the multi-region continuous max-flow method. Using a single method to segment all layers simultaneously may seem more concise. However, our experiments show that such an approach would demand much more memory and much longer computation time, simply because of the large size of the 3D images. If methods such as sub-sampling are used to reduce the data size and computation time, segmentation accuracy degrades. Moreover, without sub-sampling, a single method such as the level set method or graph cut cannot segment all layers of our 3D data simultaneously because of insufficient memory and high computational complexity. In contrast, our approach delivers better performance with less computation. In particular, the level set method first segments the volume region containing all six middle layers, the simple and fast hysteresis thresholding method then partitions this region into two parts along the most easily detected boundary, between the ONL-IS and OS layers, and finally the multi-region max-flow method segments the individual layers in the upper and lower parts.

References

[1] Aubert, G., Kornprobst, P.: Mathematical Problems in Image Processing: Partial Differential Equations and the Calculus of Variations, vol. 147. Springer, New York (2006)

[2] Boykov, Y.Y., Jolly, M.P.: Interactive graph cuts for optimal boundary & region segmentation of objects in N-D images. In: International Conference on Computer Vision, vol. 1, pp. 105–112. IEEE (2001)

[3] Chan, T.F., Vese, L.A.: Active contours without edges. IEEE Trans. Image Process. 10(2), 266–277 (2001)

[4] Chiu, S.J., Li, X.T., Nicholas, P., Toth, C.A., Izatt, J.A., Farsiu, S.: Automatic segmentation of seven retinal layers in SDOCT images congruent with expert manual segmentation. Opt. Express 18(18), 19413–19428 (2010)

[5] Fuller, A.R., Zawadzki, R.J., Choi, S., Wiley, D.F., Werner, J.S., Hamann, B.: Segmentation of three-dimensional retinal image data. IEEE Trans. Vis. Comput. Graph. 13(6), 1719–1726 (2007)

[6] Garvin, M.K., Abràmoff, M.D., Kardon, R., Russell, S.R., Wu, X., Sonka, M.: Intraretinal layer segmentation of macular optical coherence tomography images using optimal 3-D graph search. IEEE Trans. Med. Imaging 27(10), 1495–1505 (2008)

[7] Garvin, M.K., Abràmoff, M.D., Wu, X., Russell, S.R., Burns, T.L., Sonka, M.: Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images. IEEE Trans. Med. Imaging 28(9), 1436–1447 (2009)

[8] Gerig, G., Kubler, O., Kikinis, R., Jolesz, F.A.: Nonlinear anisotropic filtering of MRI data. IEEE Trans. Med. Imaging 11(2), 221–232 (1992)

[9] Huang, D., Swanson, E.A., Lin, C.P., Schuman, J.S., Stinson, W.G., Chang, W., Hee, M.R., Flotte, T., Gregory, K., Puliafito, C.A., et al.: Optical coherence tomography. Science 254(5035), 1178–1181 (1991)

[10] Kafieh, R., Rabbani, H., Abramoff, M.D., Sonka, M.: Intra-retinal layer segmentation of 3D optical coherence tomography using coarse grained diffusion map. Med. Image Anal. 17(8), 907–928 (2013)

[11] Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 1(4), 321–331 (1988)

[12] Lang, A., Carass, A., Hauser, M., Sotirchos, E.S., Calabresi, P.A., Ying, H.S., Prince, J.L.: Retinal layer segmentation of macular OCT images using boundary classification. Biomed. Opt. Express 4(7), 1133–1152 (2013)

[13] Lee, K., Abràmoff, M.D., Garvin, M.K., Sonka, M.: Parallel graph search: application to intraretinal layer segmentation of 3-D macular OCT scans. In: SPIE Medical Imaging, pp. 83141H–83141H (2012)

[14] World Health Organization: Coding Instructions for the WHO/PBL Eye Examination Record (Version III). WHO, Geneva (1988)

[15] Smith, B., Saad, A., Hamarneh, G., Möller, T.: Recovery of dynamic PET regions via simultaneous segmentation and deconvolution. In: Analysis of Functional Medical Image Data, pp. 33–40 (2008)

[16] Yazdanpanah, A., Hamarneh, G., Smith, B.R., Sarunic, M.V.: Segmentation of intra-retinal layers from optical coherence tomography images using an active contour approach. IEEE Trans. Med. Imaging 30(2), 484–496 (2011)

[17] Yuan, J., Bae, E., Tai, X.-C., Boykov, Y.: A continuous max-flow approach to Potts model. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010, Part VI. LNCS, vol. 6316, pp. 379–392. Springer, Heidelberg (2010)

[18] Zhu, H., Crabb, D.P., Schlottmann, P.G., Ho, T., Garway-Heath, D.F.: FloatingCanvas: quantification of 3D retinal structures from spectral-domain optical coherence tomography. Opt. Express 18(24), 24595–24610 (2010)

Acknowledgments

The authors would like to thank Yuan Jing for providing the source code of the continuous max-flow algorithm.

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Wang, C., Wang, Y., Kaba, D., Wang, Z., Liu, X., Li, Y. (2015). Automated Layer Segmentation of 3D Macular Images Using Hybrid Methods. In: Zhang, YJ. (eds) Image and Graphics. ICIG 2015. Lecture Notes in Computer Science(), vol 9217. Springer, Cham. https://doi.org/10.1007/978-3-319-21978-3_54

DOI: https://doi.org/10.1007/978-3-319-21978-3_54

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21977-6

Online ISBN: 978-3-319-21978-3

eBook Packages: Computer Science, Computer Science (R0)