Abstract

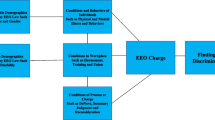

Many procedures for making personnel selection decisions are designed to reflect job-relevant factors, yet they also tend to produce substantial differences in employment outcomes across racial/ethnic and gender groups. These statistical disparities, regardless of their cause, are generally described under the heading of “adverse impact.” Adverse impact statistics serve as evidence in many employment discrimination lawsuits and can be used to set diversity goals and evaluate the progress of affirmative action programs. In this chapter, we review contemporary adverse impact analyses and the contextual factors that may affect the appropriateness of those analyses. We discuss the relevant Equal Employment Opportunity (EEO) context, different forms of discrimination, and how to structure employment data for analysis. We then discuss a variety of adverse impact analysis strategies, including both statistical significance testing and practical significance measurement. We conclude with a framework for conducting adverse impact analyses and a brief primer on more complex scenarios.

The first two authors contributed equally to this chapter.

Notes

1. This is in addition to the broader controversy related to the concept of unintentional discrimination more generally (e.g., McDaniel et al. 2011).

2. Methods for establishing that a selection procedure is job-related and consistent with business necessity are covered by Loverde and Lahti as well as Tonowski in this volume.

3. For more on regulatory coverage and agency issues, please refer to Gutman et al. (2010).

4. Gutman et al. (2010) note that these types of stock statistic comparisons are typically more relevant to pattern or practice claims.

5. Specifically, the 4/5ths rule (described later in this chapter) will often produce different results when applied to selection versus rejection decisions (Bobko and Roth 2004).

6. There are a number of other nuances related to data structure that are outside the scope of this chapter (e.g., distinguishing job seekers from applicants, how declined offers are treated in the analysis, etc.). Interested readers should refer to Cohen et al. (2010) for more detail.

7. It is interesting to consider whether inferences to a population may be difficult to conceptualize in scenarios where an entire population of interest is already available for analysis (e.g., a workforce). To our knowledge, this issue has not been considered in case law.

8. As discussed by Murphy and Jacobs (2012), the use of the term “standard deviation” is somewhat misleading, because this phrase refers to a descriptive measure of the variability of a distribution. The more appropriate term here is “standard error,” which describes the variability in a test statistic due to random fluctuation across samples.

9. This is analogous to the p-value in the Z and chi-square tests. The difference is how the p-value is computed. Whereas the FET sums the exact probabilities of all outcomes at least as extreme as the one observed, the Z and chi-square tests assume that passing rates are normally distributed, and compute the p-value from the area under the normal or chi-square distribution.

10. The hypergeometric distribution describes the probability of obtaining a specified number of successes (i.e., number passing a test) when a dichotomous outcome is sampled from a finite population without replacement. In the context of adverse impact analysis, it provides the probability of obtaining NP_min successful outcomes if N_min cases were randomly sampled without replacement from a population with N individuals, of whom NP_T are successful.

11. It is worth noting that the 0.05 alpha level (the type I error rate criterion that differentiates statistically significant from nonsignificant results) was once arbitrary as well. Over time, the standard has become the social scientific norm, but it was initially a point of contention among statisticians.

12. Practical significance is a general concept that has gained a great deal of support in the social scientific community in the last few decades. For example, in the most recent Publication Manual of the American Psychological Association (2010), a failure to report effect sizes (as practical significance measures) is considered a defect in the reporting of research: “No approach to probability value directly reflects the magnitude of an effect or the strength of a relation. For the reader to fully understand the importance of your findings, it is almost always necessary to include some index of effect size or strength of relation in your Results section.” Additionally, the Journal of Applied Psychology, generally considered a top-tier social scientific journal, now requires authors to: “… indicate in the results section of the manuscript the complete outcome of statistical tests including significance levels, some index of effect size or strength of relation, and confidence intervals” (Zedeck 2003, p. 4). In these guidelines, statistical significance would appear to be the first hurdle to meet, followed by a consideration of practical significance.

13. Interestingly enough, the OFCCP Statistical Standards Group of 1979 actually endorsed the absolute difference as the preferred practical significance measure (likely in part as a criticism of the 4/5ths rule discussed below).

14. See Biddle (2012) for a review of the historical context of the 4/5ths rule.

15. The Department of Labor provided some general rules of thumb for interpreting the usefulness of correlations in the testing context, usually when a continuous test score predicts a continuous performance outcome. In this context, a correlation of 0.11 or less is “unlikely to be useful,” between 0.11 and 0.20 “depends on the circumstances,” between 0.21 and 0.35 is “likely to be useful,” and above 0.35 is “very beneficial.” However, given the special statistical case of two dichotomous variables, it is unclear how these DOL rules of thumb apply to adverse impact analyses.

16. The shortfall is equal to the difference in selection rates multiplied by the number of minority applicants.
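The calculations described in notes 10 and 16 can be illustrated with a minimal Python sketch. It is not the chapter's own code: the function names and the applicant counts are hypothetical, the Fisher exact test (FET) is shown only in its one-tailed (lower-tail) form, and the "difference in selection rates" in the shortfall is assumed here to mean the majority rate minus the minority rate.

```python
from math import comb


def hypergeom_pmf(k, n_total, n_pass_total, n_min):
    """Probability that exactly k of the n_min minority applicants pass,
    given n_total applicants of whom n_pass_total pass overall
    (sampling without replacement, as in note 10)."""
    return (
        comb(n_pass_total, k)
        * comb(n_total - n_pass_total, n_min - k)
        / comb(n_total, n_min)
    )


def fisher_exact_lower_tail(np_min, n_total, n_pass_total, n_min):
    """One-tailed FET p-value: probability of np_min or fewer minority
    passers under the hypergeometric distribution."""
    return sum(
        hypergeom_pmf(k, n_total, n_pass_total, n_min)
        for k in range(0, np_min + 1)
    )


def shortfall(np_min, n_min, np_maj, n_maj):
    """Difference in selection rates times the number of minority
    applicants (note 16). Assumption: majority rate minus minority rate."""
    return (np_maj / n_maj - np_min / n_min) * n_min


# Hypothetical data: 40 minority applicants (15 pass), 60 majority (35 pass).
p_value = fisher_exact_lower_tail(np_min=15, n_total=100, n_pass_total=50, n_min=40)
gap = shortfall(np_min=15, n_min=40, np_maj=35, n_maj=60)  # about 8.33 selections
print(f"one-tailed FET p-value: {p_value:.4f}, shortfall: {gap:.2f}")
```

Using exact integer binomial coefficients keeps the probabilities exact up to the final division. A two-tailed test would also need to add the probabilities of equally extreme outcomes in the other tail.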

References

Abramson, J. H. (2011). WINPEPI updated: computer programs for epidemiologists, and their teaching potential. Epidemiologic Perspectives & Innovations, 8(1), 1.

Agresti, A. (2002). Categorical data analysis (2nd ed.). New York: Wiley.

Agresti, A. (2007). An introduction to categorical data analysis (2nd ed.). New York: Wiley.

American Psychological Association. (2010). Publication manual of the American Psychological Association. Washington, DC: Author.

Baker, S. G., & Kramer, B. S. (2001). Good for women, good for men, bad for people: Simpson’s Paradox and the importance of sex-specific analysis in observational studies. Journal of Women’s Health and Gender-Based Medicine, 10, 867–872.

Biddle Consulting Group. (2009). Adverse Impact Toolkit. http://www.biddle.com/adverseimpacttoolkit/. Accessed 20 Dec 2014.

Biddle, D. A. (2012). Adverse impact and test validation: A practitioner’s handbook (3rd ed.). Folsom: Infinity.

Biddle, D. A., & Morris, S. B. (2011). Using Lancaster’s mid-p correction to the Fisher exact test for adverse impact analyses. Journal of Applied Psychology, 96, 956–965.

Boardman, A. E. (1979). Another analysis of the EEOC four-fifths rule. Management Science, 8, 770–776.

Bobko, P., & Roth, P. L. (2004). The four-fifths rule for assessing adverse impact: An arithmetic, intuitive, and logical analysis of the rule and implications for future research and practice. Research in Personnel and Human Resources Management, 23, 177–198.

Breslow, N. E., & Day, N. E. (1980). Statistical Methods in Cancer Research, Volume I: The Analysis of Case-Control Studies (Vol. 32). Lyon: IARC Scientific Publications.

Brooks, M. E., Dalal, D. K., & Nolan, K. P. (2013). Are common language effect sizes easier to understand than traditional effect sizes? Journal of Applied Psychology, 99, 332–340.

Cohen, J. (1994). The earth is round (p<0.05). American Psychologist, 49, 997–1003.

Cohen, D., & Dunleavy, E. M. (2009, March). A review of OFCCP enforcement statistics: A call for transparency in OFCCP reporting. Washington, DC: Center for Corporate Equality.

Cohen, D., & Dunleavy, E. M. (2010). A review of OFCCP enforcement statistics for fiscal year 2008. Washington, DC: Center for Corporate Equality.

Cohen, D. B., Aamodt, M. G., & Dunleavy, E. M. (2010). Technical advisory committee report on best practices in adverse impact analyses. Washington, DC: Center for Corporate Equality.

Collins, M. W., & Morris, S. B. (2008). Testing for adverse impact when sample size is small. Journal of Applied Psychology, 93, 463–471.

Dunleavy, E. M., & Gutman, A. (2011). An update on the statistical versus practical significance debate: A review of Stagi v Amtrak (2010). The Industrial-Organizational Psychologist, 48, 121–129.

Esson, P. L., & Hauenstein, N. M. (2006). Exploring the use of the four-fifths rule and significance tests in adverse impact court case rulings. Paper presented at the 21st annual conference of the Society for Industrial and Organizational Psychology, Dallas, TX.

Fisher, R. A. (1925). Statistical methods for research workers. Edinburgh: Oliver & Boyd.

Fleiss, J. L. (1981). Statistical methods for rates and proportions (2nd ed.). Wiley Series in Probability and Mathematical Statistics. New York: Wiley.

Gastwirth, J. L. (1984). Statistical methods for analyzing claims of employment discrimination. Industrial and Labor Relations Review, 38, 75–86.

Gastwirth, J. L. (1988). Statistical reasoning in law and public policy (Vol. 1). San Diego: Academic Press.

Greenberg, I. (1979). An analysis of the EEOC four-fifths rule. Management Science, 8, 762–769.

Gutman, A., Koppes, L., & Vodanovich, S. (2010). EEO law and personnel practices (3rd ed.). New York: Routledge, Taylor & Francis Group.

Hauck, W. W. (1984). A comparative study of conditional maximum likelihood estimation of a common odds ratio. Biometrics, 40, 1117–1123.

Hirji, K. F. (2006). Exact analysis of discrete data. Boca Raton: CRC Press.

Hirji, K. F., Tan, S., & Elashoff, R. M. (1991). A quasi-exact test for comparing two binomial proportions. Statistics in Medicine, 10, 1137–1153.

Hough, L. M., Oswald, F. L., & Ployhart, R. E. (2001). Determinants, detection and amelioration of adverse impact in personnel selection procedures: Issues, evidence, and lessons learned. International Journal of Selection and Assessment, 9, 152–194.

Howard, E., & Morris, S. B. (2011, April). Multiple event tests for aggregating adverse impact evidence. Paper presented at the 26th annual conference of the Society for Industrial and Organizational Psychology, Chicago, IL.

Hwang, G., & Yang, M. C. (2001). An optimality theory for mid-P values in 2 × 2 contingency tables. Statistica Sinica, 11, 807–826.

Jacobs, R., Murphy, K. R., & Silva, R. (2012). Unintended consequences of EEO enforcement policies: Being big is worse than being bad. Journal of Business and Psychology. doi:10.1007/s10869-012-9268-3.

Kirk, R. E. (1996). Practical significance: A concept whose time has come. Educational and Psychological Measurement, 56, 746–759.

Kroll, N. E. A. (1989). Testing independence in 2 × 2 contingency tables. Journal of Educational Statistics, 14, 47–79.

Kuncel, N. R., & Rigdon, J. (2012). Communicating research findings. In N. W. Schmitt & S. Highhouse (Eds.), Handbook of psychology (Vol. 12). Industrial and organizational psychology (2nd ed., pp. 43–58). New York: Wiley.

Mantel, N., & Haenszel, W. (1959). Statistical aspects of the analysis of data from retrospective studies of disease. Journal of the National Cancer Institute, 22, 719–748.

McDaniel, M. A., Kepes, S., & Banks, G. C. (2011). The Uniform Guidelines are a detriment to the field of personnel selection. Industrial and Organizational Psychology: Perspectives on Science and Practice, 4, 419–514.

Miao, W., & Gastwirth, J. L. (2013). Properties of statistical tests appropriate for the analysis of data in disparate impact cases. Law, Probability and Risk, 12, 37–61.

Morris, S. B. (2001). Sample size required for adverse impact analysis. Applied HRM Research, 6, 13–32.

Morris, S. B., & Lobsenz, R. E. (2000). Significance tests and confidence intervals for the adverse impact ratio. Personnel Psychology, 53, 89–111.

Murphy, K., & Jacobs, R. (2012). Using effect size measures to reform the determination of adverse impact in equal employment litigation. Psychology, Public Policy and the Law.

Office of Federal Contract Compliance Programs. (1993). Federal contract compliance manual. Washington, DC: U.S. Department of Labor.

Paetzold, R. L., & Willborn, S. L. (1994). Statistics in discrimination: Using statistical evidence in discrimination cases. Colorado Springs: Shepard’s/McGraw-Hill.

Roth, P. L., Bobko, P., & Switzer, F. S. (2006). Modeling the behavior of the 4/5ths rule for determining adverse impact: Reasons for caution. Journal of Applied Psychology, 91, 507–522.

Siskin, B. R., & Trippi, J. (2005). Statistical issues in litigation. In F. J. Landy (Ed.), Employment discrimination litigation: Behavioral, quantitative, and legal perspectives (pp. 132–166). San Francisco: Jossey-Bass.

Tarone, R. E. (1985). On heterogeneity tests based on efficient scores. Biometrika, 72, 91–95.

U.S. Equal Employment Opportunity Commission, Civil Service Commission, Department of Labor, & Department of Justice. (1978). Uniform guidelines on employee selection procedures. Federal Register, 43(166), 38295–38309.

Wagner, C. H. (1982). Simpson’s paradox in real life. The American Statistician, 36(1), 46–48.

Zedeck, S. (2003). Applied psychology: Editorial. Journal of Applied Psychology, 88, 3–5.

Zedeck, S. (2010). Adverse impact: History and evolution. In J. L. Outtz (Ed.), Adverse impact: Implications for organizational staffing and high stakes selection (pp. 3–27). New York: Routledge.

Cases Cited

Albemarle Paper Company v. Moody, 422 U.S. 405 (1975).

Apsley v. Boeing Co., 691 F.3d 1184 (10th Cir. 2012).

Capaci v. Katz & Besthoff, Inc., 711 F.2d 647 (5th Cir. 1983).

Castaneda v. Partida, 430 U.S. 482 (1977).

Connecticut v. Teal, 457 U.S. 440, 29 FEP 1 (1982).

Contreras v. City of Los Angeles, 656 F.2d 1267 (9th Cir. 1981).

Cytel. (2014). StatXact 10 [Computer software]. Cambridge, MA: Author.

Dukes v. Wal-Mart Stores, Inc., 222 F.R.D. 137 (N.D. Cal. 2004).

Frazier v. Garrison, 980 F.2d (5th Cir. 1993).

Griggs v. Duke Power, 401 U.S. 424 (1971).

Hazelwood School District v. United States, 433 U.S. 299 (1977).

International Brotherhood of Teamsters v. United States, 431 U.S. 324 (1977).

Meacham v. Knolls Atomic Power Lab, 128 S. Ct. 2395 (2008).

Moore v. Southwestern Bell Telephone Company, 593 F.2d 607 (5th Cir. 1979).

Ricci v. DeStefano, 129 S. Ct. 2658 (2009).

Trout v. Hidalgo, 517 F. Supp. 873 (D.D.C. 1981).

U.S. v. Commonwealth of Virginia, 620 F.2d 1018 (4th Cir. 1978).

Waisome v. Port Authority, 948 F.2d 1370, 1376 (2d Cir. 1991).

Watson v. Fort Worth, 487 U.S. 977 (1988).

Appendices

Recommended Readings

- Cohen, J. (1994). The earth is round (p<0.05). American Psychologist, 49, 997–1003.

  This article is one of the most well-known and commonly referenced sources on the limitations of statistical significance testing and the usefulness of effect size measures.

- Cohen, D. B., Aamodt, M. G., & Dunleavy, E. M. (2010). Technical Advisory Committee report on best practices in adverse impact analyses. Washington, DC: Center for Corporate Equality.

  This report is a contemporary review of adverse impact measurement and associated issues and includes expert perspectives across a wide range of disciplines.

- Collins, M. W., & Morris, S. B. (2008). Testing for adverse impact when sample size is small. Journal of Applied Psychology, 93, 463–471.

  This article provides a detailed comparison of alternate statistical significance tests for adverse impact analysis.

- Gastwirth, J. L. (1988). Statistical reasoning in law and public policy (Vol. 1). San Diego, CA: Academic Press.

  This book is one of the most well-known and commonly referenced sources on a wide variety of EEO analytic issues.

- Gutman, A., Koppes, L., & Vodanovich, S. (2010). EEO law and personnel practices (3rd ed.). New York: Routledge, Taylor & Francis Group.

  This book provides a thorough and accessible summary of EEO laws and their implications for employment practices. In particular, pages 44–73 describe the legal context for adverse impact analyses.

- U.S. Equal Employment Opportunity Commission, Civil Service Commission, Department of Labor, & Department of Justice. (1978). Uniform Guidelines on Employee Selection Procedures. Federal Register, 43(166), 38295–38309.

  These guidelines describe the standards used by federal enforcement agencies to evaluate the presence of disparities in employment outcomes, as well as evidence that can be used to establish the job-relatedness of employment practices.

Glossary

- Adverse impact: Substantial differences in employment outcomes across protected groups.

- Disparate impact theory: Unintentional discrimination where facially neutral selection criteria disproportionately exclude higher percentages of one group relative to another.

- Disparate treatment theory: Intentional discrimination where protected group status is used in some way to make employment decisions.

- Disparate treatment (pattern or practice): Pervasive, systematic disparate treatment against a protected group.

- Effect size: A statistic that represents the magnitude of a phenomenon, such as the size of a disparity in employment outcomes.

- Statistical significance testing: A decision-making paradigm relying on probability theory to evaluate the likelihood that an observed difference in employment outcomes between groups in a sample could have occurred simply due to chance or random fluctuations, given a neutral selection procedure.

- Practical significance measurement: A decision-making paradigm based on the magnitude or size of a disparity in employment outcomes between groups, relative to professional judgment regarding which disparities are trivial and which are substantial.

- Protected group: A group covered by EEO laws. Under Title VII of the Civil Rights Act of 1964, protected groups are defined by race, color, sex, religion, or national origin.
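To make the contrast between the effect size and statistical significance entries concrete, the following Python sketch computes one of each for the same hypothetical 2 × 2 selection table. The function names and counts are illustrative assumptions. The effect size shown is the ratio of selection rates (the quantity the 4/5ths rule compares against 0.80), and the significance test is a pooled two-sample Z test, which is only one of several tests that could be used.

```python
from math import erfc, sqrt


def impact_ratio(np_min, n_min, np_maj, n_maj):
    """Effect size: minority selection rate divided by majority selection rate."""
    return (np_min / n_min) / (np_maj / n_maj)


def z_test_two_proportions(np_min, n_min, np_maj, n_maj):
    """Pooled two-sample Z test on the difference in selection rates.
    Returns (z, two_tailed_p_value)."""
    p_min = np_min / n_min
    p_maj = np_maj / n_maj
    p_pool = (np_min + np_maj) / (n_min + n_maj)
    # Standard error of the difference in rates under the null hypothesis
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_min + 1 / n_maj))
    z = (p_min - p_maj) / se
    # Two-tailed area under the standard normal curve beyond |z|
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical data: 15 of 40 minority and 35 of 60 majority applicants selected.
ratio = impact_ratio(15, 40, 35, 60)
z, p = z_test_two_proportions(15, 40, 35, 60)
print(f"impact ratio: {ratio:.3f} (below 0.80: {ratio < 0.80})")
print(f"Z = {z:.2f}, two-tailed p = {p:.4f}")
```

The two measures answer different questions: the ratio describes how large the disparity is, while the Z test describes how likely a disparity of that size would be under chance alone, so the two can disagree, particularly in very small or very large samples.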

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Dunleavy, E., Morris, S., Howard, E. (2015). Measuring Adverse Impact in Employee Selection Decisions. In: Hanvey, C., Sady, K. (eds) Practitioner's Guide to Legal Issues in Organizations. Springer, Cham. https://doi.org/10.1007/978-3-319-11143-8_1

DOI: https://doi.org/10.1007/978-3-319-11143-8_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-11142-1

Online ISBN: 978-3-319-11143-8

eBook Packages: Behavioral Science, Behavioral Science and Psychology (R0)