Abstract

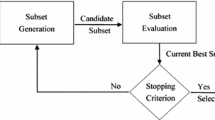

In the era of big data and federated learning, traditional feature selection methods perform poorly on heterogeneous data when deployed in federated environments. We propose Fed-FiS, an information-theoretic federated feature selection approach that addresses the problems arising from this heterogeneity. Fed-FiS estimates feature-feature mutual information (FFMI) and feature-class mutual information (FCMI) to generate a local feature subset on each user device. Based on the federated values across features and classes obtained from each device, the central server ranks each feature and generates a global dominant feature subset. We show that our approach can collaboratively find a stable feature subset across all local devices. Extensive experiments on multiple benchmark iid (independent and identically distributed) and non-iid datasets demonstrate that Fed-FiS significantly improves overall performance compared with state-of-the-art methods. To the best of our knowledge, this is the first work on feature selection in a federated learning system.
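The local-scoring and server-ranking pipeline described above can be illustrated with a minimal sketch. This is not the Fed-FiS algorithm itself: the abstract does not give the scoring or aggregation rules, so the sketch assumes discrete features, a plug-in mutual information estimate, a local score of FCMI minus mean FFMI (an mRMR-style relevance-minus-redundancy rule), and a server that simply averages client scores. The function names `mutual_information`, `local_scores`, and `global_ranking` are hypothetical.

```python
import numpy as np

def mutual_information(x, y):
    """Plug-in estimate of I(X; Y) in nats for two discrete 1-D arrays."""
    _, xi = np.unique(np.asarray(x).ravel(), return_inverse=True)
    _, yi = np.unique(np.asarray(y).ravel(), return_inverse=True)
    joint = np.zeros((xi.max() + 1, yi.max() + 1))
    np.add.at(joint, (xi, yi), 1.0)          # contingency counts
    joint /= joint.sum()                      # joint probabilities
    px = joint.sum(axis=1, keepdims=True)     # marginal of X, shape (a, 1)
    py = joint.sum(axis=0, keepdims=True)     # marginal of Y, shape (1, b)
    nz = joint > 0                            # skip empty cells (0 * log 0 = 0)
    return float(np.sum(joint[nz] * np.log(joint[nz] / (px @ py)[nz])))

def local_scores(X, y):
    """Per-feature score on one device.

    ASSUMPTION: score = FCMI - mean FFMI; the paper's actual rule may differ.
    """
    n_features = X.shape[1]
    fcmi = np.array([mutual_information(X[:, j], y) for j in range(n_features)])
    ffmi = np.zeros(n_features)
    for j in range(n_features):
        others = [mutual_information(X[:, j], X[:, k])
                  for k in range(n_features) if k != j]
        ffmi[j] = np.mean(others) if others else 0.0
    return fcmi - ffmi

def global_ranking(client_scores):
    """Server side. ASSUMPTION: average client scores, then rank descending."""
    mean_scores = np.mean(np.stack(client_scores), axis=0)
    return np.argsort(-mean_scores), mean_scores

# Toy demo: two simulated devices; feature 0 copies the label, the rest is noise.
rng = np.random.default_rng(0)
client_scores = []
for _ in range(2):
    y = rng.integers(0, 2, size=200)
    noise = rng.integers(0, 2, size=(200, 2))
    X = np.column_stack([y, noise])
    client_scores.append(local_scores(X, y))

ranking, scores = global_ranking(client_scores)
print("feature ranking (best first):", ranking)
```

Only the per-feature scores leave each simulated device in this sketch, not the raw samples, which mirrors the federated setting described in the abstract.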

This work was partially supported by the Wallenberg AI, Autonomous Systems and Software Program (WASP), funded by the Knut and Alice Wallenberg Foundation.

Notes

- 1. Worldline and the ULBML Group. Anonymized credit card transactions labeled as fraudulent or genuine. https://www.kaggle.com/mlg-ulb/creditcardfraud, 2020.

- 2.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Banerjee, S., Elmroth, E., Bhuyan, M. (2021). Fed-FiS: a Novel Information-Theoretic Federated Feature Selection for Learning Stability. In: Mantoro, T., Lee, M., Ayu, M.A., Wong, K.W., Hidayanto, A.N. (eds) Neural Information Processing. ICONIP 2021. Communications in Computer and Information Science, vol 1516. Springer, Cham. https://doi.org/10.1007/978-3-030-92307-5_56

DOI: https://doi.org/10.1007/978-3-030-92307-5_56

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92306-8

Online ISBN: 978-3-030-92307-5

eBook Packages: Computer Science, Computer Science (R0)