Abstract

Existing work on object detection often relies on a single form of annotation: the model is trained using either accurate yet costly bounding boxes or cheaper but less expressive image-level tags. However, real-world annotations are often diverse in form, which challenges these existing approaches. In this paper, we present UFO\(^2\), a unified object detection framework that can handle different forms of supervision simultaneously. Specifically, UFO\(^2\) incorporates strong supervision (e.g., boxes), various forms of partial supervision (e.g., class tags, points, and scribbles), and unlabeled data. Through rigorous evaluations, we demonstrate that each form of label can be used either to train a model from scratch or to further improve a pre-trained model. We also use UFO\(^2\) to investigate budget-aware omni-supervised learning, i.e., we study various annotation policies under a fixed annotation budget, and we show that competitive performance does not require strong labels for all data. Finally, we demonstrate the generalization ability of UFO\(^2\) by detecting more than 1,000 different objects without bounding box annotations.

Z. Ren and X. Yang—Work partially done at NVIDIA.

Notes

1. COCO*: because LVIS is a subset of COCO-115, we construct COCO* by taking COCO-115 images excluded from LVIS.

References

Instagram statistics 2019. www.omnicoreagency.com/instagram-statistics/

Youtube statistics 2019. https://merchdope.com/youtube-stats/

Arbeláez, P., Pont-Tuset, J., Barron, J., Marques, F., Malik, J.: Multiscale combinatorial grouping. In: CVPR (2014)

Bearman, A., Russakovsky, O., Ferrari, V., Fei-Fei, L.: What’s the point: semantic segmentation with point supervision. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 549–565. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_34

Berthelot, D., Carlini, N., Goodfellow, I.J., Papernot, N., Oliver, A., Raffel, C.: MixMatch: a holistic approach to semi-supervised learning. In: NeurIPS (2019)

Bilen, H., Vedaldi, A.: Weakly supervised deep detection networks. In: CVPR (2016)

Bradski, G.: The OpenCV Library. Dr. Dobb’s J. Softw. Tools 25, 120–125 (2000)

Chapelle, O., Schölkopf, B., Zien, A. (eds.): Semi-Supervised Learning. The MIT Press, Cambridge (2006)

Chen, Y., Li, W., Sakaridis, C., Dai, D., Gool, L.V.: Domain adaptive faster R-CNN for object detection in the wild. In: CVPR (2018)

Chéron, G., Alayrac, J.B., Laptev, I., Schmid, C.: A flexible model for training action localization with varying levels of supervision. In: NIPS (2018)

Doersch, C., Gupta, A., Efros, A.A.: Unsupervised visual representation learning by context prediction. In: ICCV (2015)

Everingham, M., Van Gool, L., Williams, C.K.I., Winn, J., Zisserman, A.: The PASCAL visual object classes (VOC) challenge. IJCV 88, 303–338 (2010). https://doi.org/10.1007/s11263-009-0275-4

Felzenszwalb, P.F., Girshick, R.B., McAllester, D.A., Ramanan, D.: Object detection with discriminatively trained part-based models. T-PAMI 32(9), 1627–1645 (2010)

Gao, Y., et al.: C-MIDN: coupled multiple instance detection network with segmentation guidance for weakly supervised object detection. In: ICCV (2019)

Ge, W., Yang, S., Yu, Y.: Multi-evidence filtering and fusion for multi-label classification, object detection and semantic segmentation based on weakly supervised learning. In: CVPR (2018)

Girshick, R.B.: Fast R-CNN. In: ICCV (2015)

Girshick, R.B., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: CVPR (2014)

Gupta, A., Dollar, P., Girshick, R.: LVIS: a dataset for large vocabulary instance segmentation. In: CVPR (2019)

He, K., Fan, H., Wu, Y., Xie, S., Girshick, R.: Momentum contrast for unsupervised visual representation learning. In: CVPR (2019)

He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: ICCV (2017)

Hu, R., Dollár, P., He, K., Darrell, T., Girshick, R.: Learning to segment every thing. In: CVPR (2018)

Inoue, N., Furuta, R., Yamasaki, T., Aizawa, K.: Cross-domain weakly-supervised object detection through progressive domain adaptation. In: CVPR (2018)

Jie, Z., Wei, Y., Jin, X., Feng, J., Liu, W.: Deep self-taught learning for weakly supervised object localization. In: CVPR (2017)

Kantorov, V., Oquab, M., Cho, M., Laptev, I.: ContextLocNet: context-aware deep network models for weakly supervised localization. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 350–365. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_22

Khodabandeh, M., Vahdat, A., Ranjbar, M., Macready, W.G.: A robust learning approach to domain adaptive object detection. In: ICCV (2019)

Khoreva, A., Benenson, R., Hosang, J., Hein, M., Schiele, B.: Simple does it: weakly supervised instance and semantic segmentation. In: CVPR (2017)

Law, H., Deng, J.: CornerNet: detecting objects as paired keypoints. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018. LNCS, vol. 11218, pp. 765–781. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01264-9_45

Lee, D.H.: Pseudo-label: the simple and efficient semi-supervised learning method for deep neural networks. In: ICML 2013 Workshop (2013)

Lin, D., Dai, J., Jia, J., He, K., Sun, J.: ScribbleSup: scribble-supervised convolutional networks for semantic segmentation. In: CVPR (2016)

Lin, T., et al.: Microsoft COCO: common objects in context. CoRR (2014)

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Miyato, T., Maeda, S., Koyama, M., Ishii, S.: Virtual adversarial training: a regularization method for supervised and semi-supervised learning. T-PAMI 41(8), 1979–1993 (2019)

Oliver, A., Odena, A., Raffel, C.A., Cubuk, E.D., Goodfellow, I.: Realistic evaluation of deep semi-supervised learning algorithms. In: NeurIPS (2018)

Papadopoulos, D.P., Uijlings, J.R.R., Keller, F., Ferrari, V.: Extreme clicking for efficient object annotation. In: ICCV (2017)

Papadopoulos, D.P., Uijlings, J.R.R., Keller, F., Ferrari, V.: Training object class detectors with click supervision. In: CVPR (2017)

Papandreou, G., Chen, L., Murphy, K.P., Yuille, A.L.: Weakly-and semi-supervised learning of a deep convolutional network for semantic image segmentation. In: ICCV (2015)

Pardo, A., Xu, M., Thabet, A.K., Arbelaez, P., Ghanem, B.: BAOD: budget-aware object detection. CoRR abs/1904.05443 (2019)

Park, T., Liu, M.Y., Wang, T.C., Zhu, J.Y.: Semantic image synthesis with spatially-adaptive normalization. In: CVPR (2019)

Peng, X., Sun, B., Ali, K., Saenko, K.: Learning deep object detectors from 3D models. In: ICCV (2015)

Radosavovic, I., Dollár, P., Girshick, R.B., Gkioxari, G., He, K.: Data distillation: towards omni-supervised learning. In: CVPR (2018)

Redmon, J., Divvala, S.K., Girshick, R.B., Farhadi, A.: You only look once: unified, real-time object detection. In: CVPR (2016)

Redmon, J., Farhadi, A.: YOLO9000: better, faster, stronger. In: CVPR (2017)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. T-PAMI 39(6), 1137–1149 (2016)

Ren, Z., Lee, Y.J.: Cross-domain self-supervised multi-task feature learning using synthetic imagery. In: CVPR (2018)

Ren, Z., et al.: Instance-aware, context-focused, and memory-efficient weakly supervised object detection. In: CVPR (2020)

Ren, Z., Yeh, R.A., Schwing, A.G.: Not all unlabeled data are equal: learning to weight data in semi-supervised learning. arXiv preprint arXiv:2007.01293 (2020)

Rosenberg, C., Hebert, M., Schneiderman, H.: Semi-supervised self-training of object detection models. In: WACV/MOTION (2005)

Shen, Y., Ji, R., Zhang, S., Zuo, W., Wang, Y.: Generative adversarial learning towards fast weakly supervised detection. In: CVPR (2018)

Singh, G., Saha, S., Sapienza, M., Torr, P., Cuzzolin, F.: Online real time multiple spatiotemporal action localisation and prediction on a single platform. In: ICCV (2017)

Singh, K.K., Xiao, F., Lee, Y.J.: Track and transfer: watching videos to simulate strong human supervision for weakly-supervised object detection. In: CVPR (2016)

Su, H., Deng, J., Fei-Fei, L.: Crowdsourcing annotations for visual object detection. In: AAAI Technical Report, 4th Human Computation Workshop (2012)

Tang, P., et al.: PCL: proposal cluster learning for weakly supervised object detection. T-PAMI 42(1), 176–191 (2018)

Tang, P., Wang, X., Bai, X., Liu, W.: Multiple instance detection network with online instance classifier refinement. In: CVPR (2017)

Tarvainen, A., Valpola, H.: Weight-averaged consistency targets improve semi-supervised deep learning results. In: NeurIPS (2017)

Uijlings, J.R.R., Popov, S., Ferrari, V.: Revisiting knowledge transfer for training object class detectors. In: CVPR (2018)

Uijlings, J., van de Sande, K., Gevers, T., Smeulders, A.: Selective search for object recognition. IJCV 104, 154–171 (2013)

Xie, Q., Dai, Z., Hovy, E., Luong, M.T., Le, Q.V.: Unsupervised data augmentation for consistency training. arXiv preprint arXiv:1904.12848 (2019)

Xu, J., Schwing, A.G., Urtasun, R.: Learning to segment under various forms of weak supervision. In: CVPR (2015)

Yang, Z., Mahajan, D., Ghadiyaram, D., Nevatia, R., Ramanathan, V.: Activity driven weakly supervised object detection. In: CVPR (2019)

Zeng, Z., Liu, B., Fu, J., Chao, H., Zhang, L.: WSOD2: learning bottom-up and top-down objectness distillation for weakly-supervised object detection. In: ICCV (2019)

Zhang, X., Feng, J., Xiong, H., Tian, Q.: Zigzag learning for weakly supervised object detection. In: CVPR (2018)

Zhang, Y., Bai, Y., Ding, M., Li, Y., Ghanem, B.: W2F: a weakly-supervised to fully-supervised framework for object detection. In: CVPR (2018)

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., Torralba, A.: Learning deep features for discriminative localization. In: CVPR (2016)

Zhou, X., Zhuo, J., Krähenbühl, P.: Bottom-up object detection by grouping extreme and center points. In: CVPR (2019)

Zitnick, C.L., Dollár, P.: Edge boxes: locating object proposals from edges. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 391–405. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_26

Zou, Y., Yu, Z., Liu, X., Kumar, B., Wang, J.: Confidence regularized self-training. In: ICCV (2019)

Zou, Y., Yu, Z., Vijaya Kumar, B.V.K., Wang, J.: Unsupervised domain adaptation for semantic segmentation via class-balanced self-training. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11207, pp. 297–313. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01219-9_18

Acknowledgement

ZR is supported by Yunni & Maxine Pao Memorial Fellowship. This work is supported in part by NSF under Grant No. 1718221 and MRI #1725729, UIUC, Samsung, 3M, Cisco Systems Inc. (Gift Award CG 1377144) and Adobe.

Appendix

In this document, we provide:

- An extensive study on the LVIS [18] dataset where UFO\(^2\) learns to detect more than 1k different objects without bounding box annotation (Sect. A), which demonstrates the generalizability and applicability of UFO\(^2\)

- Details regarding the annotation policies mentioned in Sect. 5.4 ‘Budget-aware Omni-supervised Detection’ of the main paper

- Additional visualizations of the simulated partial labels

- Additional qualitative results on COCO (complementary to main paper Sect. 5.2)

A Extensions: Learning to Detect Everything

In this section we show that without any architecture changes UFO\(^2\) can be generalized to detect any objects given image-level tags. Hence we follow [21] and refer to this setting as ‘learning to detect everything.’

Specifically, we aim to detect objects from the LVIS [18] dataset, which contains 1239 categories, using only tags as annotation. We first train UFO\(^2\) on COCO* (see Note 1) with boxes. This model achieves 32.7% AP and 52.3% AP-50 on minival. We then jointly fine-tune it using tags from LVIS and boxes from COCO*. The final model performs comparably on minival (31.6% AP, 50.1% AP-50) and decently on the LVIS validation set of over 1k classes (3.5% AP and 6.3% AP-50, whereas a supervised model achieves 8.6% AP and 14.8% AP-50). To the best of our knowledge, no numbers have been reported on this dataset using weak labels. Our results are also not directly comparable to strongly supervised results [18], as we do not use the bounding box annotations on LVIS.

Qualitative results are shown in Fig. 6. We observe that UFO\(^2\) is able to detect objects accurately even though no bounding box supervision is used for the new classes (e.g., short pants, street light, parking meter, frisbee, etc.). Specifically, UFO\(^2\) can (1) detect spatially adjacent or even clustered instances with high recall (e.g., goose, cow, zebra, giraffe); (2) recognize some obscure or hard objects (e.g., wet suit, short pants, knee pad); (3) localize different objects with tight and accurate bounding boxes. Importantly, note that we do not need to change the architecture of UFO\(^2\) at all to integrate both boxes and tags as supervision.

B Annotation Policies

To study budget-aware omni-supervised object detection, we define the policies 80%B, 50%B, and 20%B, motivated by the following findings: (1) among the three partial labels (tags, points, and scribbles), labeling points (88.7 s/img; see Sect. 5.4 in the main paper) is roughly as efficient as annotating tags (80 s/img), and both require about half the time/cost of scribbles (160.4 s/img); (2) using points achieves a consistent performance boost compared to using tags (12.4 over 10.8 AP in Table 2; 30.1 over 29.4 AP in Table 3); (3) using scribbles is only slightly better than using points (13.7 over 12.4 AP in Table 2; 30.9 over 30.1 AP in Table 3) but nearly twice as expensive to annotate; and (4) strong supervision (boxes) is still necessary to achieve good results (strongly supervised models are significantly better than the others in Table 2 and Table 3).

Therefore, we choose to combine points and boxes as a new annotation policy, which we found to work well under the fixed-budget setting, as shown in Table 6: 80%B is slightly better than STRONG, and 50%B also performs better than EQUAL-NUM. These results suggest that spending part of the budget on annotating more images with points is a better annotation strategy than the commonly adopted box-only annotation (STRONG). Meanwhile, the optimal annotation policy remains an open question, and better policies may exist if more accurate scribbles are collected or more advanced algorithms are developed to utilize partial labels.
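To make the fixed-budget trade-off concrete, the following minimal sketch splits an annotation budget between boxes and points. It rests on two assumptions that are not stated in this appendix: that a policy named N%B spends N% of the budget on boxes and the remainder on points, and that a box costs 350 s/img (a placeholder value chosen only for illustration). The function name `split_budget` is hypothetical; the point cost is the 88.7 s/img quoted above.

```python
# Per-image annotation time for points, as quoted in this appendix (seconds).
COST_POINTS = 88.7
# Assumption: the per-image box cost is NOT given in this appendix.
# 350.0 is a placeholder used only to illustrate the arithmetic.
COST_BOXES_ASSUMED = 350.0


def split_budget(total_budget_s, box_percent,
                 box_cost=COST_BOXES_ASSUMED, point_cost=COST_POINTS):
    """Return (images labeled with boxes, images labeled with points).

    Assumed reading of an 'N%B' policy: N percent of the budget pays for
    boxes and the remaining budget pays for points.
    """
    if not 0 <= box_percent <= 100:
        raise ValueError("box_percent must be between 0 and 100")
    box_budget = total_budget_s * box_percent / 100
    point_budget = total_budget_s - box_budget
    # Only fully paid-for images count, hence the floor division.
    n_box_images = int(box_budget // box_cost)
    n_point_images = int(point_budget // point_cost)
    return n_box_images, n_point_images


if __name__ == "__main__":
    budget = 35000  # seconds; enough for 100 box-labeled images at the assumed cost
    for percent in (100, 80, 50, 20):
        print(percent, split_budget(budget, percent))
```

Under these assumed costs, moving 20% of the budget from boxes to points trades 20 box-labeled images for 78 point-labeled ones, which is the kind of exchange the policies above explore. The actual numbers depend on the real box cost reported in the main paper.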

C Additional Visualization of Partial Labels

We show additional results together with the ground-truth bounding boxes in Figs. 7–10. Figure 7 and Fig. 8 show labels for single objects (e.g., car, motor, sheep, chair, person, and bus) and Fig. 9 and Fig. 10 visualize labels for all the instances in the images.

We observe: (1) both points and scribbles are correctly located within the objects; (2) points are mainly located around the center area of the objects and with a certain amount of randomness, which aligns with our goal to mimic human labeling behavior as discussed in Sect. 4 of the main paper; (3) the generated scribbles are relatively simple yet effective in capturing the rough shape of the objects. Also, they exhibit a reasonable diversity. These partial labels serve as a proof-of-concept to show the effectiveness of the proposed UFO\(^2\) framework.
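The behavior described in observations (1) and (2) can be imitated with a simple sampler. The sketch below is not the procedure used in the paper; it is one possible way to draw a point that always lies inside an object mask and tends to fall near its center. The function name `sample_point_from_mask`, the Gaussian weighting, and the `sigma_frac` parameter are all assumptions made for illustration.

```python
import math
import random


def sample_point_from_mask(mask, sigma_frac=0.25, rng=None):
    """Sample one (row, col) inside a binary mask, favoring its centroid.

    mask: list of rows, each a list of truthy/falsy values.
    sigma_frac: assumed spread, as a fraction of the object's extent (> 0).
    """
    rng = rng or random.Random()
    pixels = [(r, c) for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if not pixels:
        raise ValueError("mask contains no object pixels")
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    center_r = sum(rows) / len(rows)
    center_c = sum(cols) / len(cols)
    # Scale the spread with the object's height and width.
    sigma_r = sigma_frac * (max(rows) - min(rows) + 1)
    sigma_c = sigma_frac * (max(cols) - min(cols) + 1)
    # Gaussian weight: pixels near the centroid are more likely to be chosen.
    weights = [
        math.exp(-0.5 * (((r - center_r) / sigma_r) ** 2
                         + ((c - center_c) / sigma_c) ** 2))
        for r, c in pixels
    ]
    # Only object pixels are candidates, so the point is always inside the mask.
    return rng.choices(pixels, weights=weights, k=1)[0]


if __name__ == "__main__":
    demo_mask = [[5 <= r < 15 and 10 <= c < 25 for c in range(30)]
                 for r in range(20)]
    print(sample_point_from_mask(demo_mask, rng=random.Random(0)))
```

Because candidates are restricted to object pixels, the sampled point stays within the object even when the centroid itself falls outside a non-convex mask.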

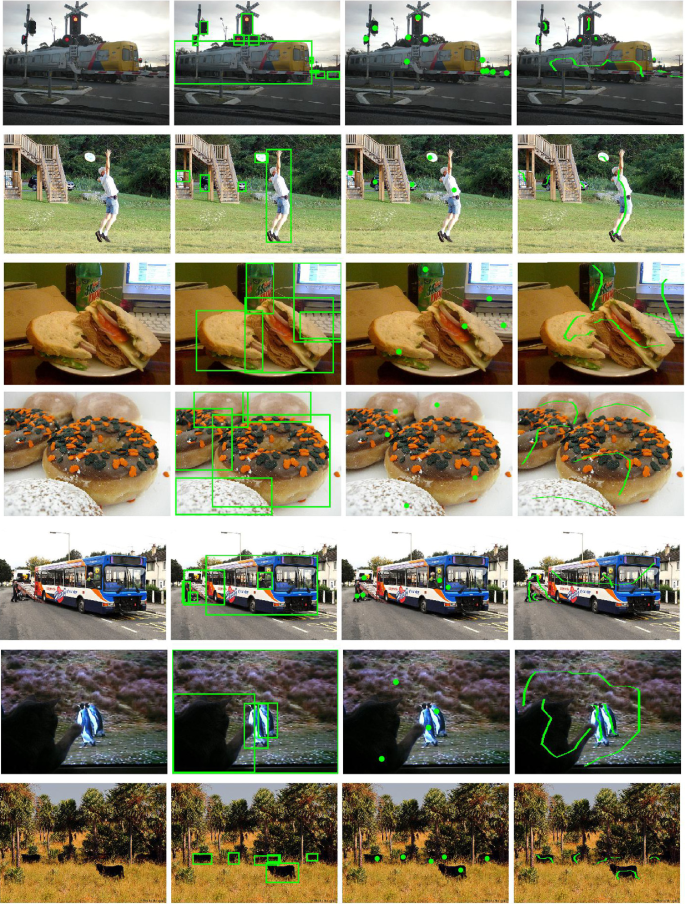

D Additional Qualitative Results

In Fig. 11 and Fig. 12 we show additional qualitative results. We compare the same VGG-16 based model trained on COCO-80 with different forms of supervision. From left to right we show predicted boxes and their confidence scores when using boxes, scribbles, points, and tags. Similar to the results in Sect. 5.2 of the main paper, we find that stronger labels better reduce false positive predictions and better localize true positive predictions.

© 2020 Springer Nature Switzerland AG

Cite this paper

Ren, Z., Yu, Z., Yang, X., Liu, MY., Schwing, A.G., Kautz, J. (2020). UFO\(^2\): A Unified Framework Towards Omni-supervised Object Detection. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, JM. (eds) Computer Vision – ECCV 2020. ECCV 2020. Lecture Notes in Computer Science, vol 12364. Springer, Cham. https://doi.org/10.1007/978-3-030-58529-7_18

DOI: https://doi.org/10.1007/978-3-030-58529-7_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-58528-0

Online ISBN: 978-3-030-58529-7

eBook Packages: Computer Science, Computer Science (R0)