Abstract

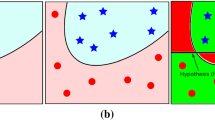

Kernel methods are widely used in data classification. Many kernel-based classifiers, such as Kernel Support Vector Machines (KSVM), assume that the data can be separated by a hyperplane in the feature space and do not take the data distribution into account. This paper proposes a novel Kernel Maximum A Posteriori (KMAP) classification method, which adopts a Gaussian density assumption in the feature space and can be regarded as a generalization of other kernel-based classifiers such as Kernel Fisher Discriminant Analysis (KFDA). We also adopt robust methods for parameter estimation. In addition, an error bound analysis for KMAP indicates the effectiveness of the Gaussian density assumption in the feature space. Furthermore, KMAP achieves very promising results on eight UCI benchmark data sets when compared with competing methods.
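To make the idea of a Gaussian assumption in the feature space concrete, here is a minimal sketch. It is not the authors' KMAP algorithm. It assumes an RBF kernel, uses kernel PCA to obtain explicit feature coordinates, and swaps in a simple ridge term on each class covariance in place of the robust estimation the abstract mentions. The class name `KernelGaussianMAP` and the parameters `gamma`, `n_components`, and `ridge` are hypothetical.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    # Gaussian (RBF) kernel from pairwise squared Euclidean distances.
    d2 = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(d2, 0.0))

class KernelGaussianMAP:
    """Illustrative only: Gaussian MAP rule on kernel PCA coordinates."""

    def __init__(self, gamma=1.0, n_components=3, ridge=1e-3):
        self.gamma, self.n_components, self.ridge = gamma, n_components, ridge

    def _project(self, K):
        # Center K against the training statistics, then project.
        Kc = K - self.col_mean_[None, :] - K.mean(axis=1)[:, None] + self.all_mean_
        return Kc @ self.alpha_

    def fit(self, X, y):
        self.X_ = X
        K = rbf_kernel(X, X, self.gamma)
        self.col_mean_, self.all_mean_ = K.mean(axis=0), K.mean()
        Kc = K - self.col_mean_[None, :] - K.mean(axis=1)[:, None] + self.all_mean_
        w, V = np.linalg.eigh(Kc)
        top = np.argsort(w)[::-1][: self.n_components]
        w, V = w[top], V[:, top]
        keep = w > 1e-10  # drop numerically null directions
        self.alpha_ = V[:, keep] / np.sqrt(w[keep])
        Z = self._project(K)
        self.classes_ = np.unique(y)
        self.params_ = []
        for c in self.classes_:
            Zc = Z[y == c]
            mu = Zc.mean(axis=0)
            D = Zc - mu
            # Assumption: a ridge term stands in for robust estimation.
            cov = D.T @ D / max(len(Zc) - 1, 1) + self.ridge * np.eye(Z.shape[1])
            self.params_.append((np.log(len(Zc) / len(y)), mu, cov))
        return self

    def predict(self, X):
        Z = self._project(rbf_kernel(X, self.X_, self.gamma))
        scores = []
        for log_prior, mu, cov in self.params_:
            D = Z - mu
            maha = (D * np.linalg.solve(cov, D.T).T).sum(axis=1)
            _, logdet = np.linalg.slogdet(cov)
            # Log posterior up to a constant shared by all classes.
            scores.append(log_prior - 0.5 * logdet - 0.5 * maha)
        return self.classes_[np.argmax(np.stack(scores, axis=1), axis=1)]
```

Because each class keeps its own covariance, the decision boundary in the projected space is quadratic. Forcing a shared covariance would reduce the rule to a linear, Fisher-style discriminant, which is one way to read the abstract's remark that KMAP generalizes KFDA.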

Copyright information

© 2008 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Xu, Z., Huang, K., Zhu, J., King, I., Lyu, M.R. (2008). Kernel Maximum a Posteriori Classification with Error Bound Analysis. In: Ishikawa, M., Doya, K., Miyamoto, H., Yamakawa, T. (eds) Neural Information Processing. ICONIP 2007. Lecture Notes in Computer Science, vol 4984. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-69158-7_87

DOI: https://doi.org/10.1007/978-3-540-69158-7_87

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-69154-9

Online ISBN: 978-3-540-69158-7

eBook Packages: Computer Science, Computer Science (R0)