Abstract

Although manipulating 3D virtual models with mid-air hand gestures offers natural interaction and avoids the sanitation problems of touch surfaces, many factors can influence the usability of this interaction paradigm. In this research, the authors conducted experiments to study vision-based mid-air hand gestures for scaling, translating, and rotating a 3D virtual car displayed on a large screen. An Intel RealSense 3D Camera was employed for hand gesture recognition. A two-hand gesture, grabbing and then moving the hands apart or closer together, was used to enlarge or shrink the 3D virtual car. A one-hand gesture, grabbing and then moving, was used to translate a car component. A two-hand gesture, grabbing and then moving the hands relative to each other along the circumference of a horizontal circle, was used to rotate the car. Seventeen graduate students participated in the experiments and offered evaluations and comments on gesture usability. The results indicated that the width and depth of the detection range were the key usability factors for two-hand gestures with linear motions. For dynamic gestures with quick transitions and motions from open to closed hand poses, robust gesture recognition was extremely important. Furthermore, even for a gesture with ergonomic postures, an inappropriate control-response ratio could cause fatigue, because the gesture had to be repeated many times to achieve precise control in 3D model manipulation tasks.

1 Introduction

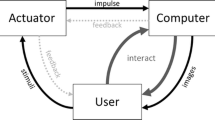

Manipulating 3D digital content through mid-air hand gestures is a new paradigm of Human-Computer Interaction. In many applications, such as interactive virtual product exhibitions in public spaces and medical image displays in surgery rooms, the tasks may include scaling, translation, and rotation of 3D components. To ensure a natural mapping between controls and displays, mid-air hand gestures for different tasks have been selected based on metaphors of physical object operations [1] or on gestures from user-elicitation studies [2]. Even when there is a consensus hand gesture type for a specific task, the performance of gesture recognition and control can still be influenced by many factors, such as the moving speed and trajectory of the hands, the occlusion of fingers due to hand pose changes and transitions, and individual differences in performing a specific hand gesture. Since the characteristics of diverse gestures can result in different challenges, it is necessary to identify the usability factors for specific gestures through experiments. Therefore, the objective of this research is to study the usability factors of hand gestures for 3D digital content manipulation.

2 Literature Review

Because mid-air hand gestures offer natural and intuitive interaction and avoid sanitation problems in public spaces, their applications have included interactive navigation systems in museums or virtual museums [3, 4], medical or surgical imaging systems [5,6,7,8], large display interactions [9], interactive public displays [10], and 3D modelling [11,12,13,14]. Based on previous research, mid-air hand gestures can be analyzed in five gesture types: pointing, semaphoric, pantomimic, iconic, and manipulation [15]. Based on the number and trajectory of hands, mid-air gestures can be classified as one-hand or two-hand, as linear or circular movements, and by the degrees of freedom of the path (1D, 2D, or 3D) [16]. Gesture vocabulary depends on the context [17, 18]. For example, the gestures for short-range human-computer interaction [19] and for TV controls [20] were reported to be different. For mid-air 3D object manipulation, natural gestures were necessary for accurate control in scaling, translation, and rotation tasks [21]. When choosing an appropriate mid-air hand gesture, it is necessary to consider the mental models of target users [22], reduce the workload [23], and increase gesture recognition robustness [24]. Although design principles can be derived from the literature, the factors that influence the perceived usability of gestures for specific tasks should be identified through experiments.

3 Experiment

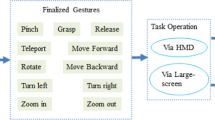

To investigate the usability factors of mid-air hand gestures for manipulating 3D virtual models, an experimental system was constructed by modifying the sample programs of the Intel RealSense 3D Camera with the Unity 3D Toolkit (Fig. 1). In a laboratory with illumination control, each participant stood at a fixed spot 240 cm in front of a 100-inch projection screen. During the experiments, the 3D virtual car model was projected on the screen. Each participant completed the tasks of scaling the car, translating the car seat, and rotating the car about the vertical axis using the designated hand gestures described below.

Among the diverse gesture types, “grab and move” and the bimanual “handle bar metaphor” were reported as intuitive gestures for object manipulation tasks [1, 2, 25]. In addition, users often preferred gestures resembling physical manipulation for wall-sized displays [26]. Therefore, a two-hand gesture, grabbing while moving the hands apart or closer together, was used to enlarge or shrink the 3D virtual car (Fig. 2). A one-hand gesture, grabbing while moving up/down, left/right, or forward/backward, was used to translate a car seat (Fig. 3). A two-hand gesture, grabbing while moving the hands relative to each other along the circumference of a horizontal circle (a handlebar metaphor), was used to rotate the car (Fig. 4). The characteristics of these gestures and the referenced literature are summarized in Table 1.
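The three mappings described above can be illustrated with a minimal sketch. It is not the authors' Unity implementation. It assumes hand positions are given as (x, y, z) tuples in metres with y as the vertical axis, and the function names and the `gain` parameter are hypothetical.

```python
import math

def scale_factor(left_start, right_start, left_now, right_now):
    """Two-hand scaling: ratio of current to initial hand separation."""
    d0 = math.dist(left_start, right_start)
    d1 = math.dist(left_now, right_now)
    if d0 == 0:
        raise ValueError("hands coincide at the start of the grab")
    return d1 / d0

def translation(hand_start, hand_now, gain=1.0):
    """One-hand translation: hand displacement times a control-response gain."""
    return tuple(gain * (b - a) for a, b in zip(hand_start, hand_now))

def yaw_angle(left_start, right_start, left_now, right_now):
    """Handlebar rotation: signed change (radians) in the heading of the
    left-to-right hand vector, projected onto the horizontal x-z plane."""
    def heading(left, right):
        return math.atan2(right[2] - left[2], right[0] - left[0])
    delta = heading(left_now, right_now) - heading(left_start, right_start)
    # Wrap the difference so that a small physical turn never appears
    # as a jump of nearly a full revolution.
    return math.atan2(math.sin(delta), math.cos(delta))

# Hands start 0.2 m apart and end 0.4 m apart, so the model doubles in size.
print(scale_factor((0, 0, 0), (0.2, 0, 0), (-0.1, 0, 0), (0.3, 0, 0)))
```

Measuring every quantity relative to the pose at the moment of grabbing means the model does not jump when a grab begins, whatever the absolute hand positions are.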

In the experiment, an Intel RealSense 3D Camera (F200) was used to extract the positions and movements of 22 joints on each hand skeleton. With the Intel RealSense SDK, basic gestures such as spread fingers and fist could be recognized (Fig. 5). Spread fingers and fist were the static gestures for an open palm and for grabbing, respectively. Therefore, the system was expected to discriminate the transition from open palm to grabbing, and vice versa.
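One common way to turn per-frame static pose labels into grab and release events is to require a pose to persist for several consecutive frames before accepting the transition. The sketch below illustrates that idea only. It does not use the Intel RealSense SDK, and the label strings `"open"` and `"fist"`, the class name, and the `hold_frames` parameter are all hypothetical.

```python
class GrabDetector:
    """Debounced open/fist state machine fed with one pose label per frame."""

    def __init__(self, hold_frames=3):
        self.hold_frames = hold_frames  # frames a new pose must persist
        self.state = "open"
        self._candidate = None
        self._count = 0

    def update(self, label):
        """Return 'grab', 'release', or None for the current frame."""
        # A frame that matches the current state, or carries an unknown
        # label (e.g. tracking lost), cancels any pending transition.
        if label not in ("open", "fist") or label == self.state:
            self._candidate, self._count = None, 0
            return None
        if label == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = label, 1
        if self._count >= self.hold_frames:
            self.state = label
            self._candidate, self._count = None, 0
            return "grab" if label == "fist" else "release"
        return None

detector = GrabDetector(hold_frames=3)
frames = ["open", "fist", "fist", "fist", "fist", "open", "fist",
          "open", "open", "open"]
events = [detector.update(label) for label in frames]
print(events)
```

In this run the single `"open"` frame in the middle of the grab is ignored, and a release is reported only after three consecutive `"open"` frames. A larger `hold_frames` suppresses more misrecognized frames at the cost of a longer response lag, which is the trade-off between recognition robustness and system lag.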

The camera was placed between the participant and the screen. Its distance from the participant was adjusted according to the participant's arm length, and its height was adjusted to the participant's elbow height.

4 Results and Discussions

Seventeen students, 7 female and 10 male, were invited to participate in the experiment. They were enrolled in either the Ph.D. program in Design Science or the Master's program in Industrial Design, and their ages ranged from 22 to 37 (mean: 26.12, standard deviation: 4.65). All participants had experience using 3D modelling software and smartphones with touch gestures. In the experiment, they were asked to use the gestures to carry out scaling, translation, and rotation tasks.

After completing each task, they were asked to evaluate the gesture on a 7-point Likert scale, indicating their degree of agreement, with respect to acceptability of performing it in public, comfort, smoothness of operation, ease of understanding, ease of remembering, informative feedback, correctness of system response, appropriate control-response ratio, and overall satisfaction (Table 2). The result of ANOVA indicated that there were significant differences in user evaluation among these usability criteria. The gesture for scaling was considered the one that needed improvement in smoothness of operation, correctness of system response, control-response ratio, and overall satisfaction. The gesture for translation yielded the lowest score in smoothness of operation. The gesture for rotation yielded the lowest score in appropriate control-response ratio.
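The text does not state which ANOVA design was used. As a generic illustration only, the sketch below computes the F statistic of a plain one-way ANOVA, treating the ratings for each criterion as one group. The function name is hypothetical, and the numbers in the usage line are toy values chosen for easy arithmetic, not data from this study. Because each participant rated every criterion, a repeated-measures analysis may be the more appropriate choice in practice.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of rating lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more ratings than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of individual ratings around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variance; F is undefined")
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Toy values only (not study data): three groups of three ratings each.
print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))  # F = 3.0, df = (2, 6)
```

A large F relative to the critical value for the given degrees of freedom indicates that the mean ratings differ among criteria more than the spread within each criterion would explain.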

Tables 3, 5, and 7 list the reported usability problems for the scaling, translation, and rotation tasks, respectively. The two-hand gestures for the scaling and rotation tasks caused more usability problems in failure of gesture recognition, lag in system response, gesture detection range, control-response ratio, and fatigue. Based on the original comments from participants, quick movements or rapid pose changes of the two hands were the major causes of system failures. Evidently, the original detection range was not wide or deep enough for the natural, linear motions of both hands. The default control-response ratio needed to be adaptive to support precise control.
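One possible reading of an "adaptive" control-response ratio is a gain that depends on hand speed: slow hand movements are scaled down for fine adjustment, and fast movements are scaled up so that large changes need fewer repetitions. The sketch below illustrates this idea under that assumption. It is not the method used or proposed in the study, and the function names, speed thresholds (in metres per second), and gain limits are all hypothetical values.

```python
def adaptive_gain(hand_speed, slow=0.05, fast=0.50, min_gain=0.5, max_gain=3.0):
    """Interpolate the gain linearly between min_gain and max_gain
    as hand speed rises from the slow to the fast threshold."""
    if hand_speed <= slow:
        return min_gain
    if hand_speed >= fast:
        return max_gain
    t = (hand_speed - slow) / (fast - slow)
    return min_gain + t * (max_gain - min_gain)

def displayed_displacement(hand_delta, dt, **gain_params):
    """Map a per-frame hand displacement (metres) over dt seconds
    to the displacement applied to the 3D model."""
    speed = abs(hand_delta) / dt
    return adaptive_gain(speed, **gain_params) * hand_delta

# A slow 1 mm move in one 30 Hz frame is halved for precision.
print(displayed_displacement(0.001, 1 / 30))
# A fast 20 mm move in one frame is amplified to cover more distance.
print(displayed_displacement(0.020, 1 / 30))
```

Clamping the gain at both ends keeps the response predictable. Suitable thresholds would have to be tuned with users, since a gain that is too high hurts precision and one that is too low brings back the repetitive exertion reported by participants.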

In addition, the participants were encouraged to propose user-defined gestures for each task. The alternative gestures are listed in Tables 4, 6, and 8. The alternative gestures for scaling (Table 4) included using one hand with a posture change (from open palm to grab or pinch), or using two hands to form two corners of a rectangular boundary and slightly adjusting the boundary size (Fig. 6). These gestures had the benefit of requiring a smaller movement range. The alternative gesture for translation was to use pinching instead of grabbing (Table 6). The alternative gestures for rotation ranged from a steering wheel metaphor and a one-hand circular movement to a more complicated gesture in which the first hand stays still as a rotation axis and the second hand moves circularly around the first hand (Table 8).

5 Conclusion

In this research, the usability factors of mid-air hand gestures for 3D virtual model manipulation were identified. The results indicated that the width and depth of the detection range were the key factors for two-hand gestures with linear motions. For dynamic gestures with quick transitions and motions from open to closed hand poses, robust gesture recognition was extremely important. Furthermore, even for a gesture with ergonomic postures, an inappropriate control-response ratio could cause fatigue, because the gesture had to be repeated many times to achieve precise control in 3D model manipulation tasks. These results could inform teams developing vision-based mid-air hand gestures and serve as a checklist for gesture evaluation.

References

[1] Song, P., Goh, W.B., Hutama, W., Fu, C., Liu, X.: A handle bar metaphor for virtual object manipulation with mid-air interaction. In: Proceedings of the 2012 ACM Annual Conference on Human Factors in Computing Systems - CHI 2012 (2012). doi:10.1145/2207676.2208585

[2] Groenewald, C., Anslow, C., Islam, J., Rooney, C., Passmore, P., Wong, W.: Understanding 3D mid-air hand gestures with interactive surfaces and displays: a systematic literature review. In: Proceedings of the 30th International BCS Human Computer Interaction Conference (BCS HCI 2016), 11-15 July 2016. Bournemouth University, Poole. doi:10.14236/ewic/hci2016.43

[3] Hsu, F., Lin, W.: A multimedia presentation system using a 3D gesture interface in museums. Multimed. Tools Appl. 69(1), 53–77 (2012). doi:10.1007/s11042-012-1205-y

[4] Caputo, F.M., Ciortan, I.M., Corsi, D., De Stefani, M., Giachetti, A.: Gestural interaction and navigation techniques for virtual museum experiences. Zenodo (2016). http://doi.org/10.5281/zenodo.59882

[5] O’Hara, K., Gonzalez, G., Sellen, A., Penney, G., Varnavas, A., Mentis, H., Criminisi, A., Corish, R., Rouncefield, M., Dastur, N., Carrell, T.: Touchless interaction in surgery. Commun. ACM 57(1), 70–77 (2014)

[6] Rosa, G.M., Elizondo, M.L.: Use of a gesture user interface as a touchless image navigation system in dental surgery: case series report. Imaging Sci. Dentistry 44(2), 155 (2014). doi:10.5624/isd.2014.44.2.155

[7] Rossol, N., Cheng, I., Shen, R., Basu, A.: Touchfree medical interfaces. In: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (2014). doi:10.1109/embc.2014.6945140

[8] Hettig, J., Mewes, A., Riabikin, O., Skalej, M., Preim, B., Hansen, C.: Exploration of 3D medical image data for interventional radiology using myoelectric gesture control. In: Eurographics Workshop on Visual Computing for Biology and Medicine (2015)

[9] Chattopadhyay, D., Bolchini, D.: Understanding visual feedback in large-display touchless interactions: an exploratory study. [Research Report] IUPUI Scholar Works, Indiana University (2014)

[10] Ackad, C., Clayphan, A., Tomitsch, M., Kay, J.: An in-the-wild study of learning mid-air gestures to browse hierarchical information at a large interactive public display. In: Ubicomp 2015, 7-11 September 2015, Osaka, Japan (2015)

[11] Vinayak, Ramani, K.: A gesture-free geometric approach for mid-air expression of design intent in 3D virtual pottery. Comput. Aided Des. 69, 11–24 (2015). doi:10.1016/j.cad.2015.06.006

[12] Vinayak, Ramani, K.: Extracting hand grasp and motion for intent expression in mid-air shape deformation: a concrete and iterative exploration through a virtual pottery application. Comput. Graph. 55, 143–156 (2016). doi:10.1016/j.cag.2015.10.012

[13] Cui, J., Fellner, D.W., Kuijper, A., Sourin, A.: Mid-air gestures for virtual modeling with leap motion. In: Streitz, N., Markopoulos, P. (eds.) DAPI 2016. LNCS, vol. 9749, pp. 221–230. Springer, Cham (2016). doi:10.1007/978-3-319-39862-4_21

[14] Nakazato, K., Nishino, H., Kodama, T.: A desktop 3D modeling system controllable by mid-air interactions. In: 2016 10th International Conference on Complex, Intelligent, and Software Intensive Systems (CISIS) (2016). doi:10.1109/cisis.2016.80

[15] Aigner, R., Wigdor, D., Benko, H., Haller, M., Lindlbauer, D., Ion, A., Zhao, S., Koh, J.: Understanding mid-air hand gestures: a study of human preferences in usage of gesture types for HCI. Microsoft Research Technical report MSR-TR-2012-111 (2012). http://research.microsoft.com/apps/pubs/default.aspx?id=175454

[16] Nancel, M., Wagner, J., Pietriga, E., Chapuis, O., Mackay, W.: Mid-air pan-and-zoom on wall-sized displays. In: Proceedings of the 2011 Annual Conference on Human Factors in Computing Systems - CHI 2011 (2011). doi:10.1145/1978942.1978969

[17] LaViola, Jr., J.J.: 3D gestural interaction: the state of the field. ISRN Artif. Intell., Article ID 514641 (2013)

[18] Pisharady, P.K., Saerbeck, M.: Recent methods and databases in vision-based hand gesture recognition: a review. Comput. Vis. Image Underst. 141, 152–165 (2015). Pose & Gesture

[19] Pereira, A., Wachs, J.P., Park, K., Rempel, D.: A user-developed 3-d hand gesture set for human-computer interaction. Hum. Factors 57(4), 607–621 (2015). doi:10.1177/0018720814559307

[20] Choi, E., Kim, H., Chung, M.K.: A taxonomy and notation method for three-dimensional hand gestures. Int. J. Ind. Ergon. 44(1), 171–188 (2014). doi:10.1016/j.ergon.2013.10.011

[21] Mendes, D., Relvas, F., Ferreira, A., Jorge, J.: The benefits of DOF separation in mid-air 3D object manipulation. In: Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology - VRST 2016 (2016). doi:10.1145/2993369.2993396

[22] Cui, J., Kuijper, A., Fellner, D.W., Sourin, A.: Understanding people’s mental models of mid-air interaction for virtual assembly and shape modeling. In: Proceedings of the 29th International Conference on Computer Animation and Social Agents - CASA 2016 (2016). doi:10.1145/2915926.2919330

[23] Nunnari, F., Bachynskyi, M., Heloir, A.: Introducing postural variability improves the distribution of muscular loads during mid-air gestural interaction. In: Proceedings of the 9th International Conference on Motion in Games - MIG 2016 (2016). doi:10.1145/2994258.2994278

[24] Smedt, Q.D., Wannous, H., Vandeborre, J.: Skeleton-based dynamic hand gesture recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) (2016). doi:10.1109/cvprw.2016.153

[25] Fonseca, F., Ferreira, A., Mendes, D., Jorge, J., Araújo, B.: 3D mid-air manipulation techniques above stereoscopic tabletops. In: ISIS3D Workshop in Conjunction with ITS 2013, 6 October 2013, Scotland, UK (2013)

[26] Wittorf, M.L., Jakobsen, M.R.: Eliciting mid-air gestures for wall-display interaction. In: Proceedings of the 9th Nordic Conference on Human-Computer Interaction - NordiCHI 2016 (2016). doi:10.1145/2971485.2971503

Acknowledgement

The authors would like to express their gratitude to the Ministry of Science and Technology of the Republic of China for financially supporting this research under Grant No. MOST 105-2221-E-036-009.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Chen, LC., Cheng, YM., Chu, PY., Sandnes, F.E. (2017). Identifying the Usability Factors of Mid-Air Hand Gestures for 3D Virtual Model Manipulation. In: Antona, M., Stephanidis, C. (eds) Universal Access in Human–Computer Interaction. Designing Novel Interactions. UAHCI 2017. Lecture Notes in Computer Science(), vol 10278. Springer, Cham. https://doi.org/10.1007/978-3-319-58703-5_29

DOI: https://doi.org/10.1007/978-3-319-58703-5_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58702-8

Online ISBN: 978-3-319-58703-5

eBook Packages: Computer Science, Computer Science (R0)