Abstract

Saving energy, and thereby reducing the Total Cost of Ownership (TCO) of High Performance Computing (HPC) data centers, has attracted increasing attention in light of rising energy costs and the technical hurdles of powering multi-MW data centers. The broadest impact on data center energy efficiency comes from techniques that do not require application-specific tuning. Improving the Power Usage Effectiveness (PUE), for example, benefits everything that happens in a data center. Narrower in scope, but still broader than tuning individual applications, is improving the energy efficiency of the HPC system itself. One property of homogeneous HPC systems that has not been considered so far is the existence of node power variation.
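For reference, PUE is commonly defined (by The Green Grid and ISO/IEC 30134-2, not by this paper) as the ratio of total facility energy to the energy delivered to IT equipment over the same period. A value of 1.0 would mean zero cooling and power-distribution overhead. The figures in the worked example are illustrative only.

```latex
\mathrm{PUE} = \frac{E_{\text{total facility}}}{E_{\text{IT equipment}}}
\qquad\text{e.g.}\qquad
\frac{1.5\,\text{MWh}}{1.2\,\text{MWh}} = 1.25
```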

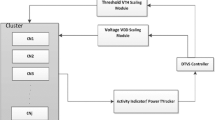

This paper discusses existing node power variation in two HPC systems. It introduces three energy-saving techniques that take advantage of this variation (node power aware scheduling, node power aware system partitioning, and node ranking based on power variation) and quantifies the possible savings of each. It shows that node power aware system partitioning and node ranking based on power variation save energy with minimal effort over the lifetime of the system. All three techniques are also relevant to distributed and cloud environments.
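The abstract names the three techniques but does not describe how nodes are ranked or assigned. The following is a minimal sketch, not the authors' method, assuming each node's average power has already been measured under an identical benchmark load and that lower measured power is preferred. All function names and the sample wattages are hypothetical.

```python
from __future__ import annotations

from statistics import mean


def rank_nodes_by_power(node_power_w: dict[str, float]) -> list[str]:
    """Order node names from lowest to highest measured power.

    Ties are broken by node name so the ranking is deterministic.
    """
    return sorted(node_power_w, key=lambda node: (node_power_w[node], node))


def select_nodes(node_power_w: dict[str, float], count: int) -> list[str]:
    """Hypothetical power-aware scheduling policy: pick the `count`
    lowest-power nodes (assumes all nodes are currently free)."""
    ranking = rank_nodes_by_power(node_power_w)
    if count > len(ranking):
        raise ValueError(f"requested {count} nodes, only {len(ranking)} exist")
    return ranking[:count]


def partition_by_power(node_power_w: dict[str, float],
                       sizes: list[int]) -> list[list[str]]:
    """Hypothetical power-aware partitioning: fill partitions in the given
    order, so the first (e.g. busiest) partition gets the lowest-power nodes."""
    ranking = rank_nodes_by_power(node_power_w)
    if sum(sizes) > len(ranking):
        raise ValueError("partition sizes exceed the number of nodes")
    partitions, start = [], 0
    for size in sizes:
        partitions.append(ranking[start:start + size])
        start += size
    return partitions


def estimated_saving_w(node_power_w: dict[str, float], count: int) -> float:
    """Power saved by `select_nodes` compared with the expected power of
    `count` nodes drawn at random (i.e. `count` times the fleet mean)."""
    chosen = select_nodes(node_power_w, count)
    return count * mean(node_power_w.values()) - sum(node_power_w[n] for n in chosen)
```

With hypothetical measurements `{"n01": 310.0, "n02": 295.0, "n03": 330.0, "n04": 300.0}`, `select_nodes(power, 2)` returns `["n02", "n04"]` and `estimated_saving_w(power, 2)` returns `22.5` (watts). In this sketch the ranking is computed once from static measurements, which is one way such techniques could keep per-job overhead low; real savings depend on how large and how stable the measured variation is.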



Acknowledgments

The authors would like to thank Jeanette Wilde (LRZ) for her valuable comments and support.

The work presented here has been carried out within the SIMOPEK project, which has received funding from the German Federal Ministry for Education and Research under grant number 01IH13007A, at the Leibniz Supercomputing Centre (LRZ) with support of the State of Bavaria, Germany, and the Gauss Centre for Supercomputing (GCS).

The EPOCH code used in this research was developed under UK Engineering and Physical Sciences Research Council grants EP/G054940/1, EP/G055165/1 and EP/G056803/1.


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Wilde, T., Auweter, A., Shoukourian, H., Bode, A. (2015). Taking Advantage of Node Power Variation in Homogenous HPC Systems to Save Energy. In: Kunkel, J., Ludwig, T. (eds) High Performance Computing. ISC High Performance 2015. Lecture Notes in Computer Science, vol 9137. Springer, Cham. https://doi.org/10.1007/978-3-319-20119-1_27


DOI: https://doi.org/10.1007/978-3-319-20119-1_27


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20118-4

Online ISBN: 978-3-319-20119-1

eBook Packages: Computer Science, Computer Science (R0)