Abstract

Accumulated research suggests that narrowing instructional quality gaps can improve educational equity and the well-being of children from different social and economic backgrounds. Considering that disparities in instructional quality may affect educational inequality across regions in China, this study explored how teaching quality varied across 30 primary English lessons in an economically disadvantaged province in China. The study adopted a mixed-method strategy: quantitative classroom observation data were used to select four lessons contrasting in teaching quality for subsequent qualitative analysis that explored classroom processes in depth. Using two internationally validated classroom observation instruments, ICALT and TEACH, added a further dimension by allowing us to examine how the characteristics of instruments might influence perceived instructional quality. Results revealed that while both high-inference instruments were theoretically comparable in distinguishing teaching quality, only ICALT predicted learner engagement. While quantitative instruments could not provide detailed accounts of classroom processes, qualitative accounts of the four lessons could uncover the deep relationships between teacher-student interactions and differences in instructional quality. These findings suggest that conceptually similar instruments may vary in predictive power and that systematic qualitative analysis is indispensable in complementing high-inference instruments to provide an objective teacher evaluation.

1 Introduction

In the last decade, economically poorer regions worldwide, including inland provinces in China, have received considerable financial support from governmental and non-governmental organisations for building schools and equipping them with teaching and learning facilities to guarantee pupils’ schooling. Sammons (2007) identified strong links between school education effectiveness and educational equity and concluded that teachers exert a substantially more significant effect on children than schools, and that educational effectiveness varies more at the class level.

Quite a few studies have investigated educational inequalities in China, especially in underprivileged areas, from different perspectives such as educational financing (e.g., Li et al., 2007; Tsang & Ding, 2005), gender (e.g., Hannum, 2005; Zeng et al., 2014), poverty (e.g., Heckman & Yi, 2012; Zhang, 2017; Yang et al., 2009), ethnicity (e.g., Hannum et al., 2008, 2015), and urbanisation (e.g., Qian & Smyth, 2008; Yang et al., 2014). Unsurprisingly, educational inequalities in China were found to have narrowed significantly, with the adverse effects largely mitigated. However, the influence of these factors still exists.

In addition to these factors outside the classroom, classroom teaching quality directly impacts students’ learning effectiveness. Given the significant role of classroom teaching practices in achieving greater educational equity (Sammons, 2007), a research gap lies in the lack of lesson observation evidence on the quality of classroom teaching in underprivileged areas of China. Furthermore, the rapid development of Chinese society in recent years means that studies quickly become outdated. A lack of timely research prevents audiences’ knowledge of the education situation from keeping pace with reality. This study explored educational inequality at the classroom teaching level from a teaching effectiveness perspective in a disadvantaged province in China. Using two classroom observation instruments, ICALT (Van de Grift, 2007) and TEACH (World Bank, 2019), we explored the instructional quality gaps between example lessons and how the perceived instruction differed in learning and teaching interactions.

2 Literature Review

2.1 Teaching Quality in Developing Countries and Underdeveloped Regions

Factors affecting students’ outcomes at the classroom level have received more attention than factors at the school level in educational effectiveness research (Muijs et al., 2014). Knowledge of effective teaching practice at the classroom level is crucial for enhancing teacher capability to develop agile differentiated instruction strategies for diverse learners’ needs (Edwards et al., 2006). Although strenuous efforts have been made to probe into teaching quality in classrooms, studies across developed and developing countries remain insufficient. The Organisation for Economic Cooperation and Development’s PISA 2018 project (OECD, 2019), which evaluated the academic performance of junior secondary students worldwide, involved only two developing countries/regions among the 30 participating countries/regions.

We generally lack knowledge of classroom-level teaching quality in developing countries/regions except for a few notable empirical studies. For example, Chiangkul (2016) claimed that insufficient capability in the knowledge and teaching skills of the younger Thai teachers was evident in the Trends in International Mathematics and Science Study (TIMSS) 2015. In South Africa and Botswana, teachers were found to lack knowledge about combining practical pedagogical skills with subject content (Sapire & Sorto, 2012). In rural Guatemala, Marshall and Sorto (2012) found that teaching practice in mathematics classrooms adopted less complex pedagogical skills than in developed countries like Japan, America and Germany. Similarly, teaching quality in China varies province by province, and inland provinces are at a noticeable disadvantage in recruiting talented teachers. Moreover, the teaching capability of rural schoolteachers was generally lower than that of urban teachers, resulting in a remarkable gap between rural and urban schools in West China (Wang & Li, 2009). Thus, understanding teaching effectiveness in rural regions of economically disadvantaged provinces in China would contribute to strategies to promote educational quality and equity for children in these regions in the future.

2.2 Classroom Observation and Comparison of Instruments

Studies of student academic outcomes contribute significantly to our understanding of classroom effectiveness, but the specific processes are not articulated (Pianta et al., 2008). The invention of classroom observation instruments provides a powerful approach for probing into classroom reality. It is seen as a more just form of data collection to examine teachers’ behaviours (Pianta et al., 2008). Classroom observation used to be limited to teacher appraisal, lesson evaluation, the professional development of novice teachers and the identification of expert teachers among experienced teachers, but it has become popular as research interest in the classroom-level teaching process has increased (Wragg, 2013). Systematic classroom observation allows teachers to compare specific predetermined and agreed categories of behaviour and practice, which originated in teacher effectiveness research (Muijs & Reynolds, 2005).

Lesson videos of classroom teaching practice could be another observation form that provides researchers with a window to explore what happens in classrooms (Sapire & Sorto, 2012). For teaching analysis, video data was first used in the TIMSS 1995 video study by Stigler et al. (1999). Video recordings allow raters to slow down, pause, replay and re-interpret teaching practice, and capture complex teaching paths (Erickson, 2011; Jacobs et al., 1999; Klette, 2009). Furthermore, recorded teaching practice makes visual representation possible for researchers to capture anticipated details of classrooms that may escape their gaze (Lesh & Lehrer, 2000; Tee et al., 2018).

A few observation instruments were developed to evaluate teachers’ actual teaching processes and their contribution to student achievements. For exploring the generic pedagogic capability of teachers, these observational tools include the Framework for Teaching (Danielson, 1996), the International System for Teacher Observation and Feedback (Teddlie et al., 2006), the International Comparative Analysis of Learning and Teaching (ICALT) (Van de Grift, 2007), the Classroom Assessment Scoring System (CLASS) (Pianta et al., 2008), and the TEACH (World Bank, 2019). Some assess specific competencies, such as classroom talk (Mercer, 2010) and project-based learning (Stearns et al., 2012). Instruments for subject-specific pedagogies are available to researchers as well, such as English reading (Gersten et al., 2005), mathematical instruction (Schoenfeld, 2013) and historical contextualisation (Huijgen et al., 2017).

For instrument application, scholars compared different instruments for STEM classrooms in post-secondary education (Anwar & Menekse, 2021), mathematics and science classrooms in secondary education (Boston et al., 2015; Marshall et al., 2011) and preservice teacher internships (Caughlan & Jiang, 2014; Henry et al., 2009). However, no instrument comparison study based on English as a second language classrooms in primary education was found, although such a study could contribute to essential education quality improvement in developing countries.

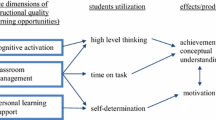

In the present study that compared ICALT and TEACH, we identified two issues in our careful comparisons of the two instruments. First, theoretically speaking, the two instruments are conceptually similar. The teaching behaviours under the Classroom Culture domain of TEACH are conceptually similar to the behavioural indicators of the Safe and Stimulating Learning Climate and Efficient Organisation domains of ICALT (Van de Grift, 2007). Similarly, the Socioemotional Skills domain of TEACH is conceptually comparable to the Intensive and Activating Teaching domain of ICALT. The Instruction domain of TEACH is similar to ICALT’s Clear and Structured Instructions, Adjusting Instructions and Learner Processing to Inter-Learner Differences and Teaching Learning Strategies domains.

Inspectors initially developed ICALT to study primary classrooms in England and the Netherlands. ICALT was then used as a research tool to compare teaching practices in developed and developing countries (Maulana et al., 2021). In contrast, TEACH was developed as a system diagnostic and monitoring tool of teaching practices at the primary school level to foster professional development in low- and middle-income countries (Molina et al., 2018). Thus, the difference in scale development would be, theoretically and methodologically, critical if TEACH is more suitable for developing regions or countries than ICALT. For example, it is unlikely that catering for learner diversity is considered essential in developing countries where access to free education is challenging. Maulana et al. (2021) have shown that teaching behaviours associated with differentiation could be country-specific rather than universal.

Second, it is less difficult to conduct classroom observation with TEACH in practice than ICALT. ICALT was designed to observe whether teachers adjust teaching according to the level of students, but ICALT also emphasises stimulating students with weak learning abilities to build self-confidence. This teaching behaviour reflects a higher teaching skill of teachers. Kyriakides et al. (2009) found that teacher behaviours varied distinctively in difficulty levels, and it is not uncommon that teachers cannot master some advanced teaching skills even after professional training. Similarly, Ko et al. (2015) found that while teachers in Guangzhou were found performing better than Hong Kong teachers in many aspects of perceived teaching quality, Hong Kong teachers did better in catering for learner diversity because Hong Kong has practised an inclusive education policy for nearly two decades.

2.3 Qualitative In-Depth Lesson Analysis from a Dialogic Teaching Perspective

Apart from the dominant quantitative teacher effectiveness research, a steadily growing body of research has investigated learning and teaching from a qualitative perspective on dialogic teaching in recent decades (Howe & Mercer, 2017; Vrikki et al., 2019), regarding dialogic teaching as vital to student learning outcomes (Alexander, 2006; Howe et al., 2019). Alexander (2008) proposed dialogic teaching as a learning process that encourages students to develop their higher-order thinking through reasoning, discussing, arguing, and explaining. Dialogic teaching is believed to have two main types, teacher-student interaction and student-student interaction (Howe & Abedin, 2013), with five core principles: collective, reciprocal, supportive, cumulative and purposeful (Alexander, 2008).

Hennessy et al. (2016) introduced a coding approach, the Scheme for Educational Dialogue Analysis (SEDA), for conducting qualitative in-depth lesson analysis to characterise and analyse classroom dialogues. It is considered a practical approach to evaluating how high-quality interaction is productive for learning (Hennessy et al., 2020), and has become quite prevalent in recent years (Song et al., 2019). For example, Shi et al. (2021), informed by SEDA’s condensed version, the Cambridge Dialogue Analysis Scheme (CDAS) (Vrikki et al., 2019), successfully modified SEDA to make it more suitable for their data set.

3 Research Questions

Based on the above background and consideration, the objective of this study is to answer the following research questions:

1. How were teaching practices rated using different classroom instruments (i.e., ICALT and TEACH) in the same lessons?

(a) In what aspects did the ratings look similar based on the two observation instruments?

(b) How did the ratings show more variation based on the two observation instruments?

2. To what extent could the above differences be identified in an in-depth qualitative analysis of four purposively selected lessons?

4 Method

This study adopted a sequential quantitative-qualitative research strategy to probe into the links and differences between two instructional quality assessment instruments, TEACH and ICALT. Classroom observation was used to explore teachers’ teaching quality and teacher-student interactions.

4.1 Samples

This study involved 20 primary schools in an underprivileged province in China in two different districts (one city/urban and one county/rural). Among these twenty schools, eleven schools were from the rural area, and nine were from the urban area. Thirty English teachers (one lesson per teacher) randomly selected from the sample schools participated in this study. The data collection was conducted with a third party that targeted primary school teachers whose teaching experience was more than two years and less than eight years. Hence, we controlled the teaching experience of participants by excluding teachers with less than two years or more than eight years.

Thirty lessons (one lesson per teacher) were recorded and observed by a well-trained rater with instruments to obtain quantitative data. Then, four lessons were selected for in-depth qualitative analysis.

4.2 Instruments

Classroom observation instruments are often assumed to study similar teaching characteristics, so they are expected to be comparable (Ko, 2010). ICALT (Van de Grift, 2007) and TEACH (World Bank, 2019) are two internationally validated classroom observation instruments on generic teaching behaviours. Analysis of this study focuses on high-inference indicators of these two instruments.

4.2.1 ICALT

The ICALT instrument (Van de Grift, 2007) assesses classroom teaching behaviours and is divided into three parts. The core part has 32 behavioural indicators, each evaluated on a four-point scale to determine the relative strength and effectiveness of a teaching behaviour (i.e., 1 = mostly weak; 2 = more often weak than strong; 3 = more often strong than weak; 4 = mostly strong). Four to ten behavioural indicators are grouped in each of the six primary domains in the instrument: Safe and Stimulating Learning Climate, Efficient Organisation, Clarity and Structure of Instruction, Intensive and Activating Teaching, Adjusting Instructions and Learner Processing to Inter-Learner Differences, and Teaching Learning Strategies. The second part comprises 115 observable teaching behaviours, with 3–10 matching each behavioural indicator in the core part. For example, ‘The teacher lets learners finish their sentences,’ ‘The teacher listens to what learners have to say,’ and ‘The teacher does not make role stereotyping remarks’ are corresponding teaching behaviours for the first indicator, ‘The teacher shows respect for learners in his/her behaviour and language’. Before giving a score for a behavioural indicator, a rater should determine whether the associated teaching behaviours are observed during the lesson. A teaching behaviour is scored 1 whenever it is observed and 0 if it is not. This part of ICALT makes the instrument quite different from many other instruments (e.g., the Classroom Assessment Scoring System by Pianta et al., 2008; Pianta & Hamre, 2009) because a rater is expected to judge the effectiveness of a teaching indicator on the grounds of a set of observed teaching behaviours. The last part of ICALT includes three behavioural indicators for learner engagement and ten associated learning behaviours, evaluated on 4-point and 2-point scales respectively.

4.2.2 TEACH

TEACH is a validated classroom observation tool developed by the World Bank (2019), applicable to Grade 1–6 classrooms in primary schools. It aims to promote teaching quality improvement in disadvantaged nations. Raters of this instrument showed high inter-rater reliability (Molina et al., 2018). This instrument offers a unique window into some seldom investigated but weighty domains of class-level teaching and learning experiences. The Time on Task component requires observers to record, in three ‘snapshots’ of 1–10 seconds, whether teachers provide most students with learning activities and how many students are on task. Classroom Culture, Instruction, and Socioemotional Skills are the three domains of the Quality of Teaching Practice component, which comprise nine corresponding indicators that point to 28 teaching behaviours. Based on what is observed, observers rate each behaviour item on a three-level scale, ‘high’, ‘medium’ and ‘low’, equal to ‘definitely having this behaviour’, ‘somewhat having this behaviour’ and ‘only having opposite behaviour’ respectively. It should be noted that four behaviour items can be marked as ‘N/A’ if they do not occur in the classroom. By matching its corresponding behavioural ratings, each indicator is scored on a five-point scale, ranging from 1 to 5 (‘1’ is the lowest and ‘5’ is the highest).

4.2.3 Comparison of ICALT and TEACH

Through careful comparisons at the level of behavioural indicators, it was found that the teaching behaviours under the Classroom Culture domain of TEACH correspond to the behavioural indicators of the Safe and Stimulating Learning Climate and Efficient Organisation domains of ICALT (Van de Grift, 2007). Similarly, the Socioemotional Skills domain of TEACH corresponds to the Intensive and Activating Teaching domain of ICALT. The Instruction domain of TEACH corresponds to ICALT’s Clear and Structured Instructions, Adjusting Instructions and Learner Processing to Inter-Learner Differences and Teaching Learning Strategies domains. It is less difficult to conduct classroom observation with TEACH than ICALT. As mentioned earlier, while ICALT and TEACH could be used to observe whether teachers adjust teaching according to student abilities, the Adjusting Instructions and Learner Processing to Inter-Learner Differences domain in ICALT also emphasises stimulating students with weak learning abilities to build self-confidence. This domain reflects a higher level of teaching skills of teachers.

However, as a specific classroom observation instrument for teacher evaluation in primary schools in underdeveloped countries, TEACH is a better choice for in-depth qualitative analysis on dialogic teaching with its official training manual (World Bank, 2019), providing clear definitions on teaching behaviour items and detailed guidance for observer training. All teaching behaviour indicators in TEACH have unified official inspection standards, ensuring the reliability of coding scheme building and the in-depth qualitative dialogue analysis process and results. Accordingly, a new qualitative coding scheme, TEACH Tool for Lesson Analysis (TTLA), was developed based on the TEACH manual and partially summarised in Table 7.1.

4.3 Raters

The first author served as a research assistant in a commissioned impact study in which she collected all videos while she observed, recorded and rated with TEACH all the lessons onsite. Then, she reviewed the lesson videos with ICALT again within a month. The rater held a master’s degree with considerable lesson observation experience after taking TEACH and ICALT training workshops. The first author evaluated the same lesson videos with two instruments in the workshops and conducted a comparison and discussion afterwards. Then the raters launched the second and third rounds of lesson video evaluation practice. An additional rater was employed to ensure better consistency on inter-rater reliability concerns. The rater informed teachers only one night before the observation to prevent teachers from preparing perfect teaching in advance. All 30 classrooms were recorded with a camera to enable later transcripts on teaching practice and in-depth coding of teaching behaviours.

4.4 Data Collection

4.4.1 Quantitative Rating

A total of thirty English lessons were observed. Quantitative analysis was conducted with SPSS 20 to compare the perceived instructional quality of the same classrooms across different aspects of the two classroom observation instruments, TEACH and ICALT, and to determine which instrument could better predict student engagement. As z-score averages were provided in the official manual of TEACH (World Bank, 2019), selecting lessons for comparison based on those averages would provide objective ground beyond the present study. Two ‘weak’ lessons (Lesson 1, z = −1.52; Lesson 2, z = −0.96) and two ‘strong’ lessons (Lesson 3, z = 1.24; Lesson 4, z = 2.62) were eventually selected for in-depth qualitative analyses to explore variations in the evaluations of teaching quality with different instruments (see Table 7.1).
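As an illustration of this selection step, the following minimal Python sketch standardises overall lesson scores and picks the lowest- and highest-scoring lessons. It is a sketch under stated assumptions rather than the procedure used in the study: the function names, the option of supplying a reference mean and standard deviation (for example, averages taken from a manual), and the six example scores are all hypothetical.

```python
from statistics import mean, stdev


def z_scores(scores, ref_mean=None, ref_sd=None):
    """Standardise scores as z = (x - mean) / SD.

    If no reference values are supplied, the sample's own mean and
    sample SD (n - 1 denominator) are used. Which reference the study
    used is an assumption here.
    """
    m = mean(scores) if ref_mean is None else ref_mean
    sd = stdev(scores) if ref_sd is None else ref_sd
    return [(x - m) / sd for x in scores]


def select_contrastive(lesson_ids, scores, n_each=2, **ref):
    """Return the n_each weakest and n_each strongest lessons by z-score."""
    ranked = sorted(zip(lesson_ids, z_scores(scores, **ref)),
                    key=lambda pair: pair[1])
    return ranked[:n_each], ranked[-n_each:]


# Hypothetical overall scores for six lessons (not the study's data).
ids = ["A", "B", "C", "D", "E", "F"]
overall = [2.1, 3.4, 2.8, 1.9, 3.9, 3.0]
weak, strong = select_contrastive(ids, overall)
print("weak:", [lesson for lesson, _ in weak])
print("strong:", [lesson for lesson, _ in strong])
```

Selecting from both tails of the distribution, rather than only the extremes of one instrument's raw scale, keeps the contrast between 'weak' and 'strong' lessons interpretable against a common reference.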

4.4.2 Qualitative Coding

In-depth qualitative analyses were performed based on the teaching behaviour definitions in the TEACH manual for better validity. TTLA was employed to code the teaching behaviours of the four selected lessons. The teaching activities and teacher-student interactions of each sample lesson illustrated teaching practices more specifically than quantitative ratings.

5 Results

5.1 Quantitative Analyses of All Lessons

All TEACH and ICALT factors were standardised for quantitative analyses because the two instruments use different scales. Due to the small sample size, only one regression model was tested using SPSS 20.0, predicting learner engagement in ICALT from the overall scores of both TEACH and ICALT.

Table 7.2 presents the mean, standard deviation, and reliability (alpha and omega) of the factors in the two instruments. We include both McDonald’s omega (McDonald, 2013) and Cronbach’s alpha (1951), as the former is considered more suitable regardless of the number of items within a factor. The results indicated that the two values do not differ much. The table also presents the descriptive statistics of the overall scores and shows good item consistency for all nine items in TEACH (α = 0.82) and all 32 items in ICALT (α = 0.932). Due to the limited number of items in each TEACH factor, internal consistency is low for Socioemotional Skills (α = 0.483). In ICALT, the Adjusting Instructions and Learner Processing to Inter-Learner Differences domain (α = 0.361) and the Teaching Learning Strategies domain (α = 0.599) also show low reliabilities.
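For readers who wish to see how an alpha coefficient of this kind is obtained, the sketch below implements the standard Cronbach's alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), in plain Python. The study itself used SPSS; the function name and the example ratings here are hypothetical and serve only to illustrate the computation.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a factor.

    items: one list of scores per item, all in the same lesson order.
    Uses sample variances (n - 1 denominator) throughout.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_var_sum = sum(variance(item) for item in items)
    # Total score per lesson is the sum across items.
    totals = [sum(row) for row in zip(*items)]
    return (k / (k - 1)) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings: three items scored for four lessons.
consistent = [[1, 2, 3, 4], [2, 3, 4, 5], [1, 2, 3, 4]]
unrelated = [[1, 2, 1, 2], [1, 1, 2, 2]]
print(round(cronbach_alpha(consistent), 3))
print(round(cronbach_alpha(unrelated), 3))
```

The formula also makes the pattern in the text visible: with few items per factor, the factor k/(k − 1) has little to work with unless the items covary strongly, which is one reason short subscales tend to show lower alpha.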

Spearman’s rho correlation coefficients between TEACH and ICALT factors are presented in Table 7.3. There are strong positive correlations among the three TEACH factors, while the ICALT domain Adjusting Instructions and Learner Processing to Inter-Learner Differences does not significantly correlate with other ICALT domains. Learner engagement was significantly correlated with most factors in both TEACH and ICALT, except for the Adjusting Instructions and Learner Processing to Inter-Learner Differences domain in ICALT.
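A rank-based coefficient suits ordinal observation ratings because it depends only on the ordering of lessons. As a minimal sketch of the computation (not the SPSS routine used in the study), Spearman's rho can be obtained by converting each variable to ranks, with tied values sharing their mean rank, and then computing Pearson's correlation on the ranks. The function names and example values are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 (smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rho as Pearson's r computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical domain means for five lessons under two instruments.
teach_domain = [2.5, 3.0, 1.75, 3.75, 2.0]
icalt_domain = [2.3, 3.1, 1.9, 3.6, 2.2]
print(round(spearman_rho(teach_domain, icalt_domain), 3))
```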

Given the limited number of participants, only one regression model, using the overall scores of TEACH and ICALT to predict learner engagement, could be tested (see Table 7.4). Results show that only the ICALT score significantly predicted learner engagement, F(2, 27) = 29.92, p < .001, R² = 0.83.
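The degrees of freedom of the overall F test follow from the design: with k predictors and n cases, df1 = k and df2 = n − k − 1, so two predictors and 30 lessons give (2, 27). The sketch below shows the standard relation between the overall F statistic and R² in an ordinary least squares model with an intercept. The function names are illustrative and the numerical values are hypothetical, not taken from Table 7.4.

```python
def f_from_r_squared(r_squared, n, k):
    """Overall F for an OLS model with intercept, k predictors, n cases.

    Returns (F, df1, df2) with df1 = k and df2 = n - k - 1.
    """
    df1, df2 = k, n - k - 1
    f_value = (r_squared / df1) / ((1 - r_squared) / df2)
    return f_value, df1, df2


def r_squared_from_f(f_value, df1, df2):
    """Invert the relation above to recover R-squared from F."""
    return (f_value * df1) / (f_value * df1 + df2)


# Hypothetical example: R-squared of 0.5 with 30 cases and 2 predictors.
f_value, df1, df2 = f_from_r_squared(0.5, n=30, k=2)
print(f"F({df1}, {df2}) = {f_value:.2f}")
print(round(r_squared_from_f(f_value, df1, df2), 3))
```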

5.2 Comparisons of ICALT and TEACH Results of the Selected Four Lessons

As shown in Table 7.5, LESSON 1 and LESSON 2 were relatively weak, with lower means in both the individual domains and the overall scores, while LESSON 3 and LESSON 4 were high-quality lessons. The standard deviations of the ICALT averages (Table 7.5) were observably lower than those of TEACH, indicating that ratings varied more when TEACH was used for observation.

At the domain level, the TEACH results show that LESSON 1 has a much lower mean in the Instruction domain (M = 1.75) but slightly higher means in the Classroom Culture (M = 2.5) and Socioemotional Skills (M = 2.33) domains than LESSON 2 (M = 2.75, 2.0, 2.0 respectively). However, the ICALT results show that LESSON 1 scored a much higher mean in the Safe and Stimulating Learning Climate domain (M = 2.25), a slightly higher mean in the Intensive and Activating Teaching domain (M = 1.57), and a slightly lower mean in the Clear and Structured Instructions domain (M = 2.29) than LESSON 2. It is worth noting that the ICALT rankings of these two less effective lessons are higher than their TEACH rankings. LESSON 1 ranks last in TEACH but 22nd out of 30 in ICALT. LESSON 2 ranks 28th in TEACH, above LESSON 1, and 26th in ICALT, below LESSON 1.

Regarding the two more effective lessons, the means of LESSON 3 in the Instruction (M = 3.0) and Socioemotional Skills (M = 3.0) domains are considerably lower than those of LESSON 4 (M = 3.75, 3.67 respectively) in TEACH. For ICALT, the means for LESSON 3 were lower in the Safe and Stimulating Learning Climate (M = 3.0), Intensive and Activating Teaching (M = 2.29), Adjusting Instructions and Learner Processing to Inter-Learner Differences (M = 1.0), and Teaching Learning Strategies (M = 1.0) domains than those for LESSON 4 (M = 3.5, 2.71, 1.5, 1.67 respectively). LESSON 3 was rated better than LESSON 4 in two ICALT domains, Efficient Organisation (M = 3.75) and Clear and Structured Instructions (M = 3.57), compared with M = 3.5 and 2.71 respectively for LESSON 4. Additionally, the rankings of the two high-quality lessons were slightly higher in TEACH than in ICALT. LESSON 3 ranks 3rd in TEACH but 5th in ICALT, and LESSON 4 ranks 1st in TEACH and 2nd in ICALT.
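The rank comparisons in this section can be reproduced mechanically once each lesson has an overall score under both instruments. The following sketch, with hypothetical function names and invented scores, ranks lessons within each instrument (1 = highest score) and reports the difference between the two rank positions, where a positive value means the lesson is placed higher by ICALT than by TEACH.

```python
def rank_positions(scores):
    """Map lesson id -> rank, where 1 is the highest overall score.

    Ties are broken by the order in which lessons were entered,
    which is a simplification.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {lesson: pos for pos, lesson in enumerate(ordered, start=1)}


def rank_shift(teach_scores, icalt_scores):
    """TEACH rank minus ICALT rank for every lesson.

    Positive: the lesson ranks higher (closer to 1st) in ICALT.
    Negative: the lesson ranks higher in TEACH.
    """
    teach_rank = rank_positions(teach_scores)
    icalt_rank = rank_positions(icalt_scores)
    return {lesson: teach_rank[lesson] - icalt_rank[lesson]
            for lesson in teach_scores}


# Hypothetical standardised overall scores for three lessons.
teach = {"A": -1.2, "B": 0.1, "C": 1.4}
icalt = {"A": 0.3, "B": -0.5, "C": 1.1}
print(rank_shift(teach, icalt))
```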

5.3 Qualitative Characteristics of Teacher-Student Interactions

Two low-quality lessons (LESSONS 1 & 2) and two high-quality lessons (LESSONS 3 & 4) were selected as mentioned above. The four lessons were transcribed verbatim, with non-verbal communication captured, and coded by two coders. The coders coded these lessons with the TTLA framework outlined in Table 7.1. Teaching behaviours reflected in the dialogue content were coded with the corresponding codes. Multiple coding appears when more than one behaviour is reflected.
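To make the idea of multiple coding concrete, the sketch below tallies how often each code occurs in a coded excerpt and how many lines carry two or more codes. The data structure, the function name and the example excerpt are hypothetical; only the code labels (such as C2a, I3d, I4a and S7b) follow the labels used in this chapter.

```python
from collections import Counter


def tally_codes(coded_lines):
    """Summarise a coded excerpt.

    coded_lines: list of (line_number, [codes]) pairs for teacher talk.
    Returns (code frequencies, number of lines with two or more codes).
    """
    code_counts = Counter(code for _, codes in coded_lines for code in codes)
    multi_coded = sum(1 for _, codes in coded_lines if len(codes) >= 2)
    return code_counts, multi_coded


# Hypothetical excerpt: four lines of teacher talk with assigned codes.
excerpt = [
    (1, ["C2a", "I3d"]),
    (2, ["I3d"]),
    (3, ["I4a", "S7b"]),
    (4, []),
]
counts, multi = tally_codes(excerpt)
print(dict(counts))
print("lines with multiple codes:", multi)
```

Counts of this kind support statements such as the proportion of lines carrying a given code, while the transcript itself remains the evidence for how the interaction unfolded.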

Teacher-student interaction in the two low-quality lessons (LESSONS 1 & 2) was unsatisfactory. Table 7.6 shows the learning activity Reading Sentences of LESSON 1. The teacher performed well in providing students with opportunities to play a role in the classroom (S7b) and promoted students’ voluntary behaviours (S7c). Nevertheless, students were not clear about the behaviour expected in the learning activity since the teacher did not explain it beforehand. When the teacher said, ‘partner A partner B’, all students were confused and silent (Line 2). They had no idea what the teacher expected them to do until she asked who wanted to be Partner A in English and Chinese.

The situation in LESSON 2 (Table 7.7) was also difficult. The teacher in LESSON 2 performed poorly in respecting students and even taunted them (Line 7: Aren’t you full? Can’t the brain think? [means You are a fool in Chinese culture]). On the bright side, the teacher offered students opportunities to play a role in the classroom (9 out of 10 lines of teacher talk were coded with S7b) by asking questions to check students’ level of understanding (I4a). However, he did not tell students in advance what they could refer to and where the references were, so it was hard for them to follow him. Students responded to the teacher’s questions with silence (Line 4, Line 6, Line 11, Line 13), making it difficult for the lesson to move on.

In LESSON 3, one of the high-quality lessons, the teacher led the students to review previously learned words (Table 7.8). First, the teacher explained the expected behaviours of the learning activity, demonstrated in detail how to carry it out, and even conducted a simulation (Line 7, C2a, I3d; Line 9, I3d; Line 11, C2a; Line 13, C2a). In this activity, the teacher attached great importance to students’ mastery of the learning content and their involvement in the classroom (Line 13, I4a, S7b; Line 15, I4a, S7b; Line 18, I4a, S7b). She checked students’ understanding individually. Four out of the teacher’s seven communicative behaviours were coded as C1a (Lines 7, 13, 15 and 17), which indicates that the teacher was very good at respecting students.

In LESSON 4, the teacher adopted picture description as a learning activity (Table 7.9). Code I3c appeared in every line of this learning activity since the teacher utilised picture materials that connected with students’ lives. This raised students’ interest and initiative in the learning activity. The teacher put forward a series of questions around the given pictures to check the students’ understanding of the grammar (Line 128, I4a; Line 130, I4a; Line 132, I4a; Line 134, I4a). Questioning on life-connected materials also promoted students’ participation and allowed them to take on a classroom role (S7b). Overall, 13 out of 14 lines were coded with two or three codes. This incident illustrates that teacher-student interaction was of high quality in this learning activity.

Teaching styles differ among these four lessons and show a large gap between high-quality and low-quality lessons. The difference between a good lesson and a weak one is noticeable. In the high-quality lessons, teachers respected students, articulated clear expectations, and let students play a role in classroom learning. These were the areas in which the low-quality lessons were weak. In LESSON 1 and LESSON 2, teachers’ behaviours did not show good respect, affecting students’ interest in the lesson. Teachers also did not make their expectations of students in classroom activities clear, which made it difficult for students to understand the teacher’s intention. In the end, the students could not give the expected responses. Moreover, having no opportunity to play a role in the classroom made students lack participation and fail to learn confidently.

6 Discussion and Conclusion

6.1 Instrument Characteristics as Biases and Limitations

As shown in Table 7.5, only some general teaching behaviours are assessed (I3a to I6c) in TEACH, which means teachers only need to conduct common teaching behaviours to meet the standards to get higher scores.

‘High-quality’ lessons ranked a little lower in ICALT than in TEACH. This indicates that ICALT has higher overall classroom teaching requirements than TEACH. As for the ‘low-quality’ lessons, which ranked higher in ICALT than in TEACH, the teachers in these two classes did not perform well in general teaching behaviours, but they showed some deeper teaching behaviours. Nevertheless, this does not affect their final characterisation as ‘low-quality.’

Our results indicated that TEACH is a feasible coding scheme for in-depth qualitative analysis of dialogic teaching, as it fitted our research need to associate it with a quantitative lesson observation instrument. There is a trade-off between instrument complexity and ease of use: TEACH was developed to provide quick training for practitioners in developing countries for teacher evaluation and professional teacher development. In contrast, ICALT was initially developed for high-stakes inspections and subsequently for high-quality research in developed and developing countries (Maulana et al., 2021).

6.2 The Practicability of Promoting Teacher Reflections: TEACH vs ICALT

The quantitative results indicated that ICALT predicted student engagement better than TEACH. However, because the Learner engagement subscale is part of ICALT, it is not surprising that the results favoured ICALT over TEACH. Even so, both ICALT and TEACH results showed that clear and structured instructions improve student engagement. Adequate instructions could contribute to a better and deeper understanding of classroom activities and content, resulting in higher student involvement in classroom learning (Boston & Candela, 2018).

Moreover, the average score of the Adjusting Instructions and Learner Processing to Inter-Learner Differences domain was lower than those of the other ICALT domains, indicating that teachers in the sample rarely provided student-centred instructions to address learner diversity. The lower rating might be caused by the limited background information about the students available to the raters. The raters did not know the students’ learning differences ahead of the class; hence, it might have been hard for them to identify students with diverse learning needs and to recognise the teaching behaviours expected to address learner diversity during the classroom observation (Edwards et al., 2006). Thus, a rater may be biased against the teacher if s/he lacks an understanding of the students as learners. Among TEACH factors, teachers with better socioemotional skills, including autonomy, perseverance, and social and collaborative skills, could have engaged students better in classroom learning.

In addition to the low average score, the Adjusting Instructions and Learner Processing to Inter-Learner Differences subscale also had poor reliability. The same lack of contextual information available to observers might have affected the reliability. For example, it is not easy to identify whether a student is weaker without asking the teacher. Another explanation is that, as the teaching quality of each teacher was assessed based on one single lesson, personalised instruction and adjustments to fit inter-learner differences might not be readily recognisable in a single lesson but would be more evident across lessons observed over a whole academic term. A longitudinal study in which teaching quality is assessed several times throughout an academic term or year could be conducted in the future to better capture student-centred instruction.
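To make the notion of subscale reliability concrete, the following is a minimal sketch in Python of coefficient alpha (Cronbach, 1951), $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $k$ is the number of items, $s_i^2$ is the variance of item $i$ across lessons, and $s_T^2$ is the variance of the summed scores. It assumes that reliability is summarised with this coefficient; the function name `cronbach_alpha` and the ratings are hypothetical and are not the data of this study.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Coefficient alpha for a subscale.

    scores: list of rows, one row per observed lesson,
            each row holding the ratings on the k items of the subscale.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two lessons")
    # Sample variance of each item across lessons.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of the summed subscale score across lessons.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("summed scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings: four lessons rated on a four-item subscale.
ratings = [
    [2, 3, 2, 1],
    [3, 3, 2, 2],
    [1, 2, 1, 1],
    [4, 3, 4, 2],
]
print(round(cronbach_alpha(ratings), 3))
```

With only a handful of lessons and items that observers can rarely score with confidence, the item variances can be large relative to the variance of the summed score, which pulls the coefficient down.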

7 Conclusion

Two significant limitations of the present study were the small sample size and the selection of the sample. As the sampling only covered teachers whose teaching experience was more than two years and less than eight years, teachers who had taught for more than eight years or for less than two years were underrepresented. For example, a study of 47 rural primary schools in Guizhou Province showed that the length of teaching experience varied across teachers, and teachers with 4–10 years of teaching experience accounted for only 27% of the population (Peng, 2015). Future studies can focus on assessing and comparing teaching quality among teachers with all lengths of teaching experience.

Teacher-student interaction is an essential factor affecting classroom teaching quality (Berlin & Cohen, 2018). The differences between high-quality and low-quality lessons were most evident in three aspects: respecting students, setting behavioural expectations for students, and letting students play a role in the classroom. If a class lacks these characteristics, it is challenging to associate students’ interest with specific teaching behaviours, which may subsequently affect student learning achievement and make a fair judgement of teaching quality difficult.

Many classroom observation tools are available for teacher evaluation and research. We compared two instruments designed for different purposes and probably for different audiences and contexts. When choosing such tools, we should first compare the lenses of the different instruments (Walkington & Marder, 2018; Walkowiak et al., 2019), as we have done, to balance efficiency and exhaustivity for the research needs. When analysing the comparative results, we should also thoroughly consider the limitations of our observation tools. We also conducted in-depth qualitative analyses because high-inference classroom observation instruments like ICALT and TEACH cannot provide detailed accounts of classroom processes. Our coding strategies also offer the potential for quantifying qualitative data. We suggest that systematic in-depth qualitative analysis, with detailed contextual information provided by the teacher, and a longitudinal approach are indispensable complements to high-inference instruments for more objective research and fairer teacher evaluation.

References

Alexander, R. (2006). Towards dialogic teaching (3rd ed.). Dialogos.

Alexander, R. (2008). Towards dialogic teaching: Rethinking classroom talk (4th ed.). Dialogos.

Anwar, S., & Menekse, M. (2021). A systematic review of observation protocols used in post-secondary STEM classrooms. Review of Education, 9(1), 81–120.

Berlin, R., & Cohen, J. (2018). Understanding instructional quality through a relational lens. ZDM, 50(3), 367–379.

Boston, M. D., & Candela, A. G. (2018). The instructional quality assessment as a tool for reflecting on instructional practice. ZDM, 50(3), 427–444.

Boston, M., Bostic, J., Lesseig, K., & Sherman, M. (2015). A comparison of mathematics classroom observation protocols. Mathematics Teacher Educator, 3(2), 154–175.

Caughlan, S., & Jiang, H. (2014). Observation and teacher quality: Critical analysis of observational instruments in preservice teacher performance assessment. Journal of Teacher Education, 65(5), 375–388.

Chiangkul, W. (2016). The state of Thailand education 2014/2015 “How to reform Thailand education towards 21st century?”. Office of the Education Council.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297–334.

Danielson, C. (1996). Enhancing professional practice: A framework for teaching. Association for Supervision and Curriculum Development.

Edwards, C. J., Carr, S., & Siegel, W. (2006). Influences of experiences and training on effective teaching practices to meet the needs of diverse learners in schools. Education, 126(3), 580–591.

Erickson, F. (2011). Uses of video in social research: A brief history. International Journal of Social Research Methodology, 14(3), 179–189.

Gersten, R., Baker, S. K., Haager, D., & Graves, A. W. (2005). Exploring the role of teacher quality in predicting reading outcomes for first-grade English learners: An observational study. Remedial and Special Education, 26(4), 197–206.

Hannum, E. (2005). Market transition, educational disparities, and family strategies in rural China: New evidence on gender stratification and development. Demography, 42(2), 275–299.

Hannum, E., Behrman, J., Wang, M., & Liu, J. (2008). Education in the reform era. In L. Brandt & T. G. Rawski (Eds.), China’s great economic transformation (pp. 215–249). Cambridge University Press.

Hannum, E., Cherng, H. Y. S., & Wang, M. (2015). Ethnic disparities in educational attainment in China: Considering the implications of interethnic families. Eurasian Geography and Economics, 56(1), 8–23.

Heckman, J. J., & Yi, J. (2012). Human capital, economic growth, and inequality in China (No. w18100). National Bureau of Economic Research.

Hennessy, S., Rojas-Drummond, S., Higham, R., Márquez, A. M., Maine, F., Ríos, R. M., García-Carrión, R., Torreblanca, O., & Barrera, M. J. (2016). Developing a coding scheme for analysing classroom dialogue across educational contexts. Learning, Culture and Social Interaction, 9, 16–44.

Hennessy, S., Howe, C., Mercer, N., & Vrikki, M. (2020). Coding classroom dialogue: Methodological considerations for researchers. Learning, Culture and Social Interaction, 25, 100404.

Henry, M. A., Murray, K. S., Hogrebe, M., & Daab, M. (2009). Quantitative analysis of indicators on the RTOP and ITC observation instruments. MSPnet. http://mspnet-static.s3.amazonaws.com/MA_Henry_Quantitative_Analysis_RTOP_ITC_112509_FINAL.pdf

Howe, C., & Abedin, M. (2013). Classroom dialogue: A systematic review across four decades of research. Cambridge Journal of Education, 43(3), 325–356.

Howe, C., & Mercer, N. (2017). Commentary on the papers. Language and Education, 31(1), 83–92.

Howe, C., Hennessy, S., Mercer, N., Vrikki, M., & Wheatley, L. (2019). Teacher-student dialogue during classroom teaching: Does it really impact on student outcomes? Journal of the Learning Sciences, 28(4–5), 462–512. https://doi.org/10.1080/10508406.2019.1573730

Huijgen, T., van de Grift, W., Van Boxtel, C., & Holthuis, P. (2017). Teaching historical contextualisation: The construction of a reliable observation instrument. European Journal of Psychology of Education, 32(2), 159–181.

Jacobs, J. K., Kawanaka, T., & Stigler, J. W. (1999). Integrating qualitative and quantitative approaches to the analysis of video data on classroom teaching. International Journal of Educational Research, 31, 717–724.

Klette, K. (2009). Challenges in strategies for complexity reduction in video studies. Experiences from the PISA+ study: A video study of teaching and learning in Norway. In T. Janík & T. Seidel (Eds.), The power of video studies in investigating teaching and learning in the classroom (pp. 61–82). Waxmann.

Ko, J. Y. O. (2010). Consistency and variation in classroom practice: A mixed-method investigation based on case studies of four EFL teachers of a disadvantaged secondary school in Hong Kong (Publication No. 11363). Doctoral dissertation, University of Nottingham. Nottingham eThesis.

Ko, J., Ho, M., & Chen, W. (2015, July–Oct). Teacher report: Teacher effectiveness and goal orientation (Report Nos. 1–43). Hong Kong Institute of Education.

Kyriakides, L., Creemers, B. P., & Antoniou, P. (2009). Teacher behaviour and student outcomes: Suggestions for research on teacher training and professional development. Teaching and Teacher Education, 25(1), 12–23.

Lesh, R. A., & Lehrer, R. (2000). Iterative refinement cycles of videotape analyses of conceptual change. In A. E. Kelly & R. A. Lesh (Eds.), Handbook of research design in mathematics and science education (pp. 665–708). LEA.

Li, W., Park, A., Wang, S., & **, L. (2007). School equity in rural China. In E. Hannum & A. Park (Eds.), Education and reform in China (pp. 27–43). Routledge.

Marshall, J. H., & Sorto, M. A. (2012). The effects of teacher mathematics knowledge and pedagogy on student achievement in rural Guatemala. International Review of Education, 58(2), 173–197.

Marshall, J. C., Smart, J., Lotter, C., & Sirbu, C. (2011). Comparative analysis of two inquiry observational protocols: Striving to better understand the quality of teacher-facilitated inquiry-based instruction. School Science and Mathematics, 111(6), 306–315.

Maulana, R., André, S., Helms-Lorenz, M., Ko, J., Chun, S., Shahzad, A., et al. (2021). Observed teaching behaviour in secondary education across six countries: Measurement invariance and indication of cross-national variations. School Effectiveness and School Improvement, 32(1), 64–95.

McDonald, R. P. (2013). Test theory: A unified treatment. Psychology Press.

Mercer, N. (2010). The analysis of classroom talk: Methods and methodologies. British Journal of Educational Psychology, 80(1), 1–14.

Molina, E., Fatima, S. F., Ho, A. D. Y., Melo Hurtado, C. E., Wilichowski, T., & Pushparatnam, A. (2018). Measuring teaching practices at scale: Results from the development and validation of the Teach classroom observation tool (World Bank Policy Research Working Paper, No. 8653). The World Bank.

Muijs, D., & Reynolds, D. (2005). Effective teaching: Introduction & conclusion. Sage.

Muijs, D., Kyriakides, L., Van der Werf, G., Creemers, B., Timperley, H., & Earl, L. (2014). State of the art–teacher effectiveness and professional learning. School Effectiveness and School Improvement, 25(2), 231–256.

OECD. (2019, April 26). PISA 2018 assessment and analytical framework. https://www.oecd-ilibrary.org/education/pisa-2018-assessment-and-analytical-framework_b25efab8-en

Peng, Y. (2015). The recruitment and retention of teachers in rural areas of Guizhou, China. Doctoral dissertation, University of York, York, UK.

Pianta, R. C., & Hamre, B. K. (2009). Conceptualisation, measurement, and improvement of classroom processes: Standardised observation can leverage capacity. Educational Researcher, 38(2), 109–119.

Pianta, R. C., La Paro, K. M., & Hamre, B. K. (2008). Classroom assessment scoring system™: Manual K-3. Paul H Brookes Publishing.

Qian, X., & Smyth, R. (2008). Measuring regional inequality of education in China: Widening coast–inland gap or widening rural-urban gap? Journal of International Development: The Journal of the Development Studies Association, 20(2), 132–144.

Sammons, P. (2007). School effectiveness and equity: Making connections. CfBT.

Sapire, I., & Sorto, M. A. (2012). Analysing teaching quality in Botswana and South Africa. Prospects, 42(4), 433–451.

Schoenfeld, A. H. (2013). Classroom observations in theory and practice. ZDM, 45(4), 607–621.

Shi, Y., Shen, X., Wang, T., Cheng, L., & Wang, A. (2021). Dialogic teaching of controversial public issues in a Chinese middle school. Learning, Culture and Social Interaction, 30, 100533.

Song, Y., Chen, X., Hao, T., Liu, Z., & Lan, Z. (2019). Exploring two decades of research on classroom dialogue by using bibliometric analysis. Computers & Education, 137, 12–31.

Stearns, L. M., Morgan, J., Capraro, M. M., & Capraro, R. M. (2012). A teacher observation instrument for PBL classroom instruction. Journal of STEM Education: Innovations & Research, 13(3), 7.

Stigler, J. W., Gonzales, P., Kawanaka, T., Knoll, S., & Serrano, A. (1999). The TIMSS videotape classroom study: Methods and findings from an exploratory research project on eighth-grade mathematics instruction in Germany, Japan, and the United States. National Center for Education Statistics.

Teddlie, C., Creemers, B., Kyriakides, L., Muijs, D., & Yu, F. (2006). The international system for teacher observation and feedback: Evolution of an international study of teacher effectiveness constructs. Educational Research and Evaluation, 12(6), 561–582.

Tee, M. Y., Samuel, M., Nor, N. M., Sathasivam, R. V., & Zulnaidi, H. (2018). Classroom practice and the quality of teaching: Where a nation is going? Journal of International and Comparative Education, 7(1), 17–33.

Tsang, M. C., & Ding, Y. (2005). Resource utilisation and disparities in compulsory education in China. China Review, 5, 1–31.

Van de Grift, W. (2007). Quality of teaching in four European countries: A review of the literature and application of an assessment instrument. Educational Research, 49(2), 127–152.

Vrikki, M., Wheatley, L., Howe, C., Hennessy, S., & Mercer, N. (2019). Dialogic practices in primary school classrooms. Language and Education, 33(1), 85–100. https://doi.org/10.1080/09500782.2018.1509988

Walkington, C., & Marder, M. (2018). Using the UTeach Observation Protocol (UTOP) to understand the quality of mathematics instruction. ZDM, 50(3), 507–519.

Walkowiak, T. A., Adams, E. L., & Berry, R. Q. (2019). Validity arguments for instruments that measure mathematics teaching practices: Comparing the M-Scan and IPL-M. In J. D. Bostic, E. E. Krupa, & J. C. Shih (Eds.), Assessment in mathematics education contexts (pp. 90–119). Routledge.

Wang, J., & Li, Y. (2009). Research on the teaching quality of compulsory education in China’s west rural schools. Frontiers of Education in China, 4(1), 66–93.

World Bank. (2019, July 9). TEACH manual. World Bank. https://www.worldbank.org/en/topic/education/brief/teach-helping-countries-track-and-improve-teaching-quality?cid=EXT_WBEmailShare_EXT

Wragg, T. (2013). An introduction to classroom observation. Routledge.

Yang, J., Huang, X., & Li, X. (2009). Education inequality and income inequality: An empirical study on China. Frontiers of Education in China, 4(3), 413–434.

Yang, J., Huang, X., & Liu, X. (2014). An analysis of education inequality in China. International Journal of Educational Development, 37, 2–10.

Zeng, J., Pang, X., Zhang, L., Medina, A., & Rozelle, S. (2014). Gender inequality in education in China: A meta-regression analysis. Contemporary Economic Policy, 32(2), 474–491.

Zhang, H. (2017). Opportunity or new poverty trap: Rural-urban education disparity and internal migration in China. China Economic Review, 44, 112–124.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this chapter

Cite this chapter

Lei, J.C., Chen, Z., Ko, J. (2023). Differences in Perceived Instructional Quality of the Same Classrooms with Two Different Classroom Observation Instruments in China: Lessons Learned from Qualitative Analysis of Four Lessons Using TEACH and ICALT. In: Maulana, R., Helms-Lorenz, M., Klassen, R.M. (eds) Effective Teaching Around the World. Springer, Cham. https://doi.org/10.1007/978-3-031-31678-4_7

DOI: https://doi.org/10.1007/978-3-031-31678-4_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-31677-7

Online ISBN: 978-3-031-31678-4

eBook Packages: Education, Education (R0)