Abstract

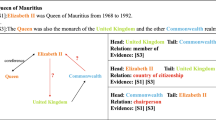

Document-level Relation Extraction (DocRE) is the task of extracting relational facts expressed across an entire document. Despite its popularity, the task still poses two major difficulties: (i) how to learn more informative embeddings for entity pairs, and (ii) how to capture, from the document, the crucial context describing the relation between an entity pair. To tackle the first challenge, we propose to encode the document with a task-specific encoder pre-trained on three tasks. One novel task is designed to learn relation semantics from diverse expressions by utilizing relation-aware pre-training data; the other two, Masked Language Modeling (MLM) and Mention Reference Prediction (MRP), are adopted to enhance the encoder’s capacity for text understanding and coreference capture. To address the second challenge, we craft a hierarchical attention mechanism that refines the context for each entity pair, taking into account both the embeddings from the encoder and the sequential distance between mentions in the given document. Extensive experiments on the benchmark dataset DocRED show that our method outperforms the baselines.
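The abstract does not give the formulas of the hierarchical attention, so the sketch below is one hypothetical reading of the idea, not the authors' implementation. An upper level attends over every (head mention, tail mention) pair, scoring each pair by a scaled dot product minus a penalty proportional to the token distance between the two mentions; a lower level attends over the document tokens using the summed mention embeddings as the query. The function names (`mention_pair_weights`, `token_context`, `entity_pair_context`), the linear `distance_penalty` term and the toy vectors standing in for encoder outputs are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Mention = Tuple[int, Vector]  # (token position in the document, mention embedding)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> List[float]:
    # Subtract the maximum score for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: Sequence[float], vectors: Sequence[Sequence[float]]) -> Vector:
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]


def mention_pair_weights(head: Sequence[Mention], tail: Sequence[Mention],
                         distance_penalty: float = 0.1) -> List[float]:
    """Upper level (hypothetical): attention over every (head, tail) mention pair.

    Score = scaled dot product of the two mention embeddings minus a linear
    penalty on their token distance, so nearby mention pairs are favoured.
    """
    dim = len(head[0][1])
    scores = [
        dot(m_h, m_t) / math.sqrt(dim) - distance_penalty * abs(p_h - p_t)
        for p_h, m_h in head
        for p_t, m_t in tail
    ]
    return softmax(scores)


def token_context(tokens: Sequence[Vector], query: Sequence[float]) -> Vector:
    """Lower level (hypothetical): scaled dot-product attention over all tokens."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, h) * scale for h in tokens])
    return weighted_sum(weights, tokens)


def entity_pair_context(tokens: Sequence[Vector], head: Sequence[Mention],
                        tail: Sequence[Mention], distance_penalty: float = 0.1) -> Vector:
    """Combine both levels into a single context vector for the entity pair."""
    pair_weights = mention_pair_weights(head, tail, distance_penalty)
    # Same (head outer, tail inner) order as in mention_pair_weights.
    pair_contexts = [
        token_context(tokens, [a + b for a, b in zip(m_h, m_t)])
        for _, m_h in head
        for _, m_t in tail
    ]
    return weighted_sum(pair_weights, pair_contexts)


# Toy example: 4 tokens with 2-dimensional embeddings (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 1.0]]
head = [(0, [1.0, 0.0])]
tail = [(1, [0.0, 1.0]), (3, [0.0, 1.0])]  # same embedding, different positions

weights = mention_pair_weights(head, tail, distance_penalty=0.5)
print(weights)  # the nearer tail mention (position 1) receives the larger weight
print(entity_pair_context(tokens, head, tail))
```

Because the two tail mentions share an embedding, only the distance term separates them, which makes the effect of the sequential-distance signal easy to see. A learned bias per distance bucket or a distance embedding would be equally plausible ways to inject that signal; the linear penalty is used here only because it is the simplest to read.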

Acknowledgments

We are grateful to Heng Ye, Jiaan Wang and all reviewers for their constructive comments. This work was supported by the National Key R&D Program of China (No. 2018AAA0101900), the Priority Academic Program Development of Jiangsu Higher Education Institutions, the National Natural Science Foundation of China (Grant Nos. 62072323, 61632016 and 62102276), and the Natural Science Foundation of Jiangsu Province (No. BK20191420).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Zou, M. et al. (2021). Document-Level Relation Extraction with Entity Enhancement and Context Refinement. In: Zhang, W., Zou, L., Maamar, Z., Chen, L. (eds) Web Information Systems Engineering – WISE 2021. WISE 2021. Lecture Notes in Computer Science, vol 13081. Springer, Cham. https://doi.org/10.1007/978-3-030-91560-5_25

DOI: https://doi.org/10.1007/978-3-030-91560-5_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-91559-9

Online ISBN: 978-3-030-91560-5

eBook Packages: Computer Science, Computer Science (R0)