Abstract

Several behavioral studies show that semantic content influences reach-to-grasp movement responses. However, not much is known about the influence of motor activation on semantic processing. The present study aimed at filling this gap by examining the influence of pre-activated motor information on a subsequent lexical decision task. Participants were instructed to observe a prime object (e.g., the image of a frying pan) and then judge whether the following target was a known word in the lexicon or not. They were required to make a keypress response to target words describing properties either relevant (e.g., handle) or irrelevant (e.g., ceramic) for action or unrelated to the prime object (e.g., eyelash). Response key could be located on the same side as the depicted action-relevant property of the prime object (i.e., spatially compatible key) or on the opposite side (i.e., spatially incompatible key). Results showed a facilitation in terms of lower percentage errors when the target word was action-relevant (e.g., handle) and there was spatial compatibility between the orientation of the action-relevant component of the prime object and the response. This preliminary finding suggests that the activation of motor information may affect semantic processing. We discuss implications of these results for current theories of action knowledge representation.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

Knowledge of object use is one of the most important available types of knowledge for a living being. For instance, humans can make use of a hammer to nail wooden planks and build a house, chimpanzees can use a twig to “fish” for insects, and birds of prey called bearded vultures, or lammergeiers, can make use of stones to break bones and feed themselves with marrow.

A basic issue in human cognition is how information concerning actions with objects is represented. Are motor representations critical components of object concepts? This question taps into the ongoing debate on the format (i.e., neural substrate, patterns of activation) of conceptual representations (for an overview see Scerrati 2017; Scerrati et al. 2017). Such debate critically involves two out of the three main research questions outlined in the present volume, that is, how concepts become acquired and how they are being used in cognitive tasks. The current research is a psychological investigation, which attempts to address these questions and, specifically, how concept learning and representation interact with the development of motor abilities.

An increasing widespread view assumes that knowledge is grounded in sensory-motor experiences (Barsalou 1999, 2008, 2016; Gallese and Lakoff 2005; Glenberg and Kaschak 2002, 2003; Glenberg and Robertson 2000; Pulvermüller 1999, 2001; Zwaan 2004). The semantic analysis reported in Vernillo (Chap. 8) demonstrated that the literal meaning of action verbs poses constrains on their usage in metaphorical sentences. Neuropsychological research provides further support for the grounding assumption by showing the existence of selective impairments at the expenses of specific categories of information. For example, following a stroke, a viral infection or a neurodegenerative disease, such as the Alzheimer disease (AD) or Semantic Dementia (SD), people may selectively lose knowledge of living animate (i.e., animals) or inanimate (i.e., fruit/vegetables) entities, conspecifics (i.e., other people) or non-living things (i.e., manipulable artefacts). According to the sensory/functional theory (Warrington and McCarthy 1983, 1987; Warrington and Shallice 1984; see also Damasio 1989; Farah and McClelland 1991; Humphreys and Forde 2001; McRae and Cree’s 2002), category-specific deficits can be explained by assuming that knowledge of a specific category is located near the sensory and motor areas of the brain dedicated to perception of its instances’ perceptual qualities and kind of movements. Therefore, when a sensory-motor area is damaged, the processing of instances of the specific category that rely on that area is impaired. Importantly, neuropsychological research also suggests that sensory-motor representations are involved not only in comprehending and producing voluntary movements but also in thinking about them (Buxbaum et al. 2000).

In addition, neuroimaging studies have largely shown different neural activations for different categories. For instance, Chao et al. (1999, 2002) found differential activation for animals and tools. Furthermore, Chao and Martin (2000) described regions in the dorsal visual pathway, such as the posterior parietal cortex, that were differentially recruited when participants viewed manipulable objects like tools and utensils. Also, semantic knowledge of actions has been shown to involve different loci of representation in the brain than semantic knowledge of entities, specifically the frontal lobe motor-related areas (see, for example, Hickok 2014; Kemmerer 2015). Interestingly, a growing body of neuroimaging research also shows that knowledge of object use is automatically activated upon naming (Chao and Martin 2000; Chouinard and Goodale 2010), categorizing (Gerlach et al. 2002), and even passively viewing manipulable objects (Creem-Regehr et al. 2007; Grèzes et al. 2003; Vingerhoets 2008; Wadsworth and Kana 2011).

Similarly, several behavioral studies showed that semantic content influences reach-to-grasp movement responses. For instance, Gentilucci and Gangitano (1998) found that automatic word reading influenced gras** movements: Their subjects automatically associated the meaning of the word (“corto: short”, “lungo: long”) with the distance to cover in order to perform a gras** action and activated a motor program for a nearer/farther object position. Glenberg and Kaschak (2002) showed that judging sensibility of sentences was easier when the movement implied by the sentence was in the same direction as the movement required by the response. In a similar vein, Zwaan et al. (2002) showed that object verification and naming was easier when the object’s shape on display matched the shape implied by a previously presented sentence. Furthermore, Glover et al. (2004) demonstrated that reading words describing objects activated motor tendencies, which influenced the gras** of target blocks. Lindemann et al. (2006) further showed that action semantics activation hinges on the specific action intention of an actor. Importantly, Myung et al. (2006) showed similar effects of semantics with a lexical decision task that required keypress responses: Performance on the target word was better when semantically dissimilar prime-target pairs shared manipulation information (e.g., typewriter and piano).

Although much is known about how semantic content mediates action in response to the environment, the influence of motor activation on semantic processing did not receive as much attention. The present study aimed at filling this gap by focusing on potential effects of action on language. If, as assumed by the sensory/functional theory (Warrington and McCarthy 1983, 1987; Warrington and Shallice 1984; see also Damasio 1989; Farah and McClelland 1991; Humphreys and Forde 2001; McRae and Cree’s 2002), conceptual content is stored closed to the sensory and motor systems, and, as claimed by the grounded view, semantics shares a common neural substrate with the sensory and the motor systems (Barsalou 1999, 2008, 2016), then effects should be observed bilaterally, that is, not only from language to action but also vice versa (see Meteyard and Vigliocco 2008).

The current study is aimed at testing whether: (a) motor information concerning objects can be pre-activated through the presentation of images of graspable objects as primes (e.g., “frying pan”); and (b) pre-activated motor information concerning graspable objects can affect performance on a lexical decision task involving target words describing objects’ properties relevant for action (e.g., handle).

To this end, participants were instructed to observe a prime object that could be presented in two different orientations, that is, with the action-relevant component (e.g., the frying pan’s handle) oriented either toward the left or toward the right. They were then asked to perform a lexical decision task (LDT)—a task commonly used in studies on lexical-semantic processing (Meyer and Schvaneveldt 1971; see also Iani et al. 2009; Scerrati et al. 2017)—on a subsequent target word. Specifically, they were required to judge whether the following target was a known word in the Italian lexicon or not by pressing a key either on the same side as the depicted action-relevant property of the prime object (i.e., spatially compatible key) or on the opposite side (i.e., spatially incompatible key). Target words matching in frequency and length were of three different types: words describing properties relevant for action with the object (action-relevant words, e.g., handle); words describing properties irrelevant for action with the object (action-irrelevant words, e.g., ceramic); words describing things unrelated to the object (unrelated words, e.g., eyelash).

If the image of the graspable object (i.e., the prime image) directly cues a specific motor representation, which becomes part of the concept held in working memory (e.g., Bub and Masson 2010), then we should observe a facilitation on the subsequent lexical decision task provided that the target word is action-relevant (e.g., handle) and the orientation of the action-relevant component of the prime object is spatially compatible with the response key. Indeed, several behavioral studies showed a facilitation when the responding hand of the participant and the orientation of the object’s graspable component, that is, its affordance (e.g., the handle; for the original idea of affordance see Gibson 1979) were compatible (i.e., on the same side) rather than incompatible (i.e., on opposite sides). This finding supports the assumption that seeing a picture of a graspable object activates the motor actions associated with its use (Iani et al. 2019; Pellicano et al. 2010; Saccone et al. 2016; Scerrati et al. 2019, 2020; Tipper et al. 2006; Tucker and Ellis 1998; Vainio et al. 2007). Therefore, we expect that the presentation of the graspable prime object will pre-activate manipulation information about objects. This in turn should facilitate a lexical decision task on target words describing those objects’ properties relevant for action (e.g., handle). In contrast, no such facilitation is expected for target words that describe properties irrelevant for action with (action-irrelevant words, e.g., ceramic) or unrelated to (unrelated words, e.g., eyelash) the prime object. In other words, we expect that motor information evoked by object observation will have different effects as a function of the following type of word. Specifically, we predict that motor information will determine a motor-to-semantic priming effect for action-relevant words as the processing of these words can benefit from the activation of motor knowledge. Conversely, it should determine neither benefits nor disadvantages for action-irrelevant and unrelated words as these words refer to motor-irrelevant features of the prime objects. Hence, we expect to observe an interaction between spatial compatibility and the type of word.

2 Method

2.1 Materials

The prime stimuli were digital photographs of four domestic objects (can, door, frying pan, radiator) selected from public-domain images available on the Internet. Prime objects could be presented in two orientations, that is, with the action-relevant component (e.g., the frying pan’s handle) oriented either toward the left or toward the right. These objects subtended a maximum of 13.7° of visual angle horizontally and 12.3° of visual angle vertically when viewed from a distance of 60 cm. Prime objects were centered on screen according to the length and width of the entire object.

The target stimuli were twelve words belonging to three different categories: Four words referred to a characteristic of the prime object that was relevant for action (e.g., handle); four words referred to a characteristic of the prime object that was irrelevant for action (e.g., ceramic); four words referred to things unrelated to the prime object (e.g., eyelash). For the complete list of stimuli, see Appendix. Target words ranged from 2.7 to 5.4 cm (from 5 to 10 characters) which resulted in a visual angle range between 2.5° and 5.1° when viewed from a distance of 60 cm.

Words from the three categories (action-relevant, action-irrelevant, and unrelated) were matched in terms of frequency and length. For lexical frequency, the Italian database Colfis was used (Bertinetto et al. 1995). Values for frequency and length of target words are reported in Table 1.

To control for association strength between the prime object and the target word, 40 Italian participants (23 males; mean age: 28 years old; SD: 9 years) who did not participate in the main Experiment were asked to rate the twelve target words in terms of their degree of association with the prime objects on a 1–7 points Likert scale (1 = “not associated at all”; 7 = “very associated”). The mean ratings were 5.2 for action-relevant words related to the prime object, 5.4 for action-irrelevant words related to the prime object, and 1.5 for words unrelated to the prime object.

Twelve legal non-word fillers (e.g., celimora) were created using a non-word generator for the Italian language available online.Footnote 1 The non-words were preceded by the same prime objects.

To control for potential phonological associations between the non-word fillers and the target words, 28 new Italian participants (11 males; mean age: 27 years old; SD: 7 years) were engaged in a free association production task. The task required participants to write down the first two Italian words that each of the twelve non-words brought to mind. Only one participant reported the Italian word ciglia (included in the unrelated category) in response to the non-word geglie. However, given it was an isolated case, we did not consider it necessary to exclude this non-word from our selection of non-word fillers.

3 Participants

Thirty-four participants (13 males; mean age: 22 years old; SD: 3 years) from the University of Modena and Reggio Emilia where the experiment was conducted. All participants were native speakers of Italian, had normal or corrected to normal vision, and were naïve as to the purpose of the experiment. Handedness was measured by the Edinburgh Handedness Inventory (Oldfield 1971), which revealed that 25 participants were right-handed (laterality mean = 0.76; SD = 0.13), seven participants were ambidextrous (laterality mean = 0.25; SD = 0.21) and two participants were left-handed (laterality mean = −0.69; SD = 0.10). The experiment was conducted in accordance with the ethical standards laid down in the Declaration of Helsinki and fulfilled the ethical standard procedure recommended by the Italian Association of Psychology (AIP). All procedures were approved by the Department of Education and Human Sciences of the University of Modena and Reggio Emilia where the experiment was conducted. All participants gave their written informed consent to participate to the study.

4 Apparatus

Stimulus presentation, response times (RTs) and accuracy were controlled and recorded by E-Prime 2 (Psychology Software Tools, Inc., Sharpsburg, PA). Participants completed the experiment on a HP ProDesk 490 G1 MT running Windows 7 with a 19 in monitor and a display with a resolution of 1280 × 1024 pixels.

5 Design and Procedure

Two factors were manipulated: Target word with 3 levels (action-relevant; action-irrelevant; unrelated), and Spatial compatibility—between the orientation of the action-relevant component of the prime object and the response—with two levels (spatially compatible: both handle and response on the right or on the left; spatially incompatible: handle on the right and response on the left and viceversa). Both factors were manipulated within-subject.

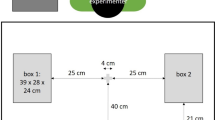

Participants sat at a viewing distance of about 60 cm from the monitor in a dimly-lit room. Each trial started with the presentation of a fixation cross (0.3 cm × 0.3 cm) for 500 ms. Immediately after the fixation, the prime object appeared on screen for 1000 ms. Then, either the target word or the non-word filler was displayed on screen until a response was given or until 1500 ms had elapsed (see Fig. 1 for details). RT latencies were measured from the onset of the target stimulus. Both target and filler stimuli were bold lowercase Courier new 18 and were presented in black in the center of a white background.

Participants were asked to make a lexical decision, that is, determine whether the displayed letter string was an Italian word or not, by pressing one of two lateralized buttons as quickly and as accurately as possible. Response keys were the “-” and the “z” keys on an Italian QWERTY keyboard. Half of the participants responded by pressing the “-” key with their right index finger when the letter string was an Italian word, and the “z” key with their left index finger when it was a non-word. The other half was assigned to the opposite map**.

The order of presentation of each prime-target pair was randomized across participants. The experiment consisted of 24 practice trials (different from those used in the experiment) and two experimental blocks of 48 trials each, for a total of 120 trials per participant. Blocks were separated by a self-paced interval and the experiment lasted approximately 10 min.

6 Results

Responses to non-word fillers were discarded. Omissions (1%) and outlying RT (5%) that were two standard deviations (SD) from the participant’s mean were excluded from the analysis.

Two repeated measures ANOVAs with Target Word (action-relevant, action-irrelevant, unrelated) and Spatial compatibility (compatible, incompatible) as within-subject factors were conducted, one for RT latencies and one for percentage errors (3.5%). When sphericity was violated, the Huynh–Feldt correction was applied, although the original degrees of freedom are reported.

The results of the ANOVA on the RT latencies did not reveal any significant main effect or interaction, all F < 1. In contrast, the results of the ANOVA on the percentage errors showed a significant main effect of Target Word (F(2, 66) = 3.67, MSe = 61.15, p = 0.043, np2 = 0.10), that is, lexical decision responses were more accurate for action-relevant target words (1.65%) than for both action-irrelevant (4.22%) and unrelated target words (4.59%), t(33) = 2.92, p = 0.006, and t(33) = 2.61, p = 0.01, respectively. No other main effect resulted significant, F < 1. Results are shown in Fig. 2.

Importantly, there was a marginally significant interaction between Target Word and Spatial compatibility (F(2, 66) = 3.42, MSe = 35.68, p = 0.057, np2 = 0.09). Paired comparisons revealed that lexical decision responses for action-relevant target words tended to be more accurate in the spatially compatible condition (0.73%) than in the spatially incompatible condition (2.57%), t(33) = 1.71, p = 0.09 two tailed. In contrast, lexical decision responses for action-irrelevant target words tended to be more accurate in the spatially incompatible condition (2.94%) than in the spatially compatible condition (5.51%), t(33) = −1.74, p = 0.09 two tailed. Finally, lexical decision responses for unrelated target words did not differ in the spatially compatible (4.41%) and incompatible (4.77%) conditions. Figure 3 shows the results graphically.

7 Discussion

Although much evidence is available on the influence of semantics on action preparation and execution (Gentilucci and Cangitano 1998; Glenberg and Kaschak 2002; Glover et al. 2004; Lindemann et al. 2006; Myung et al. 2006; Zwaan et al. 2002), the effects of motor control on language processing are poorly investigated.

The current study examined whether semantic processing may be influenced by the activation of the motor system. If conceptual content is stored closed to the sensory and motor systems (Warrington and McCarthy 1983, 1987; Warrington and Shallice 1984; see also Damasio 1989; Farah and McClelland 1991; Humphreys and Forde 2001; McRae and Cree’s 2002), and if it shares a common neural substrate with the sensory and the motor systems (Barsalou 1999, 2008, 2016), then effects of language on action and of action on language should be observed likewise (Meteyard and Vigliocco 2008).

We explored whether presenting images of graspable objects (e.g., “frying pan”) as prime stimuli could pre-activate manipulation information about objects, which in turn could facilitate a lexical decision task on target words referring to objects’ properties relevant for action (e.g., handle). That is, we expected that object observation would activate motor knowledge leading to a motor-to-semantic priming effect only for target words referring to action-relevant components of objects as only the processing of action-relevant words should benefit from the activation of motor knowledge.

In line with our hypothesis, we found that performing a lexical decision on action-relevant target words produced more accurate responses than performing the same task on action-irrelevant words and on words unrelated to the prime objects. This finding suggests that language processing is somewhat facilitated provided that words are not only related to the prime object seen before but also relevant for action with that object. It is plausible to assume that the prime object’s graspability was able to shift participants’ attention to the action-relevant features of the object thus facilitating the subsequent lexical decision on words describing those features.

Furthermore, we found an interaction between the type of word (relevant-for-action; irrelevant-for-action; unrelated) and spatial compatibility (compatible, incompatible). In line with our hypothesis, we observed a tendency toward lower percentage errors (i.e., facilitation) when the target word was action-relevant (e.g., handle) and there was spatial compatibility between the orientation of the action-relevant component of the prime object and the response. Conversely, we observed a tendency toward higher percentage errors (i.e., interference) when the target word was action-irrelevant (e.g., ceramic) and there was spatial compatibility between the orientation of the action-relevant component of the prime object and the response. Therefore, motor information activated by observing objects’ orientation may influence language processing to the extent that words being processed are relevant for action with such objects. This preliminary finding supports the assumption that observing a graspable object activates the motor actions associated with its use (Iani et al. 2019; Pellicano et al. 2010; Saccone et al. 2016; Scerrati et al. 2019, 2020; Tipper et al. 2006; Tucker and Ellis 1998; Vainio et al. 2007).

Taken together these findings suggest that the activation of motor information may affect semantic processing.

However, the present study has a limitation in that our results only emerged for percentage errors (not response latencies). This may be the consequence of the low level of verbal processing involved by the lexical decision task. Indeed, the LDT may recruit the semantic system to a small extent (see Scerrati et al. 2017) thus failing to show a robust influence of motor information on language processing that is able to affect response latencies (for task-dependent influences of motor information on conceptual processing see De Bellis et al. 2016; see García and Ibáñez 2016 for review). That is, if the LDT is performed by relying on a simple word association strategy, i.e., without determining the type of association between the property word and the concept word (for example, whether the property word refers to a part of the concept word as in the concept-property pair frying pan-handle), then the underlying conceptual representations may not be retrieved at all, this resulting in motor information being unable to exert a robust influence on semantic processing (e.g., Solomon and Barsalou 2004). In addition, as highlighted by a recent review by García and Ibáñez (2016), the allowed time-lag (2.5 s) between motor and linguistic information may have played a role in our study leading to a weaker influence of motor knowledge on language processing. Such weakened influence may reflect in the motor-to-semantic priming effect failing to show for response latencies. Even holding these caveats in mind, our study indicates a possible influence of motor control on cognitive functions and strengthens the hypothesis of the proximity of language and sensory-motor systems in the human brain (see also Goldstone and Barsalou 1998).

Future studies may extend the investigation of mutual effects of semantic content and motor control by introducing other tasks that more explicitly require the construction of modality-specific representations (e.g., motor representations). In fact, it is plausible that a conceptual, recognition-oriented task may reveal effects of motor control on semantic processing more easily than a more implicit task such as the lexical decision task. A different task will help identify to which extent the nature of the task determines the motor-to-semantic priming effect and to discard other possible factors.

References

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660.

Barsalou, L. W. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645.

Barsalou, L. W. (2016). On staying grounded and avoiding quixotic dead ends. Psychonomic Bulletin & Review, 23, 1122–1142.

Bertinetto, P. M., Burani, C., Laudanna, A., Marconi, L., Ratti, D., Rolando, C., & Thornton, A. M. (1995). CoLFIS (Corpus e Lessico di Frequenza dell’Italiano Scritto) [Corpus and frequency lexicon of written Italian]. Institute of Cognitive Sciences and Technologies.

Bub, D. N., & Masson, M. E. (2010). On the nature of hand-action representations evoked during written sentence comprehension. Cognition, 116(3), 394–408.

Buxbaum, L. J., Veramontil, T., & Schwartz, M. F. (2000). Function and manipulation tool knowledge in apraxia: knowing ‘what for’ but not ‘how’. Neurocase, 6(2), 83–97.

Chao, L. L., & Martin, A. (2000). Representation of manipulable manmade objects in the dorsal stream. NeuroImage, 12, 478–484.

Chao, L. L., Haxby, J. V., & Martin, A. (1999). Attribute-based neural substrates in posterior temporal cortex for perceiving and knowing about objects. Nature Neuroscience, 2, 913–919.

Chao, L. L., Weisberg, J., & Martin, A. (2002). Experiencedependent modulation of category related cortical activity. Cerebral Cortex, 12, 545–551.

Chouinard, P. A., & Goodale, M. A. (2010). Category-specific neural processing for naming pictures of animals and naming pictures of tools: An ALE meta-analysis. Neuropsychologia, 48(2), 409–418.

Creem-Regehr, S. H., Dilda, V., Vicchrilli, A. E., Federer, F., & Lee, J. N. (2007). The influence of complex action knowledge on representations of novel graspable objects: Evidence from functional magnetic resonance imaging. Journal of the International Neuropsychological Society, 13(6), 1009–1020.

Damasio, A. R. (1989). Time-locked multiregional retroactivation: A systems-level proposal for the neural substrates of recall and recognition. Cognition, 33, 25–62.

De Bellis, F., Ferrara, A., Errico, D., Panico, F., Sagliano, L., Conson, M., & Trojano, L. (2016). Observing functional actions affects semantic processing of tools: Evidence of a motor-to-semantic priming. Experimental Brain Research, 234(1), 1–11.

Farah, M. J., & McClelland, J. L. (1991). A computational model of semantic memory impairment: Modality specificity and emergent category specificity. Journal of Experimental Psychology: General, 120(4), 339–357.

Gallese, V., & Lakoff, G. (2005). The brain’s concepts: The role of the sensory–motor system in conceptual knowledge. Cognitive Neuropsychology, 22(3/4), 455–479.

García, A. M., & Ibáñez, A. (2016). A touch with words: Dynamic synergies between manual actions and language. Neuroscience & Biobehavioral Reviews, 68, 59–95.

Gentilucci, M., & Gangitano, M. (1998). Influence of automatic word reading on motor control. European Journal of Neuroscience, 10(2), 752–756.

Gerlach, C., Law, I., & Paulson, O. B. (2002). When action turns into words. Activation of motor-based knowledge during categorization of manipulable objects. Journal of Cognitive Neuroscience, 14(8), 1230–1239.

Gibson, J. J. (1979). The ecological approach to visual perception. Boston, MA: Houghton Mifflin.

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558–565.

Glenberg, A. M., & Kaschak, M. P. (2003). The body’s contribution to language. In B. H. Ross (Ed.), The psychology of learning and motivation (Vol. 43, pp. 93–126). San Diego, CA: Academic Press.

Glenberg, A. M., & Robertson, D. A. (2000). Symbol grounding and meaning: A comparison of high-dimensional and embodied theories of meaning. Journal of Memory and Language, 43, 379–401.

Glover, S., Rosenbaum, D. A., Graham, J., & Dixon, P. (2004). Gras** the meaning of words. Experimental Brain Research, 154(1), 103–108.

Goldstone, R. L., & Barsalou, L. W. (1998). Reuniting perception and conception. Cognition, 65(2–3), 231–262.

Grèzes, J., Tucker, M., Armony, J., Ellis, R., & Passingham, R. E. (2003). Objects automatically potentiate action: An fMRI study of implicit processing. European Journal of Neuroscience, 17(12), 2735–2740.

Hickok, G. (2014). The myth of mirror neurons: The real neuroscience of communication and cognition. New York, NY: Norton.

Humphreys, G. W., & Forde, E. M. E. (2001). Hierarchies, similarity, and interactivity in object recognition: “Category-specific” Neuropsychological deficits. Behavioral and Brain Sciences, 24(3), 453–509.

Iani, C., Ferraro, L., Maiorana, N. V., Gallese, V., & Rubichi, S. (2018). Do already grasped objects activate motor affordances? Psychological Research, 83, 1363–1374, https://doi.org/10.1007/s00426-018-1004-9.

Iani, C., Job, R., Padovani, R., & Nicoletti, R. (2009). Stroop effects on redemption and semantic effects on confession: Simultaneous automatic activation of embedded and carrier words. Cognitive Processing, 10, 327–334.

Kemmerer, D. (2015). Are the motor features of verb meanings represented in the precentral motor cortices? Yes, but within the context of a flexible, multilevel architecture for conceptual knowledge. Psychonomic Bulletin & Review, 22, 1068–1075.

Lindemann, O., Stenneken, P., Van Schie, H. T., & Bekkering, H. (2006). Semantic activation in action planning. Journal of Experimental Psychology: Human Perception and Performance, 32(3), 633.

McRae, K., & Cree, G. S. (2002). Factors underlying category-specific semantic deficits. In E. M. E. Forde, & G. Humphreys (Eds.), Category-specificity in brain and mind (pp. 211–249). East Sussex, UK: Psychology Press.

Meteyard, L., & Vigliocco, G. (2008). The role of sensory and motor information in semantic representation: A review. In P. Calvo, & T. Gomila (Eds.), Handbook of cognitive science: An embodied approach (pp. 291–312). Elsevier.

Meyer, D. E., & Schvaneveldt, R. W. (1971). Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology, 90(2), 227.

Myung, J. Y., Blumstein, S. E., & Sedivy, J. C. (2006). Playing on the typewriter, ty** on the piano: Manipulation knowledge of objects. Cognition, 98(3), 223–243.

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

Pellicano, A., Iani, C., Borghi, A. M., Rubichi, S., & Nicoletti, R. (2010). Simon-like and functional affordance effects with tools: The effects of object perceptual discrimination and object action state. Quarterly Journal of Experimental Psychology, 63(11), 2190–2201.

Pulvermüller, F. (1999). Words in the brain’s language. Behavioral and Brain Sciences, 22, 253–336.

Pulvermüller, F. (2001). Brain reflections of words and their meaning. Trends in Cognitive Sciences, 5(12), 517–524.

Saccone, E. J., Churches, O., & Nicholls, M. E. (2016). Explicit spatial compatibility is not critical to the object handle effect. Journal of Experimental Psychology: Human Perception and Performance, 42(10), 1643–1653.

Scerrati, E. (2017). From amodal to grounded to hybrid accounts of knowledge: New evidence from the investigation of the modality-switch effect. Unpublished doctoral dissertation, University of Bologna, Bologna, Italy.

Scerrati, E., Iani, C., Lugli, L., & Rubichi, S. (2019). C’è un effetto di potenziamento dell’azione con oggetti bimanuali? Giornale Italiano di Psicologia, 4, 987–996, https://doi.org/10.1421/95573.

Scerrati, E., Iani, C., Lugli, L., Nicoletti, R., & Rubichi, S. (2020). Do my hands prime your hands? The hand-to-response correspondence effect? Acta Psychologica, 203, 103012, https://doi.org/10.1016/j.actpsy.2020.103012.

Scerrati, E., Lugli, L., Nicoletti, R., & Borghi, A. M. (2017). The multilevel modality-switch effect: what happens when we see the bees buzzing and hear the diamonds glistening. Psychonomic Bulletin & Review, 24(3), 798–803.

Solomon, K. O., & Barsalou, L. W. (2004). Perceptual simulation in property verification. Memory & Cognition, 32(2), 244–259.

Tipper, S. P., Paul, M. A., & Hayes, A. E. (2006). Vision-for-action: The effects of object property discrimination and action state on affordance compatibility effects. Psychonomic Bulletin & Review, 13(3), 493–498.

Tucker, M., & Ellis, R. (1998). On the relations between seen objects and components of potential actions. Journal of Experimental Psychology: Human Perception and Performance, 24(3), 830–846.

Vainio, L., Ellis, R., & Tucker, M. (2007). The role of visual attention in action priming. The Quarterly Journal of Experimental Psychology, 60(2), 241–261.

Vingerhoets, G. (2008). Knowing about tools: Neural correlates of tool familiarity and experience. Neuroimage, 40(3), 1380–1391.

Wadsworth, H. M., & Kana, R. K. (2011). Brain mechanisms of perceiving tools and imagining tool use acts: A functional MRI study. Neuropsychologia, 49(7), 1863–1869.

Warrington, E. K., & McCarthy, R. A. (1983). Category specific access dysphasia. Brain, 106, 859–878.

Warrington, E. K., & McCarthy, R. A. (1987). Categories of knowledge: Further fractionations and an attempted integration. Brain, 110, 1273–1296.

Warrington, E. K., & Shallice, T. (1984). Category-specific semantic impairments. Brain, 107, 829–854.

Zwaan, R. (2004). The immersed experiencer: Toward an embodied theory of language comprehension. In B. H. Ross (Ed.), The psychology of learning and motivation 44 (pp. 35–62). San Diego, CA: Academic Press.

Zwaan, R. A., Stanfield, R. A., & Yaxley, R. H. (2002). Language comprehenders mentally represent the shapes of objects. Psychological Science, 13(2), 168–171.

Acknowledgments

The authors wish to thank Sara Gambetta for her help with materials selection and data collection.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Appendix

Appendix

Prime objects | Target words | Non-words | ||||

|---|---|---|---|---|---|---|

Action-relevant | Action-irrelevant | Unrelated | ||||

calorifero radiator | manopola knob | ghisa cast iron | panchina bench | agraccia | bucconede | celimora |

lattina can | linguetta tab | alluminio aluminium | astuccio case | conichia | fangialle | geglie |

padella frying pan | manico handle | ceramica ceramic | corredo dowry | ghipi | naseco | mezecolo |

porta door | maniglia door-handle | compensato plywood | ciglia eyelash | ommibicio | rinchite | sobbeme |

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this chapter

Cite this chapter

Scerrati, E., Iani, C., Rubichi, S. (2021). Does the Activation of Motor Information Affect Semantic Processing?. In: Bechberger, L., Kühnberger, KU., Liu, M. (eds) Concepts in Action. Language, Cognition, and Mind, vol 9. Springer, Cham. https://doi.org/10.1007/978-3-030-69823-2_7

Download citation

DOI: https://doi.org/10.1007/978-3-030-69823-2_7

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-69822-5

Online ISBN: 978-3-030-69823-2

eBook Packages: Religion and PhilosophyPhilosophy and Religion (R0)