Abstract

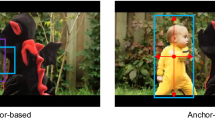

Siamese-network-based trackers have become mainstream in visual object tracking. Recently, several high-performance multi-stage trackers have been proposed, and some of them adopt SiamRPN for first-stage region proposal. We argue that an anchor-based region proposal network is not necessary for the tracking task, as a tracker has a strong prior about the location and size of the target. In this paper, we propose a two-stage visual tracker that uses SiamFC for region proposal. SiamFC, which defines a bounding box by its center, is a typical anchor-free (AF) network, so we dub our tracker AF2S. As the model size of SiamFC is only about 1/10 that of SiamRPN, AF2S is a significantly lighter model than its SiamRPN-based counterparts. In the design of AF2S, we first build a strong AlexNet-based SiamFC baseline, which improves the AUC on OTB-100 from 0.582 to 0.665. Further, we propose a position-sensitive convolutional layer that can be stacked after the SiamFC backbone to increase the robustness of proposals without losing localization precision. Finally, a relation network is used for box refinement. Experimental results show that AF2S achieves the best performance on OTB-100 and VOT-18 among state-of-the-art trackers that use AlexNet as the backbone. On LaSOT-test, AF2S achieves an AUC of 0.480, which is first-tier performance even when trackers with more powerful backbones and much larger model sizes are considered.
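To make the anchor-free idea concrete, the following is a minimal NumPy sketch of a SiamFC-style proposal step: the template features are cross-correlated with the search features, and the peak of the response map gives the box center, while the box size is taken from the tracker's prior (the previous frame). This is an illustration only, not the AF2S implementation; the function names `cross_correlate` and `center_proposal`, the feature shapes, and the stride of 8 are assumptions made for the example.

```python
import numpy as np


def cross_correlate(search_feat, template_feat):
    """Slide the template over the search features, summing over channels.

    search_feat: array of shape (C, H, W); template_feat: array of shape (C, h, w).
    Returns a response map of shape (H - h + 1, W - w + 1).
    """
    _, H, W = search_feat.shape
    _, h, w = template_feat.shape
    response = np.empty((H - h + 1, W - w + 1))
    for i in range(response.shape[0]):
        for j in range(response.shape[1]):
            window = search_feat[:, i:i + h, j:j + w]
            response[i, j] = np.sum(window * template_feat)
    return response


def center_proposal(response, prev_size, stride=8):
    """Turn the response peak into a box (cx, cy, w, h).

    The center is the peak's offset from the middle of the response map,
    scaled by the (assumed) network stride. No anchors are involved: the
    size is simply the prior size from the previous frame.
    """
    i, j = np.unravel_index(np.argmax(response), response.shape)
    cy = (i - (response.shape[0] - 1) / 2) * stride
    cx = (j - (response.shape[1] - 1) / 2) * stride
    w, h = prev_size
    return cx, cy, w, h
```

A later stage can then refine the size and position of this proposal; the sketch covers only the center-based first stage.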

A. He and G. Wang: This work was carried out while Anfeng He and Guangting Wang were interns at MSRA.

Acknowledgement

This work was supported by the National Key Research and Development Program of China under Grant 2017YFB1002203 and the National Natural Science Foundation of China under Grant 61872329.

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

He, A., Wang, G., Luo, C., Tian, X., Zeng, W. (2020). AF2S: An Anchor-Free Two-Stage Tracker Based on a Strong SiamFC Baseline. In: Bartoli, A., Fusiello, A. (eds) Computer Vision – ECCV 2020 Workshops. ECCV 2020. Lecture Notes in Computer Science, vol 12539. Springer, Cham. https://doi.org/10.1007/978-3-030-68238-5_42

DOI: https://doi.org/10.1007/978-3-030-68238-5_42

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-68237-8

Online ISBN: 978-3-030-68238-5

eBook Packages: Computer Science, Computer Science (R0)