Abstract

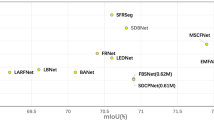

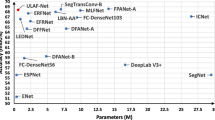

Semantic segmentation is a dense, pixel-level labeling task that plays a core role in autonomous driving. Balancing precision against speed is a frequently studied issue in this setting. In this paper, we propose an alternative, the attentional residual dense factorized network (AttRDFNet), to address this issue. Specifically, we design a residual dense factorized convolution block (RDFB), which exploits low-level and high-level layer-wise features through dense connections to boost segmentation precision, while keeping computation efficient by factorizing a large convolution kernel into the product of two smaller kernels. This reduces the computational burden and makes real-time inference possible. To further leverage layer-wise features, we explore graininess-aware channel and spatial attention modules to model different levels of salient features of interest. As a result, AttRDFNet runs on inputs of resolution \( 512 \times 1024 \) at 55.6 frames per second on a single Titan X GPU, with 68.5% mean IoU on the Cityscapes test set. Experiments on the Cityscapes dataset show that AttRDFNet achieves real-time inference with precision competitive with well-performing counterparts.
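To make the kernel factorization idea concrete, the following is a minimal sketch in plain Python. It is not the authors' RDFB implementation. It only illustrates, under stated assumptions, that a \( 3 \times 3 \) kernel which is the product of a \( 3 \times 1 \) and a \( 1 \times 3 \) kernel gives the same output as applying the two smaller kernels in sequence, and that the factorized form needs fewer weights. The kernel values, the toy image, the helper names, and the channel count of 64 are all hypothetical choices made for the example.

```python
# Illustrative sketch only; names and values are assumptions, not from the paper.

def correlate2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (cross-correlation, as in CNNs)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def outer(col, row):
    """Build the k x k kernel that is the product of a k x 1 and a 1 x k kernel."""
    return [[c * r for r in row] for c in col]


def weights_full(k, c_in, c_out):
    """Weight count of one k x k convolution layer (biases ignored)."""
    return k * k * c_in * c_out


def weights_factorized(k, c_in, c_out):
    """Weight count of a k x 1 layer (c_in -> c_out) plus a 1 x k layer (c_out -> c_out)."""
    return k * c_in * c_out + k * c_out * c_out


# Hypothetical 1D kernels chosen for the example.
col = [1, 2, 1]          # 3 x 1 (vertical) kernel
row = [-1, 0, 1]         # 1 x 3 (horizontal) kernel
full = outer(col, row)   # the equivalent 3 x 3 kernel

# Small deterministic 5 x 6 integer "image".
image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(5)]

# One pass with the 3 x 3 kernel ...
direct = correlate2d_valid(image, full)
# ... versus a 3 x 1 pass followed by a 1 x 3 pass.
vertical = correlate2d_valid(image, [[c] for c in col])
factorized = correlate2d_valid(vertical, [row])

same_result = direct == factorized
print("outputs identical:", same_result)
print("3x3 weights, 64 -> 64 channels:", weights_full(3, 64, 64))
print("factorized weights, 64 -> 64 channels:", weights_factorized(3, 64, 64))
```

The exact equivalence holds only for kernels that can be written as such a product and only when no nonlinearity sits between the two 1D convolutions. A network that inserts an activation between them, or learns the two 1D kernels freely, trades that equivalence for the reduced weight count.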

Acknowledgments

This work was supported by the National Natural Science Foundation of China [61806213, 61702134, U1435222].

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, L., Lan, L., Zhang, X., Huang, X., Luo, Z. (2019). Attentional Residual Dense Factorized Network for Real-Time Semantic Segmentation. In: Tetko, I., Kůrková, V., Karpov, P., Theis, F. (eds) Artificial Neural Networks and Machine Learning – ICANN 2019: Image Processing. ICANN 2019. Lecture Notes in Computer Science, vol 11729. Springer, Cham. https://doi.org/10.1007/978-3-030-30508-6_17

DOI: https://doi.org/10.1007/978-3-030-30508-6_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30507-9

Online ISBN: 978-3-030-30508-6

eBook Packages: Computer Science, Computer Science (R0)