Abstract

Image quality measurement is a critical problem for image super-resolution (SR) algorithms. SR algorithms are usually evaluated with well-known objective metrics, e.g., PSNR and SSIM, but these indices do not agree well with human perception. Recently, a more reasonable perception measure was proposed in [1] and adopted by the PIRM-SR 2018 challenge. Motivated by [1], we aim to generate high-quality SR results that balance two indices: the perceptual index and the root-mean-square error (RMSE). To this end, we design a new deep SR framework, dubbed Bi-GANs-ST, that integrates two complementary generative adversarial network (GAN) branches. The first, memory residual SRGAN (MR-SRGAN), focuses on improving objective performance, e.g., reducing the RMSE. The second, weight perception SRGAN (WP-SRGAN), produces results that favor better subjective perception via a two-stage adversarial training mechanism. Finally, to produce a result with both a good perceptual score and a low RMSE, we use a soft-thresholding method to merge the outputs of the two GANs. Our method performs well on the perceptual image super-resolution task of the PIRM 2018 challenge, and experimental results on five benchmarks show that it achieves highly competitive performance compared with other state-of-the-art methods.


1 Introduction

Single image super-resolution (SISR) is an active research topic in image restoration. It is an inverse problem that recovers a high-resolution (HR) image from a low-resolution (LR) image. Traditional SR algorithms are inferior to deep-learning-based SR algorithms in speed and in some distortion measures, e.g., peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM). In addition, deep-learning-based SR algorithms can also achieve excellent visual quality [2,3,4,5,6,7,8].

Deep-learning-based SR algorithms can be divided into two categories. The first builds on convolutional neural networks optimized with a classic L1 or L2 loss in pixel space; these methods achieve higher PSNR but produce over-smoothed results because they lack high-frequency texture information. Representative approaches are SRResNet [5] and EDSR [7]. The second category is based on generative adversarial networks (GANs), e.g., SRGAN [5] and EnhanceNet [9], which add a perceptual loss to the optimization objective. Such algorithms restore more details and improve visual quality at the expense of objective evaluation indices. Different application scenarios call for different quality criteria: medical imaging may prioritize objective evaluation metrics, whereas subjective visual perception may matter more for natural images. Therefore, a balance between objective evaluation criteria and subjective visual effects is needed.

Lai et al. [6] proposed a cascaded pyramid structure with two branches: one for feature extraction and the other for image reconstruction. A Charbonnier loss is applied at multiple levels, and the network generates sub-band residual images at each level. Tong et al. [15] introduced dense blocks that combine low-level and high-level features, which effectively improves performance. Lim et al. [7] removed Batch Normalization layers from residual blocks (ResBlocks) and adopted a residual scaling factor to stabilize training; they also proposed a multi-scale SR model that handles several scaling factors with a single network. However, when the scaling factor is \(4\times \) or larger, the results of the aforementioned methods mostly look smooth and lack high-frequency details. The reason is that their optimization targets mostly minimize L1 or L2 loss in pixel space without considering high-level features.

2.2 Image Super-Resolution Using Generative Adversarial Networks

Super-Resolution with Adversarial Training. Generative adversarial nets (GANs) [16] consist of a Generator and a Discriminator. In super-resolution, e.g., SRGAN [5], the Generator produces SR images, and the Discriminator judges whether an image is real or generated. The Generator tries to generate images realistic enough to fool the Discriminator, while the Discriminator tries to distinguish the ground truth from the generated SR images. The two networks thus play an adversarial game. Through adversarial training, the generated data can eventually follow image statistics similar to those of the real data, which is why adversarial learning is important for recovering textural statistics in SR.
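To make the adversarial game concrete, the sketch below writes out the standard binary cross-entropy objectives of Goodfellow et al. [16] in NumPy, taking the Discriminator's output probabilities as given. The function names are illustrative, and the generator term uses the common non-saturating form \(-\log D(G(\cdot ))\); it is not the exact loss used by any particular SR method discussed here.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(real) -> 1 and D(generated) -> 0.

    d_real: D's probabilities that ground-truth HR images are real.
    d_fake: D's probabilities that generated SR images are real.
    """
    d_real = np.asarray(d_real, dtype=np.float64)
    d_fake = np.asarray(d_fake, dtype=np.float64)
    return float(-np.mean(np.log(d_real + EPS) + np.log(1.0 - d_fake + EPS)))


def generator_adversarial_loss(d_fake):
    """Non-saturating generator loss: small when D is fooled (D(G(.)) -> 1)."""
    d_fake = np.asarray(d_fake, dtype=np.float64)
    return float(-np.mean(np.log(d_fake + EPS)))
```

A Discriminator that is fooled (outputs near 1 for generated images) yields a small generator loss and a large discriminator loss; this opposing pull is what drives adversarial training.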

Perceptual Loss for Deep Learning. To better agree with human perception, Johnson et al. [17] introduced a perceptual loss based on high-level features extracted from pre-trained networks, e.g., VGG16 and VGG19, for style transfer and SR. Ledig et al. [5] proposed SRGAN, which makes the SR image similar to the ground truth (GT) not only in low-level pixels but also in high-level features; as a result, SRGAN can generate realistic images. Sajjadi et al. proposed EnhanceNet [9], which applies a similar approach and introduces a local texture matching loss that reduces visually unpleasant artifacts. Zhang et al. [18] explained why perceptual losses based on deep features fit human visual perception well. Mechrez et al. proposed the contextual loss [19, 20], which is based on the idea of natural image statistics; among previously published works, it currently achieves the best perceptual results. Although these algorithms obtain better perceptual image quality and visual performance, they do not achieve better results in terms of objective evaluation criteria.

2.3 Image Quality Evaluation

There are two ways to evaluate image quality: objective and subjective assessment. Popular objective criteria include PSNR, SSIM, the multi-scale structural similarity index (MS-SSIM), the information fidelity criterion (IFC), weighted peak signal-to-noise ratio (WPSNR) and the noise quality measure (NQM) [21]. Although IFC has the highest correlation with perceptual scores for SR evaluation [21], it is still not an ideal criterion for assessing image quality. In previous works [22, 23], subjective assessment is usually scored by human subjects. However, there is not yet an objective metric that agrees well with human subjective perception. The PIRM-SR 2018 challenge [10] proposed a perceptual index that combines the quality measures of Ma et al. [24] and NIQE [25], as follows,

\[\text{Perceptual index} = \frac{1}{2}\left((10 - \text{Ma}) + \text{NIQE}\right).\]

Here, a lower perceptual index indicates better perceptual quality.
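Given Ma and NIQE scores from their reference implementations (not shown here), the perceptual index reduces to simple arithmetic. The sketch below assumes the PIRM-SR 2018 definition \(\frac{1}{2}((10-\text{Ma})+\text{NIQE})\); the function name is illustrative and the input values in the example are arbitrary.

```python
def perceptual_index(ma_score: float, niqe_score: float) -> float:
    """PIRM-SR 2018 perceptual index: 0.5 * ((10 - Ma) + NIQE).

    Ma's score lies in [0, 10] and is higher for better quality, so it is
    flipped to (10 - Ma); NIQE is already "lower is better".
    """
    return 0.5 * ((10.0 - ma_score) + niqe_score)


print(perceptual_index(8.0, 3.0))  # 2.5
```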

3 Proposed Methods

We first describe the overall structure of Bi-GANs-ST and then detail the two networks, MR-SRGAN and WP-SRGAN. Finally, Sect. 3.4 presents the soft-thresholding method used for image fusion.

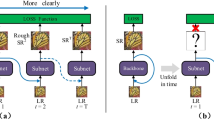

3.1 Basic Architecture of Bi-GANs-ST

As shown in Fig. 1, our Bi-GANs-ST mainly consists of three parts: (1) memory residual SRGAN (MR-SRGAN), (2) weight perception SRGAN (WP-SRGAN) and (3) soft thresholding (ST). The two GANs generate two complementary SR images, and ST fuses the two SR results to balance the perceptual score and RMSE.

3.2 MR-SRGAN

Network Architecture. As illustrated in Fig. 2, our MR-SRGAN is composed of a Generator and a Discriminator. In the Generator, the LR image is fed to one Conv layer that extracts shallow features. Four memory residual (MR) blocks then follow; as in MemEDSR [26], they help form persistent memory and improve the model's feature-selection ability. Each MR block consists of four ResBlocks and a gate unit: the ResBlocks produce four groups of features, the gate unit selects a subset of these features, and the block input is added to the selected features to form the block output. In the ResBlocks, all activation layers use the parametric rectified linear unit (PReLU), and all Batch Normalization (BN) layers are removed from the generator to reduce computational complexity. Finally, two upsampling operations bring the features to the HR image size. In Figs. 2 and 3, n denotes the number of feature maps and s the stride of each convolutional layer. The Discriminator uses the same setting as SRGAN [5].
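The data flow of one MR block can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the four ResBlocks are assumed to be chained, the gate unit is assumed to be a \(1\times 1\) convolution over the concatenated ResBlock outputs (a MemNet-style gate), the PReLU slope is fixed rather than learned, and all weights are random placeholders, so the example only checks shapes.

```python
import numpy as np

rng = np.random.default_rng(0)  # hypothetical random weights, for shape checks only


def conv3x3(x, w, b):
    """Zero-padded 3x3 convolution, stride 1 (cross-correlation, as in DL frameworks).

    x: (C_in, H, W) feature map, w: (C_out, C_in, 3, 3) kernel, b: (C_out,) bias.
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            window = xp[:, dy:dy + h, dx:dx + wd]  # shifted view of the input
            out += np.einsum("oi,ihw->ohw", w[:, :, dy, dx], window)
    return out + b[:, None, None]


def prelu(x, slope=0.25):
    """PReLU with a fixed slope; in a trained model the slope is learned."""
    return np.where(x > 0, x, slope * x)


def make_resblock(c):
    """Hypothetical random parameters for one Conv-PReLU-Conv ResBlock."""
    return (rng.normal(0, 0.05, (c, c, 3, 3)), np.zeros(c),
            rng.normal(0, 0.05, (c, c, 3, 3)), np.zeros(c))


def resblock(x, params):
    """Conv -> PReLU -> Conv with an identity skip; no Batch Normalization."""
    w1, b1, w2, b2 = params
    return x + conv3x3(prelu(conv3x3(x, w1, b1)), w2, b2)


def mr_block(x, resblocks, gate_w, gate_b):
    """Memory residual block (assumed layout): 4 chained ResBlocks, a 1x1-conv
    gate over their concatenated outputs, and a skip from the block input."""
    feats, h = [], x
    for params in resblocks:
        h = resblock(h, params)
        feats.append(h)                              # keep every ResBlock output
    stacked = np.concatenate(feats, axis=0)          # (4C, H, W)
    gated = np.einsum("oi,ihw->ohw", gate_w, stacked) + gate_b[:, None, None]  # 4C -> C
    return x + gated                                 # add block input to selected features


C = 8
x = rng.normal(size=(C, 12, 12))
blocks = [make_resblock(C) for _ in range(4)]
gate_w = rng.normal(0, 0.05, (C, 4 * C))
gate_b = np.zeros(C)
y = mr_block(x, blocks, gate_w, gate_b)
print(y.shape)  # (8, 12, 12)
```

The output keeps the input shape, which is what allows four MR blocks to be stacked before the upsampling layers.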

Loss Function. The total generator loss consists of three parts, namely pixel-wise loss, adversarial loss and perceptual loss, formulated as follows,

where \(L_{pixel}\) is the pixel-wise MSE loss between the generated images and the ground truth, \(L_{vgg}\) is the perceptual loss, computed as the MSE between features extracted by a pre-trained VGG16 network, and \(L_{adv}\) is the adversarial loss for the Generator, from which we remove the logarithm. \(\lambda _1\) and \(\lambda _2\) are the weights of the adversarial loss and the perceptual loss, respectively. \(x_{t}\) and \(x_{l}\) denote the ground-truth and LR images, respectively, \(G(x_{l})\) is the SR image generated by the Generator, N is the number of training samples, and \(\phi \) denotes the features extracted from the pre-trained VGG16 network.
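The weighted sum described above can be sketched as below, using \(\lambda _1 = 10^{-3}\) and \(\lambda _2 = 6\times 10^{-3}\) from Sect. 4.1. Several parts are assumptions because the loss equations are not reproduced in this text: the log-free adversarial term is written as \(-\frac{1}{N}\sum D(G(x_l))\), and \(\phi \) is a hypothetical \(2\times 2\) average-pooling stand-in for VGG16 features so that the sketch runs without pre-trained weights.

```python
import numpy as np

LAMBDA_1 = 1e-3  # weight of the adversarial loss (Sect. 4.1)
LAMBDA_2 = 6e-3  # weight of the perceptual loss (Sect. 4.1)


def phi(images):
    """Hypothetical stand-in for pre-trained VGG16 features: 2x2 average pooling.

    images: (N, H, W, C) with even H and W. A real implementation would run VGG16.
    """
    n, h, w, c = images.shape
    return images.reshape(n, h // 2, 2, w // 2, 2, c).mean(axis=(2, 4))


def mr_srgan_generator_loss(sr, hr, d_fake):
    """L_G = L_pixel + lambda_1 * L_adv + lambda_2 * L_vgg.

    sr: G(x_l), hr: x_t, both (N, H, W, C); d_fake: D(G(x_l)) as an (N,) array.
    """
    l_pixel = np.mean((hr - sr) ** 2)            # pixel-wise MSE
    l_vgg = np.mean((phi(hr) - phi(sr)) ** 2)    # MSE in (stand-in) feature space
    l_adv = -np.mean(d_fake)                     # assumed log-free adversarial term
    return float(l_pixel + LAMBDA_1 * l_adv + LAMBDA_2 * l_vgg)
```

With the small weights of Sect. 4.1, the pixel-wise term dominates, which is consistent with MR-SRGAN's emphasis on a low RMSE.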

3.3 WP-SRGAN

Network Architecture. As depicted in Fig. 3, the generator of WP-SRGAN uses 16 ResBlocks, each consisting of a convolutional layer, a PReLU activation layer and another convolutional layer. Batch Normalization (BN) layers are removed in both the Generator and the Discriminator; apart from this, the Discriminator of WP-SRGAN has the same architecture as that of MR-SRGAN.

Loss Function. As shown in Fig. 3, WP-SRGAN adopts a two-stage adversarial training mechanism that uses a different Generator loss in each stage. In the first stage (red box), we optimize a Generator loss consisting of pixel-wise loss and adversarial loss to obtain better objective performance (i.e., a lower RMSE). In the second stage (orange box), we take the network parameters from the first stage as the pre-trained model and replace the generator loss with perceptual loss plus adversarial loss, optimizing for subjective visual quality (i.e., a lower perceptual index). The two stage-wise losses are given in Eqs. (6) and (7).

Here, the pixel-wise loss is defined as in Eq. (3), the perceptual loss is the MSE between features extracted from a pre-trained VGG19 network, and the adversarial loss is denoted as follows,

With the two-stage adversarial training mechanism, the generated SR image becomes similar to the corresponding ground truth in the high-level feature space.
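The stage switch can be expressed as a single loss function with a stage argument. This sketch assumes the standard \(-\log D(G(x_l))\) adversarial term and reuses \(\lambda _1\) and \(\lambda _2\) from Sect. 4.1 as the stage weights; the exact weighting in Eqs. (6), (7) and (8) may differ, and the VGG19 features are taken as precomputed inputs. The function name is hypothetical.

```python
import numpy as np

LAMBDA_1 = 1e-3  # adversarial weight (Sect. 4.1)
LAMBDA_2 = 6e-3  # perceptual weight (Sect. 4.1)
EPS = 1e-12      # guards against log(0)


def wp_srgan_generator_loss(stage, sr, hr, feat_sr, feat_hr, d_fake):
    """Generator loss for the two-stage schedule (assumed weighting).

    stage 1: pixel-wise MSE + adversarial term -> favors a lower RMSE.
    stage 2: feature MSE + adversarial term    -> favors a lower perceptual index.
    feat_sr / feat_hr: VGG19 features of G(x_l) and x_t, computed elsewhere.
    d_fake: Discriminator outputs D(G(x_l)) in (0, 1).
    """
    l_adv = -np.mean(np.log(np.asarray(d_fake) + EPS))  # assumed standard GAN term
    if stage == 1:
        return float(np.mean((hr - sr) ** 2) + LAMBDA_1 * l_adv)
    if stage == 2:
        return float(LAMBDA_2 * np.mean((feat_hr - feat_sr) ** 2) + LAMBDA_1 * l_adv)
    raise ValueError("stage must be 1 or 2")
```

In practice, the second stage would start from the Generator weights reached at the end of the first stage, as described above; only the loss changes between stages, so stage 2 no longer penalizes pixel-wise differences directly.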

3.4 Soft-Thresholding

The two GANs described above produce different SR results. MR-SRGAN focuses on improving objective performance, whereas WP-SRGAN produces results that favor better subjective perception. To balance the perceptual score and RMSE of the SR results, we adopt the soft-thresholding method proposed by Deng et al. [27] to fuse the two SR images (i.e., the outputs of MR-SRGAN and WP-SRGAN); the fusion can be regarded as a form of pixel interpolation. The formulas are as follows,

where \(I_{e}\) is the fused image, \(\Delta =I_{G}-I_{g}\), \(I_{G}\) is the image generated by WP-SRGAN (which has the lower perceptual score), and \(I_{g}\) is the image generated by MR-SRGAN (which has the lower RMSE). \(\xi \) is the adjustable threshold, discussed in Sect. 4.2.
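Because Eqs. (9) and (10) are not reproduced in this text, the following is a sketch of one common soft-thresholding fusion, assuming \(I_e = I_g + S_\xi (\Delta )\) with \(S_\xi (\Delta ) = \mathrm{sign}(\Delta )\max (|\Delta | - \xi , 0)\) and both images scaled to [0, 1]; the exact form in Deng et al. [27] may differ, and the function names are hypothetical. Under this assumption, \(\xi = 0\) returns the WP-SRGAN image and \(\xi = 1\) returns the MR-SRGAN image, so sweeping \(\xi \) traces a path between the two outputs.

```python
import numpy as np


def soft_threshold(delta, xi):
    """Soft-thresholding operator: shrink every value toward zero by xi."""
    return np.sign(delta) * np.maximum(np.abs(delta) - xi, 0.0)


def fuse(i_G, i_g, xi):
    """Assumed fusion form: I_e = I_g + S_xi(I_G - I_g).

    i_G: WP-SRGAN output (lower perceptual score).
    i_g: MR-SRGAN output (lower RMSE).
    Both are float arrays scaled to [0, 1]; the result is clipped to that range.
    """
    delta = i_G - i_g
    return np.clip(i_g + soft_threshold(delta, xi), 0.0, 1.0)
```

Under this form, only large differences between the two outputs (typically strong textures from WP-SRGAN) survive the threshold, while small differences fall back to the lower-RMSE MR-SRGAN pixels.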

4 Experimental Results

In this section, we conduct extensive experiments on five publicly available benchmarks for \(4\times \) image SR: Set5 [28], Set14 [29], BSD100 [30], Urban100 [31] and Manga109 [32]. Set5, Set14 and BSD100 mainly contain natural images, Urban100 consists of 100 urban images, and Manga109 consists of Japanese manga images with fewer texture features. We then compare our Bi-GANs-ST algorithm with state-of-the-art SR algorithms in terms of objective criteria and subjective visual perception.

4.1 Implementation and Training Details

We train our networks on the RAISE dataset, which consists of 8156 HR RAW images. The LR images are obtained by downsampling the HR images with bicubic interpolation by a factor of 4. To analyze our models' capacity, we evaluate them on the PIRM-SR 2018 self validation dataset [\(3\times 3\). The learning rate is initialized to \(10^{-4}\), and the Adam optimizer with momentum 0.9 is used. The network is trained for 600 epochs, and we select the best results according to SSIM.

In the Generator of WP-SRGAN, 16 ResBlocks are used with \(3\times 3\) filters, while the first and last convolutional layers use \(9\times 9\) filters. All convolutional layers use a stride of one and a padding of one. The weights are initialized with the Xavier method. All convolutional and upsampling layers are followed by a PReLU activation function. The learning rate is initialized to \(10^{-4}\) and decreased by a factor of 10 after \(2.5\times 10^{5}\) iterations, for \(5\times 10^{5}\) iterations in total. We use the Adam optimizer with momentum 0.9. In the Discriminator, the filter size is \(3\times 3\), the number of feature maps doubles from 64 to 512, and the stride alternates between one and two.
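The learning-rate schedule and Discriminator layout described above can be written down as small helpers. This is a sketch: the function names are hypothetical, and the layer list assumes the SRGAN [5] arrangement of eight \(3\times 3\) convolutional layers, which matches the doubling-and-alternating description.

```python
def wp_srgan_learning_rate(iteration, base_lr=1e-4, drop_at=250_000):
    """Step schedule: base_lr before `drop_at` iterations, base_lr / 10 afterwards
    (training runs for 500,000 iterations, so the rate drops only once)."""
    return base_lr if iteration < drop_at else base_lr / 10.0


def discriminator_conv_config():
    """(out_channels, stride) of each 3x3 conv layer in an SRGAN-style Discriminator:
    channels double from 64 to 512 and the stride alternates 1, 2."""
    layers = []
    for channels in (64, 128, 256, 512):
        layers.append((channels, 1))
        layers.append((channels, 2))
    return layers


print(discriminator_conv_config())
# [(64, 1), (64, 2), (128, 1), (128, 2), (256, 1), (256, 2), (512, 1), (512, 2)]
```

Each stride-2 layer halves the spatial resolution, so the four of them reduce the input by a factor of 16 per side before the Discriminator's classification head.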

The weights of the adversarial loss and the perceptual loss in both MR-SRGAN and WP-SRGAN (i.e., \(\lambda _1\) and \(\lambda _2\)) are set to \(10^{-3}\) and \(6\times 10^{-3}\), respectively. The threshold for image fusion (i.e., \(\xi \)) is set to 0.73 in our experiments.

4.2 Model Analysis

Training WP-SRGAN with Two Stages. We analyze the effect of the two-stage adversarial training mechanism in WP-SRGAN. The quantitative and qualitative results on the PIRM-SR 2018 self validation dataset are shown in Table 1 and Fig. 4.

In Table 1, WP-SRGAN trained with both stages achieves a lower perceptual score than WP-SRGAN trained with the first stage only. As shown in Fig. 4, two-stage training recovers many more details than first-stage-only training, and the resulting images look more realistic. Therefore, we use the two-stage WP-SRGAN in our model.

Soft Thresholding. The challenge defines three regions by RMSE: Region 1 (RMSE \(\le 11.5\)), Region 2 (\(11.5 <\) RMSE \(\le 12.5\)) and Region 3 (\(12.5 <\) RMSE \(\le 16\)). Using the results fused by Eqs. (9) and (10) under different threshold settings, we draw the perception-distortion plane shown in Fig. 5. The points on the curve correspond to thresholds from 0 to 1 in steps of 0.1. Experimental results show that we obtain an excellent perceptual score in Region 3 when \(\xi \) is set to 0.73.
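Selecting \(\xi \) from such a sweep amounts to keeping the points that fall inside the target RMSE region and taking the one with the lowest perceptual index. The helpers below are hypothetical; they assume the PIRM-SR 2018 region boundaries (Region 1 up to RMSE 11.5, Region 2 up to 12.5, Region 3 up to 16), and the sweep values in the usage example are made up for illustration, not results from Fig. 5.

```python
def pirm_region(rmse):
    """PIRM-SR 2018 region for a given RMSE, or None if RMSE exceeds 16."""
    if rmse <= 11.5:
        return 1
    if rmse <= 12.5:
        return 2
    if rmse <= 16.0:
        return 3
    return None


def best_threshold(sweep, region):
    """Threshold with the lowest perceptual index among points inside `region`.

    sweep: iterable of (xi, rmse, perceptual_index) tuples measured on a
    validation set. Returns None if no point falls inside the region.
    """
    candidates = [p for p in sweep if pirm_region(p[1]) == region]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p[2])[0]


# Hypothetical sweep: (xi, RMSE, perceptual index) triples, not measured values.
sweep = [(0.0, 16.8, 2.1), (0.5, 14.0, 2.6), (0.6, 13.0, 2.9), (1.0, 11.9, 4.5)]
print(best_threshold(sweep, 3))  # 0.5
```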

Model Capacity. To demonstrate the capability of our models, we analyze the perceptual score and RMSE of the SR results of MR-SRGAN, WP-SRGAN and Bi-GANs-ST on the PIRM-SR 2018 self validation dataset. The quantitative and qualitative results are shown in Table 2 and Fig. 6. The results show that Bi-GANs-ST strikes a balance between perceptual score and RMSE.

4.3 Comparison with State-of-the-Art Methods

To verify the effectiveness of our Bi-GANs-ST, we conduct extensive experiments on five publicly available benchmarks and compare the results with other state-of-the-art SR algorithms, namely EDSR [7] and EnhanceNet [9], using their open-source implementations. We evaluate the SR images with image quality assessment indices (i.e., PSNR, SSIM, perceptual score and RMSE), where PSNR and SSIM are computed on the Y channel after removing 6 pixels from each border.
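The PSNR protocol above (Y channel, 6-pixel border removed) can be sketched as follows. The luma conversion assumes the ITU-R BT.601 coefficients used by MATLAB's rgb2ycbcr for 8-bit images, a common convention in SR evaluation that the text does not specify; the function names are illustrative, and SSIM would be computed on the same cropped Y channels.

```python
import numpy as np


def rgb_to_y(img):
    """BT.601 luma for 8-bit-range RGB (MATLAB rgb2ycbcr convention).

    img: (H, W, 3) array with values in [0, 255]; returns Y in [16, 235].
    """
    img = np.asarray(img, dtype=np.float64)
    return 16.0 + (65.481 * img[..., 0] + 128.553 * img[..., 1]
                   + 24.966 * img[..., 2]) / 255.0


def shave(y, border):
    """Drop `border` pixels from every side (no-op when border == 0)."""
    return y[border:-border, border:-border] if border > 0 else y


def psnr_y(sr, hr, border=6):
    """PSNR (dB) between two RGB images, measured on the cropped Y channel."""
    diff = shave(rgb_to_y(sr), border) - shave(rgb_to_y(hr), border)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(255.0 ** 2 / mse))
```

Removing the border avoids penalizing artifacts that convolution padding introduces near image edges, which is why the comparison crops 6 pixels before measuring.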

The quantitative PSNR and SSIM results are shown in Table 3. EDSR performs best, with PSNR values 1.0 dB, 0.54 dB, 0.34 dB, 0.83 dB and 1.13 dB higher than our MR-SRGAN on the five benchmarks. Our Bi-GANs-ST achieves higher PSNR than EnhanceNet on Set5, Urban100 and Manga109, by approximately 0.64 dB, 0.3 dB and 0.13 dB, respectively, and higher SSIM than EnhanceNet on all benchmarks. Table 4 shows the quantitative evaluation of average perceptual score and RMSE. For the perceptual score, our WP-SRGAN achieves the best and Bi-GANs-ST the second-best results on all benchmarks except Set5. For RMSE, EDSR performs best and our MR-SRGAN second best.

Fig. 7 shows the visual results of \(4\times \) enlargement on the five benchmarks, produced (from left to right) by Bicubic, EDSR, EnhanceNet, MR-SRGAN, WP-SRGAN and Bi-GANs-ST, followed by the ground truth. EDSR generates images that look clear and smooth but not realistic, and the SR images of our MR-SRGAN are similar to those of EDSR. EnhanceNet generates more realistic images but with unpleasant noise. Our WP-SRGAN recovers details comparable to EnhanceNet with less noise, producing results closer to the ground truth, and our Bi-GANs-ST shows even less noise than WP-SRGAN.

5 Conclusions

In this paper, we propose a new deep SR framework, Bi-GANs-ST, which integrates two complementary generative adversarial network (GAN) branches. To better balance the perceptual score and RMSE of the generated images, we redesign two GANs based on SRGAN (i.e., MR-SRGAN and WP-SRGAN) that generate two complementary SR results. Finally, we use a soft-thresholding method to fuse the two SR results, trading off perceptual score against RMSE. Experimental results on five publicly available benchmarks show that our algorithm achieves better perceptual results than other SR algorithms for \(4\times \) enlargement.


References

Blau, Y., Michaeli, T.: The perception-distortion tradeoff. arXiv preprint arXiv:1711.06077 (2017)

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38(2), 295–307 (2016)

Kim, J., Kwon Lee, J., Mu Lee, K.: Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1646–1654 (2016)

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 391–407. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_25

Ledig, C., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: CVPR, vol. 2, p. 4 (2017)

Lai, W.S., Huang, J.B., Ahuja, N., Yang, M.H.: Deep Laplacian pyramid networks for fast and accurate super-resolution. In: IEEE Conference on Computer Vision and Pattern Recognition, vol. 2, p. 5 (2017)

Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, vol. 1, p. 4 (2017)

Haris, M., Shakhnarovich, G., Ukita, N.: Deep back-projection networks for super-resolution. In: Conference on Computer Vision and Pattern Recognition (2018)

Sajjadi, M.S., Schölkopf, B., Hirsch, M.: EnhanceNet: single image super-resolution through automated texture synthesis. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 4501–4510. IEEE (2017)

Blau, Y., Mechrez, R., Timofte, R., Michaeli, T., Zelnik-Manor, L.: 2018 PIRM challenge on perceptual image super-resolution. arXiv preprint arXiv:1809.07517 (2018)

Shi, W., et al.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1874–1883 (2016)

Kim, J., Kwon Lee, J., Mu Lee, K.: Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1637–1645 (2016)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Mao, X., Shen, C., Yang, Y.B.: Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In: Advances in Neural Information Processing Systems, pp. 2802–2810 (2016)

Tong, T., Li, G., Liu, X., Gao, Q.: Image super-resolution using dense skip connections. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 4809–4817. IEEE (2017)

Goodfellow, I., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, pp. 2672–2680 (2014)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 694–711. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_43

Zhang, R., Isola, P., Efros, A.A., Shechtman, E., Wang, O.: The unreasonable effectiveness of deep features as a perceptual metric. arXiv preprint (2018)

Mechrez, R., Talmi, I., Zelnik-Manor, L.: The contextual loss for image transformation with non-aligned data. arXiv preprint arXiv:1803.02077 (2018)

Mechrez, R., Talmi, I., Shama, F., Zelnik-Manor, L.: Learning to maintain natural image statistics. arXiv preprint arXiv:1803.04626 (2018)

Yang, C.-Y., Ma, C., Yang, M.-H.: Single-image super-resolution: a benchmark. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8692, pp. 372–386. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10593-2_25

Moorthy, A.K., Bovik, A.C.: Blind image quality assessment: from natural scene statistics to perceptual quality. IEEE Trans. Image Process. 20(12), 3350–3364 (2011)

Mittal, A., Moorthy, A.K., Bovik, A.C.: No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 21(12), 4695–4708 (2012)

Ma, C., Yang, C.Y., Yang, X., Yang, M.H.: Learning a no-reference quality metric for single-image super-resolution. Comput. Vis. Image Underst. 158, 1–16 (2017)

Mittal, A., Soundararajan, R., Bovik, A.C.: Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 20(3), 209–212 (2013)

Chen, R., Qu, Y., Zeng, K., Guo, J., Li, C., Xie, Y.: Persistent memory residual network for single image super resolution. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, vol. 6 (2018)

Deng, X.: Enhancing image quality via style transfer for single image super-resolution. IEEE Signal Process. Lett. 25(4), 571–575 (2018)

Bevilacqua, M., Roumy, A., Guillemot, C., Alberi-Morel, M.L.: Low-complexity single-image super-resolution based on nonnegative neighbor embedding (2012)

Zeyde, R., Elad, M., Protter, M.: On single image scale-up using sparse-representations. In: Boissonnat, J.-D., et al. (eds.) Curves and Surfaces 2010. LNCS, vol. 6920, pp. 711–730. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-27413-8_47

Arbelaez, P., Maire, M., Fowlkes, C., Malik, J.: Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 898–916 (2011)

Huang, J.B., Singh, A., Ahuja, N.: Single image super-resolution from transformed self-exemplars. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5197–5206 (2015)

Matsui, Y., et al.: Sketch-based manga retrieval using manga109 dataset. Multimedia Tools Appl. 76(20), 21811–21838 (2017)

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant 61876161, Grant 61772524 and Grant 61373077, and in part by the Beijing Natural Science Foundation under Grant 4182067.


Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Luo, X., Chen, R., Xie, Y., Qu, Y., Li, C. (2019). Bi-GANs-ST for Perceptual Image Super-Resolution. In: Leal-Taixé, L., Roth, S. (eds) Computer Vision – ECCV 2018 Workshops. ECCV 2018. Lecture Notes in Computer Science, vol 11133. Springer, Cham. https://doi.org/10.1007/978-3-030-11021-5_2


DOI: https://doi.org/10.1007/978-3-030-11021-5_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11020-8

Online ISBN: 978-3-030-11021-5

eBook Packages: Computer Science, Computer Science (R0)