Abstract

Background

Health systems in the United States are increasingly required to become leaders in quality to compete successfully in a value-conscious purchasing market. Doing so involves develo** effective clinical teams using approaches like the clinical microsystems framework. However, there has been limited assessment of this approach within United States primary care settings.

Methods

This paper describes the implementation, mixed-methods evaluation results, and lessons learned from instituting a Microsystems approach across 6 years with 58 primary care teams at a large Midwestern academic health care system. The evaluation consisted of a longitudinal survey augmented by interviews and focus groups. Structured facilitated longitudinal discussions with leadership captured ongoing lessons learned. Quantitative analysis employed ordinal logistic regression and compared aggregate responses at 6-months and 12-months to those at the baseline period. Qualitative analysis used an immersion/crystallization approach.

Results

Survey results (N = 204) indicated improved perceptions of: organizational support, team effectiveness and cohesion, meeting and quality improvement skills, and team communication. Thematic challenges from the qualitative data included: lack of time and coverage for participation, need for technical/technology support, perceived devaluation of improvement work, difficulty aggregating or spreading learnings, tensions between team and clinic level change, a part-time workforce, team instability and difficulties incorporating a data driven improvement approach.

Conclusions

These findings suggest that a microsystems approach is valuable for building team relationships and quality improvement skills but is challenged in a large, diverse academic primary care context. They additionally suggest that primary care transformation will require purposeful changes implemented across the micro to macro-level including but not only focused on quality improvement training for microsystem teams.

Similar content being viewed by others

Background

In the United States, health systems are increasingly required to become leaders in quality improvement (QI) in order to compete successfully in a value-conscious purchasing market [1,2,3] Effective clinical teams are considered essential to the production of high-value systems of care particularly within primary care. The clinical microsystems framework [4] is one approach to training primary care teams how to engage in QI activities. Based on learnings from analyses of high-performing service organizations both external to and within health care [4, 5], this approach recognizes that health care value is created (or lost) by front-line teams informed by the needs of their patients and capable of designing and implementing change. In addition to disciplined process improvement education, the Microsystem approach recognizes the importance of managing relationships between microsystem members and between microsystems and the larger macro-systems [4]. Established curriculum include training on practice assessment, process improvement, socio-cultural and organizational change skills [6].

Despite widespread use of the Microsystems approach across multiple countries and hospital and ambulatory care settings [7,8,9,10,11], published evaluations of its implementation within United States primary care settings are limited in number and scope. This gap is surprising given an international emphasis on re-engineering primary care [12,13,14,15,16], and this approach being considered as one of the first few comprehensive models, besides the Chronic Care Model [17], the and the Idealized Design of Clinical Office Practices [18], that address team-based primary care redesign [19]. Two extensive qualitative evaluations have been conducted outside of the United States. These describe higher staff morale, empowerment, commitment, and clarity of purpose resulting from this approach [20, 21]. A single U.S.-based article briefly describes inclusion of microsystems training as part of a collaborative primary care faculty development initiative, and suggests that it would do best when there is “shared accountability for relationship service and reliability across the three [primary care] disciplines.” [22] Given the unique characteristics of primary care in an academic setting (e.g., providers who perform clinical care part-time among other responsibilities and the presence of trainees), further understanding of the strengths and limitations of the Microsystems approach is needed.

From 2008 to 2014, a Microsystems approach was implemented with 58 primary care teams at a large Midwestern academic health care center that was aligning its primary care disciplines and embarking upon an ambitious primary care delivery system redesign. This study was designed to address the following questions: 1) what was the 6 and 12 month impact of the Microsystems approach on primary care team member perception of effectiveness and cohesion, quality improvement skills, and communication skills? 2) What challenges occurred during program implementation and were these able to be overcome? Given the importance of context in understanding quality improvement interventions, we also provide a detailed description of the delivery system and intervention in hopes of informing others embarking on similar system-wide multi-clinic primary care improvement efforts.

Methods

Description of the delivery system

At the launch of the redesign initiative in 2008, the academic health center consisted of three separate, legal organizations: UW School of Medicine and Public Health, UW Medical Foundation, and UW Hospital and Clinics. Clinics were owned and operated by one of these organizations but were collectively branded as UW Health. Forty-six primary care clinics were located throughout the state of Wisconsin, with the majority in the Madison area. There were 276 primary care physicians: 38 pediatricians, 62 general internists, and 156 family physicians who conducted ~ 649,000 primary care visits annually. Practices varied in size from 1 to 20 physicians.

The impetus for redesign

The three chief executives launched the UW Health Primary Care Redesign initiative, an ambitious effort to transform delivery of primary care within the entire system regardless of clinic location, primary care specialty or ownership [23]. The need to radically transform care delivery arose from access issues within primary care and lagging clinical quality performance compared to other health systems in the state as publicly reported by the Wisconsin Collaborative for Healthcare Quality, a voluntary performance reporting system (www.wchq.org). These issues reached a critical state when a new provider group entered the local market and successfully recruited several faculty physicians. This acute loss of established physicians coupled with the national difficulty of attracting new physicians to primary care created a short term access crisis that prompted a focus on transforming the primary care system to achieve what is now termed the quadruple aim [24].

Guiding principles and vision

The initiative was guided by evidence-based principles for transformative change [25,26,27]. UW Health leaders in quality and safety had developed a framework for change based on redesign principles from the Institute of Medicine (IOM) and organizational behavior theory that was used to organize its large-scale change initiatives [28]. Kotter’s 8-steps for organizational transformation provided guidance for change over time [25]. A governance structure, the Primary Care Steering Committee, was created consisting of physicians and administrative leaders responsible for primary care. A vision for the transformed primary care system was created by a broad representation of front-line clinicians and staff working collaboratively with senior leadership and communicated widely throughout the organization. The academic health system’s Health Innovation Program was created to integrate healthcare research and practice. In support of this mission, health services researchers were included on the Initiative’s strategy oversight and implementation teams. Researchers led the study design for the evaluation including collection of qualitative data that was rapidly analyzed and shared with operational leaders in ‘near real time,’ providing important findings that informed future implementation strategies.

The microsystems approach

The Dartmouth Clinical Microsystem curriculum was considered by the Steering Committee to be the framework that best fit existing organizational strengths in process improvement education and its readiness to address change at all levels of the health system. Prior to implementation of this approach, primary care clinic leaders had participated in structured process improvement education incorporating concepts from various models including the Institute for Healthcare Improvement (IHI) model of improvement, systems thinking, and Lean Six Sigma concepts [29,30,31]. The clinical microsystem approach specifically was selected because it allowed for those who were affected by the change to design the change by equip** front-line care team members and administrative managers with common team-building and improvement skills useful for redesigning care processes.

In this approach, a microsystem is defined as a small care unit consisting of a care team, its panel of patients, and the core processes that produce its patterns and norms [4]. Within the organization, a primary-care microsystem generally consisted of a physician (or several part-time physicians), a receptionist, registered nurse and other clinical staff (e.g. medical assistant, licensed practical nurse) and often included an advanced practice provider and residents. Figure 1 depicts these organizational levels.

The microsystems program

Table 1 provides a summary of program goals, components and involvement. As shown, program activities consisted cohort learning sessions, team meetings, and practice coaching. During these activities teams learned how to continuously assess their microsystems. Data analysis and team input drove the establishment of improvement aims and implementation of interventions. Initially aims could be developed in any area, but by the third cohort microsystem teams were asked to focus improvement efforts on specific areas (e.g. access) that were aligned with organizational priorities and supported by organizational resources.

Coaches provided ongoing teaching on how to follow the FOCUS (Find-Organize-Clarify-Understand-Select) PDCA (Plan-Do-Check-Act) improvement process and apply appropriate improvement tools. The coach also helped facilitate team building, team communication and patient engagement. After 6 months, coaches met their teams with decreasing frequency to reinforce skills and by 12 months transitioned contact to an as-needed basis.

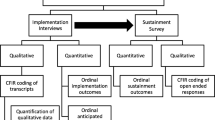

Program evaluation design

The overall objectives of this concurrent mixed-methods evaluation was to understand: 1) the short term and sustained impact of the program on team effectiveness and cohesion, quality improvement skills, and communication skills; 2) What challenges occurred during program implementation and were these able to be overcome? The multidisciplinary evaluation team consisted of physicians and staff skilled in quality improvement methodologies, mixed and qualitative research methods, programs evaluation, and statistics. Consistent with common use of mixed-methods in dissemination and implementation science [32], for our first question we used a quantitative method, a longitudinal survey, to examine deductively the outcomes of the implementation with breadth across team member participants. For our second question, we employed multiple qualitative methods, individual and group interviews/discussions, to examine inductively with depth the implementation context and process by sampling multiple participants and implementers during the first two cohorts (when significant challenges were expected to be discovered), and program leaders across the entire implementation period. Individual interviews were used for participants to enhance confidentiality, and group interviews were used for implementers and leaders in order to capture the range of opinions and agreement. These concurrent methods received equal weight (QUAN + QUAL) and were mixed for complementarity. Throughout the study period because our research was closely embedded within the implementation process, we used an empowerment evaluation approach [33], which aims to aid organizations in improving their innovation during the implementation process through sharing and discussing data and encouraging reflection. Therefore, during the program we collected and reported to program leadership findings about challenges from the qualitative data near “real time” and reported the analyzed quantitative outcome data every 6 months. As described further below, the summative evaluation in this paper presents the quantitative longitudinal outcomes, context and resolved and ongoing challenges analyzed from the qualitative data across the multi-year program.

Data collection

Quantitative evaluation consisted of an electronic survey sent to all team member participants at baseline, 6, and 12 months. Across all cohorts and time periods, 15 items assessed perceptions in the following domains: organizational support, team cohesion and effectiveness, quality improvement and meeting skills, and communication effectiveness. A single question assessed overall satisfaction with the program at 6 and 12 months. Responses were generally on a five-item Likert scale (strongly agree, agree, neutral, disagree, strongly disagree). Two survey items about patient engagement were analyzed for a separate publication [34] and thus these findings are not presented. Several survey items were taken from prior Microsystems tools [28, 35] while others were created or adapted for the local context. These adaptations reflected the local purpose or specific leadership roles and structures (e.g. “to make my work meaningful” adapted to “to be effective in my job”; “clinic manager support”; “physician leader communication”).

These data were augmented by individual interviews, focus groups and facilitated stakeholder group discussions led by the first author or a masters-level researcher trained in qualitative methods. Interviews were generally conducted on-site and lasted 15–75 min. During the first and second cohort (2009–2010), 69 team members from 11/17 teams were interviewed. Program leaders informed the selection of these teams using a maximum variation approach by varying primary care specialty, location (rural or urban), and degree of engagement in program activities. All team members who were present on the days that the research team was on-site were interviewed. Because teams microsystems varied in membership, interviewees came from a variety of roles: 14 physicians (3 residents), 6 Advanced Practice Providers, 8 Administrative supervisors, 1 Pharmacist, 7 Registered or Licensed Practical Nurses, 17 Medical Assistants/Certified Nursing Assistants, 4 Schedulers, 4 Radiology, Laboratory or Medical Technicians, and 8 Receptionists. Additionally, two one-hour clinic manager and program leader focus groups and one coach focus group were held. Lastly, four longitudinal, facilitated structured discussions about ongoing lessons learned were held with stakeholders responsible for program development, implementation, and evaluation. Six participants requested that their interview not be recorded. In these cases, handwritten notes were taken during the interview and narrative summaries were constructed immediately afterwards. For the remaining 63 interviews, all focus groups, and structured discussions, audiota** and transcription occurred.

Data analysis

Survey data were analyzed with STATA version 14 software (StataCorp, College Station, Texas) employing ordinal logistic regression adjusting for role (Physician/APP/Pharmacist, nurse, medical assistant, receptionist, other) with time as an indicator variable and a robust estimate of the variance that accounted for clustering by clinic and cohort. Analyses compared individual aggregate responses at 6-months and 12-months to those at the baseline period and were considered significant at p < 0.05. Missing data, ranging from 3 to 5% per question, were dropped after examination revealed no systematic pattern.

Qualitative analyses employed an immersion/crystallization approach [36]. Two individuals skilled in qualitative analysis independently read all transcripts and summaries, understanding each clinic team as a case and paying particular attention to any text that identified implementation challenges and solutions. This text was transferred to a structured matrix in Excel that was organized by both clinic site, cohort, and role. Text was examined line by line and coded to identify the type of challenge (e.g. time, knowledge, technology, motivation, spread) and attempted solution. These codes were aggregated into themes by topic (e.g staffing and support, participation motivators, sustainment), and the researchers met weekly to discuss these themes. Because of the participatory aspect of the evaluation, researchers also triangulated quantitative findings with the qualitative themes that emerged across the different levels of data by role (individual team members, managers, coaches, and program leadership). This allowed program implementers to make adaptations to the intervention and involve those that were experiencing the challenge. Member checking occurred through regular public presentation of findings back to participating individuals and leadership as part of the participatory approach to the evaluation.

Results

Table 2 provides examples of successful team projects by specialty.

Survey results

204/257 (79%) of individuals completed the baseline survey, with responses from all 58 teams. Across cohorts of teams receiving Microsystems training, response rates ranged from 49 to 92% at baseline, 45–77% at 6 months, and 52–81% at 12 months. The mean (standard deviation) response to the question “participating in the Microsystems program has been a worthwhile experience” was 3.83 (0.91) at 6 months and 3.80 (0.90) at 12 months, with responses on a scale of 5 = strongly agree, 4 = agree, 3 = neutral, 2 = disagree, 1 = strongly disagree.

Table 3 displays longitudinal aggregate mean responses to the remaining questions organized by domain. In terms of organizational support, respondents did not perceive a significant change in their clinic manager’s support of their microsystems work (4.10 (mean), baseline (time point); 4.21 6 months; 4.25, 12 months). At 12 months compared to baseline, there were statistically significant increases in microsystem team member’s perceptions of having the tools and equipment needed to be effective (3.32, baseline; 3.50, 6 months; 3.66, 12 months) and useful knowledge and information about their patient population (3.38, baseline; 3.69, 6 months; 3.74, 12 months).

Respondents perceived high team effectiveness and cohesion from the outset of the program. Two items showed statistically significant improvements at 6 months—the ability to solve difficult tasks if effort was invested (3.94, baseline; 4.19, 6 months) and having a strong bond with team members (3.90, baseline; 4.16, 6 months). Items that did not show significant changes queried about group capability to perform tasks well, manage unexpected troubles, and competency to make team level improvements.

Also as shown in Table 3, responses indicate that team members perceived significant gains over baseline at 6 months and 12 months in all five quality improvement skills queried: knowing the top processes needing improvement, using data to identify improvement activities, creating a focused meeting agenda, serving in different team roles and knowing the purpose of the team. Respondents also perceived significant gains in effectively communicating their work to the manager (3.13, baseline; 4.01, 6 months; 4.00, 12 months) and other teams in the practice (2.96, baseline; 3.48, 6 months; 3.40, 12 months).

Qualitative results

Several key challenges to the success of the program were identified by teams and clinic leadership during the implementation process. For many of these, the organization was able to respond to Microsystem program needs and develop specific solutions; Table 4 describes challenges where a solution was implemented, along with a representative quote. These included: lack of time and coverage for program participation, need for technical/technology support, lack of perceived value for improvement work, and difficulty spreading learnings.

However, additional challenges persisted. These challenges are described below including: tensions between team-and clinic-level change, part-time workforce, team instability, challenge of a structured QI approach, and difficulties aggregating learnings.

Tensions between team and clinic level change

Coaches, who could structure meetings and teach improvement skills, lacked the operational authority of clinic managers who are responsible for daily operations and clinic-wide performance. These differing positions led to conflicts, particularly when a team’s improvements were viewed as too disruptive to existing clinic workflow or culture. Additionally, for the first two cohorts, leadership discouraged clinic managers from being present at team meetings out of concerns that this would decrease front-line quality improvement learning and innovation. This independence was a major shift in culture. As one manager explained: “I think the more uniformity you have, the more people can adhere to what the expectations are rather than having one group doing this and one group doing that- and then trying to manage that…is really difficult.”

Part-time workforce

The Microsystem approach was designed for single physician teams who engaged in accomplishing QI goals throughout the week in between weekly meetings. However, in this organization many of the clinicians and staff were part-time. This created difficulty in figuring out how to equitably divide the between-meeting work. It was also difficult to schedule weekly meetings so that the entire team could attend. As a medical assistant explained, “Four of us are part-time workers so it means that every week somebody comes in on their day off to work on microsystems.” Lastly, situations arose where a part-time staff member received training as part of one team, and then was also part of a team that in a future training cohort. It was unclear if and how much of the training should be repeated in this circumstance.

Team instability

Turnover occurred particularly within certain positions (e.g., medical assistants). Their replacements were added to the team because it made practical sense given that they were part of the natural microsystem. However, bringing on someone new was perceived as difficult because there was no one clearly responsible for on-boarding, and understanding the program required time. As a team member explained, “When you do have a new employee training for Microsystems we can take time out of our team meeting to train, but we’re not as productive and that puts somebody on the spot-- the new trainee.” Also, some physicians changed their clinical FTE during the program. Residents additionally had variables schedules. This led to shifts and difficulties in scheduling team meetings and/or variable attendance at meetings.

Implementing a data-driven QI approach

The Microsystems approach required teams to spend time evaluating their microsystem in a data-driven process and learning a standard language and set of QI tools. Teams could not select improvement foci based solely on perceptions of what should be addressed-this gap had to be demonstrated with data. This process felt cumbersome to several team members because collected data did not always capture the ‘stories’ that impact individual Microsystems. As one put it, “You need something for people to grab on to, feel some success with and then maybe you can understand the process better. Just going in and trying to understand the process and then picking something to do [is] harder to do than having some ideas and seeing if you can’t fit them into the process.”

Difficulty aggregating learnings

Despite the directive to teams to focus on organizational improvement priorities (e.g., access), variations in clinic structure, specialty, and culture made it difficult to spread successful improvement practices. Teams that successfully improved quality documented their approaches in a “playbook.” However, there was not a standard mechanism or clear accountability for spreading these playbooks to teams at the same delivery site or to other clinics in the organization. Operational leaders concluded that the process of disseminating Microsystem-discovered improvements system-wide was “too long and costly.” In contrast, the organization was achieving significant gains in quality measures using a “top down” approach: designing improved processes and structures centrally, testing them with Microsystems trained teams, and spreading these improved processes across clinics with local leadership support [28]. As one leader pointed out, “Develo** the Microsystems program was not sufficient in and of itself to achieve organizational transformation….we had to address all levels of change, not just the patient and care team.”

Integrating quantitative and qualitative results

From the survey findings, team members perceived the program provided them with additional support- data, knowledge, equipment and improved communication. The in-depth interview findings did not contradict these findings. However, leaders did not measure the success of the program according to these metrics. Instead, the focus was on resource use, rapidity of change, and scalability.

Discussion

This paper describes and evaluates the multi-year experience of a health system that implemented a Microsystem approach to primary care redesign in pursuit of the quadruple aim. The program significantly increased perceived QI skills and bonds between team members. However, it required adjustments in response to implementation barriers such as the need for dedicated time, coverage, and technical support. Significant challenges persisted due to the nature of primary care in an academic health center including part-time clinicians, turnover, and competing responsibilities.

Our findings suggest important considerations for health systems that are embarking on systematic approaches to redesign in pursuit of high value care. Similar to existing literature [20, 21] we found the microsystems approach improved relationships and clarity of purpose at the team level. However, the resources required to implement, maintain, and disseminate the program were formidable. Although implemented in a setting dedicated to holistic change across primary care disciplines [22], this approach alone was insufficient to achieve transformative primary care redesign in a time frame that was acceptable to the organization.

Operational leaders need to balance standardization of medical best practices with the variation present across clinics within a health system. Doing so requires stakeholder input, and the Microsystem approach may promote buy-in with front line clinicians and staff. Implementation of this program also did lead to important information for leadership as it continued to embark on primary care redesign. Variation in team processes, culture, structure, skills and knowledge were uncovered through this program. For example, variation across the system (e.g., union versus non-union staff, years using an electronic health record (EHR)) contributed to clinic-to-clinic variation in the Microsystem experience. Insights into these variations provided critical input to future work that standardized primary care team composition and care models [23], developed a training program for clinic dyad leaders (physicians and managers), and focused on system-level supports (e.g., optimal use of EHR [37], enterprise-wide care guidelines).

The health system’s Microsystem program was ended and replaced by a centralized QI approach that simultaneously addressed change at all levels of the health system, including but not exclusively focused on the microsystem level. With the prior focus on the microsystem, teams were not empowered to make changes at the organizational level. They could only improve processes within the constraints of existing system-wide structures such as EHR functionality or centralized calling centers. In the shift to a more centralized approach, multi-disciplinary teams with meso- and macro-leaders were able to improve infrastructure which ‘made it easy’ for microsystem teams to achieve improvements in care. Primary care initiatives continued to be piloted with teams that had received microsystems training. These teams had achieved a cultural shift and understood the need for data-driven change. They also had regular practice in using quality improvement skills. They were able to apply their testing skills to inform the design of final system standards [28]. As a consequence of these whole-system redesign efforts directed at improving preventive and chronic care, teams were able to achieve significant gains in publicly-reported primary care performance metrics across primary care clinics. Implications of our findings for others both in the United States and elsewhere is the critical importance of providing a clear process of quality improvement education across all stakeholder levels – leadership, operational management, and teams – in order to increase engagement in the change process, improve communications and clarity of purpose, and develop a culture that is able to improve outcomes. One increasingly popular approach in primary care is the Lean management system, which can empower staff at all levels, promote teamwork [38], and lead to an innovation culture [39]. Recent studies of factors leading successful implementation of the Lean approach reveal the crucial role of leadership [40, 41], also suggesting a different entry point than the microsystems level in the quest for transformative change.

Our evaluation is subject to several strengths and limitations that should be taken into account when examining these findings. Strengths include relatively high participation rates in data collection over multiple time points, a multi-year implementation across several teams, and extensive qualitative data collection employing multiple methods and frequent member checking that increased suggestion of the validity of our results. Limitations include the transferability of results from practices affiliated with a Midwestern Academic Health Center, the focus on evaluation of the implementation rather than clinical outcomes, missing data from survey or item nonresponse over time, the multiplicity effect of assessing multiple outcomes, and use of aggregate rather than individually linked responses. Future research should consider evaluating differences and similarities in the implementation and sustainability of primary care practice quality improvement interventions across different practice settings and sizes. However, our implementation study in an academic setting with education, research and clinical missions is likely representative of other settings where multiple priorities compete for limited resources. Similar to other studies [21], our experience identified important limitations to implementing clinical microsystems which may limit the feasibility of this method in widespread primary care redesign efforts.

Conclusion

These findings suggest that a microsystems approach is valuable for building team relationships and quality improvement skills but is challenged in a large, diverse academic primary care context. Transformative change requires purposeful redesign across the entire system [42]. Microsystem investment alone may facilitate change but is not sufficient to respond to the challenges of a rapidly changing health care environment and the need to compete in value-focused markets.

Abbreviations

- EHR:

-

Electronic health record

- QI:

-

Quality improvement

References

Washington AE, Coye MJ, Feinberg DT. Academic health centers and the evolution of the health care system. JAMA. 2013;310:1929.

Dzau VJ, Cho A, ElLaissi W, Yoediono Z, Sangvai D, Shah B, et al. Transforming academic health centers for an uncertain future. N Engl J Med. 2013;369:991–3.

Miller HD. Making Value-Based Payment Work for Academic Health Centers. Acad Med. 2015;90:1294–7.

Nelson EC, Batalden PB, Huber TP, Mohr JJ, Godfrey MM, Headrick LA, et al. Microsystems in health care: part 1. Learning from high-performing front-line clinical units. Jt Comm J Qual Improv. 2002;28:472–93.

Quinn JB. Intelligent Enterprise: a knowledge and service based paradigm for industry. 1st ed. New York: Toronto: Free Press; 1992.

The Dartmouth Institute | Microsystem Academy. http://www.clinicalmicrosystem.org/. Accessed 19 Sep 2018.

Anderson D, Khatri K, Blankson M. Using clinical microsystems to implement care coordination in primary care. J Nursing Care. 2015;4:1–8.

O’Dwyer C. The Introduction of Clinical Microsystems into an Emergency Department. 2014. p. 126.

Tess AV, Yang JJ, Smith CC, Fawcett CM, Bates CK, Reynolds EE. Combining clinical microsystems and an experiential quality improvement curriculum to improve residency education in internal medicine. Acad Med. 2009;84:326–34.

von Plessen C, Aslaksen A. Improving the quality of palliative care for ambulatory patients with lung cancer. BMJ. 2005;330:1309–13.

Bobb JF, Lee AK, Lapham GT, Oliver M, Ludman E, Achtmeyer C, et al. Evaluation of a pilot implementation to integrate alcohol-related care within primary care. Int J Environ Res Public Health. 2017;14:1030.

NHS England » General Practice Forward View. https://www.england.nhs.uk/gp/gpfv/. Accessed 19 Sep 2018.

Union PO of the E. A new drive for primary care in Europe : rethinking the assessment tools and methodologies : report of the expert group on health systems performance assessment. 2018. https://publications.europa.eu/hu/publication-detail/-/publication/82541e0b-3c6e-11e8-b5fe-01aa75ed71a1. Accessed 19 Sep 2018.

Breton M, Smithman MA, Touati N, Boivin A, Loignon C, Dubois C-A, et al. Family physicians attaching new patients from centralized waiting lists: a cross-sectional study. J Prim Care Community Health. 2018;9. https://doi.org/10.1177/2150132718795943.

van Weel C, Kassai R. Expanding primary care in south and East Asia. BMJ. 2017;356:j634.

Mossman K, Onil Bhattacharyya MD, McGahan A, Mitchell W. Expanding the reach of primary Care in Develo** Countries. Harv Bus Rev. 2017; https://hbr.org/2017/06/expanding-the-reach-of-primary-care-in-develo**-countries. Accessed 19 Sep 2018.

Wagner EH, Austin BT, Von Korff M. Improving outcomes in chronic illness. Manag Care Q. 1996;4:12–25.

Berwick D, Kilo C. Idealized design of clinical office practice: an interview with Donald Berwick and Charles kilo of the Institute for Healthcare Improvement. Manag Care Q. 1999;7:62–9.

Kilo CM, Wasson JH. Practice redesign and the patient-centered medical home: history, promises, and challenges. Health Aff. 2010;29:773–8.

Kollisch DO, Hammond CS, Thompson E, Strickler J. Improving family medicine in Kosovo with microsystems. J Am Board Fam Med. 2011;24:102–11.

Williams I, Dickinson H, Robinson S, Allen C. Clinical microsystems and the NHS: a sustainable method for improvement? J Health Organ Manag. 2009;23:119–32.

Carney PA, Eiff MP, Green LA, Carraccio C, Smith DG, Pugno PA, et al. Transforming primary care residency training: a collaborative faculty development initiative among family medicine, internal medicine, and pediatric residencies. Acad Med. 2015;90:1054–60.

Koslov S, Trowbridge E, Kamnetz S, Kraft S, Grossman J, Pandhi N. Across the divide: “primary care departments working together to redesign care to achieve the triple aim.”. Healthcare. 2016;4:200–6.

Bodenheimer T, Sinsky C. From triple to quadruple aim: Care of the Patient Requires Care of the provider. Ann Fam Med. 2014;12:573–6.

Kotter JP. Leading change: why transformation efforts fail. Harv Bus Rev. 1995;73(2):59–67.

Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington (DC): National Academies Press (US); 2001. http://www.ncbi.nlm.nih.gov/books/NBK222274/. Accessed 19 Sep 2018

Shortell S, Kalunzy A. Organization theory and health services management. In: Health care management: organization design and behavior. 4th ed. Delmar: Cengage Learning; 2011.

Kraft S, Carayon P, Weiss J, Pandhi N. A simple framework for complex system improvement. Am J Med Qual. 2015;30:223–31.

Institute for Healthcare Improvement: How to Improve. http://www.ihi.org:80/resources/Pages/HowtoImprove/default.aspx. Accessed 19 Sep 2018.

Heuvel JVD, Does RJMM, Koning HD. Lean six sigma in a hospital. Int J Six Sigma Competitive Advantage. 2006;2:377.

Lane DC, Oliva R. The greater whole: towards a synthesis of system dynamics and soft systems methodology. Eur J Oper Res. 1998;107:214–35.

Brownson RC, Colditz GA, Proctor EK. Editors. Dissemination and implementation research in health: translating science to practice. 1 edition. Oxford. New York: Oxford University Press; 2012.

Fetterman DM. Empowerment Evaluation. Am J Eval. 1994;15:1–15.

Davis S, Berkson S, Gaines ME, Prajapati P, Schwab W, Pandhi N, et al. Implementation science workshop: engaging patients in team-based practice redesign — critical reflections on program design. J Gen Intern Med. 2016;31:688–95.

Reason P, Bradbury-Huang H, editors. The SAGE handbook of action research: participative inquiry and practice. Second ed. London: SAGE publications Ltd; 2013.

Crabtree BF, Miller WL. Doing qualitative research. 2nd ed. Thousand Oaks, Calif; London: SAGE; 1999. https://trove.nla.gov.au/version/7365559. Accessed 19 Sep 2018

Pandhi N, Yang W-L, Karp Z, Young A, Beasley JW, Kraft S, et al. Approaches and challenges to optimising primary care teams’ electronic health record usage. J Innovation in Health Informatics. 2014;21:142–51.

Hung DY, Harrison MI, Truong Q, Du X. Experiences of primary care physicians and staff following lean workflow redesign. BMC Health Serv Res. 2018;18:274.

Henrique DB, Filho MG. A systematic literature review of empirical research in lean and six sigma in healthcare. Total Qual Manag Bus Excell. 2018;0:1–21.

Laureani A, Antony J. Leadership – a critical success factor for the effective implementation of lean six sigma. Total Qual Manag Bus Excell. 2018;29:502–23.

van Assen MF. Exploring the impact of higher management’s leadership styles on lean management. Total Qual Manag Bus Excell. 2018;29:1312–41.

Berwick DM. A User’s manual for the IOM’s ‘quality chasm’ report. Health Aff. 2002;21:80–90.

Acknowledgements

The authors would like to thank participants and leaders of the Microsystem program, and Zaher Karp, Amy Irwin, **nyi Wang and Wan-Lin Yang for data collection and analysis support.

Funding

The project described was supported by the Clinical and Translational Science Award (CTSA) program, previously through the National Center for Research Resources (NCRR) grant 1UL1RR025011, and now by the National Center for Advancing Translational Sciences (NCATS), grant 9U54TR000021. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health, the Department of Veterans Affairs, or the United States government. This project was also supported by the University of Wisconsin Carbone Cancer Center (UWCCC) Support Grant from the National Cancer Institute, grant number P30 CA014520. Additional support was provided by the UW School of Medicine and Public Health from the Wisconsin Partnership Program.

Availability of data and materials

In order to protect the anonymity of the participants, only de-identified data will be available from the corresponding author.

Author information

Authors and Affiliations

Contributions

NP, SK and SB formulated the questions for study. NP and SB collected qualitative data. NP led all analyses. NP and SK drafted the manuscript. SD, SK, ET and WC assisted in review of the analyses and interpretation of data. All authors participated in critically revising the manuscript and approved the final version to be published.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

These activities received an exemption from the University of Wisconsin-Madison institutional review board.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Pandhi, N., Kraft, S., Berkson, S. et al. Develo** primary care teams prepared to improve quality: a mixed-methods evaluation and lessons learned from implementing a microsystems approach. BMC Health Serv Res 18, 847 (2018). https://doi.org/10.1186/s12913-018-3650-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12913-018-3650-4