Abstract

In real-world materials research, machine learning (ML) models are usually expected to predict and discover novel exceptional materials that deviate from the known materials. It is thus a pressing question to provide an objective evaluation of ML model performance in property prediction of out-of-distribution (OOD) materials that are different from the training set. Traditional performance evaluation of materials property prediction models through random splitting of the dataset frequently results in artificially high performance estimates due to the inherent redundancy of typical material datasets. Here we present a comprehensive benchmark study of structure-based graph neural networks (GNNs) for extrapolative OOD materials property prediction. We formulate five different categories of OOD ML problems for three benchmark datasets from the MatBench study. Our extensive experiments show that current state-of-the-art GNN algorithms significantly underperform on the OOD property prediction tasks on average compared to their baselines in the MatBench study, demonstrating a crucial generalization gap in realistic material prediction tasks. We further examine the latent physical spaces of these GNN models and, as a case study on the perovskites dataset, identify the sources of the significantly more robust OOD performance of CGCNN, ALIGNN, and DeeperGATGNN compared with the current best models in the MatBench study (coGN and coNGN), and provide insights to improve their performance.

Introduction

Machine learning (ML)-based models have swiftly emerged as the state-of-the-art (SOTA) performers in a wide range of materials informatics problems such as materials property prediction1,2,3,4,5,6,7, crystal structure prediction8,9,10,11,12, material generation13,14,15,16, high-throughput screening17,18, and inverse design of materials19,20. Among these, one of the most exciting applications of ML-based models is predicting various properties of materials from their compositions or structures. Composition-based ML models have shown limited prediction performance21,22,23, as most material properties are highly dependent on crystal structure. Recent research has demonstrated that structure-based deep learning (DL) models can achieve significantly better accuracy in predicting materials properties than methods that rely exclusively on composition descriptors24,25,26. In particular, graph neural network (GNN) models have been widely used for this purpose due to their demonstrated effectiveness in this task3,4,24,27,28. This is because GNNs excel at capturing the local environment of each atom by considering its neighboring atoms and their interactions, which is crucial in determining the macro-properties of a material29,30,31,32,33.

Several benchmark studies have been conducted to evaluate the performances of existing ML methods24,26,34,35. Fung et al.24 presented a comprehensive benchmark of SOTA GNN models evaluated across multiple diverse problems, while Varivoda et al.35 presented a benchmark study of ML models for property prediction with uncertainty quantification. Both benchmarks overlooked the inclusion of OOD test sets, compromising their applicability for real-world materials property prediction. Dunn et al.26 presented the Matbench benchmark test suite and an automated procedure for evaluating ML models for predicting material properties. This benchmark contains nine distinct structure-based property prediction tasks. Remarkably, the SOTA GNN model coGN36 has consistently demonstrated superior performance with an MAE of 0.017 eV for formation energy prediction and 0.156 eV for bandgap prediction, while the top positions on the leaderboard37 for all nine tasks are all secured by structure-based GNN models. However, the excellent performances of these GNN models are overestimated, as verified by our work. We find that the reported superior performances of SOTA models in the MatBench study originated from their performance evaluation method, in which an entire dataset is randomly split into five training and test folds leading to high similarity between both sets due to the high sample redundancy of materials databases38. This dataset redundancy in material databases such as ICSD39, Materials Project40, OQMD41, and AFLOW42, is caused by the historical iterative tinkering process of experimental material discovery and accumulation, which tends to generate many materials with high similarity. Moreover, studies have revealed that current ML models have low generalization performance on material datasets for test samples with different data distributions, and their performances are frequently overestimated because of high dataset redundancy34,38,43,44,45. 
Li et al.44 discovered that ML models trained on Materials Project 2018 data may experience a significant decline in performance when applied to new materials introduced in Materials Project 2021 data, primarily due to a shift in data distribution. Consequently, the high prediction performance reported on the Matbench test sets rests on the assumption that the test samples are homogeneous with the training data in terms of composition, structure, or properties and are randomly distributed within the entire dataset space. This evaluation approach, guided by the assumption of independent and identically distributed (i.i.d.) data, is inadequate for estimating model performance in real-world material discovery applications. In practical scenarios, ML models are often used to discover or screen outlier materials that deviate from the distribution of the training set and whose properties need to be predicted. Moreover, in real-world situations, researchers are commonly more interested in a limited number of outlier materials that differ from the known material compositions or structures, referred to as OOD materials. These materials could be located in a chemical space with limited known counterparts, or they might display exceptionally high or low property values38. To our knowledge, an evaluation of ML-based material property prediction performance in these particular situations was provided neither in the MatBench study nor elsewhere in the literature.

Recently, within the domain of ML, researchers started to extensively investigate OOD generalization resulting from changes in data distribution between the original and target domains, primarily in the context of transfer learning46, domain generalization47,48, causal learning49, and domain adaptation50. This shift in distribution is a key concern in these areas. Most of these methods are still unexplored for improving the material property prediction performance in OOD materials. To our knowledge, there has not been a comprehensive benchmark study of ML models for OOD property prediction of inorganic materials in general. Another shortcoming of current ML approaches for material property prediction is that the ML models are usually trained without considering the distribution of the test set. In practical materials property prediction tasks, the compositions or structures of the target materials are already available, which can be and should be used as guidance information for training better ML models for property prediction of these target materials38. Furthermore, Schrier et al.51 highlighted that material scientists usually prioritize investigating the properties of new materials with unique compositions or characteristics, which frequently gives rise to challenging OOD ML problems. This underscores the critical need for a systematic investigation into the prediction problem of the properties of OOD materials.

Due to the sheer dominance of GNNs in the MatBench study, this work presents a benchmark study of GNN-based OOD materials property prediction. Our work is complementary to the benchmark study by Fung et al.24 on GNNs’ performance on materials property prediction; however, their analysis did not consider OOD materials as test sets. Although several works have addressed the OOD topic in general48,52,53,54, including a similar benchmark55 for organic materials, a benchmark study of structure-based GNNs for inorganic materials in general was still needed. Previous related works38,45,56,57 do contain some level of OOD prediction for ML models, but no prior work provides a complete benchmark of structure-based GNNs for inorganic materials in general or identifies issues with current popular benchmarks. Kauwe et al.56 tested only two simple ML models (SVM and logistic regression) in a composition-based extrapolation performance study. Xiong et al.34 provided only a single method to split the data to identify outlier materials, whereas our current paper proposes five different methods to generate outlier test sets (see the “OOD test set generation” subsection). Additionally, they experimented with only one GNN model, whereas we consider eight different SOTA GNN models. Meredig et al.45 provided two different methods for OOD target generation, but these are based solely on the elemental composition of the materials and do not apply to structure-based models, which are the current SOTA performers. OC2057 and OC2258 both presented clearly defined data-splitting methods (ID/OOD) for catalyst datasets. However, they did not include the SOTA models for materials property prediction (ALIGNN, DeeperGATGNN, coGN, and coNGN) in their benchmarks, which leaves their results open to criticism, and their benchmarks concern catalyst datasets rather than general inorganic materials.
They sampled their splits based on catalyst composition (ID for samples from the same distribution as the training set, and OOD for unseen catalyst compositions) and did not provide any structure-based target set clustering as in our work. Moreover, they treated any data that is unseen (not in the dataset) as OOD, even though such data can come from the same distribution as their ID set, which is a significant issue. Hu et al.38 proposed five target generation methods based on composition-based descriptors, but did not define a method to generate OOD targets for evaluating structure-based ML models. Our target generation methods handle crystal structures by incorporating structure-based descriptors such as OFM for the clustering, which was missing from their work. Furthermore, none of the previous studies systematically provided insight into the current bottlenecks of GNNs for OOD materials property prediction. Although Choudhary and Sumpter59 demonstrated that a GNN model trained solely on perfect materials is capable of predicting vacancy formation energies (Evac) in defective structures without extra training data, their findings were not generalized or tested across multiple datasets, models, and target material properties. Our benchmark study addresses these limitations. In particular, our work focuses on effectively predicting properties of minority or outlier material clusters that exhibit different distributions compared to the training set, which is the core issue of OOD ML. A general framework of our benchmark study is presented in Fig. 1. Details about the datasets and the OOD target generation methods can be found in the next section. Our contributions are summarized as follows:

- We proposed a set of OOD material property prediction benchmark problems for three datasets from the MatBench study, where each category of OOD targets possesses unique characteristics, creating a more realistic and complex challenge for current SOTA GNN algorithms.

- Through comprehensive experiments on these OOD problems, we benchmarked GNN algorithms for property prediction on these OOD datasets. We showed that current GNN algorithms have limited generalization capabilities and are not well-suited for real-world OOD material property prediction tasks, with the exception of a few cases. In general, all the algorithms perform worse on average on the OOD test problems than their baseline performance in the original MatBench study, suggesting that methods like domain adaptation are needed to improve their OOD prediction performance.

- By delving into the physical latent spaces of the GNN models, we identified, as a case study on the perovskites dataset, possible reasons for the comparatively better performance of CGCNN, ALIGNN, and DeeperGATGNN and the subpar performance of the current top models in the MatBench leaderboard (coGN and coNGN).

First, we generate OOD test sets for the three datasets chosen, where we propose five different methods to split each dataset into 50 folds, ensuring the test set varies in distribution from the training set in each fold. Next, we perform preprocessing steps such as input representation, data scaling, etc. for the GNNs. Subsequently, we train the GNN models and compile the test set results. After that, we evaluate the performance over the 50 folds for each OOD target generation method. We conduct additional analyses on the obtained results, including investigating the physical latent spaces of the GNN models to understand their characteristics in predicting the properties of OOD materials.

Results

OOD benchmark problems, models and datasets

We analyzed eight GNN models for material property regression tasks using three datasets sourced from MatBench26 and mentioned in Table 1. Details about the GNN models can be found in the “Methods” section. The raw dataset details are shown in Table 2. For simplicity, we refer to the matbench_dielectric dataset as the ‘dielectric dataset’, the matbench_log_gvrh dataset as the ‘elasticity dataset’, and the matbench_perovskites dataset as the ‘perovskites dataset’.

In realistic scenarios, the test set often deviates significantly from the training and validation set in terms of distribution. Rather than applying conventional methods like random train-test splitting or k-fold cross-validation (which also relies on random splitting), we proposed five practical scenarios for predicting material properties. These scenarios are designed to focus on properties of less common or underrepresented materials in the dataset, which are often of particular interest to material researchers who are more interested in discovering novel exceptional outlier materials. For each raw dataset, we outlined five methods (see the next subsection for details) for selecting which samples from the sparse property or structure space will be designated as the target test samples. Overall, each target generation method generates 50 clusters, where each cluster has a different distribution from the others. As materials property prediction datasets are relatively small datasets, whereas the number of GNN parameters varies from ≈1.8–6 million, we opted for a higher number of folds for our work. To efficiently train these GNN models, we need a decent amount of training samples. If we used a small number of folds, then each model would have been trained on a smaller training set and tested on a larger test fold. Here, the potential for the data distribution in the test fold to differ from the training set will be bigger, and we thus expect a higher prediction error on average60. In contrast, using a higher number of folds (≈30–50) means that each model will be trained on a larger training set and tested on a smaller test fold which leads to a lower prediction error as the models see more of the available data60. For each fold, we selected a cluster as the test set, and the rest as training and validation sets, and averaged the results over 50 folds to get the final result.
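The fold protocol described above (hold out one cluster, train on the rest, average the error over all folds) can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function names, the toy data, and the mean-value placeholder model are all hypothetical, and the separate validation split mentioned in the text is omitted for brevity.

```python
import numpy as np

def leave_one_cluster_out_mae(X, y, labels, fit_predict):
    """Hold out each cluster in turn; return per-fold MAEs and their mean."""
    fold_maes = []
    for c in np.unique(labels):
        test = labels == c          # one cluster is the test fold
        train = ~test               # everything else is used for training
        y_pred = fit_predict(X[train], y[train], X[test])
        fold_maes.append(float(np.mean(np.abs(y_pred - y[test]))))
    fold_maes = np.array(fold_maes)
    return fold_maes, float(fold_maes.mean())

def mean_baseline(X_train, y_train, X_test):
    # Placeholder "model" (an assumption for illustration only):
    # predicts the training-set mean for every test sample.
    return np.full(len(X_test), y_train.mean())

# Toy data standing in for features and property values.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = X[:, 0] + 0.1 * rng.normal(size=200)
labels = np.arange(200) % 5         # toy fold assignment: 5 folds of 40 samples

fold_maes, mean_mae = leave_one_cluster_out_mae(X, y, labels, mean_baseline)
```

With 50 folds instead of 5, each training set covers a larger share of the data and each test fold is smaller, which is the trade-off the paragraph above argues for.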

OOD test set generation

In typical real-world scenarios, researchers are acquainted with their target materials of interest, often lacking labeled samples. In this work, we specifically concentrate on instances where the target set comprises no labeled samples. Accordingly, we propose the following target set generation methods to simulate real-world conditions for materials property prediction.

Leave-one-cluster-out (LOCO)

Meredig et al.45 proposed this approach in their assessment of the generalization performance of ML models for predicting material properties. First, we apply the k-means algorithm61 to the orbital-field matrix (OFM) features62 to partition the whole dataset into 50 clusters. OFM captures the electronic structure of materials by considering the interactions between orbitals, which are fundamental to understanding a material’s properties, and its features are easily interpretable due to their physical basis63. The OFM features were computed efficiently with the MatMiner23 package. Subsequently, we evaluate the models’ performance by iteratively using each of the clusters as the test set. Although this method improves on the widely employed random splitting by mitigating performance overestimation, it still incorporates all samples, including those located in densely redundant areas, and therefore remains susceptible to some degree of overestimation.
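As a rough sketch of how LOCO splits can be built, the snippet below clusters a feature matrix with a plain Lloyd's k-means written in NumPy and turns every cluster into one test fold. The k-means implementation, the function name `kmeans_labels`, and the random toy features (standing in for OFM vectors) are assumptions made for illustration; the source does not state which k-means implementation or settings were used.

```python
import numpy as np

def kmeans_labels(features, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; returns one integer cluster label per row."""
    rng = np.random.default_rng(seed)
    centers = features[rng.choice(len(features), size=k, replace=False)].copy()
    sq_feat = (features ** 2).sum(axis=1)
    labels = np.zeros(len(features), dtype=int)
    for _ in range(n_iter):
        # Squared Euclidean distances via |x|^2 - 2 x.c + |c|^2.
        d2 = (sq_feat[:, None] - 2.0 * features @ centers.T
              + (centers ** 2).sum(axis=1)[None, :])
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members):        # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return labels

rng = np.random.default_rng(1)
features = rng.normal(size=(300, 8))    # toy stand-in for OFM feature vectors
labels = kmeans_labels(features, k=5)   # the paper uses 50 clusters

# One (train_indices, test_indices) pair per cluster.
splits = [(np.flatnonzero(labels != c), np.flatnonzero(labels == c))
          for c in np.unique(labels)]
```

Each sample appears in exactly one test fold, so dense regions of the dataset are still evaluated, which is why LOCO can retain some of the overestimation discussed above.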

Single-point targets with the lowest structure density (SparseXsingle)

In this method, we begin by converting material structures into the 1024-dimensional OFM feature space. Subsequently, we apply t-distributed stochastic neighbor embedding (t-SNE)64 for dimension reduction, converting the OFM feature space to a 2D space (x-value). Following this, we calculate the density at each data point in the 2D space and select the 500 samples with the lowest density. The density is estimated with a Gaussian kernel density function applied to the t-SNE coordinates. We then apply k-means clustering to these chosen samples to group them into 50 clusters. Finally, we extract one sample from each cluster, yielding a test set with 50 target samples.
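The selection steps above can be sketched under several assumptions: the 2D embedding is taken as given (t-SNE itself is not reproduced here), the kernel bandwidth is fixed by hand, and the sample picked from each cluster is the one nearest the cluster center, since the text does not specify how that sample is chosen. All function names are hypothetical.

```python
import numpy as np

def gaussian_kde_density(points, bandwidth):
    """Unnormalised Gaussian kernel density at each point (O(n^2) memory)."""
    sq = (points ** 2).sum(axis=1)
    d2 = np.maximum(sq[:, None] - 2.0 * points @ points.T + sq[None, :], 0.0)
    return np.exp(-d2 / (2.0 * bandwidth ** 2)).sum(axis=1)

def kmeans(points, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means returning labels and cluster centers."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(n_iter):
        d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels, centers

def sparse_single_targets(embedding, n_sparse, n_targets, bandwidth=1.0):
    density = gaussian_kde_density(embedding, bandwidth)
    sparse_idx = np.argsort(density)[:n_sparse]      # lowest-density samples
    labels, centers = kmeans(embedding[sparse_idx], n_targets)
    targets = []
    for j in np.unique(labels):
        members = sparse_idx[labels == j]
        # Assumption: take the member closest to the cluster center.
        dist = np.linalg.norm(embedding[members] - centers[j], axis=1)
        targets.append(int(members[dist.argmin()]))
    return np.array(targets)

rng = np.random.default_rng(0)
embedding = rng.normal(size=(400, 2))   # toy stand-in for t-SNE coordinates
targets = sparse_single_targets(embedding, n_sparse=100, n_targets=10)
```

In the paper's setting the corresponding values would be 500 sparse samples and 50 targets; the pairwise-distance density used here is only practical for small toy inputs.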

Single-point targets with the lowest property density (SparseYsingle)

In this method, we follow the preprocessing method of SparseXsingle, where all structures are converted into 1024-dimension OFM features. Following this, we sort the samples based on their property values (y-value). This process estimates the density of y-values using kernel density estimation for each data point and picks the 500 samples with the lowest density. Then we apply the k-means clustering to convert these chosen samples to 50 clusters. From each cluster, we pick one sample, obtaining our test set with 50 target samples.
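The property-density step can be illustrated with a one-dimensional Gaussian kernel density estimate over the y-values. This is only a sketch: the function name is hypothetical, and the default bandwidth (Silverman's rule of thumb) is an assumption, not a setting reported in the text. Only the density ranking is shown; the subsequent k-means step is omitted.

```python
import numpy as np

def lowest_density_by_property(y, n_sparse, bandwidth=None):
    """Indices of the n_sparse samples whose property values are most isolated."""
    y = np.asarray(y, dtype=float)
    if bandwidth is None:
        # Silverman's rule of thumb; an assumed default, not from the source.
        bandwidth = 1.06 * y.std() * len(y) ** (-1 / 5)
    diffs = (y[:, None] - y[None, :]) / bandwidth
    density = np.exp(-0.5 * diffs ** 2).sum(axis=1)   # unnormalised 1D KDE
    return np.argsort(density)[:n_sparse]

# Toy property values: a dense bulk plus two extreme values (indices 500, 501).
y = np.concatenate([np.random.default_rng(0).normal(0.0, 1.0, 500), [8.0, -9.0]])
sparse = lowest_density_by_property(y, n_sparse=10)
```

In this toy example the two extreme values land among the lowest-density samples, mirroring the intent of targeting materials with exceptionally high or low property values.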

Cluster targets with the lowest structure density (SparseXcluster)

This sparse cluster target set generation method is similar to the SparseXsingle method. However, after k-means clustering, rather than selecting just one sample, we extend the selection to include N nearest neighbors for each chosen sample to form the target cluster. The process of picking neighbors ensures that no sample is selected into multiple target clusters with the neighbors determined by the Euclidean distance of OFM features.

Cluster targets with the lowest property density (SparseYcluster)

This sparse cluster target set generation method closely resembles the SparseYsingle method but with a notable distinction. Following k-means clustering, instead of selecting a single sample, we expand the selection to include N nearest neighbors for each chosen sample to create a target cluster. The neighbor-picking process is conducted to prevent any sample from being selected into multiple target clusters. The determination of neighbors is based on the Euclidean distance of OFM features.
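The neighbor-expansion step shared by SparseXcluster and SparseYcluster can be sketched as a greedy pass over the seed samples. The greedy, order-dependent assignment and the function name `grow_target_clusters` are assumptions; the text only states that neighbors are determined by Euclidean distance in OFM space and that no sample may belong to more than one target cluster.

```python
import numpy as np

def grow_target_clusters(features, seed_idx, n_neighbors):
    """Attach to each seed its nearest not-yet-claimed samples."""
    # Seeds are claimed up front so that no seed becomes another seed's neighbor.
    claimed = set(int(s) for s in seed_idx)
    clusters = []
    for s in seed_idx:
        dist = np.linalg.norm(features - features[s], axis=1)
        cluster = [int(s)]
        for j in np.argsort(dist):              # nearest samples first
            if len(cluster) == n_neighbors + 1:
                break
            if int(j) not in claimed:
                claimed.add(int(j))
                cluster.append(int(j))
        clusters.append(cluster)
    return clusters

rng = np.random.default_rng(0)
features = rng.normal(size=(200, 6))    # toy stand-in for OFM feature vectors
seed_idx = [0, 50, 100]                 # toy seeds; the paper uses 50 seeds
clusters = grow_target_clusters(features, seed_idx, n_neighbors=10)
```

Because earlier seeds claim their neighbors first, later seeds may receive slightly more distant samples; a different tie-breaking rule would give different but still non-overlapping clusters.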

The distributions of the whole dielectric dataset and its different target sets are shown in Fig. 2. Additionally, Supplementary Figs. 1 and 2 provide visualizations for the elasticity and perovskites datasets. It can be observed that realistic target sets predominantly reside in sparser regions, whereas commonly employed random splitting tends to align with dense areas exhibiting a distribution akin to that of the training set. In total, we prepared three datasets (the dielectric, elasticity, and perovskites datasets) for the benchmark evaluations, each with LOCO, SparseXsingle, SparseXcluster, SparseYsingle, and SparseYcluster test sets for regression. The number of samples in each cluster of these datasets is shown in Supplementary Tables 1, 2, and 3.

a 50-fold CV (with random splitting) of the whole dielectric dataset with 4764 samples represented by cross symbols with 50 different colors. b Leave-one-cluster-out target (LOCO) clusters. c In SparseXsingle, 50 test samples are represented by cross symbols with 50 different colors, and gray points represent the remaining samples. d In SparseYsingle, 50 test samples are represented by cross symbols with 50 different colors, and gray points represent the remaining samples. e SparseXcluster displays 50 test clusters represented by cross symbols with 50 different colors, and gray points represent the remaining samples. f SparseYcluster displays 50 test clusters represented by cross symbols with 50 different colors, and gray points represent the remaining samples.

Performance comparison on OOD test sets

Here we report the OOD performance of selected GNN models for three datasets. The training hyperparameters used for this benchmark study are listed in the Supplementary Notes. The results of the dielectric dataset for five different OOD target generation methods are summarized in Table 3. For the LOCO generation method, we found that coNGN achieved the SOTA OOD test results on the dielectric dataset (MAE: 0.4983), and coGN performed the second best (MAE: 0.4984), showing a 3.11% better performance than the third best model CGCNN (MAE: 0.5911). The other GNN models (except DeeperGATGNN) performed significantly worse than these two for the LOCO targets, with DimeNet++ registering the highest MAE at 2.7720. This discrepancy in performance can be attributed to the fact that in contrast to random train-test splitting or cross-validation, the LOCO targets tend to have different distributions compared to the training sets (refer to Fig. 2b). This introduces increased complexity and challenges for conventional ML/DL models such as MEGNet that are well-trained to achieve good prediction performance on i.i.d. test sets. The OOD test sets for the SparseXcluster and SparseYcluster datasets are formed through a two-step process. Initially, 50 seed samples with the highest sparsity in the OFM space are chosen. From these, 10 samples that are most similar to the seed samples (depending on the x-axis or y-axis) are selected. The main goal for these target generation methods is to evaluate the effectiveness of an ML/DL algorithm to predict the properties without using closest neighbors. For the SparseXcluster targets, coGN and coNGN achieved the best performances on the dielectric dataset (MAE: 0.5241 and 0.5242, respectively), followed by CGCNN with an MAE increase of ≈14.57% to 0.6006. However, for the SparseYcluster targets, DeeperGATGNN achieved the SOTA MAE of 0.3959, which is 10.10% less than that of its closest model ALIGNN (MAE: 0.4359). 
The remaining models again performed significantly worse than these three models for the SparseXcluster and SparseYcluster targets. The single-point sparse X and sparse Y test sets are distinctive because they consist of only one sample each, with all other samples being used for training and validation. coGN and coNGN outperformed all other models for the SparseXsingle targets (MAE: 0.5518 and 0.5519, respectively), with an MAE 44.18% lower than that of the next-best model, CGCNN (MAE: 0.9888). For the SparseYsingle targets, ALIGNN achieved the lowest MAE (0.2513), which is 8.75% better than its closest competitor, DeeperGATGNN (MAE: 0.2733). In contrast, the other models were consistently outperformed by these three models by a large margin for the single-point Sparse X and Y targets, with SchNet recording the highest MAE (3.9767) for the SparseXsingle targets and DimeNet++ the highest MAE (2.5866) for the SparseYsingle targets.
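The relative margins quoted for the single-point targets can be reproduced from the stated MAEs. The two helper functions below are hypothetical names for the two conventions that appear to be in use: a difference expressed relative to the better MAE, and one expressed relative to the worse MAE.

```python
def relative_increase(best_mae, other_mae):
    """Percentage by which other_mae exceeds best_mae (relative to best_mae)."""
    return 100.0 * (other_mae - best_mae) / best_mae

def relative_decrease(best_mae, other_mae):
    """Percentage by which best_mae falls below other_mae (relative to other_mae)."""
    return 100.0 * (other_mae - best_mae) / other_mae

# SparseYsingle on the dielectric dataset: ALIGNN (0.2513) vs DeeperGATGNN (0.2733).
sparse_y_single = relative_increase(0.2513, 0.2733)

# SparseXsingle on the dielectric dataset: coGN (0.5518) vs CGCNN (0.9888).
sparse_x_single = relative_decrease(0.5518, 0.9888)
```

The first value agrees with the quoted 8.75% and the second with the quoted 44.18% to within rounding, which suggests that the convention differs between the two comparisons.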

Table 4 shows the summarized results for five types of OOD test sets on the elasticity dataset. CGCNN achieved the SOTA MAE for both LOCO (0.0585 \({\log }_{10}\)(GPa)) and SparseXcluster (0.0499 \({\log }_{10}\)(GPa)) targets, which is significantly better than the second best performing model ALIGNN (MAE: 0.0974 \({\log }_{10}\)(GPa), and 0.0834 \({\log }_{10}\)(GPa), respectively) for both these OOD targets (66.50%, and 67.13%, respectively). On the other hand, ALIGNN achieved the SOTA MAEs on the rest of the OOD targets (SparseYcluster: 0.0631 \({\log }_{10}\)(GPa), SparseXsingle: 0.0853 \({\log }_{10}\)(GPa), and SparseYsingle: 0.0450 \({\log }_{10}\)(GPa)). CGCNN performed the second best for the SparseYcluster and SparseXsingle targets (MAE: 0.0752 \({\log }_{10}\)(GPa), and 0.0895 \({\log }_{10}\)(GPa), respectively), with an increase in MAE of 16.09%, and 4.92%, respectively, while DeeperGATGNN performed the second best for the SparseYsingle targets (MAE: 0.0807 \({\log }_{10}\)(GPa)) with a remarkable 79.33% increase in MAE. coGN and coNGN were outperformed by CGCNN and ALIGNN on all five types of targets, while DeeperGATGNN performed better than them on four out of five targets. Other models performed consistently worse than CGCNN, ALIGNN, and DeeperGATGNN on the elasticity dataset, with MEGNet registering the worst MAE for the LOCO (1.4468 \({\log }_{10}\)(GPa)), SparseXcluster (1.4113 \({\log }_{10}\)(GPa)), and SparseYcluster (1.5659 \({\log }_{10}\)(GPa)) targets, DimeNet++ achieving the worst MAE for the SparseXsingle targets (1.3214 \({\log }_{10}\)(GPa)), and SchNet recording the poorest MAE for the SparseYsingle targets (1.4855 \({\log }_{10}\)(GPa)).

Results on the perovskites dataset are summarized in Table 5. DeeperGATGNN outperformed all other algorithms for four out of five OOD targets (MAEs: LOCO 0.036 eV/unit cell, SparseXcluster 0.0464 eV/unit cell, SparseYcluster 0.0333 eV/unit cell, SparseXsingle 0.0373 eV/unit cell), demonstrating superior performance on the perovskites data. ALIGNN trailed it with MAE increases of 5.75%, 0.86%, 2.40%, and 22.52% for the LOCO (MAE: 0.0386 eV/unit cell), SparseXcluster (MAE: 0.0468 eV/unit cell), SparseYcluster (MAE: 0.0341 eV/unit cell), and SparseXsingle (MAE: 0.0457 eV/unit cell) targets, respectively. ALIGNN achieved the SOTA performance for the single-point Sparse Y targets (MAE: 0.0243 eV/unit cell), outperforming DeeperGATGNN (MAE: 0.0259 eV/unit cell) by a slight margin (6.58%). coGN and coNGN performed worse than ALIGNN and DeeperGATGNN on all five OOD targets, while CGCNN achieved a lower MAE than them on four out of five targets. All other models showed subpar performance on the OOD perovskites data, with DimeNet++ recording the highest MAEs for the LOCO (MAE: 1.4666 eV/unit cell), SparseXcluster (MAE: 1.4567 eV/unit cell), and SparseXsingle (MAE: 1.5248 eV/unit cell) targets, and SchNet recording the highest MAEs for the SparseYcluster (MAE: 1.4736 eV/unit cell) and SparseYsingle (MAE: 1.5173 eV/unit cell) targets.

While the latest SOTA GNN models try to outperform each other by achieving the best results on specific datasets as reported in the Matbench study26, they often overfit the i.i.d. training datasets, which prevents them from achieving good performance on the OOD test sets. CGCNN’s simplicity mitigates this issue, as its design is less biased toward performing well on specific datasets (e.g., the MaterialsProject formation energy/band-gap datasets). This is why it outperformed many SOTA GNN algorithms on several targets. However, as the dataset size increased, its performance started to lag behind ALIGNN and DeeperGATGNN. ALIGNN’s SOTA performance can be attributed to its line graph encoding, which incorporates triplet features, and its two-step edge-gated convolution operation. DeeperGATGNN’s architecture, based on a global attention mechanism aided by differentiable group normalization and skip connections, contributes to its overall SOTA performance on the perovskites dataset. coGN and coNGN achieved superior results for three categories of targets on the dielectric dataset, which can be attributed to their line-graph (similar to ALIGNN) and nested line graph-based architectures, better atom neighborhood selection method, and use of an asymmetric unit cell representation. However, their OOD performance deteriorated as the dataset size increased, and overall they lagged behind CGCNN, ALIGNN, and DeeperGATGNN on average across the 15 OOD targets of the three datasets. They nevertheless outperformed the remaining algorithms, as they are also designed to adapt well to some particular datasets. This indicates that OOD techniques, such as domain adaptation, are needed to improve their prediction performance. 
Moreover, we found that MEGNet, SchNet, and DimeNet++ achieved worse but similar OOD performances on all three datasets, which demonstrates they are not suitable GNN models for making OOD materials property predictions.

Although CGCNN, ALIGNN, and DeeperGATGNN displayed high resilience in handling OOD test data across all datasets’ targets (and coGN and coNGN only for the dielectric dataset targets), their results are still bottlenecked by poor results for a few test clusters. The fold-wise MAE plots for these three algorithms on the dielectric, elasticity, and perovskites datasets are presented in Fig. 3 and Supplementary Figs. 3 and 4, respectively. The distribution of the MAE over the 50 folds/clusters shows that only a few clusters are responsible for the surge in each algorithm’s overall MAE. We also find that while ALIGNN achieves the best or second-best OOD performance on the dielectric dataset (Table 3), it can have significantly degraded prediction MAEs for a few OOD test sets, as shown by the highest peaks in Fig. 3a–e. This analysis highlights specific areas where each algorithm’s performance could be further optimized to enhance its overall accuracy and reliability. The parity plots of the predictions of CGCNN, coGN, and DeeperGATGNN on the perovskites dataset are shown in Fig. 4 for the LOCO targets and in Supplementary Figs. 5–8 for the rest of the targets. These figures demonstrate the superior OOD prediction performance of DeeperGATGNN compared to CGCNN and coGN on the perovskites dataset. For all categories of targets, DeeperGATGNN also achieved better prediction accuracy than CGCNN and coGN on the non-OOD samples, in line with its better accuracy on the OOD samples.

Distribution of the MAEs for each fold of CGCNN, ALIGNN, and DeeperGATGNN on the dielectric dataset for (a) LOCO, (b) SparseXcluster, (c) SparseYcluster, (d) SparseXsingle, and (e) SparseYsingle OOD targets. A few folds/clusters are extremely difficult to predict, with MAE values greater than 1.0, which leads to high variation in the models' predictions.

These show that DeeperGATGNN has better performance (MAE: 0.0365 eV/unit cell, R2 score ≈ 0.96) on the non-OOD samples compared to CGCNN (MAE: 0.0651 eV/unit cell, R2 score ≈ 0.90) and coGN (MAE: 0.0631 eV/unit cell, R2 score ≈ 0.92), which is proportional to its better OOD performance than the other two for the LOCO targets.

Performance comparison with MatBench SOTA performance

To investigate the performance degradation of GNN models in OOD material property prediction, we compared the performance of the MatBench SOTA algorithms on the OOD datasets with their performance in the MatBench study (Fig. 5). The MatBench SOTA algorithms and their MAEs for all three datasets in the MatBench study26 are listed in Table 2. We calculated the performance change (in percent) from the MatBench SOTA MAE to the MAE obtained for each of the five OOD target generation methods for each algorithm. The goal of this analysis is to assess the feasibility of current GNN algorithms for high-performance OOD materials property prediction. We found that the models’ OOD performances are significantly worse than the SOTA results in MatBench, with performance changes ranging from −0.83% to a substantial −1366.87%. These results indicate the inadequacy of current GNN algorithms for OOD property prediction for materials data. The only exception on the dielectric dataset is the SparseYsingle targets, where ALIGNN’s performance improved by 7.31%. On the other hand, MEGNet, SchNet, and DimeNet++ performed the worst, with a degradation of more than 750% for all five types of OOD targets. Judging from Fig. 5a, coGN and coNGN adapted the best on average to the OOD target-based predictions on the dielectric dataset, but without improving on the MatBench SOTA results.

Performance comparison of different GNN models’ MAEs for all five different types of OOD targets with the SOTA MAEs found in the MatBench study on the (a) dielectric dataset, (b) elasticity dataset, and (c) perovskites dataset. Although on average, all models' MAEs are significantly higher than the SOTA MAEs, CGCNN, ALIGNN, and DeeperGATGNN outperformed MatBench’s SOTA results in some cases.

Similar comparison results on the elasticity dataset are shown in Fig. 5b. Again, the GNN algorithms failed to generalize: the performance changes across the OOD test sets ranged from −12.24% to a remarkable −2237.23%. The exceptions are CGCNN, which outperformed the MAEs of the SOTA algorithms for the LOCO and SparseXcluster targets (12.7% and 25.51% improvement, respectively), and ALIGNN, which performed better for both the SparseYcluster and SparseYsingle targets (5.82% and 32.82% improvement, respectively). This figure also shows that, with the increase in data from the dielectric dataset to the elasticity dataset, the already poor results of MEGNet, SchNet, and DimeNet++ deteriorated further, with a performance degradation of more than 1500% for all five types of OOD targets. In contrast, the average performance degradations of DeeperGATGNN, CGCNN, and ALIGNN on the elasticity dataset were lower than those on the dielectric dataset.

Finally, we show the performance degradation on the perovskites dataset for all MatBench SOTA algorithms by comparing the OOD results in Fig. 5c with their i.i.d. performance in Table 2. Again, almost all algorithms performed much worse than in the MatBench study, with performance changes ranging from −23.84% to a staggering −5568.31%. In contrast, ALIGNN and DeeperGATGNN outperformed the previous SOTA MAE by 3.87% and 9.73%, respectively, for the SparseYsingle targets. ALIGNN improved on all three datasets for the SparseYsingle OOD targets, which demonstrates its fair generalization capability for this type of OOD target. However, considering all the OOD target generation methods, none of the algorithms showed good generalization capability on average, which demonstrates the need for methods such as domain adaptation to improve the OOD prediction performance of current GNN models.

Comparison of OOD performance with baseline i.i.d. performance

Here we examine how the evaluated GNNs’ performances degrade when their test sets change from i.i.d. to OOD. The i.i.d. baseline MAEs for all GNN algorithms can be found on the MatBench leaderboard37, while the OOD test set performances on the dielectric, elasticity, and perovskites datasets can be found in Tables 3, 4, and 5, respectively. The comparison of all algorithms’ prediction performances for all five OOD targets with their i.i.d. baseline MAEs in the MatBench study on the dielectric dataset is shown in Fig. 6a. We limited the maximum value of the y-axis to 3 for better visualization. We found that the OOD MAEs of MEGNet, SchNet, DimeNet++, coGN, and coNGN are significantly worse than their i.i.d. baseline MAEs for all OOD target sets, which shows their inadequate prediction capabilities on OOD datasets. However, CGCNN benefited the most from the 50-fold OOD test set cross-validation experiments, as it improved over its baseline performance in the MatBench study on three targets: LOCO (14.09%), SparseYcluster (12.26%), and SparseYsingle (20.23%). ALIGNN and DeeperGATGNN only managed to improve for the SparseYsingle targets (27.14% and 18.53%, respectively).

Performance comparison of different GNN models’ MAEs for all five different types of OOD targets with their baseline i.i.d. MAEs found in the MatBench study on the (a) dielectric dataset, (b) elasticity dataset, and (c) perovskites dataset. The baseline MAE for each algorithm is labeled. All the models on average achieved higher MAEs than their baseline i.i.d. MAEs which proved the inadequacy of current GNN models for OOD materials property prediction.

The OOD test set comparisons with the MatBench baseline results of each algorithm on the elasticity and perovskites datasets are shown in Fig. 6b and 6c, respectively. For better visualization, we again limited the maximum value of the y-axis, this time to 1 for both figures. On both datasets, MEGNet, SchNet, DimeNet++, coGN, and coNGN performed much worse than in their baseline prediction settings. This is also evident from Tables 4 and 5, as all of the SOTA OOD results on these datasets were achieved by CGCNN, ALIGNN, or DeeperGATGNN. On the elasticity dataset, CGCNN improved over its baseline MAE for all OOD target sets (LOCO: 34.65%, SparseXcluster: 44.24%, SparseYcluster: 15.98%, SparseXsingle: 0.01%, SparseYsingle: 6.18%), while it failed to improve over its baseline performance on the perovskites dataset for any type of OOD target. On the elasticity dataset, the OOD results of ALIGNN and DeeperGATGNN are better than their baseline results only for the SparseYcluster targets (11.75% and 5%, respectively) and the SparseYsingle targets (37.05% and 10.65%, respectively). On the perovskites dataset, they improved over their baseline MAEs only for the SparseYsingle targets (15.68% and 10.21%, respectively). All other models performed significantly worse than these three on both datasets.

Our 50-fold cross-validation scenario is a much harder challenge for current GNN algorithms than the scenario presented in the MatBench study, because each fold uses an OOD test set rather than the i.i.d. test sets obtained from random splitting in MatBench. Despite this, three algorithms outperformed their baseline results for multiple targets, which demonstrates the robustness of their inherent prediction capabilities. It also shows that the material property prediction performance of current GNNs reported in the MatBench study is not a reliable indicator of their effectiveness in real-world materials property prediction, especially for the unusual materials that researchers seek.

Physical insights

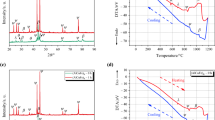

We utilized t-distributed stochastic neighbor embedding (t-SNE)64, a commonly used non-linear method for visualizing and interpreting complex, high-dimensional data, to gain insights into the materials’ physics. t-SNE reduces high-dimensional data to a much lower dimension (typically 2D or 3D) while preserving the proximity of data points, so that points close together in the original space remain close in the embedding. We selected the perovskites formation energy dataset for training. We investigated only CGCNN, ALIGNN, DeeperGATGNN, coGN, and coNGN in this experiment: the first three for their comparatively robust OOD prediction performance on average across all five targets on this dataset, and the last two for their SOTA performance on the current MatBench leaderboard37. Our objective is to visualize the distribution of the latent representations learned by the different models during training. For each trained model, we retrieved the output of the first layer after the final graph convolution layer and plotted the t-SNE diagrams in Fig. 7.

t-SNE diagrams for (a) CGCNN, (b) ALIGNN, (c) DeeperGATGNN, (d) coGN, and (e) coNGN were plotted after training with the perovskites dataset and retrieving latent representation of the first layer after the final graph convolution layer. Different colors indicate different formation energy levels in the latent space, where each point represents a separate perovskite material. CGCNN, ALIGNN, and DeeperGATGNN have much smoother clusters compared to coGN and coNGN, where different colored clusters are hardly separable.

The t-SNE diagrams portray integrated latent spaces that combine structure and composition information for the materials used in training. The colors correspond to different levels of formation energy for the samples represented in those latent spaces. Each of these GNNs is capable of producing effective representations that cluster materials with similar formation energies. Within each cluster, points can be expected to resemble one another in both atomic configuration and elemental composition. While each model may produce a distinct latent space, we can gain valuable insights into the models’ prediction patterns by examining these clusters. For example, coGN and coNGN have similar patterns in their latent spaces, which can explain their similar OOD benchmark results (see Table 5). In addition, the low- and high-energy distributions overlap heavily in the latent spaces of coGN and coNGN: the differently colored regions can hardly be separated, whereas the separation is much cleaner in CGCNN, ALIGNN, and DeeperGATGNN. This might explain the better OOD performance of CGCNN, ALIGNN, and DeeperGATGNN and the substandard OOD performance of coGN and coNGN on average over all categories of OOD targets on the perovskites dataset.

Discussion

Our benchmark study aimed to systematically evaluate the performance of eight GNN models in the challenging task of structure-based OOD materials property prediction and identify models that exhibit superior OOD prediction performance while understanding the factors contributing to their efficacy. The motivation behind this work stems from the fact that material researchers are typically drawn to exploring novel materials with exceptional properties and unconventional compositions or structures, which presents a common difficulty in current ML techniques known as the OOD prediction problem. Through rigorous experimentation, we found that no single algorithm achieved SOTA performance for all OOD target set generation methods for a given dataset, let alone on all datasets.

First, to ensure a fair and comprehensive evaluation, we divided each of the three chosen datasets from the MatBench study into 50 folds. This division was carried out with five distinct target set generation methods, resulting in 250 folds per dataset and 750 folds across the three datasets. Within each fold, the test samples were drawn to be OOD with respect to the remaining dataset according to predefined criteria. This posed a strong challenge for the GNNs chosen in this study, as they were tasked not only with performing well on the challenging OOD test samples but also with doing so across the entire 50-fold cross-validation setup. In total, we trained 6000 deep learning GNN models (eight models on each of the 750 folds), analyzed the results, and drew important conclusions from them. The scale of these experiments lends credibility to our conclusions and remarks.

Second, we found that no single algorithm triumphed in all situations, which indicates a lack of generalization capability across different datasets and unreliability in real-world materials property prediction. However, CGCNN, ALIGNN, and DeeperGATGNN proved to be more robust than the other algorithms, while coGN and coNGN were robust only for the dielectric targets. CGCNN excelled in certain OOD scenarios owing to its simple design. This is particularly significant given that, for most of the OOD targets, CGCNN outperformed both coGN and coNGN, which currently hold the SOTA performance for the majority of tasks on the MatBench leaderboard. While cutting-edge GNNs often strive for optimal results on specific datasets, they risk overfitting to these datasets or types of material data. CGCNN’s simple architecture mitigates this problem by offering a significantly less complex model than other SOTA GNNs, such as SchNet. This makes CGCNN harder to overfit, as the likelihood of overfitting tends to increase with the number of parameters in a deep neural network model. In some cases, however, a larger model with more parameters might be necessary to capture intricate patterns in complex material data, which is a trade-off for CGCNN’s performance in both the MatBench study and our OOD study. As a result, it trailed ALIGNN and DeeperGATGNN (which have more trainable parameters and more elaborate architectures) as the number of materials increased in the other datasets. The key to ALIGNN’s remarkable performance lies in its distinctive line graph encoding strategy, which enables the use of triplet features that effectively capture long-range interactions between atoms. Moreover, the incorporation of two levels of edge-gated convolutions for updating both node and edge features also plays a pivotal role in its SOTA performance on average on the elasticity dataset.
However, DeeperGATGNN claimed the SOTA performance on average on the largest dataset (perovskites), as both ALIGNN and CGCNN suffered from over-smoothing (a phenomenon in which GNN node features become nearly indistinguishable beyond a certain number of graph convolution layers). DeeperGATGNN evades this issue by incorporating differentiable group normalization and residual skip-connections, which allow it to use more than 50 graph convolution layers to extract deeper-level features from the encoded materials graphs. Despite all this, no single algorithm dominated for all types of OOD targets, which calls for new methods such as domain adaptation or meta-learning to improve ML OOD prediction performance.

The best GNN models in the MatBench study, namely coGN and coNGN, underperformed ALIGNN, DeeperGATGNN, and CGCNN on average, but performed better than the rest of the GNNs (MEGNet, DimeNet++, and SchNet). However, they achieved the SOTA performance on three out of 15 OOD targets (all of them on the dielectric clusters). The overall OOD prediction inadequacy of these models suggests that their SOTA performances on the MatBench leaderboard are largely due to overfitting to the specific folds of the datasets. It is peculiar that, despite leveraging line graphs to utilize angle information, coGN and coNGN are not as competitive as ALIGNN for OOD prediction. By examining the latent spaces of these models for the perovskites dataset, we found that the t-SNE clusters of coGN and coNGN overlap heavily in terms of property ranges (see Fig. 7), which is consistent with their higher MAE on this dataset. Also, the effect of the nested line graph (coNGN) is almost non-existent, as its performance differs from that of coGN by only ≈ 0.002% across datasets and targets, which raises the question of what nesting adds over the non-nested version (coGN) for OOD targets. SchNet and DimeNet++ are primarily designed for molecular property prediction, which may account for their subpar performance on both the MatBench leaderboard and our OOD prediction benchmark. Moreover, MEGNet has the largest number of parameters among all the GNNs selected, making it the most prone to overfitting.

Through a thorough examination, we also observed a noteworthy trend across all datasets and target generation methods: the performance of each algorithm on such OOD test sets is consistently lower than its baseline established in the MatBench study, except for a few cases (see Fig. 6). This collective underperformance demonstrates that traditional GNN models are not yet robust enough to handle OOD property prediction. This empirical evidence calls for incorporating robustness-enhancing methods, such as domain adaptation or federated learning, into these algorithms. Other methods like adversarial learning or active learning can also be utilized. Moreover, physics-informed GNNs are another promising direction for developing a model suitable for OOD prediction. In particular, updating the node and edge embeddings of current GNNs with more physics-based information could yield a better model for OOD cases. Surprisingly, both ALIGNN and DeeperGATGNN performed better than their baseline results for the SparseYsingle OOD test sets on all datasets. In fact, the other GNN algorithms also had the least performance degradation for this method compared to the other four. In contrast, the SparseXsingle targets caused the highest performance degradation on average for all the algorithms. Moreover, Fig. 3 and Supplementary Figs. 2 and 3 show that only a few clusters/samples are extremely difficult to predict, which leads to the high variation in the models’ prediction performance. Because these partitions are based on the structure (x) or property (y) values in the t-SNE of the OFM feature space, investigating the contrasting physical behavior along these two directions is a promising research direction for designing a more robust GNN. Of course, the overarching research goal is to design highly robust GNN algorithms that achieve high-performance predictions on unknown outlier materials.
The unexpected resilience displayed by CGCNN, ALIGNN, and DeeperGATGNN in a few cases can be a promising research direction to investigate further for this endeavor.

Methods

State-of-the-art (SOTA) algorithms for structure-based material property prediction

We have chosen to evaluate the OOD performance of the following top structure-based material property prediction algorithms, as reported in the MatBench study26. They are all based on graph neural networks (GNNs) but differ in their architectures.

CGCNN

CGCNN, proposed by Xie and Grossman1, is the earliest known GNN for the materials property prediction problem. After converting the crystals into crystal graphs and other preprocessing steps, CGCNN serially applies N graph convolutional layers and L1 hidden layers to the input crystal graph, which results in a new graph with each node representing the local environment of each atom. Following the pooling operation, a vector representing the entire crystal is linked to L2 hidden layers and subsequently connected to the output layer to generate predictions.

The lth convolutional layer updates the node feature of the ith atom vi through a process of convolution involving neighboring atoms and bonds of atom i using a nonlinear graph convolution function as given below:

In Eq. (1), \({e}_{{(i,j)}_{k}}\) denotes the edge feature of the kth bond connecting atom i and atom j, and ϕ denotes the convolution operator.
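To make the role of the convolution operator concrete, the following is a minimal pure-Python sketch of one gated graph convolution step in the style of the original CGCNN formulation: the features of atom i, atom j, and their kth bond are concatenated, passed through a sigmoid gate and a nonlinear branch, and the product is added to the feature of atom i as a residual update. The use of softplus as the nonlinearity, the weight shapes, and all names are assumptions made for illustration, not the configuration used in this study.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[Vector]  # shape: (len(z), len(v_i))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softplus(x: float) -> float:
    return math.log1p(math.exp(x))


def affine(z: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Compute z @ weight + bias for a row vector z."""
    return [
        sum(z[r] * weight[r][c] for r in range(len(z))) + bias[c]
        for c in range(len(bias))
    ]


def cgcnn_conv(
    nodes: Dict[int, Vector],
    bonds: List[Tuple[int, int, Vector]],  # (i, j, feature of one bond i-j)
    w_f: Matrix, b_f: Vector,              # gate ("filter") parameters
    w_s: Matrix, b_s: Vector,              # nonlinear ("core") parameters
) -> Dict[int, Vector]:
    """One gated convolution step with a residual connection."""
    updated = {i: list(v) for i, v in nodes.items()}  # start from v_i
    for i, j, e_ijk in bonds:
        z = nodes[i] + nodes[j] + e_ijk  # concatenation of v_i, v_j, e_(i,j)k
        gate = [sigmoid(x) for x in affine(z, w_f, b_f)]
        core = [softplus(x) for x in affine(z, w_s, b_s)]
        for d in range(len(updated[i])):
            updated[i][d] += gate[d] * core[d]
    return updated


# Toy example: two atoms with 1-D features joined by one bond in each direction.
nodes = {0: [1.0], 1: [2.0]}
bonds = [(0, 1, [1.0]), (1, 0, [1.0])]
zeros = [[0.0], [0.0], [0.0]]  # 3 inputs (v_i, v_j, e) -> 1 output
print(cgcnn_conv(nodes, bonds, zeros, [0.0], zeros, [0.0]))
```

Because multiple bonds between the same pair of atoms appear as separate entries in `bonds`, the sum runs over every bond k, which matches the indexing of the edge feature defined above.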

MEGNet

MEGNet (Chen et al.2) first performs the preprocessing steps to convert the input into graph embedding consisting of node and edge vectors. After that, N MEGNet layers are applied, which include two dense layers, followed by the graph convolution operation. Next, a readout method is applied to combine sets of atomic and bond vectors into a single vector, followed by several size-reducing dense layers to finally produce the single-valued prediction.

The convolution operator can be defined as follows:

In Eq. (2) and Eq. (3), vi denotes the node representation for node i, ei,j denotes the edge representation between nodes i and j, \({v}_{i}^{{\prime} }\) and \({e}_{i,j}^{{\prime} }\) denote the updated node representation and edge representation, respectively, \({{{{\mathcal{N}}}}}_{i}\) denotes node i’s neighborhood, ϕe and ϕv denote the edge update function and the node update function, respectively, and ⊕ denotes the concatenation operator.

SchNet

Schütt et al.65 developed SchNet for molecules, but it can also be applied to crystalline solids. It first creates graph embeddings from the input materials and then applies N interaction blocks, each of which includes a graph convolution operation. After that, an atom-wise layer (a recurring building block applied separately to each node vector) reduces the feature size, and a shifted softplus operation is applied. The final output is generated after applying another size-reducing atom-wise layer and a sum pooling operation.

The convolution operator can be defined as follows:

In Eq. (4), vi denotes the node representation for node i, ei,j denotes the edge representation between nodes i and j, \({v}_{i}^{{\prime} }\) denotes the updated node representation, \({{{{\mathcal{N}}}}}_{i}\) denotes node i’s neighborhood, and ϕ denotes the convolution operator.
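As an illustration of this kind of update, the sketch below implements a simplified continuous-filter convolution: each interatomic distance is expanded on Gaussian radial basis functions, a filter is generated from that expansion, and the neighbor features are multiplied element-wise by the filter and summed. It is a sketch under stated assumptions: the filter-generating network is replaced by a single linear map, and the function names, the `gamma` value, and the toy inputs are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def gaussian_rbf(distance: float, centers: Vector, gamma: float = 10.0) -> Vector:
    """Expand a scalar distance on a grid of Gaussian radial basis functions."""
    return [math.exp(-gamma * (distance - c) ** 2) for c in centers]


def filter_weights(rbf: Vector, weight: List[Vector]) -> Vector:
    """Stand-in filter generator: a single linear map rbf @ weight."""
    n_out = len(weight[0])
    return [sum(rbf[k] * weight[k][c] for k in range(len(rbf))) for c in range(n_out)]


def cfconv(
    nodes: Dict[int, Vector],
    neighbors: Dict[int, List[Tuple[int, float]]],  # i -> [(j, distance d_ij)]
    centers: Vector,
    weight: List[Vector],  # shape: (len(centers), feature size)
) -> Dict[int, Vector]:
    """Sum over neighbors j of v_j multiplied element-wise by a distance filter."""
    out = {}
    for i, v_i in nodes.items():
        acc = [0.0] * len(v_i)
        for j, d_ij in neighbors.get(i, []):
            w = filter_weights(gaussian_rbf(d_ij, centers), weight)
            for c in range(len(acc)):
                acc[c] += nodes[j][c] * w[c]
        out[i] = acc
    return out


# Toy example: two atoms at distance 1.0 with a single basis function centered at 1.0.
nodes = {0: [1.0, 2.0], 1: [3.0, 4.0]}
neighbors = {0: [(1, 1.0)], 1: [(0, 1.0)]}
print(cfconv(nodes, neighbors, centers=[1.0], weight=[[1.0, 0.5]]))
# {0: [3.0, 2.0], 1: [1.0, 1.0]}
```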

DimeNet++

DimeNet++, developed by Gasteiger et al.66, is a faster and improved version of the previously proposed DimeNet27, designed primarily for molecular property prediction. DimeNet++ takes a different approach from traditional GNNs for this task by embedding and updating the messages between atoms (mji). This allows DimeNet++ to incorporate directional information by considering bond angles (α(kj, ji)) in addition to interatomic distances dji. DimeNet++ goes further by jointly embedding distances and angles using a spherical 2D Fourier-Bessel basis. The following equation updates messages between atoms mji:

In Eq. (5), \({{{{\mathcal{N}}}}}_{i}\) denotes node i’s neighborhood, fint denotes the interaction function, \({e}_{RBF}^{(ji)}\) denotes the radial basis function representation of dji, and \({a}_{SBF}^{(kj,ji)}\) denotes the spherical basis function representation of dkj and α(kj, ji).

ALIGNN

In the preprocessing step, ALIGNN (Choudhary and DeCost3) converts a crystal to a crystal graph as done in CGCNN, calculates node and edge features, and performs other required processing. Moreover, it creates a line graph of the original graph to incorporate the angle feature between bonds. ALIGNN first applies edge-gated graph convolution on the line graph, and utilizes the edge representation and triplet representation (features concerning the angle between the edge pairs) from layer l to update the triplet representation and bond messages of layer l + 1. The updated bond messages from layer l + 1 are passed to the next stage, where they are incorporated with the original graph and the node representation from layer l. Then, through a second edge-gated graph convolution, the node and edge representations of layer l + 1 are calculated. The equations for updating the node and the edge features are given below:

In Eq. (6) and Eq. (7), u(l), t(l), v(l), and e(l) denote the bond message representation, triplet representation, node representation, and edge representation, respectively, of layer l, and ϕeg denotes the edge-gated graph convolution operator.
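The line graph construction mentioned above can be sketched in a few lines: every bond of the original graph becomes a node of the line graph, and two bonds are connected whenever they share an atom, so each line-graph edge corresponds to one bond pair and hence one bond angle. This is a minimal sketch for a simple undirected graph; it ignores periodic images and repeated bonds between the same atoms, which a real crystal graph would need to handle, and the function name is illustrative.

```python
from itertools import combinations
from typing import List, Tuple

Edge = Tuple[int, int]


def line_graph(edges: List[Edge]) -> List[Tuple[Edge, Edge]]:
    """Return the line-graph edges of a simple undirected graph.

    Each bond of the original graph is a line-graph node; two bonds are
    connected when they share an atom (i.e. they define a bond angle).
    """
    bonds = sorted({tuple(sorted(e)) for e in edges})  # canonical, deduplicated
    return [(a, b) for a, b in combinations(bonds, 2) if set(a) & set(b)]


# Toy example: atom 0 bonded to atoms 1 and 2 gives a single angle 1-0-2.
print(line_graph([(0, 1), (0, 2)]))  # [((0, 1), (0, 2))]
```

In a triangle of three mutually bonded atoms, the same function returns three line-graph edges, one per angle.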

DeeperGATGNN

Omee et al.4 developed the global attention-based GNN model DeeperGATGNN, which overcomes the over-smoothing issue of GNNs (where, as the number of graph convolution layers increases, all the node feature vectors of the graph eventually converge to the same vector) through the inclusion of differentiable group normalization (DGN)67 and skip-connections68, and can go beyond 50 layers. The process begins with an initial graph-encoded material serving as the input. Following this, multiple Augmented Graph Attention (AGAT) layers, each containing 64 neurons, and DGN are utilized. There is a skip connection from the output of the l-th AGAT layer to the output of the (l + 1)-th AGAT layer, applied after the DGN. Subsequently, a global attention layer is introduced, where the node feature vectors are merged with the composition-encoded vector. These are then processed through two fully connected layers, resulting in a context vector that encapsulates weights associated with the positions of each node. This context vector is then combined with the node feature vectors, followed by a global pooling of these vectors. The node features undergo further processing through one or two hidden layers, and finally, the output property is generated through an additional fully connected layer.

The local soft-attention αi,j between a node i and its neighbor j can be represented by the following rule:

In Eq. (8), \({{{{\mathcal{N}}}}}_{i}\) denotes node i’s neighborhood, and ai,j denotes the weight coefficient between nodes i and j, indicating the significance of node j concerning node i. The global attention gi, employed just before global pooling, computes the overall importance of each node. It can be expressed by the following equation:

In Eq. (9), \(x\in {{\mathbb{R}}}^{F}\) denotes a learned embedding, E denotes a compositional vector of the crystal, \(W\in {{\mathbb{R}}}^{1\times (F+| E| )}\) denotes a parameterized matrix, and xc denotes the learned embedding of any atom c within the crystal.
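The local soft-attention described above can be illustrated with a short sketch. It assumes a standard softmax normalization of the weight coefficients ai,j over the neighborhood of node i, as is usual for graph attention layers; the function name and the toy scores are illustrative.

```python
import math
from typing import Dict


def local_soft_attention(scores: Dict[int, float]) -> Dict[int, float]:
    """Softmax over the neighbors of one node.

    `scores` maps a neighbor index j to its unnormalized coefficient a_ij;
    the result maps j to the attention weight alpha_ij (weights sum to 1).
    """
    if not scores:
        return {}
    peak = max(scores.values())  # subtract the maximum for numerical stability
    exps = {j: math.exp(a - peak) for j, a in scores.items()}
    total = sum(exps.values())
    return {j: e / total for j, e in exps.items()}


# Toy example: two equally important neighbors share the attention evenly.
print(local_soft_attention({1: 0.0, 2: 0.0}))  # {1: 0.5, 2: 0.5}
```

A neighbor with a larger coefficient receives a larger share of the attention, and the weights of all neighbors of a node always sum to one.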

coGN and coNGN

coGN and coNGN (Ruff et al.36) use the basic GNN framework of Battaglia et al.69, where a single GNN layer is defined by a graph network (GN) block responsible for transforming a generic graph with edge, node, and global graph attributes using three update functions ϕ and three aggregation functions ρ. For the original material-encoded graph G, a line graph L(G) is constructed, so that there is an edge \({e}_{{e}_{ij},{e}_{jk}}^{L(G)}\) for every two incident edges eij, ejk in G (referring to the angle information between those two edges). Each nested GN block (T such blocks are applied in total) takes the edge features xE, node features xV, graph-level features xG, and the encoded graph G itself as the input and outputs the updated node representation \({x}_{V}^{{\prime} }\), updated edge representation \({x}_{E}^{{\prime} }\), updated graph-level representation \({x}_{G}^{{\prime} }\), and the graph G. The edge, node, and graph-level representation update operations of coGN are given below:

In Eq. (10), (11), and (12), \({x}_{{e}_{ij}}\) denotes the edge representation of edge eij between node i, and j, \({x}_{{v}_{i}}\) denotes the node representation of node i, \({\hat{x}}_{{v}_{i}}\) denotes the local edge aggregated representation of node i, \({x}_{{v}_{i}}^{{\prime} }\) denotes the updated node representation of node i, \({\hat{x}}_{G}\) denotes the node aggregated representation of graph G, and \({\tilde{x}}_{G}\) denotes the global edge aggregated representation of graph G. In the nested version (coNGN), the edge update is further continued by incorporating the angle information (x∠) from the line graph L(G) using the following equation:

Evaluation criterion

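We use the mean absolute error (MAE) metric, which is a standard evaluation criterion for regression-based materials property prediction problems. MAE can be calculated by the following equation:

\[ \mathrm{MAE}=\frac{1}{n}\mathop{\sum }\limits_{i=1}^{n}\left| {y}_{i}-{\hat{y}}_{i}\right| \qquad (14) \]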

In Eq. (14), yi denotes the ground-truth property values, \({\hat{y}}_{i}\) denotes the predicted property values, and n denotes the number of data points in the dataset.
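For reference, the MAE defined by these symbols, the mean of the absolute differences between ground-truth and predicted values, can be computed with a few lines of standard-library Python. The function name and the example values are illustrative and are not taken from the benchmark code.

```python
from typing import Sequence


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean of |y_i - y_hat_i| over all n data points."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one data point is required")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy example: absolute errors of 0.5, 0.0, and 1.0 average to 0.5.
print(mean_absolute_error([1.0, 2.0, 3.0], [1.5, 2.0, 2.0]))  # 0.5
```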

Data availability

All three datasets are chosen from the MatBench study26. Details about the datasets with 50-fold OOD splits can be found at https://github.com/sadmanomee/OOD_Materials_Benchmark.

Code availability

The source code of the GNN algorithms and of the OOD target generation methods can be found at https://github.com/sadmanomee/OOD_Materials_Benchmark.

References

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

Chen, C., Ye, W., Zuo, Y., Zheng, C. & Ong, S. P. Graph networks as a universal machine learning framework for molecules and crystals. Chem. Mater. 31, 3564–3572 (2019).

Choudhary, K. & DeCost, B. Atomistic line graph neural network for improved materials property predictions. Npj Comput. Mater. 7, 185 (2021).

Omee, S. S. et al. Scalable deeper graph neural networks for high-performance materials property prediction. Patterns 3, 100491 (2022).

Yan, K., Liu, Y., Lin, Y. & Ji, S. Periodic graph transformers for crystal material property prediction. Adv. Neural. Inf. Process. Syst. 35, 15066–15080 (2022).

Wang, A. Y.-T., Kauwe, S. K., Murdock, R. J. & Sparks, T. D. Compositionally restricted attention-based network for materials property predictions. Npj Comput. Mater. 7, 77 (2021).

Goodall, R. E. & Lee, A. A. Predicting materials properties without crystal structure: deep representation learning from stoichiometry. Nat. Commun. 11, 6280 (2020).

Cheng, G., Gong, X.-G. & Yin, W.-J. Crystal structure prediction by combining graph network and optimization algorithm. Nat. Commun. 13, 1492 (2022).

Omee, S. S., Wei, L., Hu, M. & Hu, J. Crystal structure prediction using neural network potential and age-fitness pareto genetic algorithm. J. Mater. Inf. 4, 2 (2024).

Hu, J. et al. Deep learning-based prediction of contact maps and crystal structures of inorganic materials. ACS Omega 8, 26170–26179 (2023).

Qi, H. et al. Latent conservative objective models for offline data-driven crystal structure prediction. In: ICLR 2023 Workshop on Machine Learning for Materials (2023).

Wang, J. et al. MAGUS: machine learning and graph theory assisted universal structure searcher. Natl. Sci. Rev. 10, nwad128 (2023).

Merchant, A. et al. Scaling deep learning for materials discovery. Nature 624, 80–85 (2023).

Yang, S. et al. Scalable diffusion for materials generation. In: International Conference on Learning Representations (2024).

Zhao, Y. et al. High-throughput discovery of novel cubic crystal materials using deep generative neural networks. Adv. Sci. 8, 2100566 (2021).

Zhao, Y. et al. Physics guided deep learning for generative design of crystal materials with symmetry constraints. Npj Comput. Mater. 9, 38 (2023).

Fanourgakis, G. S., Gkagkas, K., Tylianakis, E. & Froudakis, G. E. A universal machine learning algorithm for large-scale screening of materials. J. Am. Chem. Soc. 142, 3814–3822 (2020).

Ojih, J., Rodriguez, A., Hu, J. & Hu, M. Screening outstanding mechanical properties and low lattice thermal conductivity using global attention graph neural network. Energy AI 14, 100286 (2023).

Zunger, A. Inverse design in search of materials with target functionalities. Nat. Rev. Chem. 2, 0121 (2018).

Han, S. et al. Design of new inorganic crystals with the desired composition using deep learning. J. Chem. Inf. Model. 63, 5755–5763 (2023).

Seko, A., Hayashi, H., Nakayama, K., Takahashi, A. & Tanaka, I. Representation of compounds for machine-learning prediction of physical properties. Phys. Rev. B 95, 144110 (2017).

Ward, L., Agrawal, A., Choudhary, A. & Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. Npj Comput. Mater. 2, 1–7 (2016).

Ward, L. et al. Matminer: An open source toolkit for materials data mining. Comput. Mater. Sci. 152, 60–69 (2018).

Fung, V., Zhang, J., Juarez, E. & Sumpter, B. G. Benchmarking graph neural networks for materials chemistry. Npj Comput. Mater. 7, 1–8 (2021).

Hu, J. et al. MaterialsAtlas.org: a materials informatics web app platform for materials discovery and survey of state-of-the-art. Npj Comput. Mater. 8, 65 (2022).

Dunn, A., Wang, Q., Ganose, A., Dopp, D. & Jain, A. Benchmarking materials property prediction methods: the Matbench test set and Automatminer reference algorithm. Npj Comput. Mater. 6, 138 (2020).

Gasteiger, J., Groß, J. & Günnemann, S. Directional message passing for molecular graphs. In: International Conference on Learning Representations (2019).

Gasteiger, J., Becker, F. & Günnemann, S. Gemnet: Universal directional graph neural networks for molecules. Adv. Neural. Inf. Process. Syst. 34, 6790–6802 (2021).

Reiser, P. et al. Graph neural networks for materials science and chemistry. Commun. Mater. 3, 93 (2022).

Louis, S.-Y. et al. Graph convolutional neural networks with global attention for improved materials property prediction. Phys. Chem. Chem. Phys. 22, 18141–18148 (2020).

Kong, S. et al. Density of states prediction for materials discovery via contrastive learning from probabilistic embeddings. Nat. Commun. 13, 949 (2022).

Cong, G. & Fung, V. Improving materials property predictions for graph neural networks with minimal feature engineering. Mach. Learn.: Sci. Technol. 4, 035030 (2023).

Xiao, J., Yang, L. & Wang, S. Graph isomorphism network for materials property prediction along with explainability analysis. Comput. Mater. Sci. 233, 112619 (2024).

Xiong, Z. et al. Evaluating explorative prediction power of machine learning algorithms for materials discovery using k-fold forward cross-validation. Comput. Mater. Sci. 171, 109203 (2020).

Varivoda, D., Dong, R., Omee, S. S. & Hu, J. Materials property prediction with uncertainty quantification: a benchmark study. Appl. Phys. Rev. 10 (2023).

Ruff, R., Reiser, P., Stühmer, J. & Friederich, P. Connectivity optimized nested line graph networks for crystal structures. Digit. Discov. 3, 594–601 (2024).

Matbench leaderboard. https://matbench.materialsproject.org/ (2021).

Hu, J., Liu, D., Fu, N. & Dong, R. Realistic material property prediction using domain adaptation based machine learning. Digit. Discov. 3, 300–312 (2024).

Bergerhoff, G., Hundt, R., Sievers, R. & Brown, I. The inorganic crystal structure data base. J. Chem. Inf. Comput. Sci. 23, 66–69 (1983).

Jain, A. et al. Commentary: The Materials Project: a materials genome approach to accelerating materials innovation. APL Mater. 1 (2013).

Kirklin, S. et al. The open quantum materials database (OQMD): assessing the accuracy of DFT formation energies. Npj Comput. Mater. 1, 1–15 (2015).

Curtarolo, S. et al. AFLOW: An automatic framework for high-throughput materials discovery. Comput. Mater. Sci. 58, 218–226 (2012).

Li, K. et al. Exploiting redundancy in large materials datasets for efficient machine learning with less data. Nat. Commun. 14, 7283 (2023).

Li, K., DeCost, B., Choudhary, K., Greenwood, M. & Hattrick-Simpers, J. A critical examination of robustness and generalizability of machine learning prediction of materials properties. Npj Comput. Mater. 9, 55 (2023).

Meredig, B. et al. Can machine learning identify the next high-temperature superconductor? Examining extrapolation performance for materials discovery. Mol. Syst. Des. Eng. 3, 819–825 (2018).

Wenzel, F. et al. Assaying out-of-distribution generalization in transfer learning. Adv. Neural. Inf. Process. Syst. 35, 7181–7198 (2022).

Wang, J. et al. Generalizing to unseen domains: a survey on domain generalization. IEEE Trans. Knowl. Data Eng. 35, 8052–8072 (2022).

Shen, Z. et al. Towards out-of-distribution generalization: a survey. Preprint at https://arxiv.org/abs/2108.13624 (2021).

Schölkopf, B. et al. Toward causal representation learning. Proceedings of the IEEE 109, 612–634 (2021).

Wilson, G. & Cook, D. J. A survey of unsupervised deep domain adaptation. ACM Trans. Intell. Syst. Technol. (TIST) 11, 1–46 (2020).

Schrier, J., Norquist, A. J., Buonassisi, T. & Brgoch, J. In pursuit of the exceptional: Research directions for machine learning in chemical and materials science. J. Am. Chem. Soc. 145, 21699–21716 (2023).

Yang, J. et al. OpenOOD: benchmarking generalized out-of-distribution detection. Adv. Neural. Inf. Process. Syst. 35, 32598–32611 (2022).

Gui, S., Li, X., Wang, L. & Ji, S. GOOD: a graph out-of-distribution benchmark. Adv. Neural. Inf. Process. Syst. 35, 2059–2073 (2022).

Koh, P. W. et al. WILDS: A benchmark of in-the-wild distribution shifts. In: International Conference on Machine Learning, 5637–5664 (PMLR, 2021).

Shimakawa, H., Kumada, A. & Sato, M. Extrapolative prediction of small-data molecular property using quantum mechanics-assisted machine learning. Npj Comput. Mater. 10, 11 (2024).

Kauwe, S. K., Graser, J., Murdock, R. & Sparks, T. D. Can machine learning find extraordinary materials? Comput. Mater. Sci. 174, 109498 (2020).

Chanussot, L. et al. Open catalyst 2020 (OC20) dataset and community challenges. ACS Catal. 11, 6059–6072 (2021).

Tran, R. et al. The open catalyst 2022 (OC22) dataset and challenges for oxide electrocatalysts. ACS Catal. 13, 3066–3084 (2023).

Choudhary, K. & Sumpter, B. G. Can a deep-learning model make fast predictions of vacancy formation in diverse materials? AIP Adv. 13 (2023).

Bengio, Y. & Grandvalet, Y. No unbiased estimator of the variance of k-fold cross-validation. Adv. Neural. Inf. Process. Syst. 16 (2003).

Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inf. Theory. 28, 129–137 (1982).

Pham, T. L. et al. Machine learning reveals orbital interaction in materials. Sci. Technol. Adv. Mater. 18, 756 (2017).

Karamad, M. et al. Orbital graph convolutional neural network for material property prediction. Phys. Rev. Mater. 4, 093801 (2020).

Van der Maaten, L. & Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 9 (2008).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J., Tkatchenko, A. & Müller, K.-R. Schnet–a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Gasteiger, J., Giri, S., Margraf, J. T. & Günnemann, S. Fast and uncertainty-aware directional message passing for non-equilibrium molecules. NeurIPS 2020 ML for Molecules Workshop (2020).

Zhou, K. et al. Towards deeper graph neural networks with differentiable group normalization. Adv. Neural. Inf. Process. Syst. 33, 4917–4928 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In: Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Battaglia, P. W. et al. Relational inductive biases, deep learning, and graph networks. Preprint at https://arxiv.org/abs/1806.01261 (2018).

Petousis, I. et al. High-throughput screening of inorganic compounds for the discovery of novel dielectric and optical materials. Sci. Data 4, 1–12 (2017).

De Breuck, P.-P., Hautier, G. & Rignanese, G.-M. Materials property prediction for limited datasets enabled by feature selection and joint learning with modnet. Npj Comput. Mater. 7, 83 (2021).

Castelli, I. E. et al. New cubic perovskites for one- and two-photon water splitting using the computational materials repository. Energy Environ. Sci. 5, 9034–9043 (2012).

Acknowledgements

The research reported in this work was supported in part by the National Science Foundation under grants 2110033, OAC-2311203, and 2320292. The views, perspectives, and content do not necessarily represent the official views of the NSF.

Author information

Contributions

Conceptualization, J.H.; methodology, S.O., J.H., N.F.; investigation, S.O., J.H., M.H.; software, S.O., N.F.; writing–original draft preparation, S.O., N.F., R.D., J.H.; writing–review and editing, S.O., N.F., R.D., M.H., J.H.; visualization, S.O., N.F.; supervision, J.H. and M.H.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Omee, S.S., Fu, N., Dong, R. et al. Structure-based out-of-distribution (OOD) materials property prediction: a benchmark study. npj Comput Mater 10, 144 (2024). https://doi.org/10.1038/s41524-024-01316-4

DOI: https://doi.org/10.1038/s41524-024-01316-4

- Springer Nature Limited