Abstract

Nanowire Networks (NWNs) belong to an emerging class of neuromorphic systems that exploit the unique physical properties of nanostructured materials. In addition to their neural network-like physical structure, NWNs also exhibit resistive memory switching in response to electrical inputs due to synapse-like changes in conductance at nanowire-nanowire cross-point junctions. Previous studies have demonstrated how the neuromorphic dynamics generated by NWNs can be harnessed for temporal learning tasks. This study extends these findings by demonstrating online learning from spatiotemporal dynamical features using image classification and sequence memory recall tasks implemented on an NWN device. Applied to the MNIST handwritten digit classification task, online dynamical learning with the NWN device achieves an overall accuracy of 93.4%. Additionally, we find a correlation between the classification accuracy of individual digit classes and mutual information. The sequence memory task reveals how memory patterns embedded in the dynamical features enable online learning and recall of a spatiotemporal sequence pattern. Overall, these results provide proof-of-concept of online learning from spatiotemporal dynamics using NWNs and further elucidate how memory can enhance learning.


Introduction

Neuromorphic devices offer the potential for a fundamentally new computing paradigm, one based on a brain-inspired architecture that promises enormous efficiency gains over conventional computing architectures1,2,3,4,5,6,7,8,9,10,11. A particularly successful neuromorphic computing approach is the implementation of spike-based neural network algorithms in CMOS-based neuromorphic hardware2,12,13,14,15,16,17. An alternate neuromorphic computing approach is to exploit brain-like physical properties exhibited by novel nano-scale materials and structures18,19,20,21,22, including, in particular, the synapse-like dynamics of resistive memory (memristive) switching4,23,24,25,26,27,28,29,30,31.

This study focuses on a class of neuromorphic devices based on memristive nanowire networks (NWNs)32,33. NWNs are composed of metal-based nanowires that form a heterogeneous network structure similar to a biological neural network34,35,36,37,38. Additionally, nanowire-nanowire cross-point junctions exhibit memristive switching attributed to the evolution of a metallic nano-filament due to electro-chemical metallisation39,40,

Each row in Fig. 2 shows the averaged image, input and readout data for 100 MNIST samples randomly selected from the training set for the corresponding digit class.

For each class, the readout voltages from each channel (columns 3–7, blue) are distinctly different from the corresponding input voltages and exhibit diverse characteristics across the readout channels. This demonstrates that the NWN nonlinearly maps the input signals into a higher-dimensional space. Rich and diverse dynamical features are embedded into the channel readouts from the spatially distributed electrodes, which are in contact with different parts of the network (see Supplementary Fig. S6 for additional non-equilibrium dynamics under non-adiabatic conditions). We show below how the inter-class distinctiveness of these dynamical features, as well as their intra-class diversity, can be harnessed to perform online classification of the MNIST digits.

Online learning

Table 1 presents the MNIST handwritten digit classification results using the online method (external weights trained by an RLS algorithm). Results are shown for one and five readout channels. For comparison, also shown are the corresponding classification results using the offline batch method (external weights trained by backpropagation with gradient descent). Both classifiers learn from the dynamical features extracted from the NWN, with readouts delivered to the two classifiers separately. For both classifiers, accuracies increase with the number of readout channels, demonstrating the non-linearity embedded by the network in the readout data. For the same number of channels, however, the online method outperforms the batch method. In addition to achieving a higher classification accuracy, the online classifier W requires only a single epoch of 50,000 training samples, compared to 100 training epochs for the batch method using 500 mini-batches of 100 samples and a learning rate η = 0.1. The accuracy of the online classifier becomes comparable to that of the batch classifier when active error correction is not used in the RLS algorithm (see Supplementary Table 1). A key advantage of the online method is that continuous learning from the streaming input data enables relatively rapid convergence, as shown next.

To better understand how learning is achieved with the NWN device, we further investigated the dependence of classification accuracy on the number of training samples and on the device readouts. Figure 3a shows classification accuracy as a function of the number of digits presented to the classifier during training (see Supplementary Fig. S7 for classification results using different electrode combinations for input/drain/readouts and different voltage ranges). The classification accuracy increases consistently as more readout samples are presented to the classifier to update W and plateaus at ≃92% after ≃10,000 samples. Classification accuracy also increases with the number of readout channels, corresponding to an increase in the number of dynamical features that become sufficiently distinguishable to improve classification (e.g., 5 × 784 features per digit for 5 readout channels; channels are added in the order 1, 2, 13, 15, 12). However, as shown in Fig. 3b, this increase is not linear, with the largest improvement observed from 1 to 2 channels. Figure 3c shows the confusion matrix for the classification result using 5 readout channels after learning from 50,000 digit samples. The classification accuracies for 8 of the digit classes lie within 1.5σ of the average (93.4%), where σ = 3% is the standard deviation. Digit ‘1’ has a significantly higher accuracy because of its simpler structure, while ‘5’ is an outlier because of the irregular variability of handwriting and the low pixel resolution (see Supplementary Fig. S8 for examples of misclassified digits).

a Testing accuracy as a function of the number of training samples read out from one and five channels. Inset shows a zoom-in of the converged region of the curve. b Maximum testing accuracy achieved after 50,000 training samples with respect to the number of readout channels used by the online linear classifier. Error bars indicate the standard error of the mean of 5 measurements with shuffled training samples. c Confusion matrix for online classification using 5 readout channels.

Mutual information

Mutual information (MI) is an information-theoretic metric that can help uncover the inherent information content within a system and provide a means to assess learning progress during training. Figure 4a shows the learning curve of the classifier, represented by the mean of the magnitude of the change in the weight matrix, \(\overline{|\Delta \mathbf{W}|}\), as a function of the number of sample readouts for 5 channels. Learning peaks at ≃10²–10³ samples, after which it declines rapidly and becomes negligible by 10⁴ samples. This is reflected in the online classification accuracy (cf. Fig. 3a), which begins to saturate by ~10⁴ samples. The rise and fall of the learning rate profile can be interpreted in terms of maximal dynamical information being extracted by the network. This is indicated by Fig. 4b, which presents the MI between the 10 MNIST digit classes and each of the NWN device readouts used for online classification (cf. Fig. 3). The MI values for each channel are calculated by averaging the values across the 784 pixel positions. The coincidence of the saturation in MI with the peak in \(\overline{|\Delta \mathbf{W}|}\) between 10² and 10³ samples demonstrates that learning is associated with information dynamics. Note that by ≃10² samples, the network has received approximately 10 samples for each digit class (on average). It is also noteworthy that the MI for the input channel is substantially smaller.

a Mean of the magnitude of changes in the linear weight matrix, \(\overline{|\Delta \mathbf{W}|}\), as a function of the number of samples learned by the network. b Corresponding mutual information (MI) for each of the 5 channels used for online classification (cf. Fig. 3) and for input channel 0.

Figure 5 shows MI estimated in a static way, combining all the samples after the whole training dataset is presented to the network. The MI maps are arranged according to the digit classes and averaged within each class. The maps suggest that distinctive information content is extracted when digit samples from different classes are streamed into the network. This is particularly evident when comparing the summed maps for each of the digits (bottom row of Fig. 5). Additionally, comparison with the classification confusion matrix shown in Fig. 3c reveals that the class with the highest total MI value (‘1’) exhibits the highest classification accuracy (98.4%), while the lowest MI classes (‘5’ and ‘8’) exhibit the lowest accuracies (89.6% and 89.5%), although the trend is less evident for intermediate MI values.

Sequence memory task

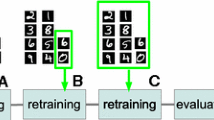

As mentioned earlier, RC is most suitable for time-dependent information processing. Here, an RC framework with online learning is used to demonstrate the capacity of NWNs to recall a target digit in a temporal digit sequence constructed from the MNIST database. The sequence memory task is summarised in Fig. 6. A semi-repetitive sequence of 8 handwritten digits is delivered consecutively into the network in the same way as individual digits were delivered for the MNIST classification task. In addition to readout voltages, the network conductance is calculated from the output current. Using a sliding memory window, the earliest (first) digit is reconstructed from the memory features embedded in the conductance readout of subsequent digits. Figure 6 shows digit ‘7’ reconstructed using the readout features from the network corresponding to the following 3 digits, ‘5’, ‘1’ and ‘4’. See “Methods” for details.

Samples of a semi-repetitive 8-digit sequence (14751479) constructed from the MNIST dataset are temporally streamed into the NWN device through one input channel. A memory window of length L (L = 4 shown as an example) slides through each digit of the readouts from 2 channels (7 and 13) as well as the network conductance. In each sliding window, the first (earliest) digit is selected for recall as its image is reconstructed from the voltage of one readout channel (channel 7) and memory features embedded in the conductance time series of later L − 1 digits in the memory window. Linear reconstruction weights are trained by the online learning method and reconstruction quality is quantified using the structural similarity index measure (SSIM). The shaded grey box shows an example of the memory exclusion process, in which columns of conductance memory features are replaced by voltage readouts from another channel (channel 13) to demonstrate the memory contribution to image reconstruction (recall) of the target digit.

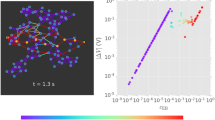

Figure 7a shows the network conductance time series and readout voltages for one of the digit sequence samples. The readout voltages exhibit near-instantaneous responses to high pixel intensity inputs, with dynamic ranges that vary distinctly among different channels. The conductance time series also exhibits a large dynamic range (at least 2 orders of magnitude) and, additionally, delayed dynamics. This can be attributed to recurrent loops (i.e., delay lines) in the NWN and to memristive dynamics determined by nano-scale electro-ionic transport. The delay dynamics demonstrate that NWNs retain the memory of previous inputs (see Supplementary Fig. S9 for an example showing the fading memory property of the NWN reservoir). Figure 7b shows the respective digit images and I − V curves for the sequence sample. The NWN is driven to different internal states as different digits are delivered to the network in sequence. While the dynamics corresponding to digits from the same class show some similar characteristics in the I − V phase space (e.g., digit ‘1’), they generally exhibit distinctive characteristics due to their sequence position. For example, the first instance of ‘4’ exhibits dynamics that explore more of the phase space than the second instance of ‘4’. This may be attributed to differences in the embedded memory patterns: the first ‘4’ is preceded by ‘91’, while the second ‘4’ is preceded by ‘51’, and ‘9’ and ‘5’ have distinctly different phase-space characteristics, which are themselves influenced by their sequence position as well as their uniqueness.

a Conductance time series (G) and readout voltages for one full sequence cycle during the sequence memory task. For better visualisation, voltage readout curves are smoothed by averaging over a moving window of length 0.05 s and values for channel 7 are magnified by ×10. b Corresponding digit images and memory patterns in I − V phase space.

Figure 8a shows the image reconstruction quality for each digit in the sequence as a function of memory window length. Structural similarity (SSIM) is calculated using a testing group of 500 sets, and the maximum values achieved after learning from 7000 training sets are presented (see Supplementary Fig. S10 for the learning curve for L = 4 and Supplementary Fig. S11 for the average SSIM across all digits). The best reconstruction results are achieved for digits ‘1’ and ‘7’, which are repeated digits with relatively simple structures. In contrast, digit ‘4’, which is also repeated but has a less simple structure, is reconstructed less faithfully. This indicates that the repeat digits produce memory traces that are not completely forgotten before each repetition (i.e., nano-filaments in memristive junctions do not completely decay). On average, the linear reconstructor is able to recall these digits better than the non-repeat digits. For the non-repeat digits (‘5’ and ‘9’), the reconstruction results are more interesting: digit ‘5’ is consistently reconstructed with the lowest SSIM, which correlates with its low classification accuracy (cf. Fig. 3c), while ‘9’ exhibits a distinctive jump from L = 4 to L = 5 (see also Fig. 8b). This reflects the contextual information used in the reconstruction: for L = 4, ‘9’ is reconstructed from the sub-sequence ‘147’, which is the same sub-sequence used for ‘5’, but for L = 5, ‘9’ is uniquely reconstructed from the sub-sequence ‘1475’, with a corresponding increase in SSIM. This is not observed for digit ‘5’; upon closer inspection, it appears that the reconstruction of ‘5’ suffers interference from ‘9’ (see Supplementary Fig. S12) due to the common sub-sequence ‘147’ and to the larger variance of ‘5’ in the MNIST dataset (which also contributes to its misclassification). A similar jump in SSIM is evident for the repeat digit ‘7’ from L = 2 to L = 3. For L = 3, the first instance of ‘7’ (green curve) is reconstructed from ‘51’, while the second instance (pink curve) is reconstructed from ‘91’, so the jump in SSIM from L = 2 may be attributed to digit ‘7’ leaving more memory traces in digit ‘1’, which has a simpler structure than either ‘9’ or ‘5’.
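The contextual argument above can be checked mechanically by listing the L − 1 digits that follow each target in the stream. The short Python sketch below is illustrative only: it assumes consecutive sequence samples are streamed back-to-back, so windows near the end of one cycle wrap into the next, and the function names are hypothetical.

```python
SEQUENCE = "14751479"  # one cycle of the semi-repetitive digit sequence


def future_context(seq: str, position: int, window: int) -> str:
    """Return the L - 1 digits after `position`.

    Assumption: sequence samples are streamed back-to-back, so the context
    wraps around into the next cycle.
    """
    n = len(seq)
    return "".join(seq[(position + k) % n] for k in range(1, window))


def contexts_by_digit(seq: str, window: int) -> dict:
    """Map each target digit to the list of contexts used to recall it."""
    contexts: dict = {}
    for position, digit in enumerate(seq):
        contexts.setdefault(digit, []).append(future_context(seq, position, window))
    return contexts


if __name__ == "__main__":
    for L in (2, 3, 4, 5):
        print(f"L = {L}:", contexts_by_digit(SEQUENCE, L))
```

For L = 4 it gives the context ‘147’ for both ‘5’ and ‘9’, whereas for L = 5 the contexts become ‘1479’ and ‘1475’, and for L = 3 the two instances of ‘7’ receive ‘51’ and ‘91’, in line with the sub-sequences discussed above.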

a Maximum SSIM for each digit in the sequence as a function of the memory window length after the network learned from 7000 training sets. The testing set consists of 500 sequence samples. Error bars indicate the standard error of the mean across the samples within each digit class. b An example of a target digit from the MNIST dataset and the reconstructed digits using memory windows of different lengths. c Maximum SSIM with respect to the number of columns excluded in the memory feature space. The results are averaged across all testing digits using L = 4, and the standard error of the mean is indicated by the shading. Dashed blue lines indicate when whole digits are excluded.

While the SSIM curves for each individual digit in the sequence increase only gradually with memory window length, their average (Supplementary Fig. S11) increases up to L = 5 and then saturates. This reflects the repetition length of the sequence.

Figure 8c shows the maximum SSIM, averaged over all reconstructed digits using L = 4 when memory is increasingly excluded from the online reconstruction. SSIM decreases as more columns of conductance features are excluded (replaced with memoryless voltage features). This demonstrates that the memory embedded in the conductance features enhances online learning by the reconstructor. In particular, the maximum SSIM plateaus when ~28 and ~56 columns (corresponding to whole digits) are excluded and decreases significantly when the number of columns excluded is approximately 14, 42 or 70, indicating most of the memory traces are embedded in the central image pixels.

Discussion

This study is the first to perform the MNIST handwritten digit classification benchmark task using an NWN device. In a previous study, Milano et al.65 simulated an NWN device and mapped the readouts to a ReRAM cross-point array to perform in materia classification (with a 1-layer neural network) of the MNIST digits, achieving an accuracy of 90.4%. While our experimental implementation is different, readouts from their simulated NWN device also exhibited diverse dynamics and distinct states in response to different digit inputs, similar to that observed in this study. Other studies using memristor cross-bar arrays as physical reservoirs achieved lower MNIST classification accuracies74,75. In contrast, NWN simulation studies achieved higher classification accuracies of ≃98% by either pre-processing the MNIST digits with a convolutional kernel62 or placing the networks into a deep learning architecture76.

In this study, the relatively high classification accuracy achieved with online learning (93.4%) can be largely attributed to the iterative algorithm, which is based on recursive least squares (RLS). Previous RC studies by Jaeger et al.77,78 suggested that RLS converges faster than least mean squares (similar to gradient-based batch methods), which tends to suffer more from numerical roundoff error accumulation, whereas RLS converges in a finite number of steps and uses the remaining training samples for fine-tuning69. This is evident in our results showing incremental learning of the weight matrix and is also corroborated by our mutual information analysis. While we performed online classification in an external digital layer, it may be possible to implement the online learning scheme in hardware using, for example, a cross-point array of regular resistors, which exhibit a linear (i.e., Ohmic) response. Such a system would then represent an end-to-end analogue hardware solution for efficient online dynamical learning in edge applications29,79. An all-analogue RC system was recently demonstrated by Zhong et al.30 using dynamic resistors as a reservoir and an array of non-volatile memristors in the readout module.

Other studies have exploited the structure of memristor cross-bar arrays to execute matrix–vector multiplication used in conventional machine learning algorithms for MNIST classification, both in experiment80,81 and simulation82,83, although crosstalk in memristor cross-bars limits the accuracy of classification implemented in this type of hardware80.

Beyond physical RC, unconventional physical systems like NWNs could potentially be trained with backpropagation to realise more energy-efficient machine learning than is currently possible with existing software and hardware accelerator approaches84. Furthermore, a related study by Loeffler et al.85 (see also refs. 86,87) demonstrates how the internal states of NWNs can be controlled by external feedback to harness NWN working memory capacity and enable cognitive tasks to be performed.

Information-theoretic measures like mutual information (MI) have been widely used to assess the intrinsic dynamics in random Boolean networks88,89, Ising models90, and the learning process of echo state networks91 as well as artificial neural networks (ANNs)92. In a previous simulation study62,64, we found that transfer entropy and active information storage in NWNs reveal that specific parts of the network exhibit richer information dynamics during learning tasks, and we proposed a scheme for optimising task performance accordingly. However, such element-wise calculations are not feasible for physical NWN hardware devices because the number of readouts from the system is limited by the size of the MEA. In this study, we applied a similar approach to that used in Shine et al.92 to estimate the information content of ANNs at different stages during the MNIST classification task. They found unequal credit assignment, with some image pixels, as well as specific neurons and weights in the ANN, contributing more to learning than others. In our case, by investigating the information content embedded in the NWN readouts, we found that the learning process synchronises with the information provided by the dataset in the temporal domain, while each readout channel provides distinct information about different classes. Interestingly, we also observed some indication of channel preference for a specific digit class, which could potentially be further exploited for channel-wise tuning for other learning tasks.

The sequence memory task introduced in this study is novel and demonstrates both online learning and sequence memory recall from the memory patterns embedded in NWN dynamics. In the brain, memory patterns are linked with network attractor states93. The brain’s neural network is able to remember sequence inputs by evolving the internal states to fixed points that define the memory pattern for the sequence94. In this study, we also found basins of attraction for the individual digits in the sequence, which allowed us to reconstruct the target digit image as a way of recalling the associated memory pattern. Delayed dynamics similar to that observed in the conductance time series of NWNs were also utilised by Voelker et al.95 to build spiking recurrent neural networks96 and implement memory-related tasks. In their studies, the delayed dynamics and memory are implemented in software-based learning algorithms, while NWNs are able to retain memory in hardware due to the memristive junctions and recurrent structure33. A similar study by Payvand et al.97 demonstrated sequence learning using spiking recurrent neural networks implemented in ReRAM to exploit the memory property of this resistive memory hardware. Although their sequence was more repetitive than ours and task performance is measured differently, they demonstrated improved performance when network weights were allowed to self-organise and adapt to changing input, similar to physical NWNs. Future potential applications like natural language processing and image analysis may be envisaged with NWN devices that exploit their capability of learning and memorising dynamic sequences. Future computational applications of NWNs may be realised under new computing paradigms grounded in observations and measurements of physical systems beyond the Turing Machine concept98.

In conclusion, we have demonstrated how neuromorphic nanowire network devices can be used to perform tasks in an online manner, learning from the rich spatiotemporal dynamics generated by the physical neural-like network. This is fundamentally different from data-driven statistical machine learning using artificial neural network algorithms. Additionally, our results demonstrate how online learning and recall of streamed sequence patterns are linked to the associated memory patterns embedded in the spatiotemporal dynamics.

Methods

Experimental setup

An NWN device, as shown in Fig. 9, was fabricated and characterised following the procedure developed in our previous studies34,35,36,49,59. Briefly, a multi-electrode array (MEA) device with 16 electrodes (4 × 4 grid) was fabricated as the substrate of the device using photolithographically patterned Cr/Ti (5 nm) and Pt (150 nm). Selenium nanowires were first formed by hydrothermal reduction of sodium selenite. Ag2Se nanowires were then synthesised by redispersing Se nanowires in a solution of silver nitrate (AgNO3). The resulting nanowire solution was drop-cast over the inner electrodes of the MEA to form the nanowire network (see Supplementary Fig. S1 for SEM images of the NWN without electrodes and Supplementary Fig. S2 for a simulated NWN as well as its corresponding graph representation). A data acquisition device (PXI-6368) was employed to deliver electric signals to the network and simultaneously read out the voltage time series from all electrodes. A source measurement unit (PXI-4141) was used to collect the current time series through the grounded electrode. A switch matrix (TB-2642) was used to modularly route all signals through the network as desired. All the equipment listed above was from National Instruments and controlled by a custom-made LabVIEW package designed for these applications49,59,99. The readout voltage data exhibited non-uniform phase shifts of 10–100 Δt relative to the input stream, so a phase correction method was applied to prepare the readout data for further use (see details in the following section).

a Optical image of the multi-electrode array. Input/output are enabled by the outer electrodes. b Scanning electron microscopy (SEM) image of the Ag2Se network. 16 inner electrodes are fabricated as a 4 × 4 grid and the nanowires are drop-cast on top of them. Scale bar: 100 μm. c Zoom-in of the SEM image for electrodes 0–3. Scale bar: 100 μm. d Zoom-in for electrode 0. Scale bar: 20 μm. e Zoom-in for electrode 3. Scale bar: 20 μm.

Online learning

Learning tasks were performed under a reservoir computing (RC) framework58,59,62,99. With N digit samples used for training, the respective pixel intensities were normalised to [0.1, 1] V as input voltage values and denoted by \(\mathbf{U}\in\mathbb{R}^{N\times 784}\) for future reference. U was then converted to a 1-D temporal voltage pulse stream and delivered to an input channel while another channel was grounded. Each voltage pulse occupied Δt = 0.001 s in the stream. Voltage features were read simultaneously from M other channels on the device (see Supplementary Fig. S3 for device setups). These temporal readout features were normalised and rearranged into a 3-D array, \(\mathbf{V}\in\mathbb{R}^{N\times M\times 784}\).
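As a minimal sketch of this encoding step, the Python code below maps 8-bit pixel intensities linearly onto [0.1, 1] V and concatenates the digits into one pulse stream with one pulse of width Δt = 0.001 s per pixel. The linear mapping, the 0–255 input range, the row-major flattening and the function names are assumptions for illustration, not the authors' acquisition code.

```python
V_MIN, V_MAX = 0.1, 1.0  # input voltage range in volts (from the text)
DT = 0.001               # duration of one pixel pulse in seconds (from the text)
PIXELS_PER_DIGIT = 784   # 28 x 28 MNIST image, flattened row by row (assumed)


def pixels_to_voltages(image, v_min=V_MIN, v_max=V_MAX):
    """Assumption: linearly map 8-bit intensities (0-255) onto [v_min, v_max] volts."""
    if len(image) != PIXELS_PER_DIGIT:
        raise ValueError("expected a flattened 28 x 28 image")
    return [v_min + (v_max - v_min) * p / 255.0 for p in image]


def build_pulse_stream(images):
    """Concatenate digits into one 1-D stream.

    Digit n occupies samples n*784 ... n*784 + 783; returns (times, voltages).
    """
    voltages = []
    for image in images:
        voltages.extend(pixels_to_voltages(image))
    times = [k * DT for k in range(len(voltages))]
    return times, voltages
```

With this layout, each digit lasts 784 × Δt = 0.784 s in the stream, which is what makes the per-digit segmentation in the following steps straightforward.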

The phase of the readout voltage data (V) was adjusted per instance in the dataset based on the corresponding input using cross-correlation100. For the n-th digit sample, the respective segment in the input pulse stream was denoted as \(\mathbf{u}_n\in\mathbb{R}^{784\times 1}\), and the corresponding dynamical features from the M readout channels were represented by \([\mathbf{v}_{n,1},\mathbf{v}_{n,2},\ldots,\mathbf{v}_{n,M}]\), where \(\mathbf{v}_{n,m}\in\mathbb{R}^{784\times 1}\). The cross-correlation of \(\mathbf{u}_n\) and \(\mathbf{v}_{n,m}\) is calculated as:
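
\[ R_{n,m}(\tau)=\sum_{t} u_n(t)\,v_{n,m}(t+\tau) \tag{1} \]

for τ = −783, −782, … , 0, … , 783, where the sum runs over the time steps t at which both \(u_n(t)\) and \(v_{n,m}(t+\tau)\) lie within the 784-sample segment. The phase difference ϕ is determined by:

\[ \phi_{n,m}=\arg\max_{\tau} R_{n,m}(\tau) \tag{2} \]
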
The 1-D phase adjustment was applied to the readout feature \(\mathbf{v}_{n,m}\) of the instance based on the phase difference \(\phi_{n,m}\).
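A plain-Python sketch of the phase correction is given below. It assumes the convention R(τ) = Σ_t u(t) v(t + τ), so a positive maximising lag means the readout lags the input, and it pads the shifted readout with zeros; the padding rule and function names are hypothetical, since the text does not specify them.

```python
def cross_correlation(u, v):
    """Full cross-correlation R(tau) = sum_t u[t] * v[t + tau] for every lag tau.

    For two 784-sample segments this covers tau = -783, ..., 783.
    """
    n = len(u)
    if len(v) != n:
        raise ValueError("input and readout segments must have the same length")
    corr = {}
    for tau in range(-(n - 1), n):
        # only time steps where both u[t] and v[t + tau] exist contribute
        t_start, t_stop = max(0, -tau), min(n, n - tau)
        corr[tau] = sum(u[t] * v[t + tau] for t in range(t_start, t_stop))
    return corr


def phase_difference(u, v):
    """Lag that maximises the cross-correlation (positive: v lags u)."""
    corr = cross_correlation(u, v)
    return max(corr, key=corr.get)


def align(v, phi, fill=0.0):
    """Shift v by -phi samples so it lines up with the input.

    Assumption: the vacated samples are padded with `fill` (zero by default).
    """
    n = len(v)
    if phi >= 0:
        return v[phi:] + [fill] * phi
    return [fill] * (-phi) + v[: n + phi]
```

The double loop costs O(784²) operations per segment; a vectorised routine such as NumPy's `numpy.correlate(..., mode="full")` would be faster, but its argument order and lag convention should be checked against the definition used here.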

The NWN device readouts embed dynamical features that are linearly separable, so classification can be performed in a linear output layer:

\[ \mathbf{Y}=\mathbf{W}\mathbf{A} \tag{3} \]

where W is the weight matrix (i.e., classifier), A is the readout feature space and Y contains the sample classes. An online method was implemented based on Greville’s iterative algorithm for computing the pseudoinverse of linear systems101. This method is also a special case of the recursive least squares (RLS) algorithm69, using a uniform sample weighting factor of λ = 1.

The sample feature space was denoted by \(\mathbf{A}=[\mathbf{a}_1,\mathbf{a}_2,\ldots,\mathbf{a}_N]\in\mathbb{R}^{K\times N}\), in which each column \(\mathbf{a}_n\) represented one sample and every sample was composed of K features (K = 784M). The corresponding classes for each sample were \(\mathbf{Y}=[\mathbf{y}_1,\mathbf{y}_2,\ldots,\mathbf{y}_N]\in\mathbb{R}^{10\times N}\). The order of the columns in A and Y was randomly shuffled to smooth the learning curve. During training, the feature vector \(\mathbf{a}_n\) of the n-th digit sample and its corresponding class vector \(\mathbf{y}_n\) were appended as new columns on the right of the respective matrices, forming \(\mathbf{A}_n\) and \(\mathbf{Y}_n\), and the algorithm solved eqn. (3) for W (\(\mathbf{W}\in\mathbb{R}^{10\times K}\)) incrementally. The difference between the target \(\mathbf{y}_n\) and the projected result using the previous weight matrix \(\mathbf{W}_{n-1}\) was described by:

\[ \mathbf{e}_n=\mathbf{y}_n-\operatorname{softmax}(\mathbf{W}_{n-1}\mathbf{a}_n) \tag{4} \]

When \(\|\mathbf{e}_n\|\) was below a preset threshold \(e'=0.1\), W was updated by:

where

with

For the cases when \(\|\mathbf{e}_n\|\) was above the threshold, an error-correction scheme was applied to optimise the result68. In addition, A, Y and θ were initialised at n = 0 by:

with \(\epsilon=\overline{|\mathbf{A}|}\).
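For illustration, the online update can be sketched with the textbook recursive least squares (RLS) recursion using a forgetting factor of λ = 1. This is an assumption-laden sketch rather than the authors' implementation: it omits the softmax, the error threshold e′ and the error-correction scheme, and it initialises θ as I/δ (a common RLS choice) instead of the ε-based initialisation used in the paper. The class name and the `delta` parameter are hypothetical; `update` also returns the mean magnitude of the weight change for the step, the kind of quantity plotted as the learning curve in Fig. 4a.

```python
class OnlineLinearReadout:
    """Recursive least squares (forgetting factor 1) for the linear readout Y = W A.

    Assumptions: no softmax, no error threshold or error correction, and
    theta_0 = I / delta as the initial inverse-correlation estimate.
    """

    def __init__(self, n_features, n_outputs, delta=1e-3):
        # W starts at zero; theta tracks (A A^T + delta I)^(-1) as samples arrive
        self.W = [[0.0] * n_features for _ in range(n_outputs)]
        self.theta = [
            [1.0 / delta if i == j else 0.0 for j in range(n_features)]
            for i in range(n_features)
        ]

    def predict(self, a):
        """Linear readout W a for one feature vector a."""
        return [sum(w * x for w, x in zip(row, a)) for row in self.W]

    def update(self, a, y):
        """Absorb one column pair (a_n, y_n); return the mean |Delta W| for this step."""
        theta_a = [sum(t * x for t, x in zip(row, a)) for row in self.theta]
        denom = 1.0 + sum(x * ta for x, ta in zip(a, theta_a))
        gain = [ta / denom for ta in theta_a]                    # k_n
        error = [yi - pi for yi, pi in zip(y, self.predict(a))]  # e_n = y_n - W_{n-1} a_n
        total_change = 0.0
        for i, e in enumerate(error):
            for j, g in enumerate(gain):
                self.W[i][j] += e * g                            # W_n = W_{n-1} + e_n k_n^T
                total_change += abs(e * g)
        for i, g_i in enumerate(gain):
            for j, ta_j in enumerate(theta_a):
                # theta_n = theta_{n-1} - k_n (theta_{n-1} a_n)^T
                self.theta[i][j] -= g_i * ta_j
        return total_change / (len(error) * len(gain))
```

Each step costs O(K²) time and memory for θ, which for K = 784M features is the main practical cost of this kind of online readout.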

Mutual information

To gain deeper insight into the network’s behaviour and attribute real-time learning to its dynamics, mutual information (MI) between the dynamical features and the corresponding classes was calculated to estimate the information content in a way similar to a previous study on ANNs92. All MI results were calculated using the Java Information Dynamics Toolkit (JIDT)102. MI was estimated spatially based on the pixel positions from different readout channels and temporally as the feature space expanded when more samples were learned. Among the N digit samples delivered to the network, an ensemble was created using the readout data from channel m at the i-th pixel position: \(\mathbf{V}_{m,i}=[v_{1,m,i},v_{2,m,i},\ldots,v_{N,m,i}]\in\mathbb{R}^{1\times N}\). A class vector \(\mathbf{P}\in\mathbb{R}^{1\times N}\) holding the digit class of each sample was created, and mutual information was estimated accordingly by:

where \(\Omega_{\mathrm{MI}}\) denotes the mutual information operator, evaluated using the Kraskov estimator103.

A 3-D matrix \(\boldsymbol{\mathcal{M}}\in\mathbb{R}^{N\times M\times 784}\) was generated by computing MI spatiotemporally across V. \(\boldsymbol{\mathcal{M}}\) was averaged across the pixel (third) axis to obtain the temporal mutual information per channel. The spatial analysis of mutual information was based on the calculation result for the whole dataset. The class-wise interpretation of \(\boldsymbol{\mathcal{M}}\) was generated by averaging across samples corresponding to each digit class.
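The text uses the Kraskov estimator in JIDT; as a simpler stand-in that needs no external library, the sketch below uses a histogram (binned plug-in) estimator of the MI between one readout feature and the digit labels, then averages over pixel positions per channel as in Fig. 4b. The binning scheme, the number of bins and the function names are assumptions; binned estimators are biased for small sample counts, so numbers from this sketch are not directly comparable with Kraskov estimates.

```python
import math
from collections import Counter


def binned_mutual_information(values, labels, n_bins=8):
    """Plug-in MI estimate (in bits) between one continuous feature and class labels.

    Swapped-in technique: equal-width binning, not the Kraskov estimator.
    values[n] is the readout at a fixed channel and pixel position for sample n;
    labels[n] is the digit class of that sample.
    """
    if len(values) != len(labels) or not values:
        raise ValueError("need equal-length, non-empty values and labels")
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # a constant feature falls into a single bin
    bins = [min(int((v - lo) / width), n_bins - 1) for v in values]
    n = len(values)
    joint = Counter(zip(bins, labels))
    bin_counts, label_counts = Counter(bins), Counter(labels)
    mi = 0.0
    for (b, c), count in joint.items():
        p_joint = count / n
        mi += p_joint * math.log2(p_joint / ((bin_counts[b] / n) * (label_counts[c] / n)))
    return mi


def channel_mutual_information(readouts, labels, n_bins=8):
    """Average the per-pixel MI over all pixel positions of one channel.

    readouts[n][i] is the readout of sample n at pixel position i.
    """
    n_pixels = len(readouts[0])
    total = sum(
        binned_mutual_information([r[i] for r in readouts], labels, n_bins)
        for i in range(n_pixels)
    )
    return total / n_pixels
```

Computing this for growing prefixes of the shuffled sample stream would give a temporal MI curve analogous in spirit to Fig. 4b, under the same caveats about the estimator.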

Sequence memory task

A sequence-based memory task was developed to investigate sequence memory and recall. Samples of an 8-digit sequence with a semi-repetitive pattern (14751479) were constructed by randomly sampling the respective digits from the MNIST dataset. Input pixel intensities were normalised to the range [0, 0.1] V, and the samples were streamed into and read out from the NWN in the same way as in the classification task, using channels 9, 8 and 7 for input, ground and readout, respectively. In addition to dynamical features from the voltage readouts, memory features were taken from the network conductance, calculated pixel-wise by:

\[ G(t)=\frac{\mathcal{I}(t)}{U(t)} \]

where \(\mathcal{I}\) is the current leaving the ground channel and U is the input voltage.

To test recall, a digit from the sequence was selected and its image was reconstructed from voltage readouts and memory features in the conductance time series corresponding to digits later in the sequence. A variable memory window of length L ∈ [2, 8] determines the sequence portion used to reconstruct a previous digit image, i.e., from L − 1 subsequent digits. For example, a moving window of length L = 4 reconstructs the first (target) digit from the conductance memory features in the subsequent 3 digits (cf. Fig. 6). By placing the target digits and memory features into ensembles, a dataset of 7000 training samples and 500 testing samples was composed using the sliding windows.
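The sliding-window dataset construction can be sketched as follows. It assumes the window advances by one digit at a time and that each digit contributes one flattened conductance feature vector; the voltage features from channel 7 that the reconstruction also uses are left out for brevity, and the function name is hypothetical.

```python
def build_recall_dataset(images, memory_features, window):
    """Pair each target image with the memory features of the L - 1 digits after it.

    images[k] and memory_features[k] belong to the k-th streamed digit; each
    returned item is (feature_vector, target_image) for one sliding window.
    Assumption: the window advances by one digit per step.
    """
    if window < 2:
        raise ValueError("the memory window must span at least two digits")
    if len(images) != len(memory_features):
        raise ValueError("one feature vector is needed per streamed digit")
    pairs = []
    for start in range(len(images) - window + 1):
        features = []
        for k in range(start + 1, start + window):
            features.extend(memory_features[k])  # conductance features of later digits
        pairs.append((features, list(images[start])))  # first digit in window = target
    return pairs
```

Each feature vector has (L − 1) × 784 entries, so longer windows trade a larger feature space for more contextual memory.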

To reconstruct each target digit image, the same linear online learning algorithm used for MNIST classification was applied. In this case, Y in eqn. (3) was composed as \(\mathbf{Y}=[\mathbf{y}_1,\mathbf{y}_2,\ldots,\mathbf{y}_N]\in\mathbb{R}^{784\times N}\), and softmax in eqn. (4) was no longer used. The structural similarity index measure (SSIM)104 was employed to quantify the reconstruction quality.
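SSIM is normally computed over local sliding (often Gaussian-weighted) windows and then averaged, for example by `skimage.metrics.structural_similarity` in scikit-image. The sketch below implements only the simpler single-window (global) form on flattened images, with the commonly used constants k₁ = 0.01 and k₂ = 0.03; it is an illustrative approximation, not the exact metric behind the reported SSIM values, and the function name is hypothetical.

```python
def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two flattened images with values in [0, data_range].

    Simplification: one global window instead of local sliding windows.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("images must have the same number (at least 2) of pixels")
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2  # stabilising constants
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure
```

Identical images give SSIM = 1, while an intensity-inverted image gives a negative value because the covariance term changes sign.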

To further test that image reconstruction exploits memory features and not just dynamical features associated with the spatial pattern of the sequence (i.e., sequence classification), a memory exclusion test was developed as follows. The conductance features corresponding to a specified number of columns of inputs were replaced by voltage features from channel 13 (voltages are adjusted to the same scale as conductance) so that the memory in conductance is excluded without losing the non-linear features in the readout data (cf. Fig. 6). The target digit was then reconstructed for a varying number of columns with memory exclusion.
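A sketch of the memory exclusion step is given below. It assumes the memory features of the L − 1 subsequent digits are laid side by side as a 28-row image (28 × 28(L − 1) columns), that excluded columns are counted from the left, and that "adjusted to the same scale" means min–max rescaling of the channel-13 voltages onto the conductance range. These choices and the function names are hypothetical.

```python
def rescale_to(reference, values):
    """Assumption: min-max rescale a 2-D list `values` onto the range of `reference`."""
    ref = [x for row in reference for x in row]
    val = [x for row in values for x in row]
    r_lo, r_hi = min(ref), max(ref)
    v_lo, v_hi = min(val), max(val)
    if v_hi == v_lo:
        return [[r_lo for _ in row] for row in values]
    scale = (r_hi - r_lo) / (v_hi - v_lo)
    return [[r_lo + (x - v_lo) * scale for x in row] for row in values]


def exclude_memory_columns(conductance, voltage, n_columns):
    """Replace the first n_columns columns of conductance features with voltage features.

    Both inputs are 2-D lists (rows x columns) of identical shape.
    """
    if len(conductance) != len(voltage) or any(
        len(g) != len(v) for g, v in zip(conductance, voltage)
    ):
        raise ValueError("conductance and voltage feature maps must have the same shape")
    if not 0 <= n_columns <= len(conductance[0]):
        raise ValueError("n_columns out of range")
    scaled = rescale_to(conductance, voltage)
    return [s_row[:n_columns] + list(g_row[n_columns:]) for s_row, g_row in zip(scaled, conductance)]
```

Under this layout, excluding 28 or 56 columns removes whole memory digits for L = 4, which is where the plateaus in Fig. 8c occur.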