Abstract

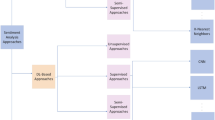

The last decade has witnessed the proliferation of automated content analysis in communication research. However, existing computational tools have been taken up unevenly: powerful deep learning algorithms such as transformers are applied far less often than lexicon-based dictionaries. To enable social scientists to adopt modern computational methods for valid and reliable sentiment analysis of English text, we propose an open and free web service named transformer- and lexicon-based sentiment analysis (TLSA). TLSA integrates diverse tools and offers validation metrics, empowering users with limited computational knowledge and resources to reap the benefits of state-of-the-art computational methods. Two cases demonstrate the functionality and usability of TLSA. The performance of the tools varied considerably across datasets, underscoring the importance of validating sentiment tools in each specific context.
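To make the idea of context-specific validation concrete, the following is a minimal sketch of how the outputs of several sentiment tools might be compared against human-coded labels. It is not TLSA's implementation: the abstract does not say which metrics TLSA reports, so accuracy and Cohen's kappa are assumed here for illustration, and the tool names, labels, and helper functions are all hypothetical.

```python
from collections import Counter


def accuracy(human, tool):
    """Share of documents where the tool label matches the human label."""
    return sum(h == t for h, t in zip(human, tool)) / len(human)


def cohens_kappa(human, tool):
    """Chance-corrected agreement between two label sequences."""
    n = len(human)
    observed = accuracy(human, tool)
    human_counts = Counter(human)
    tool_counts = Counter(tool)
    # Agreement expected by chance, from each coder's marginal label shares.
    expected = sum(human_counts[c] * tool_counts[c] for c in human_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both sequences consist of one identical label
    return (observed - expected) / (1 - expected)


# Hypothetical human-coded labels for six documents (assumption).
human = ["pos", "neg", "neu", "pos", "neg", "pos"]

# Hypothetical outputs of two tools on the same documents (assumption).
outputs = {
    "lexicon_tool": ["pos", "neu", "neu", "pos", "pos", "pos"],
    "transformer_tool": ["pos", "neg", "neu", "pos", "neg", "neg"],
}

scores = {
    name: (accuracy(human, labels), cohens_kappa(human, labels))
    for name, labels in outputs.items()
}

for name, (acc, kappa) in scores.items():
    print(f"{name}: accuracy={acc:.2f}, kappa={kappa:.2f}")
```

Running the same comparison on a second dataset would show whether the ranking of tools holds or reverses, which is the kind of dataset dependence the abstract reports.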

Data availability

The datasets analyzed during the current study are available in our BitBucket repository, https://bitbucket.org/leecwwong/tlsa_webservice_public/src/master/paper_datasets/.

Notes

Source code is accessible through https://bitbucket.org/leecwwong/tlsa_webservice_public/.

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

About this article

Cite this article

Zhao, X., Wong, CW. Automated measures of sentiment via transformer- and lexicon-based sentiment analysis (TLSA). J Comput Soc Sc (2023). https://doi.org/10.1007/s42001-023-00233-8

DOI: https://doi.org/10.1007/s42001-023-00233-8