Abstract

The performance gains of CMOS computers increasingly fail to keep pace with the enormous data-processing demands of artificial intelligence. Memristive neural networks are among the most promising hardware candidates for a breakthrough. This paper proposes a novel memristive synaptic circuit composed of four MOS transistors and one memristor (4T1M). The 4T1M synaptic circuit provides flexible control strategies either to change the memristance or to respond to the input signal. Using the 4T1M synaptic circuit as the cell of a memristive crossbar array, and following the structure and algorithm of the back-propagation (BP) neural network, this paper proposes a circuit design for a memristive crossbar-based BP neural network. By reusing the 4T1M memristive crossbar array, the computations of both the forward-propagation and back-propagation processes of the BP neural network are carried out on the memristive crossbar-based circuit, accelerating computation. Because the 4T1M memristive crossbar array can change the memristance of all its cells at once, the memristive crossbar-based BP neural network realizes synchronous memristance adjustment. The proposed network is evaluated through experiments on XOR logic operation, iris classification, and MNIST handwritten digit recognition, where it requires fewer iterations or achieves higher classification accuracies. Further, comprehensive comparisons with existing memristive BP neural networks highlight the advantages of the proposed design, which achieves the fastest memristance adjustment speed while using relatively few components.


Introduction

With the rapid development of artificial intelligence, the amount of data to be processed is growing explosively, so substantially improving data-processing performance has become an urgent problem. CMOS computers are the current hardware platform for artificial intelligence data processing. They adopt the von Neumann architecture, in which the CPU and memory constantly exchange data, and this data traffic is a bottleneck for improving the performance of CMOS computers. In addition, Moore's law, which describes the growth of CMOS integration density, is gradually reaching its limit, further restricting performance improvement. Hence, there is a pressing need for emerging hardware technologies that can deliver significant performance improvements [1, 2].

The memristive neural network is a promising emerging hardware technology for breaking through the data-processing dilemma of CMOS computers. Based on Ohm's law and Kirchhoff's law, the mathematical models of artificial intelligence are mapped to circuit implementations of memristive neural networks, so the computations in these models can be carried out directly on the circuit hardware. Therefore, data processing is expedited remarkably. Additionally, by leveraging the properties of the memristor, such as adjustable memristance, non-volatility, and nanoscale size, memristive neural networks enable in-memory computing and large-scale parallel computing [3, 4].

Artificial neural networks are a key branch of artificial intelligence [5,6,7], and deep neural networks, an important class of artificial neural networks, have become a research hotspot [8,9,10,11]. The back-propagation (BP) neural network is the fundamental prototype for implementing deep neural networks, so the circuit design of a memristive BP neural network provides a solid foundation for constructing memristive deep neural networks. Several memristive BP neural network circuits have been designed to accomplish various tasks.

A memristive bridge circuit is proposed in [12,13,14] as the synaptic circuit. Based on this synaptic circuit, memristive BP neural networks are constructed for tasks such as distinguishing car images and realizing three-bit parity. Memristive circuits with a crossbar structure offer manufacturing advantages and enable high-density integration. In [15,16,17], a memristive crossbar array is used as the synaptic circuit, with each cell consisting of a single memristor; the resulting memristive neural networks are trained with a simplified BP algorithm to perform character recognition. In the memristive neural network of [18], every cell of the memristive crossbar array combines one memristor with an additional sign control circuit so that it can represent a positive or negative synaptic weight; as a result, each cell requires twelve transistors and three memristors. In the memristive neural networks of [19,20,21,22,23], each cell of the memristive crossbar array is also a single memristor, and two such memristors located in different crossbar arrays together form one synaptic circuit.

Crossbar arrays consisting purely of memristors suffer severely from the sneak path issue. To relieve the influence of sneak paths, hybrid CMOS-memristor crossbar structures are adopted in the circuit design of memristive neural networks. The memristive crossbar arrays in [24,25,26,27,28] use a one-transistor-one-memristor structure, and two cells at corresponding positions in two identical crossbar arrays act as one synaptic circuit. The corresponding memristive neural networks are trained to perform tasks such as data processing and image compression. In the memristive neural networks of [29, 30], each cell of the crossbar array consists of two MOS transistors and one memristor and acts as the synaptic circuit.

However, existing memristive BP neural networks have several shortcomings. For a memristive BP neural network, one iteration can be divided into a forward-propagation process, a back-propagation process, and a memristance adjustment stage. The synaptic weight variations are calculated in the forward-propagation and back-propagation processes. In the memristance adjustment stage, the synaptic weight variations are mapped to memristance variations, and the memristors in the crossbar array are then adjusted to the corresponding target memristances. Existing memristive crossbar-based BP neural networks cannot adjust the memristance of all memristors at once in this stage; that is, they cannot realize synchronous memristance adjustment. The memristive neural networks in [19,20,21,22,23,24,25,26,27,28] can change the memristance of only one row of memristors at a time, so the memristance adjustment stage takes considerable time. The memristive neural networks in [15,16,17,18] can change the memristance of only one memristor at a time, so the adjustment stage takes even longer. Additionally, some existing memristive neural networks implement only the forward-propagation process of the BP neural network on hardware and omit the circuit design for the back-propagation process [12,13,14, 20, 22].

In particular, the structure of the synaptic circuit in [12,13,14] restricts the synaptic weight to the interval (− 1,1), which limits the corresponding memristive neural networks to a few applications. The synaptic circuit in [29, 30] cannot represent zero or negative weights with a passive physical memristor. Moreover, some circuit designs do not align with the computations of the BP neural network: the designs in [18] handle only scalar-level computations, whereas the BP neural network requires multiplications between matrices and vectors. Because matrix-vector computation differs from scalar-level computation, the circuit designs in [18] are ill-suited to BP neural networks.

This paper proposes a novel four-transistor, one-memristor (4T1M) circuit that acts as the synaptic circuit. The synaptic circuit can properly represent positive, zero, and negative weights, and the range over which the synaptic weight varies can be adjusted. The 4T1M circuit serves as the cell of the 4T1M memristive crossbar array, on which the circuit design of the memristive crossbar-based BP neural network is then built. Compared with existing studies, the proposed memristive circuits offer the following main innovations and contributions.

1) This paper proposes a novel 4T1M synaptic circuit that responds effectively to analog signals and can be controlled to adjust its memristance flexibly.

2) By reusing the 4T1M memristive crossbar array, the matrix-vector computations of both the forward-propagation and back-propagation processes are carried out on the circuit hardware, accelerating computation.

3) In the memristance adjustment stage, the proposed memristive crossbar-based BP neural network changes the memristance of all memristors at once, realizing synchronous memristance adjustment. Its memristance adjustment speed is therefore the fastest among the compared memristive BP neural networks, which notably improves the training speed.

This paper proceeds as follows. “BP neural network” Section introduces the multilayer neural network and the iteration rules of BP algorithm. “Neuron circuit” Section presents the circuit design of the synaptic circuit, and analyzes its input signal response and memristance change. “Memristive crossbar-based BP neural network” Section presents the circuit design of the memristive neural network, analyzing the forward- and back-propagation processes and the synchronous weight training. “Memristive XOR network”, “Memristive iris classification network”, and “Memristive handwritten digit recognition network” Sections describe the circuit designs of the memristive multilayer neural networks to realize XOR logic operation, iris classification, and MNIST handwritten digit recognition, respectively. “Discussion” Section gives the comparisons of different memristive neural networks. Conclusions are drawn in “Conclusion” Section.

BP neural network

The BP neural network, which is trained by the BP algorithm, is an elementary prototype for constructing deep neural networks. During training, one iteration consists of a forward-propagation process and a back-propagation process. In the forward-propagation process, the corresponding computations are given by

where \(\varvec{y}^{m+1}\) represents the output vector of layer \(m+1\), \(\varvec{W}^{m+1}\) is the synaptic weight matrix of layer \(m+1\), \(\varvec{y}^{m}\) denotes the output vector of layer m, which is also the input vector of layer \(m+1\), \(\varvec{b}^{m+1}\) represents the bias vector of layer \(m+1\), \(\varvec{f}^{m+1}(.)\) is the activation function, and \(\varvec{x}^{m+1}\) denotes the summation result vector.
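The layer recursion implied by these definitions, \(\varvec{x}^{m+1} = \varvec{W}^{m+1}\varvec{y}^{m} + \varvec{b}^{m+1}\) followed by \(\varvec{y}^{m+1} = \varvec{f}^{m+1}(\varvec{x}^{m+1})\), can be sketched in plain Python. This is a minimal illustration, not the paper's implementation: it assumes a sigmoid activation (the function later used for the XOR network) and stores matrices as lists of rows; the function names are hypothetical.

```python
import math


def sigmoid(x):
    """Logistic activation f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(W, y_prev, b, f=sigmoid):
    """One layer: x^{m+1} = W^{m+1} y^m + b^{m+1}, then y^{m+1} = f(x^{m+1})."""
    x = [sum(w_ij * y_j for w_ij, y_j in zip(row, y_prev)) + b_i
         for row, b_i in zip(W, b)]
    y = [f(x_i) for x_i in x]
    return x, y


def network_forward(weights, biases, y0, f=sigmoid):
    """Apply the recursion layer by layer; keep every x^m and y^m for back-propagation."""
    xs, ys = [], [list(y0)]
    for W, b in zip(weights, biases):
        x, y = layer_forward(W, ys[-1], b, f)
        xs.append(x)
        ys.append(y)
    return xs, ys
```

Keeping all intermediate \(\varvec{x}^{m}\) and \(\varvec{y}^{m}\) matters because the back-propagation process reuses them.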

The BP neural network in this paper uses the cross-entropy loss function, which can be represented as

where \(\varvec{d}\) represents the desired label vector and \(\varvec{y}^{M}\) denotes the output vector of the multilayer neural network.

The BP neural network adopts stochastic gradient descent to minimize the loss function. In the back-propagation process, the weight update rules are given by

where \(\varvec{W}^{m+1}\)(k) and \(\varvec{W}^{m+1}\)(k+1) are the synaptic weight matrices at the kth and \((k+1)\)th iterations, respectively, and \(\beta \) is the learning rate. The chain rule for the BP sensitivities can be represented as

where \(\varvec{\dot{F}^m} (\varvec{x}^{\varvec{m}})\) represents the derivative of the activation function of layer m, \(\varvec{s}^{m+1}\) denotes the sensitivity vector of layer \(m+1\), \(\lambda \) is a constant, and \(\varvec{s}^{M}\) is the sensitivity vector of the output layer.
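Taken together, the update rules and the sensitivity chain can be sketched as one stochastic-gradient step. This sketch assumes a sigmoid activation and the binary cross-entropy \(-\sum_i [d_i \ln y_i^M + (1-d_i)\ln(1-y_i^M)]\), for which the output sensitivity reduces to \(\varvec{s}^{M} = \varvec{y}^{M} - \varvec{d}\); the constant \(\lambda \) is exposed as a hypothetical `lam` multiplier on \(\varvec{s}^{M}\) because its exact role and value are not recoverable here. All helper names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(weights, biases, y0):
    """Return [y^0, y^1, ..., y^M] with y^{m+1} = sigmoid(W^{m+1} y^m + b^{m+1})."""
    ys = [list(y0)]
    for W, b in zip(weights, biases):
        x = [sum(w * y for w, y in zip(row, ys[-1])) + bi for row, bi in zip(W, b)]
        ys.append([sigmoid(xi) for xi in x])
    return ys


def cross_entropy(d, y):
    """Binary cross-entropy summed over the outputs (assumed form of the loss)."""
    return -sum(di * math.log(yi) + (1 - di) * math.log(1 - yi) for di, yi in zip(d, y))


def bp_step(weights, biases, y0, d, beta, lam=1.0):
    """One SGD iteration. weights[m] holds W^{m+1}; updates are made in place.

    Returns the loss evaluated before the update.
    """
    ys = forward(weights, biases, y0)
    # Output sensitivity; for sigmoid + binary cross-entropy, dL/dx^M = y^M - d.
    # `lam` is a hypothetical stand-in for the constant lambda.
    sens = [[lam * (yi - di) for yi, di in zip(ys[-1], d)]]
    # Chain rule s^m = F'(x^m) (W^{m+1})^T s^{m+1}, with sigmoid'(x) = y (1 - y).
    for m in range(len(weights) - 1, 0, -1):
        W, y, s_next = weights[m], ys[m], sens[0]
        s = [y[j] * (1 - y[j]) * sum(W[i][j] * s_next[i] for i in range(len(W)))
             for j in range(len(y))]
        sens.insert(0, s)
    # W^{m+1}(k+1) = W^{m+1}(k) - beta s^{m+1} (y^m)^T,  b^{m+1}(k+1) = b^{m+1}(k) - beta s^{m+1}
    for m, (W, b) in enumerate(zip(weights, biases)):
        for i in range(len(W)):
            for j in range(len(W[i])):
                W[i][j] -= beta * sens[m][i] * ys[m][j]
            b[i] -= beta * sens[m][i]
    return cross_entropy(d, ys[-1])
```

All sensitivities are computed before any weight changes, so the transposed matrices in the chain rule use the weights of iteration k, as the update rule requires.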

As analyzed above, one iteration of the BP neural network involves not only computations with the synaptic weight matrix in the forward-propagation process but also computations with the transposed synaptic weight matrix in the back-propagation process.

Neuron circuit

4T1M circuit

This paper proposes a novel synaptic circuit, consisting of four MOS transistors and one memristor (4T1M), as illustrated in Fig. 1a. In the synaptic circuit, \(T_{1}\) and \(T_{3}\) are PMOS transistors while \(T_{2}\) and \(T_{4}\) are NMOS transistors. The threshold voltages of PMOS and NMOS transistors are − 0.5V and 0.5V, respectively. The two NMOS transistors have the same parameters and the parameters of the two PMOS transistors are also the same.

Moreover, the parameters of the NMOS and PMOS transistors are symmetrical. \(V_{s}\) and \(V_{p}\) are two signal ports. \(V_{{\text {con}}}\) is the shared control signal, turning the four MOS transistors on or off.

The synaptic circuit has two input styles: either \(V_{s}\) serves as the input signal port and \(V_{p}\) is set to 0V, or \(V_{p}\) serves as the input signal port and \(V_{s}\) is set to 0V. Owing to the shared control signal and the symmetrical parameters of the four MOS transistors, these two input styles are structurally symmetric. Therefore, whether the signal enters through \(V_{s}\) or \(V_{p}\), the synaptic circuit can be analyzed in the same way.

In this paper, the synaptic circuit is analyzed with \(V_{p}\) set to 0V. Moreover, leveraging this structural symmetry, a memristive crossbar array composed of the synaptic circuits can flexibly arrange the control signals and configure the input style to compute with both the synaptic weight matrix and its transpose. This paper adopts the AIST memristor model, which is obtained by fitting experimental data from the physical Ag/AgInSbTe/Ta memristor.

The AIST memristor model is given by [15,16,17]

where \(R_{{\text {on}}}\) and \(R_{{\text {off}}}\) are the minimum and maximum memristance, respectively, \(i_{{\text {on}}}\), \(i_{{\text {off}}}\), and \(i_{0}\) are constant fitting parameters, \(\mu _v\) represents the average ion mobility in the memristor, and D is the length of the memristor.

\(V_{T+}\) and \(V_{T-}\) denote the positive and negative threshold voltages of the memristor, respectively. When the voltage across the memristor is larger than \(V_{T+}\) or smaller than \(V_{T-}\), the memristance increases or decreases, respectively. If the voltage across the memristor remains between \(V_{T-}\) and \(V_{T+}\), the memristance remains unchanged. In this paper, \(V_{T-}\) and \(V_{T+}\) are set to − 0.5V and 0.5V, respectively.
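The threshold behaviour described above can be summarized as a small piecewise rule. The sketch below uses the ±0.5 V thresholds chosen in this paper; treating a voltage exactly equal to a threshold as "unchanged" is an assumption, since the text does not specify the boundary case.

```python
V_T_POS = 0.5   # positive threshold voltage V_T+ (V) used in this paper
V_T_NEG = -0.5  # negative threshold voltage V_T- (V)


def memristance_trend(v_rm):
    """Direction of memristance change for a voltage v_rm across the memristor."""
    if v_rm > V_T_POS:
        return "increase"
    if v_rm < V_T_NEG:
        return "decrease"
    # Between the thresholds (boundaries included, by assumption) the state is held.
    return "unchanged"
```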

When the input signal \(V_{s}\) is set to 1 V, the memristance can be made to increase or decrease through the voltage of the control signal \(V_{{\text {con}}}\). In this situation, if \(V_{{\text {con}}}\) is set to 2V, the gate–source voltage of \(T_{1}\) and \(T_{2}\) is 1V, while that of \(T_{3}\) and \(T_{4}\) is 2V. Given the threshold voltages of the NMOS and PMOS transistors, \(T_{2}\) and \(T_{4}\) turn on while \(T_{1}\) and \(T_{3}\) turn off. Hence, the current flows from \(T_{2}\) through the memristor and \(T_{4}\) to \(V_{p}\), as illustrated in Fig. 1b, where the off-state MOS transistors are marked in gray. The current flows from the ‘-’ port of the memristor to the ‘+’ port, so the voltage across the memristor is − 1V and the memristance decreases. On the other hand, if \(V_{{\text {con}}}\) is set to − 2V, the gate–source voltages of \(T_{1}\), \(T_{2}\), \(T_{3}\), and \(T_{4}\) are − 3V, − 3V, − 2V, and − 2V, respectively. Therefore, \(T_{1}\) and \(T_{3}\) turn on while \(T_{2}\) and \(T_{4}\) turn off. The corresponding current path is shown in Fig. 1c. The voltage across the memristor is 1V, and the memristance increases.

When the input signal \(V_{s}\) is set to − 1V, the memristance can likewise be increased or decreased through the control signal \(V_{{\text {con}}}\). In this case, if \(V_{{\text {con}}}\) is set to 2V, the gate–source voltages of \(T_{1}\), \(T_{2}\), \(T_{3}\), and \(T_{4}\) are 3V, 3V, 2V, and 2V, respectively. Then, \(T_{2}\) and \(T_{4}\) turn on while \(T_{1}\) and \(T_{3}\) turn off. The current flows from \(T_{4}\) through the memristor and \(T_{2}\) to \(V_{s}\), as shown in Fig. 2a, increasing the memristance. Conversely, if \(V_{{\text {con}}}\) is − 2V, the current follows the path shown in Fig. 2b, and the memristance decreases.
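The four operating cases above can be checked with an idealized switch-level model. In this sketch, which is an assumption rather than the paper's circuit model, each transistor is an ideal switch, \(T_{1}\)/\(T_{2}\) are referenced to \(V_{s}\) and \(T_{3}\)/\(T_{4}\) to \(V_{p}\) (which reproduces the gate–source voltages quoted in the text), and a conducting \(T_{2}\)/\(T_{4}\) pair reverses the memristor polarity relative to the \(T_{1}\)/\(T_{3}\) path, as in Figs. 1b and 1c. The function name `synapse_state` is hypothetical.

```python
V_TH_N, V_TH_P = 0.5, -0.5    # NMOS / PMOS threshold voltages (V)
V_T_POS, V_T_NEG = 0.5, -0.5  # memristor threshold voltages (V)


def synapse_state(v_s, v_con, v_p=0.0):
    """Idealized switch-level model of the 4T1M cell (an assumption, not a SPICE model).

    T1/T2 are referenced to V_s and T3/T4 to V_p, which reproduces the gate-source
    voltages quoted in the text. Conducting transistors are treated as ideal switches.
    Returns (on-states, voltage across the memristor, memristance trend).
    """
    vgs_12 = v_con - v_s
    vgs_34 = v_con - v_p
    on = {
        "T1": vgs_12 < V_TH_P,  # PMOS
        "T2": vgs_12 > V_TH_N,  # NMOS
        "T3": vgs_34 < V_TH_P,  # PMOS
        "T4": vgs_34 > V_TH_N,  # NMOS
    }
    if on["T2"] and on["T4"]:
        v_rm = -(v_s - v_p)  # polarity of the paths in Figs. 1b and 2a
    elif on["T1"] and on["T3"]:
        v_rm = v_s - v_p     # polarity of the paths in Figs. 1c and 2b
    else:
        v_rm = 0.0           # no complete conduction path
    if v_rm > V_T_POS:
        trend = "increase"
    elif v_rm < V_T_NEG:
        trend = "decrease"
    else:
        trend = "unchanged"
    return on, v_rm, trend
```

Under these assumptions, sweeping \(V_{s}\) over {1, − 1} V and \(V_{{\text {con}}}\) over {2, − 2} V reproduces the four cases of Figs. 1b, 1c, 2a, and 2b; in each case the memristor voltage equals \(-\mathrm{sign}(V_{{\text {con}}})\,V_{s}\).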

According to the AIST memristor model and Ohm’s law, the decrement of the memristance can be obtained

where \(R_{m}(t)\) is the memristance at time t and \(R_{m}(0)\) is the initial memristance. Likewise, the increment of the memristance can be represented as

As analyzed above, the memristance is modulated by setting the input signal \(V_{s}\) to \(\pm ~1\) V and the control signal \(V_{{\text {con}}}\) to \(\pm ~ 2\) V. Compared with the synaptic circuit in [31], the synaptic circuit in this paper uses fewer components. In [31], the synaptic circuit incorporates a NOT gate to turn the four MOS transistors on or off and needs two additional MOS transistors to select the input signals. In contrast, the synaptic circuit presented in this paper drives all four MOS transistors with a single shared control signal \(V_{{\text {con}}}\), eliminating the need for additional components.

Neuron circuit

The neuron circuit with one synaptic circuit is shown in Fig. 3. Owing to the virtual ground of the operational amplifier, the voltage at node p, \(V_{p}\), is 0 V. The output voltage \(V_{{\text {out}}}\) of the neuron circuit is given by

When the neuron circuit responds to the input signal, the memristance must remain unchanged so that the output is stable and reliable. Hence, the input signal must stay between − 0.5 V and 0.5 V, that is, within the threshold voltages of the memristor.

If the input signal \(V_{s}\) varies between − 0.5 V and 0.5 V and \(V_{{\text {con}}}\) is − 2 V, the gate–source voltage of \(T_{1}\) and \(T_{2}\) lies between − 2.5 V and − 1.5 V. Since \(T_{1}\) is a PMOS transistor and \(T_{2}\) is an NMOS transistor, \(T_{1}\) turns on while \(T_{2}\) turns off. At the same time, the gate–source voltage of \(T_{3}\) and \(T_{4}\) is − 2 V, so \(T_{3}\) turns on and \(T_{4}\) turns off. The input current then flows through \(T_{1}\), \(R_{m}\), and \(T_{3}\) to the inverting terminal of the operational amplifier, producing the output given in (13). If instead \(V_{{\text {con}}}\) is 2 V, the gate–source voltage of \(T_{1}\) and \(T_{2}\) lies between 1.5 V and 2.5 V, while that of \(T_{3}\) and \(T_{4}\) is 2 V. Thus, \(T_{2}\) and \(T_{4}\) turn on while \(T_{1}\) and \(T_{3}\) turn off, and the output again follows (13). Consequently, the neuron circuit responds properly to any input voltage between − 0.5 V and 0.5 V.

The simulation of the neuron circuit in Fig. 4 illustrates the circuit's stable and reliable response to a continuously changing input signal. The input signal, marked as \(V_{{\text {in}}}\) in Fig. 4, is a sine wave with an amplitude of 0.4V. The parameters of the AIST memristor model are listed in Table 1. The initial memristance of \(R_{m}\) is set to about 50 k\(\Omega \), and the resistance of \(R_{1}\) is set to 30 k\(\Omega \). In Fig. 4, \(R_{m}\) denotes the memristance variation, \(V_{{\text {rm}}}\) denotes the voltage across the memristor, and \(V_{op}\) represents the output of the neuron circuit. Because the input signal stays between − 0.5V and 0.5V, the memristance remains unchanged. As per (13), the input signal and the output of the neuron circuit change in opposite directions.
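Equation (13) is not reproduced here, but for an ideal inverting amplifier with the memristor branch as the input resistor and \(R_{1}\) as the feedback resistor, one would expect \(V_{{\text {out}}} = -(R_{1}/R_{m})V_{s}\). The sketch below assumes exactly that form (ideal operational amplifier, transistor on-resistance neglected) and uses the Fig. 4 values \(R_{1}\) = 30 k\(\Omega \) and \(R_{m}\) ≈ 50 k\(\Omega \); it rejects inputs outside the threshold window, where the memristance would drift.

```python
import math

R1 = 30e3        # feedback resistor R_1 (ohm), Fig. 4 value
R_M = 50e3       # memristance R_m (ohm), approximate initial value in Fig. 4
AMPLITUDE = 0.4  # amplitude of the sine input V_in (V)


def neuron_output(v_s, r_m=R_M, r1=R1):
    """Assumed form of (13) for an ideal inverting amplifier: V_out = -(R_1 / R_m) V_s."""
    if not -0.5 <= v_s <= 0.5:
        raise ValueError("input outside the threshold window; R_m would not stay fixed")
    return -(r1 / r_m) * v_s


def sine_response(n_samples=8):
    """Sample one period of the sine input on a phase grid and pair it with the output."""
    pairs = []
    for k in range(n_samples):
        v_in = AMPLITUDE * math.sin(2 * math.pi * k / n_samples)
        pairs.append((v_in, neuron_output(v_in)))
    return pairs
```

With these values the assumed gain is − 0.6, so the 0.4 V sine input maps to an inverted output of about 0.24 V amplitude; the real circuit will deviate by the transistor on-resistances that this sketch ignores.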

Fig. 4 shows that, regardless of the variations in the control signal \(V_{{\text {con}}}\), the output of the neuron circuit always has the opposite sign to the input signal. Because \(V_{{\text {con}}}\) switches periodically, the polarity of the voltage across the memristor flips at each transition, so \(V_{{\text {rm}}}\) appears as an irregular, piecewise-inverted sine wave. The simulation results are consistent with the preceding analysis.

Another simulation of the neuron circuit, shown in Fig. 5, demonstrates synaptic weight adjustment. In this simulation, the input signal \(V_{{\text {in}}}\) is made either greater than 0.5V or smaller than − 0.5V to modify the memristance and thereby adjust the synaptic weight. The input signal \(V_{{\text {in}}}\) is set to \(\pm \,0.6\)V, \(\pm \,0.8\)V, \(\pm \,1.0\)V, \(\pm \,1.2\)V, and \(\pm \,1.4\)V in turn, with each level lasting 20 ms.

The control signal \(V_{{\text {con}}}\) is a 10 Hz square wave with a high level of 2V and a low level of − 2V. The AIST memristor model again uses the parameters in Table 1, and the resistance of \(R_{1}\) is set to 30 k\(\Omega \).

In 0–40 ms, the control signal is − 2V, and the input signal is 0.6V in 0–20 ms and − 0.6V in 20–40 ms. The synaptic circuit can be analyzed as in the “4T1M circuit” Section, with current paths similar to those in Figs. 1c and 2b, respectively. Thus, the voltage across the memristor \(V_{{\text {rm}}}\) is 0.6V in 0–20 ms and − 0.6V in 20–40 ms, so the memristance first increases and then decreases.

During 40–60 ms, the input signal remains constant at 0.8V, while the control signal is − 2V in 40–50 ms and 2V in 50–60 ms. The current paths are then similar to those shown in Figs. 1c and 1b, respectively. The voltage across the memristor \(V_{{\text {rm}}}\) is 0.8V in 40–50 ms and − 0.8V in 50–60 ms, so the memristance increases in 40–50 ms and decreases in 50–60 ms. Thus, although the input signal is constant in 40–60 ms, the memristance can be altered by using the control signal to change the voltage across the memristor \(V_{{\text {rm}}}\).

The interval 60–80 ms can be analyzed in the same way. In 80–100 ms, the control signal is 2V and the input signal is 1V. The current path is that shown in Fig. 1b, so \(V_{{\text {rm}}}\) is − 1V and the memristance decreases.

The remaining simulation results can be analyzed in the same manner. The simulation results in Fig. 5 agree with the circuit analysis, demonstrating that the neuron circuit can adjust the synaptic weight flexibly. This paper adopts a fixed voltage of 1V to adjust the synaptic weights.
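The Fig. 5 schedule can be reproduced symbolically. The sketch below encodes the input levels and the 10 Hz control signal as described (the control signal is − 2V in the first half of each 100 ms period, consistent with the 0–50 ms and 50–100 ms descriptions) and computes \(V_{{\text {rm}}}\) with the rule \(V_{{\text {rm}}} = -\mathrm{sign}(V_{{\text {con}}})\,V_{{\text {in}}}\), which summarizes the four cases of the “4T1M circuit” Section under ideal switches. It models voltage polarities only, not the memristance trajectory; the function names are hypothetical.

```python
LEVELS = (0.6, 0.8, 1.0, 1.2, 1.4)  # input magnitudes (V), each used as +V then -V


def control_signal(t_ms):
    """10 Hz square wave: -2 V in the first half of each 100 ms period, +2 V in the second."""
    return -2.0 if (t_ms % 100) < 50 else 2.0


def input_signal(t_ms):
    """+level for 20 ms, then -level for 20 ms, stepping through LEVELS (0 <= t < 200 ms)."""
    level = LEVELS[int(t_ms // 40)]
    return level if (t_ms % 40) < 20 else -level


def memristor_voltage(t_ms):
    """V_rm = -sign(V_con) * V_in, the rule that summarizes the four 4T1M cases."""
    sign = 1.0 if control_signal(t_ms) > 0 else -1.0
    return -sign * input_signal(t_ms)


def trend(v_rm, v_t=0.5):
    """Memristance direction for the +/-0.5 V memristor thresholds."""
    return "increase" if v_rm > v_t else "decrease" if v_rm < -v_t else "unchanged"


# Midpoint of every 10 ms slot of the 200 ms simulation: (t, V_in, V_con, V_rm).
schedule = [(t, input_signal(t), control_signal(t), memristor_voltage(t))
            for t in range(5, 200, 10)]
```

The computed polarities match the text: 0.6V in 0–20 ms, − 0.6V in 20–40 ms, 0.8V in 40–50 ms, − 0.8V in 50–60 ms, and − 1V in 80–100 ms.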

As analyzed above, the proposed neuron circuit accepts input signals that vary continuously from negative to positive voltages, and it provides flexible control strategies for altering the memristance.

Memristive crossbar-based BP neural network

Circuit implementation

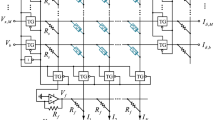

The circuit design of the memristive BP neural network lays a solid foundation for constructing memristive deep neural networks. Memristive crossbar-based circuits enable high-density integration and offer manufacturing advantages. The proposed synaptic circuit serves as the cell of the memristive crossbar array used to construct the memristive crossbar-based BP neural network shown in Fig. 6.

As shown in Fig. 6, this memristive crossbar-based BP neural network has two layers; each layer has two neuron circuits, and each neuron has two synaptic circuits. The circuit analysis of the first layer is presented below. In Fig. 6, \(V_{{\text {in}}1}\) and \(V_{{\text {in}}2}\) are the input signals and \(V_{{\text {tin}}1}\)–\(V_{{\text {tin}}4}\) are the transposed input signals of the memristive crossbar array; all of these input signals vary between − 0.5V and 0.5V. \(V_{{\text {adj}}}\) is a power supply shared by all the synaptic circuits. \(B_{1}\) and \(B_{2}\) are row lines and \(C_{1}\) and \(C_{2}\) are column lines of the crossbar array. \(V_{{\text {trf}}1}\)–\(V_{{\text {trf}}4}\) are the transposed output signals of the crossbar array. \(N_{1}\) and \(N_{2}\) represent the outputs of the multilayer neural network, and \(V_{{\text {con}}1}\)–\(V_{{\text {con}}8}\) denote the control signals of the synaptic circuits.

Each selector switch \(S_{1}\) is composed of one NMOS transistor and one PMOS transistor, and each switch \(S_{2}\) or \(S_{3}\) consists of one NMOS transistor. \(V_{{\text {sec}}1}\)–\(V_{{\text {sec}}4}\) are the control signals of \(S_{1}\)–\(S_{3}\): throughout the memristive crossbar-based BP neural network, all selector switches \(S_{1}\) share \(V_{{\text {sec}}1}\) and \(V_{{\text {sec}}4}\), all switches \(S_{2}\) share \(V_{{\text {sec}}2}\), and all switches \(S_{3}\) share \(V_{{\text {sec}}3}\).

In the forward-propagation process, the control signals \(V_{{\text {sec}}1}\), \(V_{{\text {sec}}2}\), and \(V_{{\text {sec}}4}\) are set to 2V while \(V_{{\text {sec}}3}\) is − 2V. The NMOS transistor in each selector switch \(S_{1}\) then turns on, connecting the input signals \(V_{{\text {in}}1}\) and \(V_{{\text {in}}2}\) to the memristive crossbar-based BP neural network. At the same time, the switch \(S_{2}\) turns on and the switch \(S_{3}\) turns off, connecting the operational amplifiers A1–A3 and A7–A9 to obtain the outputs of the memristive multilayer neural network. The transposed input signals \(V_{{\text {tin}}1}\)–\(V_{{\text {tin}}4}\) are held in a high-impedance state so that they do not affect the operation of the memristive crossbar array. In the first layer, owing to the superposed effects of \(V_{{\text {in}}1}\) and \(V_{{\text {in}}2}\), the output voltage of the operational amplifier A3 is given by

The output of the operational amplifier A3 is connected to the column line \(C_{1}\) through the resistor \(R_{3}\). Owing to the virtual ground of the operational amplifier A1, the voltage on the column line \(C_{1}\) is 0V. The output voltage of A3, \(V_{{\text {in}}1}\), and \(V_{{\text {in}}2}\) drive currents through the resistor \(R_{3}\) and the memristors \(M_{111}\) and \(M_{121}\), respectively, and these currents superpose on \(C_{1}\). The output voltage \(V_{{\text {out}}1}\) of the operational amplifier A1 can then be represented as

Similarly, the output voltage of the operational amplifier A2, \(V_{{\text {out}}2}\) can be represented as

where \(R_{m111}\), \(R_{m121}\), \(R_{m211}\), and \(R_{m221}\) represent the memristance of the memristors \(M_{111}\), \(M_{121}\), \(M_{211}\), and \(M_{221}\), respectively. The resistance of \(R_{N3}\) is equal to the resistances of \(R_{3}\) and \(R_{4}\). The output voltages of A1 and A2 can be obtained by combining (14), (15), and (16)

Then, (17) and (18) can be simplified as

Hence, the synaptic weight is defined as

where \(G_{mij1}\) and \(G_{r}\) denote memconductance and conductance, respectively. Then, the negative weight, zero weight and positive weight are given by

For the proposed synaptic circuit, the variation range of the synaptic weight can be changed by setting k and \(G_{r}\). By contrast, the synaptic weight in [12,13,14] is limited to (− 1,1) by the synaptic circuit structure, which hampers the applications of the corresponding memristive neural networks. In [29] and [30], the synaptic circuit cannot represent negative or zero weights using physical memristors.
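Equations (21) and (22) are not reproduced here. To illustrate how k and \(G_{r}\) could set the weight range, the sketch below assumes a differential mapping \(w = k(G_{m} - G_{r})\), which gives negative, zero, or positive weights as \(G_{m}\) falls below, equals, or exceeds \(G_{r}\). This form and all numerical values (\(R_{{\text {on}}}\), \(R_{{\text {off}}}\), k, \(G_{r}\)) are hypothetical and are not taken from Table 1.

```python
R_ON, R_OFF = 20e3, 100e3  # hypothetical memristance bounds (ohm), not the Table 1 values
R_REF = 50e3               # hypothetical reference resistance, G_r = 1 / R_REF
K = 50e3                   # hypothetical scaling factor k (ohm)


def weight(r_m, k=K, r_ref=R_REF):
    """Assumed mapping w = k (G_m - G_r) with G_m = 1 / R_m and G_r = 1 / R_ref."""
    return k * (1.0 / r_m - 1.0 / r_ref)


def weight_range(k=K, r_ref=R_REF, r_on=R_ON, r_off=R_OFF):
    """Weights reachable as R_m sweeps [R_on, R_off]: lowest at R_off, highest at R_on."""
    return weight(r_off, k, r_ref), weight(r_on, k, r_ref)
```

Under these assumptions the range is (− 0.5, 1.5); doubling k doubles it to (− 1, 3), and moving \(G_{r}\) shifts it, so the range is not pinned to (− 1,1).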

\(V_{{\text {out}}1}\) and \(V_{{\text {out}}2}\) are passed through the activation function f(.) to obtain the outputs of the first layer, which are the inputs of the second layer. The computation of the first layer can be expressed as a matrix multiplication

Then, the outputs of the BP neural network \(N_{1}\) and \(N_{2}\) are given by

where \(\varvec{W}^{1}\) and \(\varvec{W}^{2}\) are the synaptic weight matrices of the first and second layers. Consequently, the computations of the forward-propagation process are carried out on the memristive circuit.
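The forward computation can be mimicked in software by letting each column line sum the currents of its cells, as Kirchhoff's current law does in the crossbar. The sketch below assumes a hypothetical mapping \(W_{ij} = k(1/R_{ij} - G_{r})\) with illustrative values of k and \(G_{r}\), ignores the sign inversion of the output amplifiers, and omits any voltage scaling between layers; it only shows that two crossbars evaluate \(N = f(\varvec{W}^{2} f(\varvec{W}^{1}\varvec{V}_{\text{in}}))\).

```python
import math

K, G_R = 50e3, 1.0 / 50e3  # hypothetical k (ohm) and reference conductance G_r (S)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def crossbar_forward(R, v_in):
    """Column outputs of one crossbar layer.

    R[i][j] is the memristance of the cell joining row line j to column line i, so it
    realizes W[i][j] = k (1/R[i][j] - G_r) (assumed mapping). Each column sums its cell
    currents by Kirchhoff's current law; amplifier sign inversion is ignored.
    """
    return [K * sum((1.0 / R[i][j] - G_R) * v_in[j] for j in range(len(v_in)))
            for i in range(len(R))]


def two_layer_forward(R1, R2, v_in):
    """N = f(W^2 f(W^1 V_in)); inter-layer voltage scaling is omitted."""
    y1 = [sigmoid(v) for v in crossbar_forward(R1, v_in)]
    return [sigmoid(v) for v in crossbar_forward(R2, y1)]
```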

In the back-propagation process, the multiplications of the transposed matrix of synaptic weights can be accomplished by reusing the memristive crossbar array. These multiplications are carried out through the following steps.

First, the desired labels and the outputs \(N_{1}\) and \(N_{2}\) are used to obtain the sensitivities of the second layer. For the second layer, the control signals \(V_{{\text {sec}}1}\) and \(V_{{\text {sec}}2}\) are set to − 2V, and \(V_{{\text {sec}}3}\) and \(V_{{\text {sec}}4}\) are set to 2V. Thus, the selector switch \(S_{1}\) enters a high-impedance state and does not affect the row lines \(B_{3}\) and \(B_{4}\). The switch \(S_{2}\) turns off, disconnecting the operational amplifiers A7–A9, while the switch \(S_{3}\) turns on to connect the operational amplifiers A10–A12. The sensitivities of the second layer are mapped to \(V_{{\text {tin}}3}\) and \(V_{{\text {tin}}4}\), which are applied to the column lines \(C_{3}\) and \(C_{4}\), reusing the memristive crossbar array. In this condition, the output of the operational amplifier A10 is given by

\(V_{A10}\), \(V_{{\text {tin}}3}\), and \(V_{{\text {tin}}4}\) generate superposed current on the row lines \(B_{3}\) and \(B_{4}\). Hence, the outputs of the operational amplifiers A11 and A12, \(V_{{\text {trf}}3}\) and \(V_{{\text {trf}}4}\), can be represented as

where \(R_{m112}\), \(R_{m122}\), \(R_{m212}\), and \(R_{m222}\) represent the memristance of the memristors \(M_{112}\), \(M_{122}\), \(M_{212}\), and \(M_{222}\), respectively. The resistance of \(R_{N10}\) is set equal to the resistances of \(R_{7}\) and \(R_{8}\). Then, \(V_{{\text {trf}}3}\) and \(V_{{\text {trf}}4}\) can be expressed as a matrix multiplication

Thus, the multiplications by the transposed synaptic weight matrix are carried out on the memristive circuit, from which the synaptic weight variations are obtained. As analyzed above, the computations of both the forward-propagation and back-propagation processes are handled by the circuit hardware, which significantly increases computing speed. By contrast, the memristive BP neural networks in [12,13,14, 20, 22] implement only the forward-propagation process on circuit hardware.
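The key point of this back-propagation step is that the same stored memristances serve both directions: driving the row lines and reading the column lines yields \(\varvec{W}\varvec{v}\), while driving the column lines and reading the row lines yields \(\varvec{W}^{T}\varvec{s}\). The sketch below illustrates this with ideal amplifiers and a hypothetical conductance-to-weight mapping \(W_{ij} = k(1/R_{ij} - G_{r})\) with illustrative values; the function names are not from the paper.

```python
K, G_R = 50e3, 1.0 / 50e3  # hypothetical k (ohm) and reference conductance G_r (S)


def weights_from_crossbar(R):
    """Assumed mapping W[i][j] = k (1 / R[i][j] - G_r); R[i][j] sits at column i, row j."""
    return [[K * (1.0 / r - G_R) for r in row] for row in R]


def crossbar_forward(R, v):
    """Forward mode: drive the row lines with v, read the column lines -> W v."""
    W = weights_from_crossbar(R)
    return [sum(W[i][j] * v[j] for j in range(len(v))) for i in range(len(W))]


def crossbar_backward(R, s):
    """Back-propagation mode: drive the column lines with s, read the row lines
    -> W^T s, using the same memristances without rewriting them."""
    W = weights_from_crossbar(R)
    return [sum(W[i][j] * s[i] for i in range(len(s))) for j in range(len(W[0]))]
```

The identity \(\varvec{s}\cdot(\varvec{W}\varvec{v}) = (\varvec{W}^{T}\varvec{s})\cdot\varvec{v}\) gives a quick consistency check between the two modes.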

Synchronous memristance adjustment

One iteration of the memristive BP neural network contains a forward-propagation process, a back-propagation process, and a memristance adjustment stage. The proposed memristive crossbar-based BP neural network realizes synchronous memristance adjustment in the memristance adjustment stage through the following steps.

The synaptic weight variations correspond to memristance changes through (21). According to (10)–(12), the memristance changes are mapped to the durations of the control signals. Next, \(V_{{\text {sec}}2}\) is set to 2V to turn on the switch \(S_{2}\), and the switch \(S_{3}\) is turned off. \(V_{{\text {tin}}1}\)–\(V_{{\text {tin}}4}\) are held in a high-impedance state so that they do not affect the memristive crossbar arrays. In this situation, the voltage on the column lines \(C_{1}\)–\(C_{4}\) is 0V. Both \(V_{{\text {sec}}1}\) and \(V_{{\text {sec}}4}\) are set to − 2V, so the PMOS transistor in each selector switch \(S_{1}\) turns on and connects the power supply \(V_{{\text {adj}}}\) to the memristive BP neural network. The power supply \(V_{{\text {adj}}}\), whose voltage is 1V, is shared by all the synaptic circuits. The voltage across each memristor is then ± 1V, which exceeds the positive or negative threshold voltage of the AIST memristor and changes the memristance.
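The switch settings of the three phases can be collected in one table. The sketch below records the \(V_{{\text {sec}}}\) levels given in this section and derives which paths conduct. That the NMOS transistor of \(S_{1}\) is gated by \(V_{{\text {sec}}1}\) and its PMOS transistor by \(V_{{\text {sec}}4}\) is an inference from the three phase descriptions, and \(V_{{\text {sec}}3}\) = − 2V in the adjustment phase is assumed from the statement that \(S_{3}\) is off.

```python
# Shared gate levels (V) in each phase, as stated in the text. V_sec3 in the
# adjustment phase is not given explicitly; -2 V (S3 off) is assumed.
PHASES = {
    "forward":  {"V_sec1": 2.0,  "V_sec2": 2.0,  "V_sec3": -2.0, "V_sec4": 2.0},
    "backward": {"V_sec1": -2.0, "V_sec2": -2.0, "V_sec3": 2.0,  "V_sec4": 2.0},
    "adjust":   {"V_sec1": -2.0, "V_sec2": 2.0,  "V_sec3": -2.0, "V_sec4": -2.0},
}


def switch_states(v):
    """Which paths conduct. Assumes (by inference) that V_sec1 gates the NMOS and V_sec4
    the PMOS of S1; S2 and S3 are single NMOS switches. At +/-2 V gate levels the
    on/off decision is unambiguous, so only the sign of each level is checked."""
    return {
        "S1 NMOS: V_in -> row lines": v["V_sec1"] > 0,
        "S1 PMOS: V_adj -> row lines": v["V_sec4"] < 0,
        "S2: forward amplifiers": v["V_sec2"] > 0,
        "S3: transposed amplifiers": v["V_sec3"] > 0,
    }
```

For example, `switch_states(PHASES["backward"])` reports both transistors of \(S_{1}\) off, which is the high-impedance state described for the back-propagation process.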

The eight synaptic circuits in the memristive crossbar-based BP neural network can be controlled individually by the eight control signals \(V_{{\text {con}}1}\)–\(V_{{\text {con}}8}\). Referring to the analysis in “Neuron circuit” Section, each synaptic circuit increases or decreases its memristor's memristance when its control signal is set to 2V or −2V, respectively. Therefore, by setting the control signals, all the synaptic circuits can change their memristors' memristance simultaneously. Each control signal lasts for its corresponding duration, so the memristance of all the memristors is changed at once, realizing synchronous memristance adjustment.
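The benefit of synchronous adjustment shows up in how long the adjustment stage lasts under different schedules. In this sketch the per-cell pulse durations are made-up inputs, and arranging the eight synaptic circuits as 4 rows × 2 cells is an assumption for illustration only.

```python
# Length of the memristance adjustment stage under three schedules, given each
# cell's pulse duration. Durations are illustrative inputs; the schedules follow
# the strategies described in the text.

def synchronous_time(durations):
    """All control signals start together: the stage lasts as long as the longest pulse."""
    return max(durations, default=0)


def one_cell_at_a_time(durations):
    """Cells adjusted one by one: pulses run back to back."""
    return sum(durations)


def one_row_at_a_time(rows):
    """Rows adjusted one by one: each row lasts as long as its longest pulse."""
    return sum(max(row, default=0) for row in rows)


if __name__ == "__main__":
    # Eight synaptic circuits (V_con1..V_con8) arranged as 4 rows x 2 cells,
    # with made-up durations in microseconds.
    rows = [[1, 4], [2, 3], [0, 5], [1, 2]]
    flat = [d for row in rows for d in row]
    print(synchronous_time(flat), one_row_at_a_time(rows), one_cell_at_a_time(flat))
    # 5 14 18
```

With synchronous adjustment the stage length is set by the single longest pulse, rather than by a sum over cells or rows.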

The memristive BP neural networks in [19,20,21,22,23,24,25,26,27,28] can adjust the memristance of one row of memristors at a time, and those in [15,16,17,18] can adjust only one memristor's memristance at a time. In contrast, the proposed memristive crossbar-based BP neural network realizes synchronous memristance adjustment, which notably improves the training speed. The scale or number of layers of the proposed memristive BP neural network can be extended by enlarging the crossbar arrays or increasing their number.
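Counting adjustment operations per iteration makes this comparison concrete. The sketch below uses the two-layer example from the Discussion section (both layers built from 50 × 50 crossbar arrays); the scheme names are our own labels for the one-memristor, one-row, one-layer, and synchronous strategies described there.

```python
def adjustment_operations(layers, scheme):
    """Number of separate memristance-adjustment operations per iteration.

    layers: list of (rows, cols) crossbar sizes, one entry per network layer.
    scheme: 'one_cell', 'one_row', 'one_layer', or 'synchronous' (labels are ours).
    """
    if scheme == "one_cell":
        return sum(r * c for r, c in layers)
    if scheme == "one_row":
        return sum(r for r, _ in layers)
    if scheme == "one_layer":
        return len(layers)
    if scheme == "synchronous":
        return 1
    raise ValueError(f"unknown scheme: {scheme}")


if __name__ == "__main__":
    example = [(50, 50), (50, 50)]   # two-layer example used in the Discussion
    for s in ("one_cell", "one_row", "one_layer", "synchronous"):
        print(s, adjustment_operations(example, s))
    # one_cell 5000, one_row 100, one_layer 2, synchronous 1
```

The counts match the 5000, 100, and 2 adjustments stated for the compared designs, versus a single adjustment for the synchronous scheme.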

Memristive XOR network

The XOR (exclusive OR) logic operation is a classic linearly inseparable task. Based on the circuit structure in Fig. 6, a memristive crossbar-based BP neural network is constructed to perform the XOR logic operation. A two-layer neural network is adopted, with two neurons in the first layer and one neuron in the output layer.

The memristive XOR network is simulated in MATLAB/SIMULINK to evaluate its performance. In the simulation, the initial synaptic weights are randomly assigned values between 0 and 2, and the learning rate is set to 2. The high and low levels of the desired label are set to 1V and 0V, respectively. The high level of the input signal is mapped to 0.5V, and the low level to 0V. The activation function is the sigmoid function.
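To experiment with this setup in software, the following plain-Python sketch trains a 2-2-1 sigmoid network by online back-propagation with the stated voltage levels, initial weights in [0, 2], and learning rate 2. It is not the authors' SIMULINK model: the constant bias input, the squared-error loss, and treating one pass over the four patterns as one epoch are our assumptions, and the sketch is not claimed to reproduce Fig. 7.

```python
import math
import random

# XOR patterns in volts: inputs 0 V / 0.5 V, desired labels 0 V / 1 V (from the text).
XOR_SAMPLES = [((0.0, 0.0), 0.0), ((0.0, 0.5), 1.0),
               ((0.5, 0.0), 1.0), ((0.5, 0.5), 0.0)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(w1, w2, x):
    """2-2-1 forward pass; a constant bias input of 1 is an assumption."""
    xb = [x[0], x[1], 1.0]
    h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w1]
    hb = h + [1.0]
    y = sigmoid(sum(w * v for w, v in zip(w2, hb)))
    return xb, hb, y


def train_xor(epochs=32, lr=2.0, seed=0):
    """Plain online BP; one epoch = one pass over the four patterns (assumption)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(0.0, 2.0) for _ in range(3)] for _ in range(2)]  # weights in [0, 2]
    w2 = [rng.uniform(0.0, 2.0) for _ in range(3)]
    errors = []
    for _ in range(epochs):
        total = 0.0
        for x, d in XOR_SAMPLES:
            xb, hb, y = forward(w1, w2, x)
            total += 0.5 * (d - y) ** 2
            delta_out = (d - y) * y * (1.0 - y)
            # Hidden sensitivities: output sensitivity times the (pre-update) output
            # weights, i.e. the transposed-weight product, times the sigmoid slope.
            delta_h = [w2[j] * delta_out * hb[j] * (1.0 - hb[j]) for j in range(2)]
            for j in range(3):
                w2[j] += lr * delta_out * hb[j]
            for j in range(2):
                for i in range(3):
                    w1[j][i] += lr * delta_h[j] * xb[i]
        errors.append(total)
    return w1, w2, errors
```

How quickly this software model converges depends on the random seed and on the assumptions above, so its error curve should not be read as a prediction of the circuit's 32-iteration result.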

The training error during the simulation is shown in Fig. 7. When the input signal takes fixed levels (0.5V for high and 0V for low), the training error follows the blue line in Fig. 7. In contrast, when stochastic Gaussian noise within 0–0.2V is added to the input signal, the high level becomes a random voltage in 0.3–0.5V and the low level a random voltage in 0–0.2V; the corresponding training error is shown as the red line in Fig. 7.

An output of the XOR network above 0.5V is interpreted as the high level, and otherwise as the low level. After 32 iterations, the XOR network outputs the correct logic result whether the input signal has fixed or stochastic voltage levels. Because of the stochastic Gaussian noise, the training error decreases less along the red line than along the blue line.
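The noisy inputs and the output decision rule can be sketched as follows. The text bounds the noise to 0–0.2V but does not give its standard deviation, so `SIGMA` and the clipping of a half-normal magnitude are assumptions; the function names are hypothetical.

```python
import random

NOISE_BOUND = 0.2   # noise magnitude bound from the text, volts
SIGMA = 0.1         # hypothetical standard deviation of the Gaussian noise, volts


def noisy_input(bit, rng, sigma=SIGMA):
    """Logic level -> noisy input voltage.

    The noise magnitude is |N(0, sigma)| clipped to NOISE_BOUND (an assumption);
    it is subtracted from the 0.5 V high level and added to the 0 V low level,
    giving 0.3-0.5 V for high and 0-0.2 V for low, as in the text.
    """
    n = min(abs(rng.gauss(0.0, sigma)), NOISE_BOUND)
    return 0.5 - n if bit else n


def decode(v_out):
    """Output above 0.5 V reads as logic 1, otherwise logic 0."""
    return 1 if v_out > 0.5 else 0
```

Subtracting the noise from the high level and adding it to the low level keeps both levels inside the ranges quoted above while leaving a 0.1V gap between them.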

The memristive neural network in [17] uses three neurons in its hidden layer and needs 48 iterations to perform the XOR logic task correctly. In contrast, the XOR network presented in this paper achieves the same task with only two hidden-layer neurons within 32 iterations. Moreover, limited by the structure of their synaptic circuit, the synaptic weights in [12,13,14] can only vary in (−1, 1), whereas the proposed memristive XOR network supports a larger synaptic weight range.

Memristive iris classification network

Circuit design

Iris classification is a linearly inseparable problem. The iris data set consists of three types of iris, each with 50 samples. Based on the circuit structure of the proposed memristive crossbar-based BP neural network, the memristive iris classification network is constructed as a two-layer neural network, whose schematic diagram is shown in Fig. 8. There are four neurons in the hidden layer, and the output layer consists of three neurons representing the three iris types.

As shown in Fig. 8, \(IN_{1}\)–\(IN_{4}\) represent the data components of one iris sample, \(N_{1}\)–\(N_{4}\) are the four neuron circuits in the hidden layer, and \(OT_{1}\)–\(OT_{3}\) are the outputs of the iris classification network. \(V_{{\text {adj}}}\) is the 1V shared power supply used to adjust the memristors' memristance in the memristive crossbar arrays. The transposed input signals \(V_{s1}\)–\(V_{s3}\) are applied to the memristive crossbar array to compute the products with the transposed matrix of synaptic weights, \(V_{m1}\)–\(V_{m5}\).

One iteration of the memristive iris classification network is accomplished by the following steps:

1) The input signals \(IN_{1}\)–\(IN_{4}\) and \(b_{1}\) are applied to the memristive iris classification network, which immediately produces the outputs \(OT_{1}\)–\(OT_{3}\).

2) From the outputs \(OT_{1}\)–\(OT_{3}\) and the desired labels, the sensitivities of the output layer are obtained and mapped to the transposed input signals \(V_{s1}\)–\(V_{s3}\). \(V_{s1}\)–\(V_{s3}\) are applied to the memristive crossbar array to obtain the products with the transposed matrix of synaptic weights directly.

3) From \(V_{m1}\)–\(V_{m5}\), the synaptic weight variations are obtained and then mapped to the durations of the control signals.

4) The shared power supply \(V_{{\text {adj}}}\) is connected to the memristive iris classification network. Each synaptic circuit's control signal is then set to 2V or −2V to adjust its memristor's memristance individually, so the memristance of all the memristors is adjusted synchronously.
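The four steps can be sketched in plain Python as one iteration of an idealized network. Assumptions (ours): \(b_{1}\) is a constant input of 1, a constant input of 1 also feeds the output layer, the loss is squared error, and a positive weight variation maps to a +2V control level; the paper's actual weight-to-memristance mapping is its (21). Step 4 here only computes the control levels; pulse durations would follow from the memristance changes.

```python
import math


def matvec(W, x):
    """W x: one neuron layer read from the crossbar."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def matTvec(W, d):
    """W^T d: the transposed product used in back-propagation."""
    return [sum(W[j][i] * d[j] for j in range(len(W))) for i in range(len(W[0]))]


def iris_iteration(W1, W2, x, target, lr=0.5):
    """One training iteration following steps 1)-4).

    W1: 4 x 5 (four hidden neurons; four inputs plus a constant input of 1 for b_1).
    W2: 3 x 5 (three output neurons; four hidden outputs plus a constant input).
    Returns outputs, weight variations, and per-cell control levels.
    """
    # 1) Forward propagation: tanh hidden layer, sigmoid output layer.
    xb = list(x) + [1.0]
    h = [math.tanh(s) for s in matvec(W1, xb)]
    hb = h + [1.0]
    y = [1.0 / (1.0 + math.exp(-s)) for s in matvec(W2, hb)]
    # 2) Output sensitivities, then the transposed product (five values,
    #    the same count as V_m1-V_m5).
    delta_out = [(t - o) * o * (1.0 - o) for t, o in zip(target, y)]
    back = matTvec(W2, delta_out)
    delta_h = [back[i] * (1.0 - h[i] ** 2) for i in range(len(h))]
    # 3) Weight variations as outer products of sensitivities and layer inputs.
    dW1 = [[lr * d * v for v in xb] for d in delta_h]
    dW2 = [[lr * d * v for v in hb] for d in delta_out]
    # 4) Sign of each variation -> control level; all cells are pulsed at once.
    #    Assumption: a positive weight change needs +2 V (the true mapping is eq. (21)).
    levels = [[2.0 if dw > 0 else (-2.0 if dw < 0 else 0.0) for dw in row]
              for row in dW1 + dW2]
    return y, dW1, dW2, levels
```

Because every cell's level is computed before any pulse is applied, all cells can be driven in the same adjustment window, which is the point of step 4).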

Simulation results

The memristive iris classification network is simulated in MATLAB/SIMULINK to assess its performance. The data components of the iris samples are mapped to voltages between −0.5V and 0.5V. The activation function of the hidden layer is the hyperbolic tangent function tanh(·).

The activation function of the output layer is the sigmoid function. Accordingly, the desired labels are set as \(\varvec{d}_1=\begin{bmatrix} 1&0&0 \end{bmatrix} ^\textrm{T}\), \(\varvec{d}_2=\begin{bmatrix} 0&1&0 \end{bmatrix} ^\textrm{T}\), and \(\varvec{d}_3=\begin{bmatrix} 0&0&1 \end{bmatrix} ^\textrm{T}\), representing the three types of irises.
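A small sketch of the data preparation: per-feature min-max scaling onto [−0.5V, 0.5V] and the one-hot desired labels \(\varvec{d}_1\)–\(\varvec{d}_3\). The text gives the voltage range but not the scaling method, so min-max scaling is an assumption, as are the function names.

```python
def to_voltages(column, v_min=-0.5, v_max=0.5):
    """Linearly map one feature column onto [v_min, v_max] volts (min-max scaling, assumed)."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [(v_min + v_max) / 2.0] * len(column)
    scale = (v_max - v_min) / (hi - lo)
    return [v_min + (v - lo) * scale for v in column]


def one_hot(label, n_classes=3):
    """Desired-label vector d_k for class index k (0, 1, or 2)."""
    return [1.0 if k == label else 0.0 for k in range(n_classes)]
```

Calling `to_voltages(col, -0.45, 0.45)` gives the narrower range used in the noise experiments described below.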

In the simulation, the learning rate is 0.5, and the initial synaptic weights are random values between 0 and 0.4. The training set and the test set each contain 75 randomly selected samples. The output neuron with the maximum output gives the classification result. The iris classification network is trained for 900 iterations. The simulation is repeated 10 times, and the average classification accuracy over the 10 simulations is 96.4\(\%\).
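The decision rule and the reported averaging can be written as follows; the function names are illustrative.

```python
def classify(outputs):
    """Index of the output neuron with the largest output voltage."""
    return max(range(len(outputs)), key=lambda k: outputs[k])


def accuracy(all_outputs, labels):
    """Fraction of samples whose arg-max output matches the class index."""
    correct = sum(1 for out, lab in zip(all_outputs, labels) if classify(out) == lab)
    return correct / len(labels)


def mean(values):
    """Average accuracy over repeated simulations."""
    return sum(values) / len(values)
```

Because each test set has 75 samples, a single run's accuracy is a multiple of 1/75 (about 1.33 percentage points); an average of 96.4% over 10 runs corresponds to 723 correct classifications out of 750.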

Further, stochastic Gaussian noise of different levels is added to the training set to demonstrate the anti-interference performance of the memristive iris classification network. The iris data are mapped to voltages between −0.45V and 0.45V, and stochastic Gaussian noise varying within −0.05V to 0.05V is then added to these voltages. Consequently, the ratio of the random noise to the iris data voltage is about 11 \(\%\). When the iris data are mixed with 11 \(\%\), 17.6 \(\%\), and 25\(\%\) random Gaussian noise, the average test classification accuracy remains high at 95.87\(\%\), 94.93\(\%\), and 94.13\(\%\), respectively.
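The 11% figure is the ratio of the noise bound to the data bound, 0.05/0.45 ≈ 0.111. The 17.6% and 25% levels are consistent with the same construction using noise bounds of 0.075V and 0.1V (data mapped to ±0.425V and ±0.4V, so the noisy data still stay within ±0.5V); those two bounds are our inference, not values stated in the text.

```python
def noise_ratio(noise_bound, total_bound=0.5):
    """Noise bound relative to the data bound when the data occupy
    +/-(total_bound - noise_bound) volts, so data plus noise stay within +/-total_bound."""
    if not 0 < noise_bound < total_bound:
        raise ValueError("need 0 < noise_bound < total_bound")
    return noise_bound / (total_bound - noise_bound)


if __name__ == "__main__":
    for a in (0.05, 0.075, 0.1):
        print(f"noise bound {a} V -> {100 * noise_ratio(a):.1f} %")
    # 11.1 %, 17.6 %, 25.0 %
```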

As the memristive iris classification network is trained, the error between the desired labels and the outputs gradually decreases. The training error curves for the four simulations above are shown in Fig. 9.

The proposed memristive iris classification network offers distinct advantages over the existing memristive BP neural networks for iris classification. The specific comparisons are presented in Table 2.

The input signal of the memristive neural networks in [16, 17] is binary. However, the iris data are continuous values within a specific range, which cannot be represented by a binary signal. Thus, the memristive neural networks in [16, 17] cannot be applied to the iris classification task. Furthermore, they can adjust only one memristor's memristance at a time, whereas the proposed memristive crossbar-based BP neural network realizes synchronous memristance adjustment.

The synaptic circuit in [29, …

Memristive handwritten digit recognition network

The MNIST (Modified National Institute of Standards and Technology) handwritten digit task has been widely used for performance verification. The MNIST data set consists of handwritten digits from 0 to 9. Each handwritten digit is a 28 \(\times \) 28 gray-scale image, and the gray-scale value of each pixel ranges from 0 to 255. Using a circuit structure similar to that shown in Fig. 8, a memristive crossbar-based BP neural network is constructed for MNIST handwritten digit recognition. Each 28 \(\times \) 28 handwritten digit is flattened into a one-dimensional vector and input to the memristive handwritten digit recognition network, which is a two-layer neural network. The hidden layer contains 100 neurons, and the output layer has 10 neurons denoting the handwritten digits 0–9, respectively. The training set consists of 10,000 images randomly selected from the MNIST training data set, comprising 1000 randomly selected images of each digit ‘0’, ‘1’, ‘2’, ... ‘9’. The test set consists of 2000 images randomly chosen from the MNIST test data set. The activation function of the hidden layer is arranged as …, and the activation function of the output layer is the sigmoid function.

The memristive handwritten digit recognition network is simulated in MATLAB/SIMULINK to demonstrate its performance. The values of the one-dimensional input vector are mapped to voltages ranging from 0V to 0.5V. The initial synaptic weights are random values between −1 and 1, and the learning rate is 0.15. During training, the 10,000 images are input to the network in random order. One step is defined as inputting all 10,000 images in the training set to the recognition network once, so one step contains 10,000 iterations. The training lasts 200 steps (2,000,000 iterations). The variation of the mean square error over the 200 steps is shown in Fig. 10.

As the training proceeds, the mean square error gradually approaches 0. The test set is then applied to the trained memristive neural network to evaluate its recognition accuracy. The simulation is repeated 10 times, and the average recognition accuracy is 93.32\(\%\). Together, the simulation results of these three experiments demonstrate the effectiveness of the proposed memristive crossbar-based BP neural network, including its anti-interference performance and its ability to handle diverse tasks.

Discussion

This paper proposes a 4T1M synaptic circuit to construct the memristive crossbar-based BP neural network. Compared with the existing memristive BP neural networks, the proposed memristive neural network has several advantages. The comparisons of the different circuit implementations of the BP neural network are presented in Table 3.

In prior works [32,33,34,35], the synaptic circuits are composed exclusively of MOS transistors and contain relatively many of them: 52, 83, 92, and 166 MOS transistors, respectively. Notably, weight representation in these circuits is volatile. The neural network in [32] must keep training to avoid weight decay, and the neural network in [34] also suffers from weight decay. In [33, 35], the synaptic circuits need additional circuits to store the weights and prevent weight decay. In contrast, memristors are non-volatile, so memristive BP neural networks do not suffer from weight decay. Moreover, memristive synaptic circuits use relatively few MOS transistors.

Memristive crossbar-based circuits have manufacturing advantages over memristive circuits without a crossbar array structure, and they enable high-density integration. The synaptic circuit in [12,13,14] does not have a crossbar array structure. The memristor-CMOS hybrid synaptic circuit in [31] contains four MOS transistors and one memristor, and a NOT logic gate is used to control its operation. This synaptic circuit also lacks a crossbar array structure. If the NOT gate is simply composed of four MOS transistors, the whole synaptic circuit contains eight MOS transistors and one memristor.

To compare the characteristics of the different memristive BP neural networks, a two-layer memristive neural network in which both the first and second layers are 50\(\times \)50 memristive crossbar arrays is taken as an example. In [16, 17], the synaptic circuit is the cell of a 1M (one-memristor) memristive crossbar array. However, in the memristance adjustment stage of one iteration, only one memristor's memristance can be changed at a time. Hence, the example 1M memristive neural network requires 5000 memristance adjustments. Moreover, memristive crossbar arrays built purely from memristors are susceptible to sneak paths. Hence, memristor-CMOS hybrid circuits serve as the cells of the memristive crossbar array to mitigate the sneak-path effect [24,25,26,27,28,29,30]. In [24,25,26,27,28], one MOS transistor and one memristor form the cell of the memristive crossbar array, and two cells in the two corresponding columns constitute a synaptic circuit. These memristive BP neural networks can change one row of memristors at a time in the memristance adjustment stage. Therefore, the example 1T1M memristive neural network requires 100 memristance adjustments. The memristive BP neural network in [29, 30] can adjust the memristance of one layer of memristors at a time, so the example 2T1M memristive neural network needs 2 adjustments [29, 30]. However, as outlined in “Memristive iris classification network” Section, the memristive BP neural network in [29, 30] has some drawbacks.

The synaptic circuit in [18] contains twelve MOS transistors and three memristors, and it can also change only one memristor's memristance at a time. Thus, the example memristive neural network requires 5000 memristance adjustments. Furthermore, the circuit designs in [18] can only perform scalar computations, whereas the mathematical model of the BP neural network consists of matrix and vector operations. Therefore, the circuit designs in [18] are not well suited to the BP neural network.

As shown in Table 3, the proposed 4T1M synaptic circuit has a memristor-CMOS hybrid structure. Thus, the proposed memristive crossbar-based BP neural network, although it has a crossbar structure, does not suffer from sneak paths. It properly accomplishes the matrix computations in the forward-propagation and back-propagation processes to accelerate computation. The proposed synaptic circuit uses relatively few components. The proposed memristive crossbar-based BP neural network realizes synchronous memristance adjustment and thus achieves the fastest memristance adjustment speed among the compared designs, which notably improves the training speed.

Conclusions

This paper proposes a novel 4T1M synaptic circuit. The corresponding circuit analysis and simulation demonstrate that the 4T1M synaptic circuit can be flexibly controlled to change its memristance or respond to the input signal. Subsequently, the memristive 4T1M crossbar array is designed to construct the memristive crossbar-based BP neural network, and the circuit analysis for implementing the BP neural network computations on its circuit hardware is presented. Further, based on the circuit structure of the proposed memristive crossbar-based BP neural network, memristive neural networks are constructed to conduct three experiments, and the experimental circuit designs and circuit simulations are presented. The experimental results show that the proposed memristive crossbar-based BP neural network outperforms the compared existing studies. Finally, comprehensive comparisons between the existing memristive BP neural networks and the proposed one are discussed. In future studies, we will explore implementing all BP neural network computations with analog circuits. In addition, we will investigate the application of memristive circuit hardware to implement more neural networks and their corresponding algorithms.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Theis N, Wong P (2017) The end of Moore's law: a new beginning for information technology. Comput Sci Eng 19(2):41–50

Liu X, Zeng Z (2022) Memristor crossbar architectures for implementing deep neural networks. Complex Intell Syst 8(1):787–802

Yang L, Zeng Z, Huang Y, Wen S (2018) Memristor-based circuit implementations of recognition network and recall network with forgetting stages. IEEE Trans Cogn Dev Sys 10(4):1133–1142

Huang Y, Kiani F, Ye F, Xia Q (2023) From memristive devices to neuromorphic systems. Appl Phys Lett 122(11):110501

Xiao Z, Xu X, Xing H, Luo S, Dai P, Zhan D (2021) RTFN: A robust temporal feature network for time series classification. Inform Sci 571:65–86

Xing H, Xiao Z, Zhan D, Luo S, Dai P, Li K (2022) SelfMatch: Robust semisupervised time-series classification with self-distillation. Int J Intell Syst 37(11):8583–8610

Bacanin N, Zivkovic M, Hajdarevic Z, Janicijevic S, Dasho A, Marjanovic M (2022) Performance of sine cosine algorithm for ANN tuning and training for IoT security. Int Conf Hybrid Intell Syst 604:302–312

Bacanin N, Stoean R, Zivkovic M, Petrovic A, Rashid TA, Bezdan T (2021) Performance of a novel chaotic firefly algorithm with enhanced exploration for tackling global optimization problems: application for dropout regularization. Mathematics 9:1–33

Malakar S, Ghosh M, Bhowmik S, Sarkar R, Nasipuri M (2020) A GA based hierarchical feature selection approach for handwritten word recognition. Neural Comput Appl 32:2533–2552

Bacanin N, Budimirovic N, Venkatachalam K, Strumberger I, Alrasheedi AF, Abouhawwash M (2022) Novel chaotic oppositional fruit fly optimization algorithm for feature selection applied on COVID 19 patients health prediction. PLoS ONE 1:1–25

Xiao Z, Zhang H, Tong H, Xu X (2022) An efficient temporal network with dual self-distillation for electroencephalography signal classification. IEEE Int Conf Bioinform Biomed (BIBM) 2022:1759–1762

Adhikari SP, Yang C, Kim H, Chua LO (2012) Memristor bridge synapse-based neural network and its learning. IEEE Trans Neural Netw Learn Syst 23(9):1426–1435

Adhikari SP, Kim H, Budhathoki RK, Yang C, Chua LO (2015) A circuit-based learning architecture for multilayer neural networks with memristor bridge synapses. IEEE Trans Circ Syst I Reg Papers 62(1):215–223

Yang C, Adhikari S, Kim H (2019) On learning with nonlinear memristor-based neural network and its replication. IEEE Trans Circ Syst I Reg Papers 66(10):3906–3916

Zhang Y, Wang X, Li Y, Friedman EG (2017) Memristive model for synaptic circuits. IEEE Trans Circ Syst II Exp Briefs 64(7):767–771

Zhang Y, Li Y, Wang X, Friedman EG (2017) Synaptic characteristics of Ag/AgInSbTe/Ta-based memristor for pattern recognition applications. IEEE Trans Elect Dev 64(4):1806–1811

Zhang Y, Wang X, Friedman EG (2017) Memristor-based circuit design for multilayer neural networks. IEEE Trans Circ Syst I Reg Papers 65(2):677–686

Krestinskaya O, Salama KN, James AP (2019) Learning in memristive neural network architectures using analog back-propagation circuits. IEEE Trans Circ Syst I Reg Papers 66(2):719–732

Prezioso M, Merrikh-Bayat F, Hoskins B, Adam G, Likharev KK, Strukov DB (2015) Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521(7550):61–64

Hu M, Li H, Chen Y, Wu Q, Rose GS, Linderman RW (2014) Memristor crossbar-based neuromorphic computing system: A case study. IEEE Trans Neural Netw Learn Syst 25(10):1864–1878

Kataeva I, Merrikh-Bayat F, Zamanidoost E, Strukov D (2015) Efficient training algorithms for neural networks based on memristive crossbar circuits. In: International Joint Conference on Neural Networks, pp 1–8

Chabi D, Wang Z, Bennett C, Klein JO, Zhao W (2015) Ultrahigh density memristor neural crossbar for on-chip supervised learning. IEEE Trans Nanotechnol 14(6):954–962

Hasan R, Taha TM (2014) Enabling back propagation training of memristor crossbar neuromorphic processors. In: International Joint Conference on Neural Networks, pp 21–28

Choi S, Shin JH, Lee J, Sheridan P, Lu WD (2017) Experimental demonstration of feature extraction and dimensionality reduction using memristor networks. Nano Lett 17(5):3113–3118

Li C, Hu M, Li Y, Jiang H, Ge N, Montgomery E et al (2018) Analogue signal and image processing with large memristor crossbars. Nat Electron 1(1):52–60

Cai F, Correll M, Lee H, Lim Y, Bothra V, Zhang Z et al (2019) A fully integrated reprogrammable memristor-CMOS system for efficient multiply-accumulate operations. Nat Electron 2(7):290–299

Wang Z, Li C, Song W, Rao M, Belkin D, Li Y et al (2019) Reinforcement learning with analogue memristor arrays. Nat Electron 2(1):115–124

Yao P, Wu H, Gao B, Tang J, Zhang Q, Zhang W et al (2020) Fully hardware-implemented memristor convolutional neural network. Nature 577(7792):641–646

Soudry D, Di Castro D, Gal A, Kolodny A, Kvatinsky S (2015) Memristor-based multilayer neural networks with online gradient descent training. IEEE Trans Neural Netw Learn Syst 26(10):2408–2421

Wen S, Xiao S, Yan Z, Zeng Z, Huang T (2019) Adjusting learning rate of memristor-based multilayer neural networks via fuzzy method. IEEE Trans Comput Aided Des Integr Circ Syst 38(6):1084–1094

Yang L, Zeng Z, Huang Y (2020) An associative-memory-based reconfigurable memristive neuromorphic system with synchronous weight training. IEEE Trans Cogn Dev Sys 12(3):529–540

Valle M, Caviglia DD, Bisio GM (1996) An experimental analog VLSI neural network with on-chip back-propagation learning. Analog Integr Circ Sign Process 9(3):231–245

Lu C, Shi B, Chen L (2002) An on-chip BP learning neural network with ideal neuron characteristics and learning rate adaptation. Analog Integr Circ Sign Process 31(1):55–62

Morie T, Amemiya Y (1994) An all-analog expandable neural network LSI with on-chip backpropagation learning. IEEE J Solid-St Circ 29(9):1086–1093

Shima T, Kimura T, Kamatani Y, Itakura T, Fujita Y, Iida T (1992) Neuro chips with on-chip back-propagation and/or Hebbian learning. IEEE J Solid-St Circ 27(12):1868–1876

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 62106181, 62176189, 62176163, and 61936004, the Technology Innovation Project of Hubei Province of China under Grant 2019AE171, the 111 Project on Computational Intelligence and Intelligent Control, China under grant B18024, the Project of Hubei Provincial Education Department under Grant B2022058, Innovative Research Group Project of the National Natural Science Foundation of China under Grant 61821003.


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, L., Ding, Z., Xu, Y. et al. Memristive crossbar-based circuit design of back-propagation neural network with synchronous memristance adjustment. Complex Intell. Syst. (2024). https://doi.org/10.1007/s40747-024-01407-1


DOI: https://doi.org/10.1007/s40747-024-01407-1