Abstract

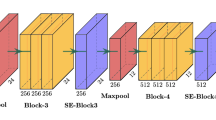

Facial expression recognition is an important area of study in psychology, with applications in fields such as intelligent healthcare, human-computer interaction, and fuzzy control. However, current deep learning models usually suffer from high complexity, expensive computational requirements, and large parameter counts, which hinder their deployment on resource-constrained mobile terminals. To address these challenges, this paper proposes an improved lightweight MobileViT with channel expansion and an attention mechanism for facial expression recognition. The model adopts a channel expansion strategy to extract more of the critical facial expression features from multi-scale feature maps, and introduces a channel attention module to improve feature extraction performance. Compared with typical lightweight models, the proposed model significantly improves accuracy while remaining lightweight. It achieves 94.35% and 87.41% accuracy on the KDEF and RAF-DB datasets, respectively, demonstrating superior recognition performance.
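To make the two ideas named in the abstract concrete, the following is a minimal sketch in plain Python. It is not the authors' implementation. The abstract does not specify the layers used, so the sketch assumes that channel expansion is a pointwise (1x1) convolution that raises the channel count and that channel attention is a squeeze-and-excitation-style gate. The function names `expand_channels` and `channel_attention`, the weight shapes, and the omission of biases are all illustrative assumptions.

```python
import math


def expand_channels(feature_map, weights):
    """Pointwise (1x1) convolution: map C input channels to len(weights) channels.

    feature_map: list of C channels, each an H x W list of lists.
    weights: C_out x C matrix (hypothetical; C_out > C gives an expansion).
    """
    height, width = len(feature_map[0]), len(feature_map[0][0])
    return [
        [
            [
                sum(w * feature_map[c][i][j] for c, w in enumerate(row))
                for j in range(width)
            ]
            for i in range(height)
        ]
        for row in weights
    ]


def channel_attention(feature_map, w1, w2):
    """Squeeze-and-excitation-style gating (assumed form of channel attention).

    w1: hidden x C weights, w2: C x hidden weights (hypothetical, no biases).
    Returns the reweighted feature map and the per-channel gates.
    """
    # Squeeze: global average pooling reduces each channel to one scalar.
    squeezed = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excitation: bottleneck layer with ReLU, then sigmoid gates in (0, 1).
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates


# Toy usage: 2 channels of size 2x2, expanded to 4 channels, then gated.
toy = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]]]
expand_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
expanded = expand_channels(toy, expand_w)
w1 = [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]]
w2 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0], [0.0, 0.0]]
reweighted, gates = channel_attention(expanded, w1, w2)
print(len(reweighted), gates)
```

In this sketch the expansion gives the attention step more channels to choose among, and the gates then downweight channels that contribute less. A real model would learn the weights and would typically use a deep learning framework rather than nested lists.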

Data availability

The KDEF and RAF-DB datasets used in this paper are both publicly available. The KDEF dataset can be requested at http://www.emotionlab.se/kdef/, and the RAF-DB dataset can be requested at http://www.whdeng.cn/raf/model1.html.

Acknowledgements

The authors would like to acknowledge the support and assistance from Anhui Jianzhu University, Hefei, China. We are also grateful to the reviewers and editors whose suggestions and comments helped refine and improve this paper.

Funding

This work was supported by grants from the Anhui Province Key Laboratory of Intelligent Building and Building Energy Saving (Grant No. IBES2022ZR02) and the Anhui Provincial Housing and Urban-Rural Construction Science and Technology Program (Grant Nos. 2023-YF113, 2023-YF004).

Author information

Contributions

The authors confirm their contributions to the paper as follows: study conception and design: Wancheng Yu, Kunxia Wang; data collection: Wancheng Yu, Takashi Yamauchi; analysis and interpretation of results: Wancheng Yu, Takashi Yamauchi; draft manuscript preparation: Wancheng Yu, Kunxia Wang. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, K., Yu, W. & Yamauchi, T. MVT-CEAM: a lightweight MobileViT with channel expansion and attention mechanism for facial expression recognition. SIViP (2024). https://doi.org/10.1007/s11760-024-03356-1

DOI: https://doi.org/10.1007/s11760-024-03356-1