Abstract

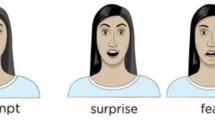

Real-time monitoring of students’ classroom engagement is important in modern education, and facial expression recognition has been widely studied as a way to achieve it. However, conventional models often have large parameter counts and high computational costs, which limits their practicality in real-time, real-world settings. To address this limitation, this paper proposes “Light_Fer,” a lightweight model designed to attain high accuracy with few parameters. Light_Fer’s novelty lies in integrating depthwise separable convolution, group convolution, and an inverted bottleneck structure. These techniques optimize the model’s architecture, yielding superior accuracy with fewer parameters. Experimental results show that Light_Fer, with just 0.23M parameters, achieves accuracies of 87.81% and 88.20% on the FERPLUS and RAF-DB datasets, respectively. Furthermore, by establishing a correlation between facial expressions and students’ engagement levels, we extend Light_Fer to real-time detection and monitoring of student engagement during classroom activities. With its lightweight design and high accuracy, Light_Fer offers a promising solution for real-time student engagement monitoring through facial expression recognition.
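To illustrate why the three building blocks named in the abstract reduce parameter counts, the following minimal sketch counts convolution weights for each. The channel sizes, kernel size, group count, and expansion factor are hypothetical values chosen for illustration; they are not Light_Fer's actual configuration, and bias and normalization parameters are ignored.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution (groups=1 is a standard convolution)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    return k * k * (c_in // groups) * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = conv_params(c_in, c_in, k, groups=c_in)  # one filter per channel
    pointwise = conv_params(c_in, c_out, 1)              # mixes channels
    return depthwise + pointwise


def inverted_bottleneck_params(c_in, c_out, k, expansion):
    """1 x 1 expansion, depthwise k x k, then 1 x 1 projection."""
    hidden = c_in * expansion
    expand = conv_params(c_in, hidden, 1)
    depthwise = conv_params(hidden, hidden, k, groups=hidden)
    project = conv_params(hidden, c_out, 1)
    return expand + depthwise + project


# Hypothetical layer shape, for illustration only.
C_IN, C_OUT, K = 64, 128, 3

standard = conv_params(C_IN, C_OUT, K)
grouped = conv_params(C_IN, C_OUT, K, groups=4)
separable = depthwise_separable_params(C_IN, C_OUT, K)
bottleneck = inverted_bottleneck_params(C_IN, C_OUT, K, expansion=4)

print(f"standard 3x3 conv:        {standard}")
print(f"group conv (4 groups):    {grouped}")
print(f"depthwise separable conv: {separable}")
print(f"inverted bottleneck (x4): {bottleneck}")
```

For this hypothetical layer, the grouped convolution needs a quarter of the standard convolution's weights and the depthwise separable version needs roughly an eighth. The inverted bottleneck processes four times as many intermediate channels while still using fewer weights than the single standard convolution.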

Availability of data and materials

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant No. 62071161.

Author information

Contributions

Zhao proposed the model, carried out the experimental verification, and wrote the initial draft. Li provided significant technical advice and support in conceptualization and implementation, and contributed to writing the paper. Yang and Ma provided research direction and project management.

Ethics declarations

Conflict of interest

The authors have no competing interests that might be perceived to influence the results and/or discussion reported in this paper.

Ethical approval

Not applicable.

About this article

Cite this article

Zhao, Z., Li, Y., Yang, J. et al. A lightweight facial expression recognition model for automated engagement detection. SIViP 18, 3553–3563 (2024). https://doi.org/10.1007/s11760-024-03020-8

DOI: https://doi.org/10.1007/s11760-024-03020-8