Abstract

Purpose

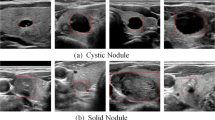

Accurate and automatic segmentation of lesions from ultrasound (US) images plays a significant role in clinical applications. Nevertheless, it is extremely challenging because distinct components of heterogeneous lesions resemble the background in US images. In this study, we develop a transfer learning-based method for fully automatic joint segmentation of nodular lesions.

Methods

Transfer learning is a widely used approach for building high-performing computer vision models. Our transfer learning model is a novel densely connected convolutional network (SDenseNet). Specifically, we pre-train SDenseNet on the ImageNet dataset. We then extend SDenseNet into a multi-channel model (denoted Mul-DenseNet) for automatic joint segmentation of lesions. For comparison, SDenseNet with different transfer learning strategies is applied separately to nodule segmentation. We find that using more pre-training datasets, or pre-training multiple times, does not always improve nodule segmentation, and that the performance of transfer learning depends on a judicious choice of dataset and on the characteristics of the targets.
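The dense connectivity that gives densely connected convolutional networks their name can be illustrated with a short sketch: every layer in a block receives the concatenation of the block input and all earlier layer outputs, so the channel count grows by a fixed "growth rate" per layer. This is a minimal NumPy illustration of a generic dense block, not the authors' SDenseNet. The function name `dense_block`, the use of randomly initialised 1x1 convolutions in place of trained 3x3 convolutions, and the omission of batch normalization are all assumptions made for illustration.

```python
import numpy as np


def dense_block(x, num_layers, growth_rate, rng):
    """Hypothetical generic dense block on a (C, H, W) feature map.

    Each layer sees the concatenation of the input and all previous layer
    outputs, and contributes `growth_rate` new channels. Layers here are
    random 1x1 convolutions followed by ReLU (an illustrative simplification).
    """
    features = [x]
    for _ in range(num_layers):
        inp = np.concatenate(features, axis=0)  # dense connectivity: (C_in, H, W)
        c_in = inp.shape[0]
        # Illustrative random 1x1 convolution weights, scaled by fan-in.
        w = rng.standard_normal((growth_rate, c_in)) / np.sqrt(c_in)
        out = np.einsum("oc,chw->ohw", w, inp)  # 1x1 convolution = channel mixing
        out = np.maximum(out, 0.0)              # ReLU nonlinearity
        features.append(out)
    # Block output: original channels plus num_layers * growth_rate new ones.
    return np.concatenate(features, axis=0)


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 16, 16))
y = dense_block(x, num_layers=4, growth_rate=8, rng=rng)
print(y.shape)  # (35, 16, 16): 3 input channels + 4 layers * 8 channels
```

Because the input channels are passed through unchanged, early features remain directly available to later layers, which is one reason densely connected networks reuse features efficiently when fine-tuned from pre-trained weights.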

Results

Experimental results show that Mul-DenseNet outperforms the other methods compared in the study. Specifically, for thyroid nodule segmentation, the overlap metric (OM), dice ratio (DR), true-positive rate (TPR), false-positive rate (FPR) and modified Hausdorff distance (MHD) are \(0.9257\pm 0.0027\), \(0.9596\pm 0.0009\), \(0.9869\pm 0.0008\), \(0.0183\pm 0.0066\) and \(0.3897\pm 0.3488\) mm, respectively; for breast nodule segmentation, OM, DR, TPR, FPR and MHD are \(0.8912\pm 0.0072\), \(0.9513\pm 0.0038\), \(0.9835\pm 0.0015\), \(0.2381\pm 0.1301\) and \(0.2017\pm 0.0302\) mm, respectively.
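The five reported metrics can be computed from a predicted mask and a manual (ground-truth) binary mask roughly as follows. This is a sketch under stated assumptions, since the abstract does not give the formulas: OM is taken as intersection over union, DR as the Dice coefficient, TPR and FPR as the true-positive and false-positive areas normalised by the ground-truth area, and MHD as the modified Hausdorff distance (the larger of the two mean nearest-boundary-point distances) with an isotropic pixel spacing `spacing_mm`. The paper's exact definitions, particularly for FPR, may differ, and `segmentation_metrics` is a hypothetical helper.

```python
import numpy as np


def _boundary_points(mask):
    """(row, col) coordinates of foreground pixels with a 4-neighbour in background."""
    m = mask.astype(bool)
    p = np.pad(m, 1, constant_values=False)
    # A pixel is interior if it and its up, down, left and right neighbours are set.
    interior = m & p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return np.argwhere(m & ~interior)


def _mean_min_dist(a, b):
    """Mean over points in a of the Euclidean distance to the nearest point in b."""
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))  # brute force
    return d.min(axis=1).mean()


def segmentation_metrics(pred, gt, spacing_mm=1.0):
    """Hypothetical OM/DR/TPR/FPR/MHD for two non-empty 2-D binary masks."""
    p, g = pred.astype(bool), gt.astype(bool)
    if not p.any() or not g.any():
        raise ValueError("both masks must contain foreground pixels")
    tp = np.logical_and(p, g).sum()
    fp = np.logical_and(p, ~g).sum()
    union = np.logical_or(p, g).sum()
    bp, bg = _boundary_points(p), _boundary_points(g)
    mhd = max(_mean_min_dist(bp, bg), _mean_min_dist(bg, bp)) * spacing_mm
    return {
        "OM": float(tp / union),                  # intersection over union
        "DR": float(2 * tp / (p.sum() + g.sum())),  # Dice coefficient
        "TPR": float(tp / g.sum()),
        "FPR": float(fp / g.sum()),               # assumption: normalised by |GT|
        "MHD": float(mhd),                        # in mm if spacing_mm is in mm
    }


gt = np.zeros((10, 10), dtype=bool)
gt[2:6, 2:6] = True                 # 4 x 4 manual square
pred = np.roll(gt, 1, axis=1)       # prediction shifted one pixel right
print(segmentation_metrics(pred, gt))
```

For this one-pixel shift of a 4 x 4 square, the sketch gives OM = 0.6, DR = 0.75, TPR = 0.75, FPR = 0.25 and MHD = 0.5 (in units of `spacing_mm`). The brute-force distance matrix is adequate for small masks; large images would call for a distance transform or a spatial index instead.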

Conclusions

The experimental results show that our transfer learning models are effective for lesion segmentation and demonstrate the potential of the proposed Mul-DenseNet model in clinical applications. The model can reduce the heavy workload of physicians and thereby help avoid misdiagnoses caused by excessive fatigue. Moreover, it enables easy and reproducible lesion detection without medical expertise.

Acknowledgements

This study was funded by the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2019A1515110206), the Fundamental Research Funds of Shandong University (Grant Nos. 11500079614128, 2019GN084), the National Natural Science Foundation of China (Grant Nos. 62001268, 11771160, 11801511) and the Preferred Foundation of Zhejiang Province Postdoctoral Research Projects (Grant No. 524000-X81801).

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Ethical approval

For retrospective studies, formal consent is not required. This article does not contain any studies with human participants or animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ma, J., Bao, L., Lou, Q. et al. Transfer learning for automatic joint segmentation of thyroid and breast lesions from ultrasound images. Int J CARS 17, 363–372 (2022). https://doi.org/10.1007/s11548-021-02505-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11548-021-02505-y