Abstract

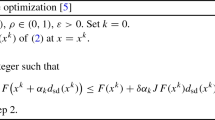

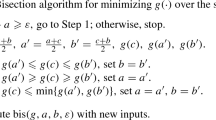

This paper proposes a line search technique for a special class of multi-objective optimization problems in which the objective functions are convex but not necessarily differentiable. The method is iterative and is designed to find Pareto critical points. At each iteration, a suitable sub-problem built from the sub-differential of every objective function at the current point is solved to obtain the search direction. The proposed method is verified on numerical examples. Unlike scalarization methods, it does not require the user to select suitable parameters in advance.
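The abstract does not spell out the direction sub-problem, so the following is only a minimal sketch under our own assumptions, not the authors' method. Each convex objective is assumed to be piecewise affine, f(x) = max_i (a_i · x + b_i), so its sub-differential at x is the convex hull of the gradients of the active pieces. The direction is taken as d = -v, where v is the minimum-norm element of the convex hull of all objectives' sub-differentials (a standard steepest-descent-type choice), computed with a Frank-Wolfe iteration; a point is treated as Pareto critical when v is (nearly) zero. An Armijo-type backtracking step then requires sufficient decrease in every objective simultaneously. All names (`line_search_descent`, `min_norm_point`, `l1_shift`) and parameters (`beta`, `crit_tol`, `min_step`) are hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumed representation (hypothetical): a convex objective f(x) = max_i (a_i . x + b_i)
# is stored as a list of (a_i, b_i) pieces.
Piece = Tuple[List[float], float]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def value(pieces: List[Piece], x: Sequence[float]) -> float:
    """Evaluate the piecewise-affine convex function at x."""
    return max(dot(a, x) + b for a, b in pieces)


def active_subgradients(pieces: List[Piece], x: Sequence[float], tol: float = 1e-9) -> List[List[float]]:
    """Gradients of the pieces attaining the max (up to tol); their hull is the sub-differential."""
    vals = [dot(a, x) + b for a, b in pieces]
    top = max(vals)
    return [a for (a, _), val in zip(pieces, vals) if val >= top - tol]


def min_norm_point(gens: List[List[float]], iters: int = 1000, tol: float = 1e-12) -> List[float]:
    """Frank-Wolfe (Gilbert) iteration for the minimum-norm element of conv(gens)."""
    v = list(gens[0])
    for _ in range(iters):
        g = min(gens, key=lambda h: dot(h, v))  # vertex minimising <h, v>
        gap = dot(v, v) - dot(g, v)             # duality gap; zero at the optimum
        if gap <= tol:
            break
        diff = [gi - vi for gi, vi in zip(g, v)]
        step = min(1.0, gap / dot(diff, diff))  # exact line search on ||v + s * diff||^2
        v = [vi + step * di for vi, di in zip(v, diff)]
    return v


def line_search_descent(objectives: List[List[Piece]], x0: Sequence[float],
                        beta: float = 1e-4, max_iter: int = 100,
                        crit_tol: float = 1e-8, min_step: float = 1e-10) -> List[float]:
    x = list(x0)
    for _ in range(max_iter):
        # Pool the sub-differentials of all objectives at the current point.
        gens = [g for f in objectives for g in active_subgradients(f, x)]
        d = [-vi for vi in min_norm_point(gens)]
        dd = dot(d, d)
        if dd <= crit_tol:  # 0 (nearly) lies in the hull: treat x as Pareto critical
            break
        fx = [value(f, x) for f in objectives]
        t = 1.0
        while t >= min_step:  # Armijo-type backtracking on every objective at once
            y = [xi + t * di for xi, di in zip(x, d)]
            if all(value(f, y) <= fj - beta * t * dd for f, fj in zip(objectives, fx)):
                break
            t *= 0.5
        else:
            break  # no acceptable step found: stop at the current point
        x = y
    return x


def l1_shift(c: float) -> List[Piece]:
    """|x1 - c| + |x2| written as the max of four affine pieces."""
    return [([s1, s2], -s1 * c) for s1 in (1.0, -1.0) for s2 in (1.0, -1.0)]


f1, f2 = l1_shift(1.0), l1_shift(-1.0)  # Pareto set: x2 = 0, -1 <= x1 <= 1
x_star = line_search_descent([f1, f2], [3.0, 0.5])
print(x_star)  # [1.0, 0.0]
```

The minimum-norm choice is what makes d a common descent direction: every generator g of the hull satisfies g · v ≥ ||v||², so each objective's directional derivative along d is at most -||d||². On the toy problem above the iterates stop at (1, 0), a Pareto optimal point; with an approximate active set, this sketch makes no convergence guarantee on general problems.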

Data Availability

No datasets were generated or analysed during the current study.

Acknowledgements

The authors wish to extend their heartfelt appreciation to the anonymous referees for their insightful comments and valuable feedback, which improved the quality and clarity of this paper.

Author information

Contributions

Both authors contributed equally to this work.

Ethics declarations

Conflict of interest

The authors declare no potential conflicts of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumar, D., Panda, G. A line search technique for a class of multi-objective optimization problems using subgradient. Positivity 28, 34 (2024). https://doi.org/10.1007/s11117-024-01051-6

DOI: https://doi.org/10.1007/s11117-024-01051-6

Keywords

- Line search technique

- Convex optimization

- Pareto optimal point

- Multi-objective optimization

- Sub-gradient method