Abstract

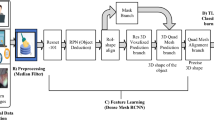

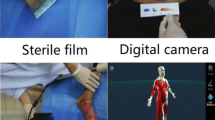

In medical image processing, automatic segmentation of burn images is a critical step in classifying skin into normal and burned areas. Traditional models identify burns in an image and divide it into burn and non-burn regions. However, earlier models cannot accurately classify the wound region, and they also take considerable time to predict burns. Burn depth analysis is also important: the percentage of the body affected at each burn depth, i.e. the degree of severity, is assessed in terms of total body surface area (TBSA). To address these issues, we design a hybrid approach named the DenseMask region-based convolutional neural network (DenseMask RCNN), which segments the skin burn region according to the degree of burn severity. DenseMask RCNN integrates the mask region-based convolutional neural network (Mask R-CNN) with dense pose estimation, so that it estimates the full-body human pose and performs semantic segmentation. First, a residual network (ResNet) with dilated convolution and a weighted map model generates a dense feature map. The feature map is then fed into a region proposal network (RPN) that uses a feature pyramid network (FPN) to detect objects at different scales, locations, and pyramid levels in the input image. For accurate pixel-to-pixel label alignment, we introduce a region of interest (RoI)-pose align module that aligns objects to a standard scale according to the human pose, with respect to scale, right–left, translation, and left–right flip. After alignment, a cascaded fully convolutional architecture on top of the RoI module performs mask segmentation and dense pose regression simultaneously. Finally, a transfer learning model classifies the detected burn regions into three wound-depth classes.
Experimental analysis on the burn dataset shows that the proposed approach achieves better accuracy than state-of-the-art approaches.
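The backbone described above is a ResNet with dilated convolution. A dilated kernel reads every d-th input instead of adjacent inputs, which enlarges the receptive field without adding weights or downsampling the feature map. The following is a minimal 1-D sketch in plain Python, not the authors' implementation; the function names `dilated_conv1d` and `receptive_field` are hypothetical, and the weighted-map component is not modeled.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid (unpadded) 1-D dilated convolution, computed as cross-correlation as in CNNs."""
    if dilation < 1:
        raise ValueError("dilation must be >= 1")
    k = len(kernel)
    span = (k - 1) * dilation + 1  # input positions covered by one output value
    out = []
    for start in range(len(signal) - span + 1):
        acc = 0.0
        for j, w in enumerate(kernel):
            # Kernel tap j reads the input `dilation` positions after tap j - 1.
            acc += w * signal[start + j * dilation]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Receptive field of a stack of stride-1 convolutions with the given dilations."""
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


if __name__ == "__main__":
    x = [0, 1, 2, 3, 4, 5, 6, 7]
    print(dilated_conv1d(x, [1, 1, 1], dilation=2))  # [6.0, 9.0, 12.0, 15.0]
    print(receptive_field(3, [1, 2, 4]))             # 15
```

Stacking three 3-tap layers with dilations 1, 2 and 4 covers 15 inputs, compared with 7 for three undilated layers, while the parameter count stays the same.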
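The abstract states that the RPN uses an FPN to handle objects at different scales and pyramid levels. In the standard FPN design used by Mask R-CNN, each RoI of width w and height h is pooled from level P_k, where k = floor(k0 + log2(sqrt(w·h) / 224)) with k0 = 4, clamped to the available levels (commonly P2 to P5). The abstract does not say how DenseMask RCNN assigns levels, so the sketch below assumes this standard rule; `fpn_level` is a hypothetical name.

```python
import math


def fpn_level(width, height, k0=4, canonical_size=224, k_min=2, k_max=5):
    """Map an RoI of size width x height (input-image pixels) to a pyramid level P_k.

    Assumes the standard FPN heuristic: a canonical 224 x 224 RoI maps to P_k0,
    and every halving of the RoI side length moves one level down the pyramid.
    """
    if width <= 0 or height <= 0:
        raise ValueError("RoI width and height must be positive")
    k = math.floor(k0 + math.log2(math.sqrt(width * height) / canonical_size))
    # Clamp to the levels the pyramid actually provides.
    return max(k_min, min(k_max, k))


if __name__ == "__main__":
    for side in (32, 56, 112, 224, 448, 1000):
        print(side, fpn_level(side, side))
```

Small burn patches are therefore pooled from the high-resolution P2 map and large regions from coarser levels, which is what lets a single detector cover burns of very different sizes.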
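The RoI-pose align module builds on the RoIAlign operation of Mask R-CNN, which pools a box from the feature map by bilinear sampling at real-valued coordinates instead of rounding them to the grid. The sketch below shows only plain RoIAlign in pure Python. The pose-dependent alignment (scale, translation and left–right flip) described in the abstract is not reproduced, the half-pixel coordinate offset used by some implementations is omitted, samples are clamped at the border as a simplification, and the names `bilinear` and `roi_align` are hypothetical.

```python
import math


def bilinear(feature, y, x):
    """Bilinearly interpolate a 2-D feature map (list of rows) at real-valued (y, x)."""
    h, w = len(feature), len(feature[0])
    # Clamp into the valid range so samples near the border stay defined.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(feature, roi, out_size=2, samples=2):
    """Pool roi = (x1, y1, x2, y2), in feature-map coordinates, to an out_size x out_size grid."""
    x1, y1, x2, y2 = roi
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            acc = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    # Regularly spaced sample points inside the bin; coordinates are never rounded.
                    y = y1 + i * bin_h + (sy + 0.5) * bin_h / samples
                    x = x1 + j * bin_w + (sx + 0.5) * bin_w / samples
                    acc += bilinear(feature, y, x)
            row.append(acc / (samples * samples))  # average the samples in this bin
        out.append(row)
    return out


if __name__ == "__main__":
    fmap = [[10 * r + c for c in range(4)] for r in range(4)]
    print(roi_align(fmap, (0.0, 0.0, 2.0, 2.0)))  # [[5.5, 6.5], [15.5, 16.5]]
```

Because no coordinate is quantized, the pooled features stay aligned with the input pixels, which is the property pixel-level mask and dense pose heads rely on.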
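Severity grading, as described above, expresses each burn depth as a percentage of body surface. A simple 2-D proxy is the share of visible body pixels assigned to each depth class. This sketch assumes a per-pixel depth label map (0 = not burned, 1 to 3 = the three depth classes) and a body mask; both encodings and the name `burn_percentages` are hypothetical. A pixel fraction from a single view only approximates %TBSA, since it ignores body curvature, perspective, and surfaces hidden from the camera.

```python
def burn_percentages(depth_labels, body_mask, num_classes=3):
    """Percentage of visible body pixels assigned to each burn-depth class.

    depth_labels: 2-D list of ints, 0 = not burned, 1..num_classes = depth class (assumed encoding).
    body_mask:    2-D list of bools, True where the pixel belongs to the body.
    Returns (per-class percentages, total burned percentage).
    """
    body_pixels = 0
    counts = [0] * (num_classes + 1)
    for label_row, body_row in zip(depth_labels, body_mask):
        for label, on_body in zip(label_row, body_row):
            if not on_body:
                continue  # background pixels do not count toward body area
            if not 0 <= label <= num_classes:
                raise ValueError(f"unexpected label {label}")
            body_pixels += 1
            counts[label] += 1
    if body_pixels == 0:
        raise ValueError("body mask is empty")
    per_class = {c: 100.0 * counts[c] / body_pixels for c in range(1, num_classes + 1)}
    return per_class, sum(per_class.values())


if __name__ == "__main__":
    labels = [[0, 1, 1, 2],
              [3, 3, 0, 0]]
    body = [[True, True, True, True],
            [False, False, False, False]]
    print(burn_percentages(labels, body))  # ({1: 50.0, 2: 25.0, 3: 0.0}, 75.0)
```

In the usage example, the pixels labeled 3 fall outside the body mask and are ignored, so class 3 contributes 0%.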

Funding

This study received no funding.

Author information

Contributions

All the authors have participated in writing the manuscript and have revised the final version. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants and/or animals performed by any of the authors.

Informed consent

Informed consent was not applicable to this study.

About this article

Cite this article

Pabitha, C., Vanathi, B. Densemask RCNN: A Hybrid Model for Skin Burn Image Classification and Severity Grading. Neural Process Lett 53, 319–337 (2021). https://doi.org/10.1007/s11063-020-10387-5
